An explainable intrusion detection system using novel Indian millipede optimization and WGAN-GP with a dynamic attention-based ensemble model

- Published

- Accepted

- Received

- Academic Editor

- Markus Endler

- Subject Areas

- Artificial Intelligence, Computer Networks and Communications, Optimization Theory and Computation, Security and Privacy, Neural Networks

- Keywords

- Intrusion detection system, Cyber security, Indian millipede optimization algorithm, Ensemble learning, Dynamic-attention, Enhanced WGAN-GP

- Copyright

- © 2025 Chinnasamy and Subramanian

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2025. An explainable intrusion detection system using novel Indian millipede optimization and WGAN-GP with a dynamic attention-based ensemble model. PeerJ Computer Science 11:e3278 https://doi.org/10.7717/peerj-cs.3278

Abstract

In the rapidly changing field of cybersecurity, strong and efficient Intrusion Detection Systems (IDS) are essential for spotting malicious activities on the network traffic. However, traditional IDS models often face challenges such as too many irrelevant features (high-dimensional data), uneven class distributions (imbalanced datasets), and constantly evolving threats (shifting attack patterns). To overcome these issues, we introduce a hybrid framework called WGAN-GP_IMOA_DA_Ensemble. It combines: (i) a new bio-inspired Indian Millipede Optimization Algorithm (IMOA), based on the movement and foraging behavior of Indian millipedes, for selecting the most relevant features; (ii) an enhanced Wasserstein Generative Adversarial Network with Gradient Penalty (WGAN-GP) that uses attention layers, layer normalization, and skip connections in the discriminator, producing more realistic synthetic samples for rare attack types; and (iii) a dynamic attention-based ensemble, DA_Ensemble, which integrates three deep learning models namely Feedforward Neural Network (FNN), Convolutional Neural Network (CNN), and Long Short-Term Memory (LSTM), and adaptively weights their predictions in real time, emphasizing the most accurate model for a specific type of traffic. The model was tested on benchmark datasets such as UNSW-NB15, H23Q, and CIC-IDS2017 under multiclass and binary settings. In binary classification, the model achieved 100% “accuracy, precision, recall, and F1-score” on the UNSW-NB15 dataset, surpassing the best benchmark method, Optimized Hybrid Deep Neural Network + Enhanced Conditional Random Field (OHDNN+ECRF), by nearly 2%. On CIC-IDS2017 and H23Q, it attained about 99% across all four metrics, improving previous baselines by 2% to 3%. In multiclass classification, it reached 99% in all four metrics on UNSW-NB15 and CIC-IDS2017, and about 98% on H23Q, demonstrating a steady 2% to 4% improvement over current leading methods. These results, confirmed through five-fold cross-validation and ablation studies, show that the proposed approach reliably delivers statistically significant improvements in both binary and multiclass intrusion detection tasks.

Introduction

In the ever-changing landscape of cybersecurity, the robustness and flexibility of Intrusion Detection Systems (IDS) are essential for safeguarding information systems against malicious activities and sophisticated cyber threats (Kumar, 2025; Park et al., 2022). The two main techniques used in traditional intrusion detection are anomaly-based and signature-based (Park et al., 2022). Signature-based methods excel at identifying known threats because they recognize specific patterns (Chinnasamy & Subramanian, 2023). However, they cannot detect new, unknown attacks such as zero-day exploits. Additionally, anomaly-based techniques can detect unusual behavior, making them more flexible against new threats (Shankar et al., 2024; Momand, Jan & Ramzan, 2024). Nevertheless, they often generate many false alarms and have difficulty defining normal activity in a dynamic network environment (Bella et al., 2024). Furthermore, the success of these methods largely relies on the quality and completeness of the dataset used for detection, as well as the amount of training data available, which is often limited or imbalanced in real-world situations (Lee, Li & Li, 2023; Ahmed et al., 2024).

Deep Learning (DL) and Machine Learning (ML) methods are commonly employed to enhance IDS (Chinnasamy, Malliga & Sengupta, 2022). However, several ongoing challenges remain: feature redundancy, dataset imbalance, and the static nature of many ensemble approaches (Subramani & Selvi, 2023; Rajasoundaran et al., 2024).

Research gap

Despite considerable advancements, existing studies have critical limitations.

Feature Redundancy: Many systems do not remove irrelevant or overlapping features, leading to increased computational costs and overfitting. For example, Momand, Jan & Ramzan (2024) introduced Attention-Based CNN-IDS, which showed strong performance on Internet of Things (IoT) traffic with about 96% accuracy but had poor recall of less than 80% for minority classes due to a lack of feature selection.

Imbalanced Datasets: Rare attack types are consistently under-detected. Park et al. (2022) employed Generative Adversarial Networks (GANs) for data augmentation on CIC-IDS2017; however, detection of minority classes, such as infiltration attacks, remained insufficient, highlighting the limitations inherent in traditional GAN frameworks.

Static Ensemble Fusion: Current IDS frameworks often combine multiple models with fixed weighting schemes, which poorly adapt to changing network traffic. Shankar et al. (2024) proposed an approach merging optimization techniques with deep learning, but the static nature of ensemble weighting limited adaptability and caused decreased performance across diverse datasets.

Collectively, these drawbacks emphasize the importance of IDS architectures that incorporate efficient feature selection, robust data augmentation strategies, and adaptive model fusion mechanisms to enhance accuracy, recall, and particularly the detection of minority classes.

It is crucial to recognize that the examples given are illustrative; a comprehensive discussion of related works and additional supporting evidence can be found in the literature survey section.

Research hypothesis

This study hypothesizes that combining a biologically inspired feature selection method called Indian Millipede Optimization Algorithm (IMOA), an enhanced Wasserstein Generative Adversarial Network with Gradient Penalty (WGAN-GP), and a dynamic attention-based (DA)_ensemble classification model will notably enhance intrusion detection performance in terms of precision, accuracy, F1-score, and recall, especially in managing imbalanced datasets and identifying minority attack classes, compared to existing IDS approaches.

Novelty and advantages over prior work

Previous studies have independently examined GANs, ensemble deep learning, or optimization-driven feature selection, but significant limitations remain (Park et al., 2022; Shankar et al., 2024; Momand, Jan & Ramzan, 2024; Lee, Li & Li, 2023). In contrast, our contributions are threefold.

-

1.

Novel IMOA:

A first-of-its-kind bio-inspired optimizer that models the behavioral patterns of Anoplodesmus saussurii (Indian millipedes), including seasonal abundance (Usha, Vasanthi & Esaivani, 2022), obstacle avoidance (Dave & Sindhav, 2025), temperature response (Aswathy & Sudhikumar, 2022), resource utilization (Ramanathan et al., 2023), group movement (Anilkumar, Wesener & Moritz, 2022), defensive behavior (Dave & Sindhav, 2025), and mating behavior (Usha, Vasanthi & Esaivani, 2022). These behaviors are mathematically modeled to improve the exploration-exploitation balance. Unlike conventional optimization algorithms such as Genetic Algorithm (GA) (Fang et al., 2024), Particle Swarm Optimizer (PSO) (Jain et al., 2022), and Grey Wolf Optimizer (GWO) (Mirjalili, Mirjalili & Lewis, 2014), which primarily rely on predefined equations for search dynamics, IMOA introduces adaptive strategies that respond to the current state of search. These biologically inspired adaptations enhance feature selection efficiency in IDS applications, leading to improved classification performance.

-

2.

Feature-level discriminator enhancements in WGAN-GP:

While GAN-based data augmentation (Park et al., 2022; Lee, Li & Li, 2023) has been explored, our approach is the first to implement attention layers, layer normalization, and skip connections within the WGAN-GP discriminator to improve the realism of synthetic minority-class data. Unlike traditional oversampling techniques such as Synthetic Minority Oversampling Technique (SMOTE) (Meliboev, Alikhanov & Kim, 2022) or oversampling, this research gives better discrimination between real and generated samples and enhances minority class recall without overfitting.

-

3.

DA_Ensemble learning:

This research incorporates a DA_Ensemble mechanism, integrating Convolutional Neural Network (CNN), Long Short-Term Memory (LSTM), and Feedforward Neural Network (FNN) models. Unlike fixed-weight ensemble methods (Kumar, 2025; Bella et al., 2024; Rajasoundaran et al., 2024), this approach dynamically adjusts model weights in real time, ensuring more accurate predictions across diverse attack categories.

Practical benefits

Beyond theoretical novelty, the proposed framework offers several practical benefits for real-world IDS deployments, including:

-

(1)

Lightweight deployment for edge devices: The features selected through IMOA reduces computational overhead. As a result, the model becomes lightweight. So, it is appropriate for resource-limited settings such as IoT and edge devices.

-

(2)

Improved detection of rare and emerging attacks: WGAN-GP-based data augmentation ensures balanced training, which helps detect minority class attacks more effectively and improves security in critical infrastructure.

-

(3)

Context-aware decision making: The dynamic attention mechanism customizes the decision-making process to individual traffic instances, increasing accuracy in complex, real-world traffic where static models may fail.

-

(4)

Scalability across datasets: The framework is tested on diverse datasets, including CIC-IDS2017, UNSW-NB15, and H23Q. It shows robustness and flexibility across different network environments and traffic patterns.

-

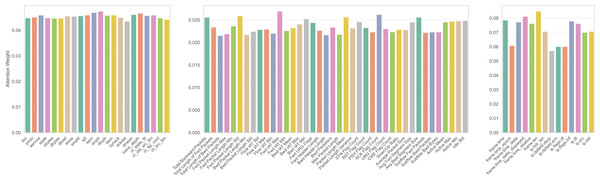

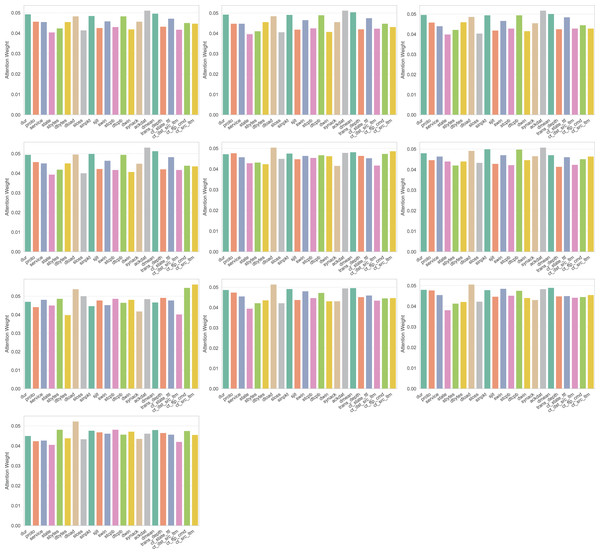

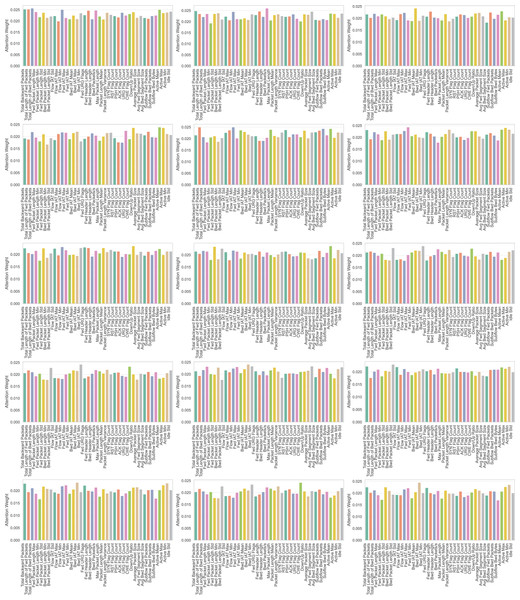

(5)

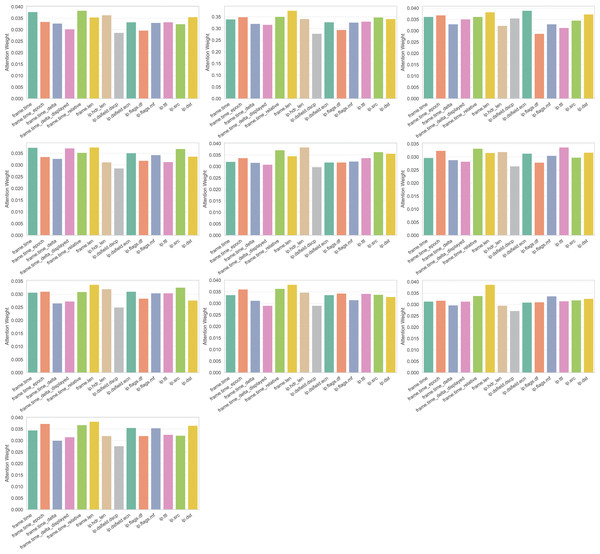

Better interpretability for analysts: The attention weights can be visualized to indicate which features or base learners impacted a prediction, aiding human analysts in trust and decision justification.

-

(6)

Ablation study for model validation: Shows how each part, including IMOA, WGAN-GP, and attention-based ensemble, contributes to overall system performance, allowing users to adjust or simplify the model for specific deployment needs without significant performance loss.

-

(7)

Computational efficiency analysis: Evaluates the model’s ability to deploy in real-world scenarios, especially on resource-limited edge devices, by providing insights into time and memory usage and supporting scalability and hardware compatibility decisions.

-

(8)

Comprehensive comparison: Confirms the effectiveness of the WGAN-GP_IMOA_DA_Ensemble model across various scenarios.

The remainder of this study is arranged in the following manner: The literature review covers similar studies in the field of IDS. The materials and methods provide the details of IMOA and WGAN-GP, as well as the proposed DA_Ensemble, including the architectures of IMOA, WGAN-GP, and the ensemble models leveraged in this research. The Results section describes the experimental setup, evaluation metrics, and experiment outcomes. The Discussion section provides details of the classification report and a comprehensive comparison with benchmark datasets. Finally, the conclusion covers the summary and possible further research potential.

Literature survey

The details, such as feature selection methods, classification techniques, datasets used, advantages, and limitations of some related works, are listed in Table 1. This section offers a summary of recent research on strategies for handling high-dimensional data, addressing class imbalance in datasets, and DL based methodologies for developing effective IDS.

| Authors | Feature selection methods | Classification methods | Datasets used | Advantages | Limitations |

|---|---|---|---|---|---|

| UNSW-NB15 binary (Kareem et al., 2022) | GTO-BSA | K-NN | UNSW-NB15, CICIDS2017, NSL-KDD, BoT-IoT | Better convergence, higher accuracy. | Limited scope, high cost. |

| Turukmane & Devendiran (2024) | Modified Singular Value Decomposition (M-SvD) |

M-MultiSVM | CSE-CIC-IDS 2018, UNSWNB-15 | High accuracy, reduced imbalance. | Dataset-bound, complex model. |

| Meliboev, Alikhanov & Kim (2022) | SMOTE for balancing classes | CNN+LSTM | UNSW-NB15, KDDCup’99, NSL-KDD | Balancing the datasets significantly improved model accuracy and F-scores across all benchmarks. | Training recurrent models requires higher computation and longer epochs compared to CNN. |

| Ragab & Sabir (2022) | Poor and Rich Optimization Algorithm (PROA) for hyperparameter tuning | Hybrid CNN-ALSTM with attention mechanism | KDDCup’99, NSL-KDD, UNSW-NB15, CICIDS2017 | High accuracy, robust detection. | Complex design, dataset-dependent. |

| Altunay & Albayrak (2023) | CNN+LSTM | UNSW-NB15, X-IIoTID | High accuracy, hybrid effectiveness. | Dataset-specific, limited generalization. | |

| Thilagam & Aruna (2023) | Hybrid CNN-LSTM with AES encryption | NSL-KDD, UNSW-NB15 | Strong security, high accuracy | Complex process, dataset-limited | |

| Karthic & Kumar (2023) | Enhanced Conditional Random Field-based feature selection | Optimized Hybrid Deep Neural Network (OHDNN): Hybrid CNN-LSTM, optimized using Adaptive Golden Eagle Optimization | NSL-KDD, UNSW-NB15 | Improved accuracy, effective features. | Dataset-limited, high complexity. |

|

CIIC-IDS2017 Binary (Hanafi et al., 2023) |

IBGJO | LSTM | CICIDS2017, NSL-KDD |

High accuracy, effective feature selection. | Dataset-limited, reduced efficiency scaling. |

| Bowen et al. (2023) | Recursive Feature Elimination (RFE) | Hybrid CNN + BLSTM | CIC-IDS2017, IoT-23, Bot-IoT, UNSW-NB15 | Strong detection, hybrid effectiveness | Dataset-dependent, misses rare attacks |

| Li, Li & Li (2023) | GAN for data augmentation, CNN-BiLSTM with self-attention mechanism | CIC-IDS2017 | Handles imbalance, higher accuracy | High complexity, dataset-specific | |

| Vishwakarma & Kesswani (2023) | Naïve Bayes and Elliptic Envelope | NSL-KDD, UNSW-NB15, CIC-IDS2017 | High accuracy, efficient detection. | Multi-phase complexity, dataset-bound. | |

| Chinnasamy, Subramanian & Sengupta (2023a) | HBO | ANN | CIC-IDS2017 | Efficient feature selection | Dataset limited, class imbalance issue |

|

UNSW-NB15 multiclass (Bakro et al., 2024) |

Grasshopper Optimization Algorithm (GOA) and GA | Random Forest classifier | UNSW-NB15, CIC-DDoS2019, CIC Bell DNS EXF 2021 | High accuracy, improved feature selection | Complex process, high computation |

| Sayegh, Dong & Al-madani (2024) | Correlation-Based Feature Selection (CFS) Recursive Feature Elimination (RFE) |

Random Forest (RF) Support Vector Machine (SVM), K-Nearest Neighbor (KNN), Naïve Bayes (NB), Decision Tree (DT) |

UNSW-NB15 | Improved detection, handles imbalance | Oversampling risks, dataset-specific |

| Sajid et al. (2024) | Principal Component Analysis (PCA) and Information Gain (IG) | Random Forest (RF) Gradient Boosting Machine (GBM), Logistic Regression (LR), Naïve Bayes (NB), Decision Tree (DT), K-Nearest Neighbors (KNN) |

UNSW-NB15 | High detection, low FAR | Complex design, dataset-dependent |

| More et al. (2024) | Correlation Analysis and Random Sampling | Logistic Regression, Decision Tree | UNSW-NB15 | Better accuracy, improved evaluation | Dataset-specific, limited generalization |

| Yin et al. (2023) | Random Forest Importance, Recursive Feature Elimination (RFE) | MLP with two hidden layers | UNSW-NB15 dataset | Reduced features, improved accuracy | Dataset-limited, modest gains |

|

CIC-IDS2017 multiclass (Yao, Shi & Zhao, 2023) |

Bidirectional GAN (BiGAN) with Wasserstein distance, Fog-Cloud joint training | UNSW-NB15, CIC-IDS2017 | Scalable detection, reduced false alarms | Complex training, dataset-limited | |

| Bacevicius & Paulauskaite-Taraseviciene (2023) | Logistic Regression, Decision Trees | CIC-IDS2017, CSE-CIC-IDS2018 | Strong multi-class performance, interpretable results | Imbalance persists, dataset-specific | |

| Aljehane et al. (2024) | Golden Jackal Optimization Algorithm (GJOA) | Attention-based bi-directional long short-term memory (A-BiLSTM) | CIC-IDS2017 | Better accuracy, optimized feature selection | High complexity, dataset-specific |

| Ahmed et al. (2024) | Consensus Hybrid Ensemble Model (CHEM): Voting-based ensemble with RF, DT, XGBoost, MLP | Kdd99, NSL-KDD, CIC-IDS2017, BoTNeTIoT, Edge-IIoTset | Adaptive, interpretable, strong accuracy | Complex ensemble, high computation | |

| Aktar & Nur (2023) | Deep Contractive Autoencoder (DCAE) with stochastic threshold selection | CIC-IDS2017, NSL-KDD, CIC-DDoS2019 | High accuracy, effective anomaly detection | Dataset-limited, high training cost | |

| H23Q dataset (multiclass classification) (Chatzoglou et al., 2023) | Shallow and deep learning techniques (various ML models) | H23Q Dataset | New dataset, real attack coverage | Early stage, limited scope |

Handling high-dimensional data

IDS datasets are often high-dimensional, which may include noise, redundant, and irrelevant information (Chinnasamy, Malliga & Sengupta, 2022). Dimensionality reduction and feature selection are approaches employed to handle the problems related to high-dimensional data and reduce the computational complexity, improve the accuracy, and avoid overfitting (Ahmed et al., 2024; Fang et al., 2024; Meliboev, Alikhanov & Kim, 2022).

Kareem et al. (2022) proposed GTO-BSA framework that integrates “Gorilla Troops Optimizer” (GTO) and “Bird Swarm Algorithm” (BSA), using K-Nearest Neighbour for classification, achieving up to 98.7% accuracy on four datasets. GTO-BSA relies on two metaheuristics, increasing complexity and limiting scalability for large datasets. It doesn’t address data imbalance or adaptive classification, restricting use in dynamic environments. In contrast, the WGAN-GP_IMOA_DA_Ensemble framework uses IMOA-based feature selection, which retains discriminative features with lower computational overhead. It integrates data augmentation with enhanced WGAN-GP and adaptive ensemble fusion to address redundancy, imbalance, and adaptability within a unified framework.

Turukmane & Devendiran (2024) designed a hybrid IDS that uses “Advanced Synthetic Minority Oversampling Technique” (ASmoT) to tackle the problem of class imbalance. Additionally, feature extraction is performed by “Modified Singular Value Decomposition” (M-SVD). Later, essential features are identified using “Opposition-based Northern Goshawk Optimization algorithm” (ONgO). The system employs a “Mud Ring assisted multilayer support vector machine” (M-MultiSVM) classifier. The performance assessment is done by utilizing the CIC-IDS 2018 and UNSW-NB15 datasets. While effective, the pipeline is computationally demanding and lacks detailed analysis of minority-class detection or ablation to determine which modules contribute most to performance. In contrast, the WGAN-GP_IMOA_DA_Ensemble framework uses WGAN-GP for realistic data balancing and a dynamic attention-based ensemble to provide both adaptability and explainability.

Hanafi et al. (2023) introduced a hybrid IDS model called IBGJO-LSTM, where the essential features are identified by the improved Binary Golden Jackal Optimization (IBGJO). Then, the classification is done by LSTM, which is optimized through opposition-based learning (OBL) to avoid local optima. NSL KDD and CICIDS2017 datasets are utilized to assess the performance. It does not address class imbalance or the adaptive fusion of multiple learners. Our approach instead provides feature selection, imbalance handling, and dynamic attention for the explainability of the framework.

Chinnasamy, Subramanian & Sengupta (2023a) designed an IDS model that utilizes the honey badger optimization algorithm (HBO) to identify the essential features. Additionally, the classification is conducted by the Artificial Neural Network (ANN). But the system was evaluated using only one dataset and doesn’t tackle the class imbalance problem. In contrast, our approach tests the framework on CIC-IDS2017, H23Q, and UNSW-NB15 datasets, tackles the problem of class imbalance with WGAN-GP, and provides explainability through dynamic attention.

Bakro et al. (2024) designed an IDS for the cloud where feature selection is performed using a hybrid bio-inspired method that combines GOA and GA. A Random Forest classifier trained on these features was tested on CIC Bell DNS, CIC-DDoS2019, and UNSW-NB15 datasets. The framework does not explicitly address class imbalance or offer mechanisms for adaptive integration of multiple learners. The proposed model mitigates these limitations by using IMOA for lightweight feature selection, WGAN-GP to balance classes, and a DA_Ensemble to enhance scalability and generalization across diverse attack scenarios.

Aljehane et al. (2024) proposed a GJOADL-Intrusion Detection System for Network Security (IDSNS) model, where the most relevant features are identified by the Golden Jackal Optimization Algorithm (GJOA). Then, the classification is performed with Attention-based Bidirectional Long Short Term Memory (A-BiLSTM). Besides, hyperparameter tuning is performed by the SSA. However, the model didn’t tackle the class imbalance problem. The proposed framework directly addresses the class imbalance issue by leveraging WGAN-GP to produce synthetic minority class instances, ensuring balanced training and improved detection across both majority and minority attack classes.

In summary, researchers have used various bio-inspired optimization algorithms for identifying the essential features and have employed either ML or DL models for classification. The feature selection methods tackle the problems of high-dimensional data.

Handling imbalanced data

During IDS development, the dataset has an uneven distribution between attack and benign classes. Managing this imbalance remains essential. Researchers have suggested different methods to deal with the problem of class imbalance.

Meliboev, Alikhanov & Kim (2022) discussed how class imbalance in intrusion detection datasets may cause low performance, especially in identifying minority attacks. The SMOTE technique has been used to balance the data. Moreover, DL models like LSTM and CNN performed significantly better on the balanced datasets compared to the imbalanced ones. However, the framework did not address the high-dimensionality problem and lacked interpretability in its results. In contrast, the proposed WGAN-GP_IMOA_DA_Ensemble addresses the high-dimensionality problem through feature selection with IMOA and offers interpretability in results with dynamic attention.

Park et al. (2022) utilized a Boundary Equilibrium Generative Adversarial Network (BEGAN) with Wasserstein distance for generating synthetic instances for minority attack classes. Constantin et al. (2024) evaluated various GAN models, like energy-based, Wasserstein, gradient penalty, LSTM-GAN, and conditional on traffic data from 16 users, with results demonstrating improved performance of intrusion detection models.

Kumar & Sinha (2023) employed a Wasserstein Conditional Generative Adversarial Network (WCGAN) with a gradient penalty to produce synthetic attack instances for underrepresented classes. In addition, the synthetic samples were combined with the real data and evaluated with the XGBoost classifier. Jamoos et al. (2023) developed a GAN-based model, named Temporal Dilated Convolutional Generative Adversarial Network (TDCGAN), for producing synthetic instances for minority attack classes in the UGR’16 dataset. By integrating three discriminators and an election mechanism, the model ensures high-quality data generation, leading to improved detection accuracy, precision, and recall. Cai et al. (2023) suggested an IDS framework named the CycleGAN Self Attention-Recurrent Neural Network (CGSA-RNN), which enhances attack detection by addressing data imbalance through an improved CycleGAN, which performs data augmentation using style transfer. By integrating a self-attention mechanism and replacing Rectified Linear Unit (ReLU) with LeakyReLU in the CycleGAN generator, the model reduces image distortion and captures critical features more effectively. Alsirhani et al. (2023) developed a DL-based IDS that utilizes Deep Convolutional GAN (DCGAN) for data augmentation to handle the issue of imbalance in datasets. By producing realistic synthetic samples of minority attack classes, the model significantly improved detection accuracy and robustness.

Although many GAN-based methods, such as WCGAN (Kumar & Sinha, 2023), BEGAN (Park et al., 2022), DCGAN (Alsirhani et al., 2023), TDCGAN (Jamoos et al., 2023), and CycleGAN (Cai et al., 2023), successfully mitigate class imbalance by generating synthetic samples, they do not incorporate feature selection algorithms, which may lead to redundant or irrelevant features that degrade performance. Moreover, these approaches lack explainability mechanisms, making it difficult to interpret or trust the decisions of the IDS models in critical security contexts. As a result, they remain limited in practical deployment despite achieving improved accuracy on benchmark datasets.

The proposed WGAN-GP_IMOA_DA_Ensemble addresses these gaps by using IMOA for effective feature selection, reducing dimensionality, and improving detection efficiency, while the dynamic attention ensemble enhances interpretability by highlighting feature contributions. Additionally, WGAN-GP ensures balanced training data, allowing the model to achieve both high accuracy and explainability in real-world IDS.

Srivastava, Sinha & Kumar (2023) suggested an IDS using WCGAN-GP for realistic data augmentation and GA for feature selection to address data imbalance. The model, combined with a Boost classifier, outperformed traditional and state-of-the-art methods by generating high-quality synthetic samples and optimizing features. The framework did not address interpretability. In contrast, the proposed WGAN-GP_IMOA_DA_Ensemble approach introduces interpretability through a dynamic attention-based ensemble.

Deep learning-based classification

Recently, DL methods have been employed for classification tasks in developing effective IDS. Meliboev, Alikhanov & Kim (2022) explored DL architectures, including CNN, LSTM, Recurrent Neural Network (RNN), and Gated Recurrent Unit (GRU), for identifying intrusion by analyzing sequential network traffic data. Among the models tested, CNN and the CNN-LSTM hybrid performed better. However, it did not address the issue of high dimensionality. The proposed WGAN-GP_IMOA_DA_Ensemble framework introduces feature selection through IMOA and reduces dimensionality.

Altunay & Albayrak (2023) designed an IDS for Industrial IoT (IIoT) by integrating CNN and LSTM architectures to enhance threat identification. Although tested on two datasets, the model only utilized classification with basic preprocessing methods. It didn’t address issues like high dimensionality, data imbalance, and model interpretability. In contrast, the proposed WGAN-GP_IMOA_DA_Ensemble framework introduces feature selection through IMOA, which reduces dimensionality, handles class imbalance with WGAN-GP by producing realistic samples of minority attack instances, and provides interpretability through dynamic attention.

Thilagam & Aruna (2023) proposed an IDS for cloud computing environments by integrating an Lion Mutated-Genetic Algorithm (LM-GA) and a hybrid CNN-LSTM DL model. The LM-GA optimizes encryption keys for securing non-intruded data using AES encryption, while the CNN-LSTM model effectively detects intrusions by analyzing preprocessed and balanced input data. The system did not address the issue of model interpretability. In contrast, our proposed system offers model interpretability through dynamic attention.

Karthic & Kumar (2023) designed an IDS where the essential features are identified using an enhanced Conditional Random Field. Additionally, an Optimized Hybrid DeepNeural Network (OHDNN) is employed for classification. This approach focuses on performance but did not address the explainability of results and class imbalance problems. The proposed system offers interpretability through dynamic attention and utilizes WGAN-GP to tackle the class imbalance.

Li, Li & Li (2023) introduced a GAN-CNN-BiLSTM model to enhance network intrusion detection by addressing data imbalance with GAN for data augmentation and combining CNN with Bidirectional LSTM, called BiLSTM networks for classification. However, they didn’t address the problem of a high-dimensional dataset. Our proposed system addresses this limitation with IMOA-based feature selection.

Attention mechanism

In IDS, attention mechanisms enhance DL models by enabling them to concentrate on the essential parts of network traffic instances (Ragab & Sabir, 2022). This selective focus allows the models to assign higher importance to critical features indicative of malicious activity, thereby reducing false positives and improving detection accuracy. However, the class imbalance issue and problems of high dimensionality, which lead to computational overhead, persist. The proposed system overcomes these limitations with IMOA-based feature selection and WGAN-GP-based synthetic data generation.

Aljehane et al. (2024) developed an IDS that utilizes Attention-based BiLSTM (A-BiLSTM) to improve the capability of the model to focus on critical temporal patterns in intrusion samples. The system fails to handle the class imbalance issue. The suggested approach addresses the class imbalance problem through WGAN-GP-based synthetic data generation.

Ahmed et al. (2024) employed a random oversampling technique to overcome the class imbalance issue. Besides, the model provides interpretability through “Shapley Additive explanations” (SHAP) and “Local Interpretable Model-agnostic Explanations” (LIME). However, it did not address the high dimensionality issue. The proposed WGAN-GP_IMOA_DA_Ensemble addresses the high dimensionality issue through IMOA-based feature selection.

In summary, recent advancements in IDS have utilized bio-inspired optimization algorithms for feature selection, GAN-based frameworks for addressing data imbalance, and DL models for classification. Notably, the integration of attention mechanisms has further contributed to the overall improvement in performance by making them focus on critical features of network traffic, enhancing detection precision, and reducing false positives.

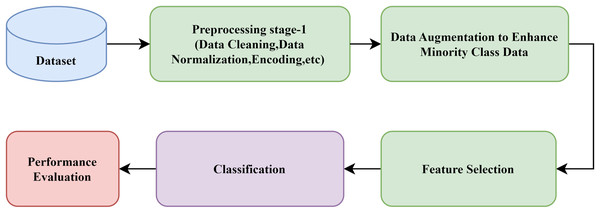

From the detailed study of the previous literature, the general block diagram for the development of an IDS is designed and is shown in Fig. 1. It has been observed that the AI-based IDS generally preprocesses the dataset, such as data cleaning, normalization, and scaling (Devendiran & Turukmane, 2024). Next, some optimization algorithms identify the essential features of the dataset. Then, the dataset is divided into training and test datasets. Later, the classification model is trained with the training dataset. Finally, the framework’s effectiveness is evaluated using performance metrics.

Figure 1: General block diagram of a typical IDS.

Materials and Methods

This section provides the details of the suggested model, including the IMOA algorithm, WGAN-GP algorithm, dataset preprocessing, proposed WGAN-GP_IMOA_DA_Ensemble model, and the dynamic attention mechanism. The source code for this research can be accessed at https://doi.org/10.5281/zenodo.17153877.

Novel Indian millipede optimization

A technique for finding the best solution to a problem from a set of possible solutions is known as an optimization algorithm (Nandhini & SVN, 2024). These algorithms are designed for maximizing or minimizing an objective function by iteratively improving candidate solutions (Devendiran & Turukmane, 2024).

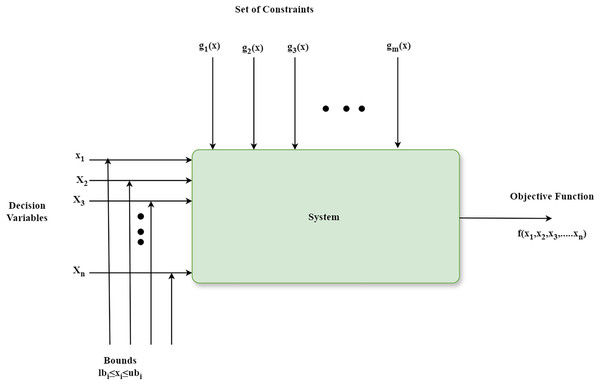

The schematic diagram that shows the components of an optimization algorithm is depicted in Fig. 2. Firstly, it has a parameter named decision variable x1, x2, …, xn that can be fine-tuned for identifying an optimal solution to a problem. Secondly, the limits on decision variables, known as bounds lbi ≤ xi ≤ ubi, define the feasible region within the search space. Next, constraints g1(x), g2(x), …, gm(x) are requirements that a solution must fulfill. Finally, a mathematical expression known as an objective function f(x1, x2, …, xn) assesses the quality of a solution by taking into account the decision variables (Otair et al., 2022). The optimization algorithms are classified broadly into traditional and metaheuristic algorithms. Metaheuristic optimization algorithms are more straightforward to comprehend and implement in comparison with conventional optimization algorithms (Jia et al., 2023). The metaheuristic optimization process finds the optimal solution xbest after numerous iterations. It yields a new solution xnew {x1, x2, …xn} in every iteration. If xnew is superior to xbest, then xbest is updated to xnew. The iterations persist until the discovered solution fulfills specific predefined criteria. The last solution is the optimal or best solution (Jia et al., 2023). Despite the numerous meta-heuristic algorithms developed in recent years, as per the No Free Lunch theorem, there is no single algorithm that can solve every problem (Fraihat et al., 2023). An algorithm that performs exceptionally well for one problem may not achieve the same effectiveness for other issues.

Figure 2: Fundamental components of the optimization algorithm.

In this article, a novel IMOA that mimics the behavior of the Indian millipede is proposed for identifying the crucial features in the very large dataset.

Indian millipede general biology

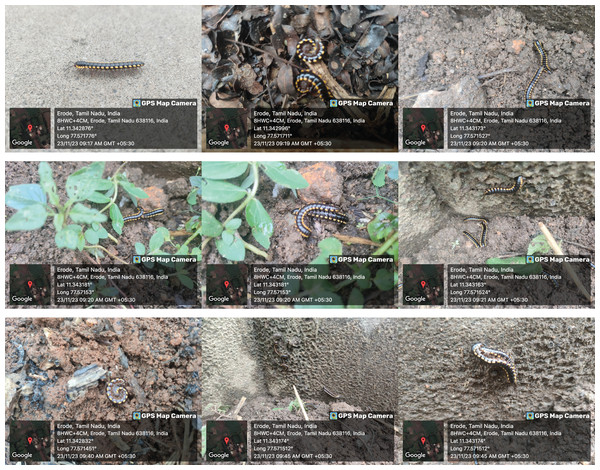

The millipedes are specialists of the soil, which can be found on the ground, in the soil, among leaf litter, or in shallow subterranean environments (Ramanathan et al., 2023). They are primarily found in the tropics and subtropics of the world because they are adapted to exist in humid environments with mild temperatures. With a length of about 21–33 mm, the adult millipede is black or dark brown. They are primarily herbivores, although they also consume wood, rotting fish, and cow dung in addition to any decaying and rotting leaves and vegetable pieces (Aswathy & Sudhikumar, 2022). Figure 3 illustrates different species of Indian millipedes along with their activities such as movement, mating, and defensive behaviors. These natural behaviors serve as the biological foundation of our proposed IMOA. The most notable behavior of millipedes that can be modelled for optimization is as follows.

-

Seasonal Abundance: Millipedes are more active during the rainy season (Usha, Vasanthi & Esaivani, 2022), which can be modelled to increase exploration during certain phases (Jia et al., 2023).

-

Obstacle Avoidance: When encountering obstacles, millipedes curl up and wait before changing direction (Anilkumar, Wesener & Moritz, 2022), which can be used to avoid local optima (Otair et al., 2022).

-

Temperature Response: Millipedes seek shady areas when temperatures are high (Aswathy & Sudhikumar, 2022), analogous to moving towards better solutions in high-stress scenarios (Jia et al., 2023).

-

Resource Utilization: Millipedes prefer areas rich in organic material (Aswathy & Sudhikumar, 2022), representing the focus on high-quality solutions (Nandhini & SVN, 2024).

-

Group Movement: Millipedes move in groups (Dave & Sindhav, 2025), indicating cooperative behavior in the algorithm (Devendiran & Turukmane, 2024).

-

Defensive Behavior: Millipedes emit a foul odor when threatened (Dave & Sindhav, 2025), analogous to penalizing poor solutions (Alsirhani et al., 2023).

-

Mating Behavior: Millipedes mate by stacking (Usha, Vasanthi & Esaivani, 2022), representing crossover operations (Jia et al., 2023).

-

Predator Avoidance: Millipedes are avoided by predators due to their odor (Dave & Sindhav, 2025), which can be used to maintain diversity by reinitializing specific populations (Jia et al., 2023).

Figure 3: Composite image showcasing varied behavioral expressions of the Indian millipede.

Inspiration

IMOA is inspired by the seasonal abundance, group movement, predator avoidance, temperature response, resource utilization, defensive behavior, and mating behavior of Indian millipedes (Usha, Vasanthi & Esaivani, 2022; Dave & Sindhav, 2025; Aswathy & Sudhikumar, 2022; Ramanathan et al., 2023; Anilkumar, Wesener & Moritz, 2022). In this, the seasonal abundance, group movement, and predator avoidance correspond to the exploration phase. On the other hand, temperature response, resource utilization, defensive behavior, and mating behavior correspond to the exploitation phase of IMOA.

Mathematical model

This section gives the mathematical equivalence of the IMOA, which simulates various behaviors of the millipedes.

Algorithmic steps

IMOA is a global optimization technique since it has the potential to include both exploration and exploitation stages. The stepwise procedure of the IMOA, adapted for feature selection in IDS, is presented in Algorithm 1.

| Input: Population size N, Maximum iterations T, Temperature threshold Tth |

| Parameters: α (seasonal activity factor), β (reversal factor), γ (learning rate), |

| δ (step size), ε (social factor), λ (penalty coefficient), η (crossover coefficient) |

| Output: Best solution found |

| 1. Initialize population P with N millipedes at random positions |

| 2. Evaluate the fitness of each millipede using the objective function f(x). |

| • If constraints are violated → apply a penalty using the coefficient λ. |

| 3. Set iteration counter . |

| 4. While (t < T and not converged) do |

| 4.1. for each millipede i in P do |

| • Seasonal Abundance: update position using periodic factor (Eqs. (4) and (5)). |

| • Obstacle Avoidance: if an obstacle is detected, apply a reversal update (Eq. (6)). |

| • Temperature Response: if Temperature > Tth, move towards best-known position (Eq. (7)). |

| • Resource Utilization: refine position using local gradient (Eq. (8)). |

| • Group Movement: move towards the population mean (Eq. (9)). |

| • Defensive Behavior: apply a penalty if poor conditions are encountered (Eq. (10)). |

| • Mating Behavior: generate offspring via crossover with another individual (Eq. (11)). |

| • Predator Avoidance: if population diversity < threshold, reinitialize to a random position (Eq. (12)). |

| 4.2. Evaluate new positions and compute fitness for all millipedes (Eq. (13)). |

| • Apply a penalty if constraints are violated (Eq. (14)). |

| 4.3. Update the best solution found so far (Xbest). |

| 4.4. Increment iteration counter (t = t + 1) (Eq. (16)). |

| 5. Return the best solution Xbest. |

The detailed steps of the suggested IMOA algorithm are given below.

Step 1: Initialization

The initialization step of IMOA begins with creating an initial population of millipedes, each positioned randomly within the defined bounds of the search space. It aims to cover a wide range of the search space, which in turn promotes diversity and enhanced exploration. The detailed initialization is as follows.

-

•

Define the Bounds of the Search Space:

Each dimension j of the search space has a lower bound lbj = xmin,j and an upper bound ubj = xmax,j.

-

•

Generate Initial Positions:

For each millipede i and dimension j, generate a random position within the bounds.

Initialization for the entire population is given by Eq. (1).

(1) where P is the candidate solution

N is the number of millipedes (population size),

d is the dimensionality of the search space,

Xi is the position vector of the ith millipede.

The initial position for each millipede i in each dimension j is given by the following Eq. (2).

(2) where rand(0, 1) is a random number uniformly distributed between 0 and 1.

Also set algorithm parameters, population size N, maximum iterations T, temperature threshold Tth, and scaling factors α, β, γ, δ, ε, λ, and η.

Step 2: Fitness Evaluation

The initial fitness evaluation in IMOA involves defining the fitness function, computing the fitness for each millipede, and applying penalties for constraint violations if necessary. This process provides the initial quality assessment of the solutions, guiding the optimization process in subsequent iterations. Let be the position vector of the ith millipede,

) be the fitness function applied to ,

λ be the penalty coefficient for constraint violations, and

) be a constraint violation function that returns a positive value if constraints are violated and zero otherwise.

The penalized fitness function is given by Eq. (3).

(3) Step 3: Iterative Process

The iterative process in the IMOA includes (i) updating the positions of millipedes based on their behaviors, (ii) evaluating their fitness, and (iii) checking for convergence. This section explains in detail he iterative procedure and its mathematical equivalents.

Iterative Loop

Set the iteration counter t = 0

For each iteration until convergence:

• Update positions based on various behaviors.

• Evaluate the fitness of new positions.

• Apply penalty for constraints (if any).

• Check for convergence.

Iterative steps

Iterative step1: Seasonal Abundance

During the rainy season, Indian millipedes experience a significant increase in activity and population (Aswathy & Sudhikumar, 2022). This trait allows increased exploration. The mathematical equivalence for the seasonal abundance for more exploration is given in Eqs. (4) and (5).

(4)

(5) where α is the scaling factor, t is the current iteration, T is the maximum number of iterations, and is the random vector.

Iterative step 2: Obstacle Avoidance

When it encounters an obstacle, the Indian millipede curls up and waits for some time. Afterwards, it changes its direction (Aswathy & Sudhikumar, 2022). This is equivalent to reversing the search when there is a poor fitness to avoid local optima trapping. The mathematical equivalence of obstacle avoidance is given in Eq. (6).

(6) where is the reversal factor.

Iterative step 3: Temperature Response

Millipedes move to cooler areas when temperatures rise above 26 °C. It is analogous to moving towards better solutions in high-stress situations. The mathematical equivalence of temperature response is given in Eq. (7). Move towards better solutions xbest, if the temperature exceeds the threshold Tth.

(7) where γ is the learning rate and is the best position found so far.

Iterative step 4: Resource Utilization

Indian millipedes utilize resources efficiently by seeking out areas with abundant degradable leaves and mud. This behavior allows them to thrive in environments rich in organic matter, ensuring their survival and growth (Usha, Vasanthi & Esaivani, 2022). This behavior can be modelled as focusing on high-fitness areas. The mathematical equivalence of resource utilization is given in Eq. (8).

(8) where δ is a step size and ) is the gradient of the fitness function.

Iterative step5: Group Movement

Indian millipedes travel in clusters and exhibit group movement, enhancing their chances of finding resources and protection (Usha, Vasanthi & Esaivani, 2022). This collective behaviour helps them navigate their environment more effectively and increases their overall survival rate. This simulates the cooperative behaviour that involves multiple agents working together and sharing information to explore the search space more effectively and improve the chances of finding optimal solutions. The mathematical equivalence of group movement is given in Eq. (9).

(9) where is a social factor.

Iterative step 6: Defensive Behavior

Indian millipedes display defensive behavior by emitting a foul odor when threatened, deterring predators and ensuring their safety. This chemical defense mechanism is crucial for their survival, as it makes them unappealing to potential threats. This can be modelled as penalizing poor solutions. The mathematical equivalence of defensive behavior is given by the penalized fitness function in Eq. (10).

(10) where, λ is a penalty coefficient.

Iterative step 7: Mating Behavior

Indian millipedes exhibit mating behavior where one millipede climbs on top of another, facilitating reproduction. This behavior is crucial for the continuation of their species and helps maintain their population in suitable environments (Usha, Vasanthi & Esaivani, 2022). It represents crossover or recombination. This process involves combining parts of two or more parent solutions to create new offspring solutions, promoting genetic diversity and enhancing the search for optimal solutions. The mathematical equivalence of mating behavior is given in Eq. (11).

(11) where is a crossover coefficient.

Iterative step 8: Predator Avoidance

Indian millipedes avoid predators by emitting a foul odor, making them unappealing to birds and other animals. This chemical defense strategy is highly effective, as it deters potential threats and ensures their safety (Usha, Vasanthi & Esaivani, 2022). It can be modelled to reinitialize specific populations to avoid premature convergence and maintain diversity. This, in turn, ensures that the algorithm explores new regions by introducing randomness and avoiding stagnation in local optima. The mathematical equivalence of mating behavior is given in Eq. (12).

(12) Iterative step 9: Evaluate Fitness

Compute the fitness of the new position.

Compute

Iterative step 10: Apply penalty for constraints (if any)

Apply a penalty for solutions that violate constraints as shown in Eq. (13).

(13) Step 4: Convergence Check

This checks if the convergence criteria are met.

Check if the maximum number of iterations T is reached or if there is no significant improvement in fitness. It is given in Eq. (14).

(14)

If converged, stop the iteration; otherwise, proceed to the next iteration by incrementing the counter as shown in Eq. (15).

(15)

Step 5: Solution Selection

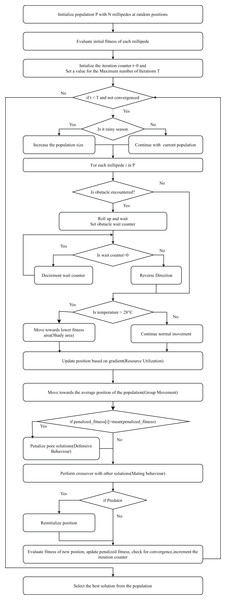

Select the best solution from the population based on the highest fitness value. Figure 4 presents the flowchart of IMOA, highlighting the initialization, fitness evaluation using mutual information, and iterative updates through seasonal activity, resource utilization, and predator avoidance. Each block corresponds to steps in Algorithm 1.

Figure 4: Flowchart of the IMOA.

Theoretical analysis of IMOA

Convergence behavior of IMOA

Optimization algorithms require a balance between global search and local refinement to ensure convergence to an optimal solution (Jia et al., 2023). IMOA achieves this by dynamically adjusting its movement strategies inspired by Indian millipede behaviors (Usha, Vasanthi & Esaivani, 2022; Dave & Sindhav, 2025; Aswathy & Sudhikumar, 2022; Ramanathan et al., 2023; Anilkumar, Wesener & Moritz, 2022). The iterative update mechanism follows a diminishing learning rate strategy, preventing stagnation in local optima while ensuring gradual convergence. A convergence proof in heuristic optimization typically relies on demonstrating that the search space coverage diminishes over time, leading the algorithm toward a stable solution (Jia et al., 2023).

Theoretical convergence guarantees

The convergence of IMOA can be analyzed through its adaptive phase transitions. In the exploration phase, the seasonal abundance (Ramanathan et al., 2023) and group movement (Usha, Vasanthi & Esaivani, 2022) behaviors allow millipedes to spread widely across the search space, reducing the likelihood of premature convergence. In the exploitation phase, temperature response (Aswathy & Sudhikumar, 2022) and resource utilization (Anilkumar, Wesener & Moritz, 2022) encourage millipedes to refine their search around promising regions, gradually stabilizing towards optimal solutions. Given that IMOA follows a structured adaptation of movement and interaction rules, it aligns with convergence properties observed in traditional nature-inspired algorithms such as GA (Fang et al., 2024), PSO (Jain et al., 2022), and GWO (Mirjalili, Mirjalili & Lewis, 2014), as shown in Table 2.

| Algorithm | Exploration strategy | Exploitation strategy | Local optima escape mechanisms | Adaptability |

|---|---|---|---|---|

| GA (Fang et al., 2024) | Random mutation | Selection pressure on fittest solution | Mutation | moderate (Static Mutation and crossover rates) |

| PSO (Jain et al., 2022) | Inertia weighted velocity updates | Position refinement based on global/local bests | No explicit escape mechanism | Moderate (Fixed inertia weight) |

| GWO (Mirjalili, Mirjalili & Lewis, 2014) | Alpha, beta, delta wolf-based exploration | Hunting mechanism via encircling prey | Leader-centric approach | Moderate (Depends on hierarchy) |

| IMOA (Proposed) | Seasonal abundance and group movement | Temperature response and resource utilization | Obstacle avoidance and Predator avoidance | High (Adaptive transition based on the optimization phase) |

Empirical evidence of convergence

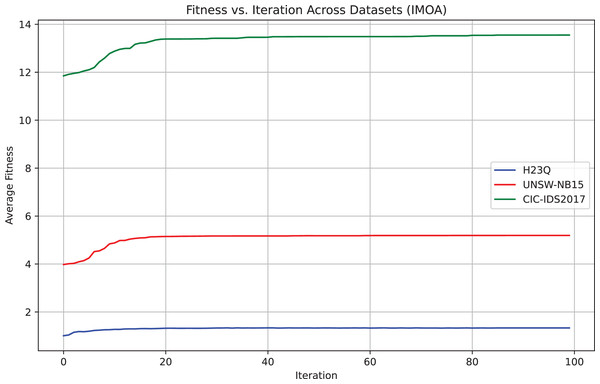

To empirically validate IMOA’s convergence, we analyze its fitness function evolution across 100 iterations on the UNSW-NB15, H23Q, and CIC-IDS2017 datasets. Figure 5 demonstrates the empirical evidence of convergence for the proposed IMOA across three benchmark datasets. The fitness curves show a consistent and smooth convergence pattern, where the algorithm rapidly improves its performance in the early iterations and gradually stabilizes as it approaches the optimal solution. CIC-IDS2017 exhibits the highest average fitness, indicating a more complex optimization landscape, while H23Q converges faster due to its relatively more straightforward structure.

Figure 5: Convergence behavior of the IMOA across UNSW-NB15,CIC-IDS2017 and H23Q datasets.

While a universal formal proof of convergence remains a research challenge for metaheuristics, IMOA’s adaptive control mechanisms ensure stable behavior comparable to GA (Fang et al., 2024), PSO (Jain et al., 2022), and GWO (Mirjalili, Mirjalili & Lewis, 2014).

Computational complexity analysis

Theoretical complexity

The computational complexity of IMOA can be analyzed and compared with traditional metaheuristics like GA, PSO, and GWO through the key steps, namely initialization, fitness evaluation, and iterative movement. These steps define its computational complexity as shown in Table 3.

| Operation | GA (Fang et al., 2024) complexity | PSO (Jain et al., 2022) complexity | GWO (Mirjalili, Mirjalili & Lewis, 2014) complexity | IMOA complexity |

|---|---|---|---|---|

| Initialization | O (N × d) | O (N × d) | O (N × d) | O (N × d) |

| Fitness evaluation | O (N) | O (N) | O (N) | O (N) |

| Iterative update | O (N) | O (N × d) | O (N2 × d) | O (N2 × d) |

| Overall complexity | O (N × d × T) | O (N × d × T) | O (N2 × d × T) | O (N2 × d × T) |

Let N be the population size.

d be the number of features and

T be the number of iterations.

The worst-case time complexity of IMOA is

O (N2 × d × T).

In theory, from Table 3, we deduce that among the algorithms compared, IMOA and GWO (Mirjalili, Mirjalili & Lewis, 2014) have higher per-iteration costs due to their group-interaction-based update strategies, unlike GA (Fang et al., 2024) and PSO (Jain et al., 2022), which primarily rely on individual-based updates.

Runtime and fitness comparisons

To empirically validate IMOA’s computational efficiency, we conducted runtime comparisons as shown in Table 4. The runtime and fitness performance analysis compares IMOA with PSO, GWO, and GA across the UNSW-NB15, CIC-IDS2017, and H23Q datasets. IMOA demonstrates a strong balance between optimization accuracy and computational efficiency, achieving significantly lower average iteration times than PSO and GA while maintaining competitive fitness values. For instance, on the UNSW-NB15 dataset, IMOA required only 31.03 s per iteration compared to 718.38 s for PSO and 824.08 s for GA. Although GWO had the fastest runtime, its fitness performance was consistently the lowest across all datasets. These results highlight IMOA’s suitability for real-time intrusion detection applications where both speed and accuracy are essential.

| Algorithm | (UNSW-NB15) | (CIC-IDS2017) | (H23Q) | |||

|---|---|---|---|---|---|---|

| Average time per iteration in sec | Average best fitness | Average time per iteration in sec | Average best fitness | Average time per iteration in sec | Average best fitness | |

| PSO (Jain et al., 2022) | 718.3821 ± 121.7650 s | 5.4856 ± 0.3687 | 34,336.9969 ± 1,560.9912 s | 14.3848 ± 0.6970 | 1,188.7140 ± 183.7473 s | 1.4882 ± 0.0851 |

| GWO (Mirjalili, Mirjalili & Lewis, 2014) | 19.9321 ± 2.7349 s | 0.3286 ± 0.0469 | 838.9168 ± 36.9396 s | 0.5139 ± 0.0148 | 71.2276 ± 4.3994 s | 0.2478 ± 0.0786 |

| GA (Fang et al., 2024) | 824.0888 ± 141.8974 s | 5.6282 ± 0.0439 | 75,434.3345 ± 749.7889 s | 13.4396 ± 1.0739 | 1,496.4866 ± 45.3354 s | 1.6351 ± 0.0232 |

| IMOA (Proposed) | 31.0347 ± 5.0961 s | 5.0811 ± 0.3663 | 2,819.3449 ± 575.5523 s | 13.3224 ± 0.6850 | 137.0148 ± 21.3123 s | 1.3121 ± 0.0168 |

Comparative advantages of IMOA over traditional metaheuristics

While numerous nature-inspired metaheuristics such as GA (Fang et al., 2024), PSO (Jain et al., 2022), and GWO (Mirjalili, Mirjalili & Lewis, 2014) exist, they often suffer from premature convergence, limited diversity, or rigid parameter adaptation. But the IMOA is not only biologically novel but also provides algorithmic benefits due to its multi-phase behavioral modelling, as given below.

-

The seasonal abundance and group movement behaviors in IMOA allow dynamic adjustment of the exploration rate based on the optimization stage. This offers greater flexibility and adaptability compared to the static inertia weights in PSO (Jain et al., 2022) or fixed crossover and mutation rates in GA (Fang et al., 2024).

-

In IMOA, the obstacle avoidance behavior introduces directional reversal and temporary stagnation, simulating a biologically inspired pause-and-redirect mechanism. This enables the algorithm to escape local optima without relying solely on random mutation or high stochasticity. In contrast, optimizers like PSO (Jain et al., 2022) and GWO (Mirjalili, Mirjalili & Lewis, 2014) may experience premature convergence due to their fixed update equations and leader-centric designs. While standard GA (Fang et al., 2024) introduces diversity through mutation, it still requires careful tuning of mutation rates and selection pressure to avoid getting trapped in suboptimal regions.

-

Temperature response in IMOA modulates search intensity based on convergence status, while predator avoidance enables selective reinitialization of stagnated agents. These behaviors help maintain population diversity. In comparison, PSO (Jain et al., 2022) and GWO (Mirjalili, Mirjalili & Lewis, 2014) lack explicit mechanisms for diversity control or stagnation recovery, and GA (Fang et al., 2024) relies on fixed mutation rates that may be suboptimal in complex search spaces. IMOA offers a more adaptive and context-aware approach to managing exploration during optimization.

-

In IMOA, resource utilization and mating behaviors guide the search toward high-fitness regions, similar to elitism in GA (Fang et al., 2024) but driven by biologically inspired selection pressures. Unlike PSO (Jain et al., 2022), which updates positions based on global and personal bests without direct exploitation of elite zones, or GWO (Mirjalili, Mirjalili & Lewis, 2014), which relies on leader-driven convergence, IMOA enables a more focused yet diverse local search guided by adaptive biological mechanisms.

Relevance to our IDS

In the proposed WGAN-GP_IMOA_DA_Ensemble framework, the IMOA was utilized to tackle the issue of feature redundancy and high dimensionality. For example, the UNSW-NB15 dataset includes 44 features, many of which are either irrelevant or overlapping. By applying IMOA, the feature space was reduced to 22 attributes, effectively removing nearly half of the redundant features while keeping the most informative ones. This reduction directly benefits real-time IDS deployment, where faster processing allows for timely attack detection. The comparative runtime and fitness analysis shown in Table 4 illustrates IMOA’s strong balance between computational efficiency and optimization accuracy. Furthermore, the adaptive exploration–exploitation strategies embedded in IMOA, such as obstacle avoidance and resource utilization, ensure that feature selection remains both accurate and robust. This makes it more suitable for IDS environments than traditional metaheuristics like PSO, GA, or GWO.

Generative adversarial networks (GAN)

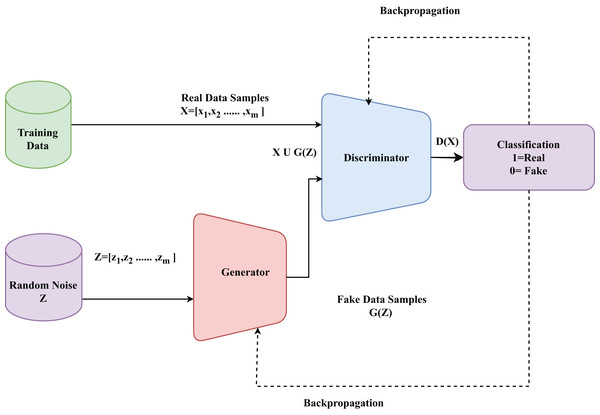

A generative model is a type of machine learning model that learns to generate new data instances that resemble the training data. Among the various generative models, the GAN (Jamoos et al., 2023) is gaining momentum due to its ability to produce highly realistic and quality data samples. The architecture of a GAN is shown in Fig. 6. The generator G creates synthetic traffic samples, while the discriminator D distinguishes between real and generated data, forming the basis of our enhanced WGAN-GP. GANs are composed of two neural networks (Cai et al., 2023): a generator and a discriminator. These networks are trained together in an adversarial process. The generator aims to create realistic data samples, while the discriminator’s objective is to differentiate between genuine and generated samples (Alsirhani et al., 2023).

Figure 6: Basic architecture of a GAN model.

The generator G is a neural network that takes a random noise vector Z = [z1, z2,……, zm] as input and generates a fake data sample G(Z) (Jamoos et al., 2023). The discriminator D is a neural network designed to evaluate data samples and produce a probability D(x) that reflects whether the sample is authentic (originating from the real dataset) or synthetic (created by the generator) (Srivastava, Sinha & Kumar, 2023). The objective function of the GANs is a minimax game between the generator and the discriminator. The generator tries to minimize the objective while the discriminator tries to maximize it. The objective function of G can be formulated as shown in Eq. (16) (Srivastava, Sinha & Kumar, 2023).

(16)

The objective function of D can be formulated as shown in Eq. (16) (Srivastava, Sinha & Kumar, 2023).

(17)

Clearly, GAN can be formulated as a minmax problem where is a value function and is defined in Eq. (18).

(18) where is a real data sample from the true data distribution .

is a random noise vector sampled from a prior distribution

is the generated data sample from the generator.

is the probability that is a real data sample.

is the probability that the generated sample It is a real data sample.

Some of the most widely used GAN models are Vanilla GAN (Jamoos et al., 2023), Conditional GAN (cGAN) (Devendiran & Turukmane, 2024), Deep Convolutional GAN (DCGAN) (Cai et al., 2023) and WGAN (Park et al., 2022). Among these models, WGAN uses the Wasserstein distance that encourages the generation of diverse samples and yields better-quality generated samples. WGAN-GP is a variation of WGAN in which the Wasserstein distance with gradient penalty is used to improve the training stability and alleviate issues such as mode collapse (Alsirhani et al., 2023). This research utilized WGAN-GP to tackle the problem of an imbalanced dataset by generating synthetic data samples that are similar to the original data samples. The WGAN utilizes the Wasserstein distance to measure the difference between the real and generated data distributions. The Wasserstein distance between two probability distributions and is given by Eq. (19) (Alsirhani et al., 2023).

(19) where denotes the set of all joint distributions γ( ) whose margins are respectively. γ( ) denotes the expectation is taken over pairs ( ) sampled from the joint distribution γ.

In WGAN-GP, the critic (discriminator) D is trained to approximate the Wasserstein distance, while the generator G is trained to minimize it. The objective function of the critic is given in Eq. (20) (Alsirhani et al., 2023).

(20) where is the gradient penalty coefficient is generated data and are the samples interpolated between real and generated data.

To ensure the critic is a 1-Lipschitz function (required for Wasserstein distance), WGAN-GP uses a gradient penalty instead of weight clipping. The gradient penalty is calculated as

(21)

With the ability to provide high-quality and diverse synthetic samples that improve model robustness and detection accuracy, WGAN-GP shows potential in augmenting data for IDS (Alsirhani et al., 2023). By using the Wasserstein distance and gradient penalty, WGAN-GP produces realistic data that accurately replicates the intricate patterns of network traffic and ensures stable training (Alsirhani et al., 2023). This improves the ability of the IDS to identify new and sophisticated attacks that may not be well represented in the original dataset. The practical training process of our enhanced WGAN-GP, which incorporates attention layers, normalization, and skip connections in the discriminator, is summarized in Algorithm 2.

| Input: Real training data Xreal, Noise distribution Z, Number of training steps T |

| Parameters: λ (gradient penalty coefficient), learning rates αG and αD |

| Output: Trained Generator G and Discriminator D |

| 1. Initialize Generator G and Discriminator D with random weights. |

| • Add attention layers, layer normalization, and skip connections to D for stability. |

| 2. For each training iteration t = 1 to T: |

| 2.1 Sample real data batch x from minority classes (e.g., Worms, Heartbleed). |

| 2.2 Sample noise vector z from distribution Z (e.g., Gaussian). |

| 2.3 Generate synthetic samples: |

| - = G(z) |

| 2.4 Update Discriminator D: |

| • Compute real score: Dr = D(x) |

| • Compute fake score: Df = D( ) |

| • Compute gradient penalty (Eq. (21)): |

| • Update D by minimizing loss: |

| LD = Df − Dr + GP |

| 2.5 Update Generator G: |

| • Sample noise vector z → generate . |

| • Update G by minimizing: |

| LG = −D( ) |

| 3. Repeat steps 2.1–2.5 until convergence. |

| 4. Return trained G and D. |

| • Use G to augment minority classes in the IDS dataset, balancing the distribution. |

WGAN-GP—relevance to our IDS

The application of enhanced WGAN-GP effectively addresses the severe class imbalance issue in IDS datasets. As shown in Table 5, minority classes such as Infiltration with 36 samples, Heartbleed with 11 samples, and Worms with 174 samples were augmented to several thousand synthetic samples. As a result, imbalance ratios are reduced from extreme values like 1:50 to near-balanced distributions such as 1:3. This augmentation is significant because conventional classifiers tend to bias toward majority classes, leading to poor recall on rare but high-impact attacks.

| Dataset | Minority classes | Number of Instances | Generated synthetic data instances using WGAN-GP | Total number of instances |

|---|---|---|---|---|

| CIC-IDS2017 | Infiltration | 36 | 4,327 | 4,363 |

| Web Attack-Sql Injection | 21 | 2,586 | 2,602 | |

| Heartbleed | 11 | 1,791 | 1,802 | |

| H23Q | Quic-enc | 1,663 | 2,000 | 3,663 |

| http-smuggle | 508 | 2,000 | 2,508 | |

| UNSW-NB15 | Worms | 174 | 2,784 | 2,958 |

By generating realistic synthetic traffic patterns, WGAN-GP improves both the diversity and representativeness of training data. For instance, the UNSW-NB15 Worms class expanded from 174 to 2,958 instances, while CIC-IDS2017 Heartbleed increased from 11 to 1,802 instances. These enriched datasets enhance the learning capability of the ensemble classifier, enabling more reliable detection of rare intrusions.

Overall, the integration of WGAN-GP into our IDS pipeline transforms theoretical generative modeling into a practical solution for skewed data distributions. It leads to measurable improvements in performance for minority attacks without introducing significant overfitting.

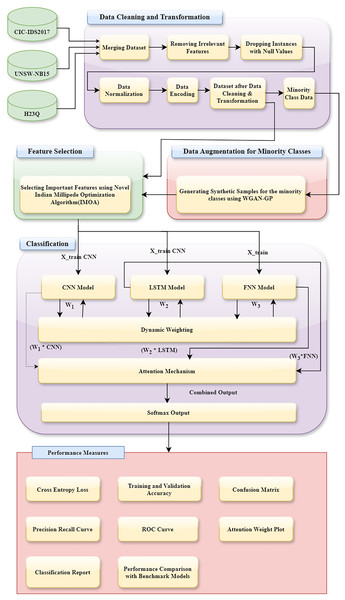

Proposed methodology

This section provides the details of the proposed methodology. It has five significant steps. (1) Description of datasets used, (2) initial data preprocessing, (3) data augmentation with WGAN-GP, (4) feature selection using IMOA, and (5) classification using a DA_Ensemble approach. The complete workflow of our IDS is illustrated in Fig. 7. The pipeline integrates IMOA-based feature selection, WGAN-GP augmentation for class balancing, and a DA based ensemble classifier for final decision-making.

Figure 7: Architecture of the proposed WGAN-GP_IMOA_DA_Ensemble method for intrusion detection.

Dataset description

This section gives a detailed description of the datasets used in this research. It uses three datasets, namely (i) CIC-IDS2017, (ii) UNSW-NB15, and (iii) H23Q, to evaluate the effectiveness of the suggested IDS as described by Table 6.

| Dataset | Attacks | Number of instances | Total .CSV files | Instances used in experiment | Number of features |

|---|---|---|---|---|---|

| CIC-IDS2017 | 1. BENIGN | 2,273,097 | Eight files in total | 2,273,097 | 79 |

| 2. DoS Hulk | 231,073 | 231,073 | |||

| 3. PortScan | 158,930 | 158,930 | |||

| 4. DDoS | 128,027 | 128,027 | |||

| 5. DoS GoldenEye | 10,293 | 10,293 | |||

| 6. FTP-Patator | 7,938 | 7,938 | |||

| 7. SSH-Patator | 5,897 | 5,897 | |||

| 8. DoS slowloris | 5,796 | 5,796 | |||

| 9. DoS Slowhttptest | 5,499 | 5,499 | |||

| 10. Bot | 1,966 | 1,966 | |||

| 11. Web Attack Brute Force | 1,507 | 1,507 | |||

| 12. Web Attack XSS | 652 | 652 | |||

| 13. Infiltration | 36 | 36 | |||

| 14. Web Attack Sql Injection | 21 | 21 | |||

| 15. Heartbleed | 11 | 11 | |||

| Total | 2,830,743 | 2,830,743 | |||

| UNSW-NB15 | 1. Normal | 93,000 | Two files (Train file, test file) | 93,000 | 45 |

| 2. Generic | 58,871 | 58,871 | |||

| 3. Exploits | 44,525 | 44,525 | |||

| 4. Fuzzers | 24,246 | 24,246 | |||

| 5. DoS | 16,353 | 16,353 | |||

| 6. Reconnaissance | 13,987 | 13,987 | |||

| 7. Analysis | 2,677 | 2,677 | |||

| 8. Backdoor | 2,329 | 2,329 | |||

| 9. Shellcode | 1,511 | 1,511 | |||

| 10. Worms | 174 | 174 | |||

| Total | 257,673 | 257,673 | |||

| H23Q | 1. Normal | 95,69,662 | In all 60 files | 1,372,539 | 200 |

| 2. HTTP3-flood | 498,810 | 6 | 62,383 | ||

| 3. Fuzzing | 22,224 | 6 | 8,046 | ||

| 4. HTTP3-loris | 74,572 | 6 | 17,502 | ||

| 5. QUIC-flood | 61,340 | 6 | 17,159 | ||

| 6. QUIC-loris | 24,269 | 6 | 3,762 | ||

| 7. QUIC-enc | 5,829 | 6 | 1,663 | ||

| 8. HTTP-smuggle | 3,337 | 6 | 508 | ||

| 9. HTTP2-concurrent | 60,273 | 6 | 8,980 | ||

| 10. HTTP2-pause | 53,549 | 6 | 7,458 | ||

| Total | 10,401,928 | 60 files | 1,500,000 |

UNSW-NB15

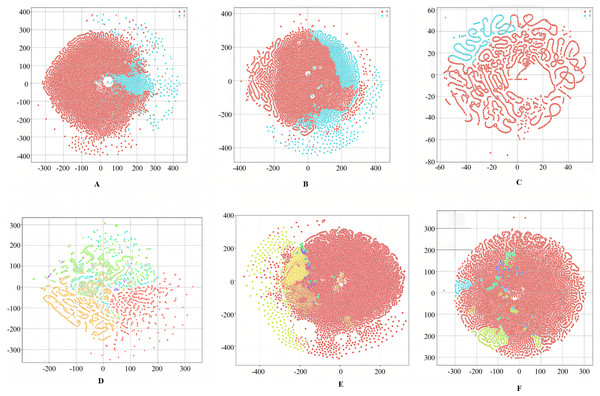

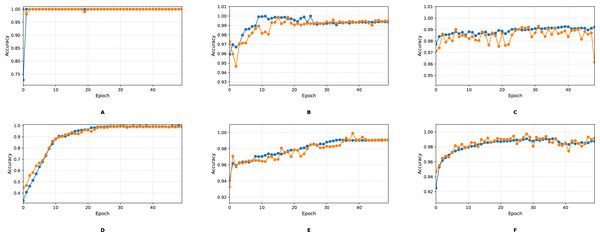

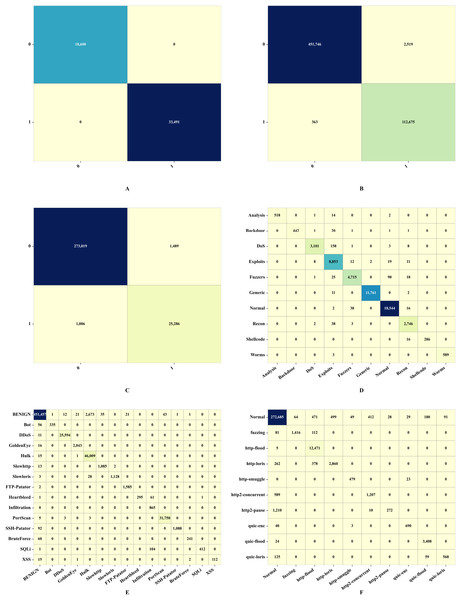

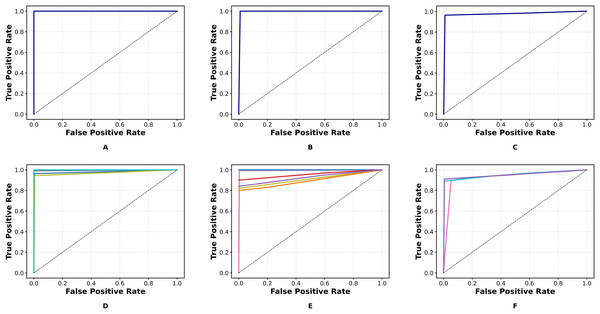

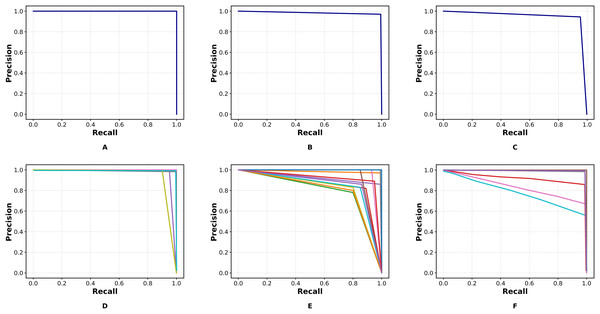

The UNSW-NB15 dataset is a comprehensive intrusion detection dataset created by the Australian Centre for Cyber Security (ACCS) (Otair et al., 2022). It is freely available for download at https://research.unsw.edu.au/projects/unsw-nb15-dataset. It consists of two .csv files, namely “UNSW_NB15_training_set.csv” of 175,341 instances and “UNSW_NB15_testing_set.csv” of 82,332 instances. It contains a total of 257,673 instances. It has nine attack categories. Various attacks and their total number of occurrences in the dataset are depicted in Table 6. It has 45 features, including four categorical features and 41 numerical features. The t-distributed Stochastic Neighbor Embedding (t-SNE) visualizations of binary and multiclass labels for this dataset are shown in Figs. 8A and 8D, respectively.

Figure 8: t-SNE visualizations of the WGAN-GP_IMOA_DA-Ensemble model for binary (A–C) and multiclass (D–F) classification on UNSW-NB15, CIC-IDS2017, and H23Q datasets.

t-SNE plots illustrating the feature space separation achieved by the proposed model for both binary and multi-class classification tasks across the UNSW-NB15, CIC-IDS2017, and H23Q datasets. Each color represents a distinct class label.CIC-IDS 2017

The Canadian Institute for Cybersecurity IDS (CIC-IDS) 2017 dataset is a comprehensive dataset designed for evaluating the performance of IDS (Jia et al., 2023). It is freely available for download at https://www.unb.ca/cic/datasets/ids-2017.html. It has 2,273,097 instances of benign samples. In addition, it has 14 attack types of a total of 557,646 instances, as shown in Table 6. It has 79 features. It has all numeric features except the label. The t-SNE visualizations of binary and multiclass labels for this dataset are shown in Figs. 8B and 8E, respectively.

H23Q

The H23Q Dataset is a comprehensive 802.3 dataset containing labelled (Aljehane et al., 2024) traces of ten attack types against Hypertext Transfer Protocol (HTTP)/2, HTTP/3, and Quick UDP (User Datagram Protocol) Internet Connections (QUIC) services, with a focus on modern HTTP/3-specific attacks. Available at https://icsdweb.aegean.gr/awid/other-datasets/H23Q in pcap and CSV formats, it has 9,569,662 normal instances and 804,203 attack instances. Additionally, this dataset has 200 features, as shown in Table 6. It has all numeric features except the label. The t-SNE visualizations of binary and multiclass labels for this dataset are shown in Figs. 8C and 8F, respectively.

Initial data preprocessing

Data preprocessing, also known as data preparation, is the process of transforming raw data into a clean and usable format (Fraihat et al., 2023). The main aim of data preprocessing is to improve the quality of the data. The proposed model uses the following data preprocessing techniques.

Merging datasets (data integration)

Usually, the IDS dataset is very large in size and is available in chunks of many files. To make use of the complete dataset for the comprehensive analysis or modelling, all the chunks need to be merged (Moustafa & Slay, 2015). Table 6 provides the count of files in each dataset, along with the total number of features and instances for each one (Fraihat et al., 2023). The CIC-IDS2017 dataset consists of a total of eight .csv files, while the UNSW-NB15 dataset contains two files. In contrast, the H23Q dataset comprises sixty files. For the CIC-IDS2017 and UNSW-NB15 datasets, all the files have been merged for utilization. Due to the substantial size of the H23Q dataset, which is 30 GB, a sample of 150,000 instances for each attack type has been extracted, resulting in a total of 1,500,000 instances for experimentation.

Data cleaning

Data cleaning is the process of identifying and correcting or removing errors or inconsistencies in the dataset (Hanafi et al., 2023). The data cleaning techniques employed for the CIC-IDS2017 dataset include removing columns that are entirely homogeneous and dropping rows with NA values. For the UNSW-NB15 dataset, the methods applied involve eliminating the ‘id’ column, which is deemed unnecessary; checking for any missing values and removing those rows; and replacing categorical columns with a value of ‘-’ with ‘None.’ In the case of the H23Q dataset, missing values for categorical features are filled using mode imputation, while numerical features are addressed with mean imputation.

Data Transformation

Data encoding is a kind of data transformation where categorical data are converted into numerical data as required by modelling and analysis (Bowen et al., 2023). In this study, label encoding was utilized for all the categorical features in binary classification to reduce dimensionality. Conversely, one-hot encoding was employed for the ‘Label’ feature in multiclass classification to avoid making any ordinal assumptions among the multiple classes. Data normalization is a data transformation technique where numerical data is scaled to a consistent range, such as 0–1 (Fraihat et al., 2023).

Min-Max Scaling: Min-max scaling transforms features to a fixed range, usually 0 and 1 (Hanafi et al., 2023). The formula for min-max scaling is

(22)

Min-max scaling was applied to the CIC-IDS 2017 and UNSW-NB15 datasets to normalize features within a bounded range of [0, 1], because these datasets have limited outlier influence.

Z-Score Scaling: Z-score scaling, also known as standardization, transforms the data to have a mean of 0 and a standard deviation of 1 (Sajid et al., 2024). The formula for z-score scaling is:

(23)

Standard scaling (z-score scaling) was utilized for the H23Q dataset, given its larger size and potentially diverse feature distributions, ensuring that all features had a mean of 0 and a standard deviation of 1 while being less sensitive to outliers.

Data augmentation with WGAN-GP

This research utilizes the WGAN-GP model to generate synthetic samples for the minority classes to address the class imbalance problem. The details of various parameters of WGAN-GP used in this study are given in Table 7. This noise vector, z ~ N(0, 1), has a dimension of z_dim = 10. The generator network has three fully connected layers, each followed by batch normalization and a Leaky ReLU activation function. The generator’s output layer applies a tanh activation function. The discriminator D is also composed of three dense layers, each followed by Leaky ReLU activations. Its final layer outputs a single scalar value D(x) for a given input x. After training, the generator G is used to produce synthetic data samples corresponding to the minority classes identified in CIC-IDS2017, UNSW-NB15, and H23Q, as shown in Table 5. For example, in UNSW-NB15, the discriminator assigns higher scores to synthetic ‘Worms’ traffic that resembles real samples, penalizing only when gradients deviate significantly from 1. This stabilizes training and avoids mode collapse. Specifically, for CIC-IDS2017, synthetic data is generated for the classes Infiltration, Web Attack—SQL Injection, and Heartbleed. In contrast, for UNSW-NB15, the targeted class is Worms, and for H23Q, they are quic-enc and http-smuggle. The created augmented dataset combines both real and synthetic samples, denoted by Xcombined and ycombined. This augmented dataset is subsequently used to train the final detection model.

| Variable | Value |

|---|---|

| z_dim | 10 |

| gp_weight | 10.0 |

| batch_size | 128 |

| epochs | 50 |

| input_dim | Based on X |

| output_dim | Based on X |

| generator_optimizer | Adam (learning rate: 10−4) |

| discriminator_optimizer | Adam (learning rate: 10−4) |

| real_data | Based on X |

| noise | Sampled from a normal distribution with dimension z_dim |

IMOA-based feature selection

Feature selection is a data pre-processing method that reduces the number of features of a dataset that is fed as input to an artificial intelligence model. It enhances the performance of the learning models by keeping only the most significant features (Moustafa & Slay, 2015). Various techniques, such as wrapper methods, filter methods, and embedded methods, are available for feature selection (Chatzoglou et al., 2023). Recently, researchers have shown phenomenal interest in using optimization algorithms for feature selection due to the improved model performance (Bowen et al., 2023). This article utilizes the IMOA algorithm for feature selection from the datasets.

IMOA mechanisms in feature selection

In this application, IMOA’s objective is to maximize the relevance of selected features based on their mutual information with the target variable (attack type or benign). This is formulated as a fitness function given in Eq. (24).

(24) where f is a binary mask indicating selected features, Xj denotes a specific feature in the dataset, and y represents the target class labels. For instance, in UNSW-NB15, IMOA evaluates feature sbytes by calculating its mutual information with the attack label. A higher MI score indicates that this feature strongly correlates with attack presence, hence it is more likely to be selected. The mutual information, MI (Xj, y), measures the statistical dependency between each selected feature Xj and y, guiding the algorithm towards high-information features. The parameter configuration of IMOA for feature selection is given in Table 8.

| Parameter | Value |

|---|---|

| Population size (N) | 20 |

| Dimensions (d) | Feature count varies based on the dataset |

| Maximum iterations (T) | 100 |

| Seasonal activity (α) | 0.1 |

| Reversal factor (β) | 0.5 |

| Learning rate (γ) | 0.1 |

| Penalty coefficient (λ) | 10 |

| Crossover coefficient (η) | 0.5 |

| Batch size | 128 |

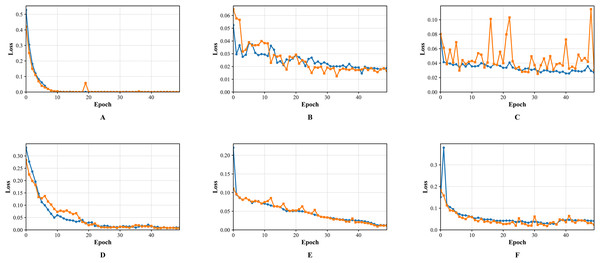

| No improvement limit | 10 |