Dynamic token encryption for preventing permission leakage in serverless architectures

- Academic Editor

- Kübra Seyhan

- Subject Areas

- Algorithms and Analysis of Algorithms, Computer Networks and Communications, Distributed and Parallel Computing, Optimization Theory and Computation, Security and Privacy

- Keywords

- Serverless computing, Access control, Data protection, Dynamic tokens, Fine-grained access control

- Copyright

- © 2025 Liu et al.

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2025. Dynamic token encryption for preventing permission leakage in serverless architectures. PeerJ Computer Science 11:e3029 https://doi.org/10.7717/peerj-cs.3029

Abstract

Serverless architecture simplifies application development and operation, but its permission control model based on static execution roles struggles to adapt to highly dynamic runtime environments, which can easily lead to the risk of permission and key leakage. To address this challenge, this article proposes a runtime dynamic token-based access control scheme. The scheme combines function context and user-defined security rules to achieve function-level dynamic authorization and request-level identity authentication. The generated dynamic tokens possess strong randomness, unpredictability, and one-time use characteristics, effectively reducing the harm caused by token leakage. Moreover, the designed multi-factor token verification model integrates dynamic factors such as call chain features and behavior patterns, which can defend against various security threats. Through social surveys, qualitative analysis, and extensive experiments, this article confirms that the proposed scheme significantly enhances the security of serverless applications while maintaining a controllable impact on platform performance. This research enriches the theoretical knowledge in the field of serverless security and provides new ideas for development practices, which is expected to promote the expansion of serverless architecture to enterprise-level scenarios and contribute to the healthy development of its ecosystem.

Introduction

In recent years, serverless computing has gained widespread attention in both academia and industry due to its unique advantages, such as automated resource management, on-demand scaling, and reduced operational overhead (Eismann et al., 2020; Kritikos & Skrzypek, 2018; Li, Leng & Chen, 2022). An increasing number of enterprises are choosing to migrate their applications to serverless platforms to lower operational costs and improve resource utilization. However, the serverless computing model also faces unique security challenges, particularly in permission management (de Oliveira, 2022; Calles, 2020; Li, Leng & Chen, 2022).

Permission management is the cornerstone of security protection in serverless platforms. The platform needs to configure corresponding execution permissions for each function to support flexible access to required cloud services. However, the complex permission configuration process not only increases the burden on development and operations but also easily introduces security vulnerabilities (Barrak et al., 2024). Improper configuration can lead to functions obtaining excessive permissions, which attackers may then exploit to access sensitive data, posing serious threats to user privacy and data security. Moreover, traditional static access control mechanisms struggle to adapt to the highly dynamic and uncertain nature of serverless applications (Sankaran, Datta & Bates, 2020). In practice, developers are often forced to grant functions broader permissions than necessary to avoid disrupting business functionality, which in turn lays the groundwork for permission abuse.

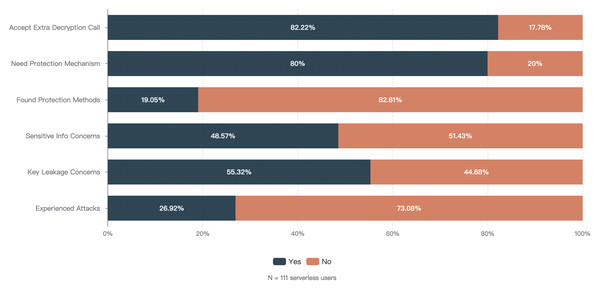

Permission management defects bring severe security risks to serverless platforms and applications. Our survey shows that 27% of serverless users have experienced various types of attacks, 55% of users are concerned about the risk of permission leakage, and 48% of users express concern about the practice of storing execution permission keys through environment variables. The industry has witnessed sensitive data leakage incidents caused by improper permission management in serverless environments, where attackers exploited overly permissive execution roles to gain unauthorized access to cloud resources (Barrak et al., 2024). These incidents highlight the inadequacies of existing mechanisms in terms of dynamicity, flexibility, and defense-in-depth.

Academia has conducted a series of explorations on serverless security issues, focusing on aspects such as execution environment isolation, cold start security, and access control optimization (Datta et al., 2020; Alpernas et al., 2018). These approaches have made important contributions to improving serverless security. However, existing work is either limited to specific scenarios or struggles to adapt to the dynamicity of serverless platforms, and cannot fundamentally solve the core problem of permission management (Jegan et al., 2020). For example, information flow control approaches (Alpernas et al., 2018) provide strong security guarantees but face challenges in practical implementation across different serverless platforms. Similarly, workflow-based security solutions (Datta et al., 2020; Sankaran, Datta & Bates, 2020) offer valuable protection but may not fully address the dynamic nature of function-level permissions.

In the industry, mainstream serverless platforms provide some security enhancement mechanisms, but their static and predefined policies are difficult to fully adapt to the flexibility and variability of serverless applications. The complex permission configuration and narrow usage scenarios also greatly reduce the usability and universality of these mechanisms. Traditional methods such as JSON Web Tokens (JWT) and key management services (KMS) are not specifically designed for the unique characteristics of serverless architectures, particularly the ephemeral nature of function instances and their dynamic execution contexts.

In summary, achieving efficient, fine-grained, and dynamic serverless permission management without sacrificing function flexibility is a critical challenge that must be addressed urgently, and it is the main research motivation of this article. To tackle this challenge, this article proposes a dynamic token scheme that achieves finer-grained control over permissions by introducing temporary tokens generated dynamically at runtime. Unlike static authorization mechanisms, our approach incorporates function execution context and request-specific information to generate unique tokens for each operation, significantly enhancing security while maintaining flexibility.

The scheme combines function context information and custom rules to generate highly dynamic and unpredictable tokens and introduces multi-factor authentication in the request verification phase to build a defense-in-depth access control system. This approach differs from traditional token-based mechanisms by tightly coupling tokens with both function execution context and request parameters, making them inherently resistant to replay attacks and unauthorized access attempts.

The main contributions of this article are as follows:

- (1) We propose a novel access control scheme based on runtime dynamic tokens that addresses the permission management challenges in serverless platforms, providing finer granularity and greater dynamicity than existing static permission models;
- (2) We develop a token generation mechanism that integrates function context information and user-defined rules, creating tokens with high dynamicity, time-effectiveness, and unpredictability, which effectively resist common attacks such as replay attacks;
- (3) We introduce a multi-factor verification process that combines request context analysis and behavioral patterns to create a comprehensive defense-in-depth permission control system;
- (4) We implement and evaluate a prototype system on mainstream serverless platforms, demonstrating through comprehensive experiments that our approach effectively enhances security while maintaining acceptable performance overhead.

The rest of this article is organized as follows: “Serverless Computing Model and Security Challenges” introduces the basic concepts and security challenges of serverless computing and reviews existing serverless security protection schemes and their limitations; “Implementation Principles of Dynamic Tokens” elaborates on the system architecture and key technologies of the proposed dynamic token scheme; “Security and Performance Evaluation” comprehensively evaluates the security and performance of the scheme around typical attack scenarios; “Discussion” reflects on the findings; “Conclusion” summarizes the work and outlines future research directions.

Serverless computing model and security challenges

Security threat analysis of serverless platforms

The unique system architecture and working methods of serverless platforms expose them to a series of new security threats that traditional security protection mechanisms struggle to effectively address (de Oliveira, 2022; Calles, 2020; Li, Leng & Chen, 2022). These threats mainly stem from the inherent characteristics of serverless platforms in terms of permission management, user code execution, and data flow.

First, the permission management mechanism of serverless platforms has inherent flaws that can lead to multiple security risks. Serverless platforms need to configure execution permissions for each function so that it can access the cloud services it requires, such as object storage and databases. In practice, when a function instance is created and started, the platform generates temporary keys from the user-configured execution permissions and the provider’s permission system, and injects these keys into environment variables for direct use by user code. However, because serverless applications are highly dynamic and unpredictable, very fine-grained permission configuration increases learning and usage costs for users (Barrak et al., 2024). As a result, developers are often forced to configure relatively lax permission sets to avoid disrupting business functionality, and official developer tools frequently default to lax permissions for the sake of user experience; both practices lay the groundwork for permission abuse and leakage. According to the OWASP Serverless Top 10 (OWASP Foundation, 2018), broken access control is a critical vulnerability where “granting functions access to unnecessary resources or excessive permissions on resources is a potential backdoor to the system.” Overly broad permission configurations significantly enlarge the attack surface, allowing attackers who obtain leaked high-privilege keys to illegally access other users’ sensitive data and critical resources, posing serious threats to user privacy and data security (Alpernas et al., 2018).

In industry, permission leakage incidents on serverless platforms have been frequent, and major security incidents caused by permission management vulnerabilities have occurred on production serverless platforms (Datta et al., 2020). For example, a well-known cloud service provider’s serverless deployment tool supports the synchronous deployment of multiple serverless functions and their related services. To improve user experience, the tool, which originally ran locally, was changed to execute in the cloud. Because the tool is component-based and loads user-declared components at execution time, it lets users customize deployment components through configuration files. Attackers could therefore construct malicious components that steal the temporary keys held in the environment variables of the deployment service during deployment, and then use these keys to access code and configuration information uploaded by other users under the account associated with the service. In another case, a mainstream cloud service provider’s WebIDE platform was built on serverless services. Execution permissions were required during function startup to perform initialization and were automatically cleared afterwards. However, after the serverless provider upgraded its platform, an incremental change in key naming conventions caused a compatibility issue that broke the WebIDE platform’s temporary key cleanup logic. Attackers could then obtain platform-level temporary keys from the WebIDE, gain the function’s execution permissions, and read other users’ function logs, which often contain business logic and sensitive data.

Although cloud service providers took remedial measures after these incidents, the incidents reflect the inherent vulnerability of serverless platforms in key management. The impact of such incidents often extends to the security of the entire cloud account (Jegan et al., 2020). Once core systems or databases are compromised, the business continuity and data confidentiality of users are seriously threatened, which is disastrous for enterprises that rely heavily on cloud services.

In addition, user code on serverless platforms often originates from complex sources, making it difficult to ensure that it is free of vulnerabilities. The OWASP Serverless Top 10 (OWASP Foundation, 2018) highlights that “attackers will try to look for a forgotten resource, like a public cloud storage, or open APIs” and that “secrets could be accidentally uploaded to the github repo, put it on a public bucket or even used hardcoded in the function.” Attackers can trigger function execution through carefully crafted malicious events and induce vulnerable function code to leak high-privilege keys, thereby gaining access to cloud platform resources. Moreover, the event-driven data flow of serverless platforms introduces new security risks (Alpernas et al., 2018). Sensitive data frequently flows between distributed functions; without end-to-end encryption and integrity verification, it can easily be intercepted and tampered with by intermediaries.

Furthermore, log auditing and anomaly detection on serverless platforms face numerous challenges. According to the OWASP Serverless Top 10 (OWASP Foundation, 2018), “applications which do not implement a proper auditing mechanism and rely solely on their service provider probably have insufficient means of security monitoring and auditing.” Traditional security information and event management systems struggle to effectively adapt to the high dynamicity and short instance lifecycle of serverless platforms. Current serverless platforms still have obvious shortcomings in providing fine-grained, comprehensive log recording and intelligent security analysis, making it very difficult to restore and investigate security incidents.

In summary, the unique technical characteristics and working methods of serverless platforms expose them to many new security threats that traditional security solutions struggle to effectively cover. The OWASP Serverless Top 10 provides a framework for understanding these threats, covering aspects such as injection, broken authentication, sensitive data exposure, broken access control, security misconfiguration, and insufficient logging and monitoring (Li, Leng & Chen, 2022). These threats involve multiple aspects such as permission management, code execution, data flow, and log auditing, and they intertwine to form a complex security threat landscape. There is an urgent need to explore a more flexible, dynamic, and fine-grained new paradigm of security protection that specifically addresses the disconnect between static permission models and highly dynamic execution environments. This paradigm must incorporate runtime context into access decisions, implement request-level permission validation, and provide defense-in-depth through multi-factor verification. This is also the starting point and focus of this article’s work.

Existing solutions and their limitations

To address the security issues in serverless architecture, academia and industry have conducted explorations at multiple levels. Following a systematic classification approach (Li, Leng & Chen, 2022), these efforts can be organized into platform-level protection, function-level controls, and application-level security enhancements.

One line of work focuses on security hardening of the serverless platform itself, such as permission management at the scheduling level (Li et al., 2022), security isolation mechanisms (Zhang et al., 2019), and timely cleanup of instance space (Alzayat et al., 2023). These efforts aim to eliminate potential security risks from the underlying architecture by improving the platform’s own security protection capabilities. Another line of work focuses on peripheral monitoring, alerting, and auditing mechanisms, such as security event notification (Agache et al., 2020) and behavior anomaly detection, aiming to discover and respond to potential security issues in a timely manner by real-time monitoring of the running state of serverless applications.

In terms of fine-grained access control, existing work has actively explored the issues of improper permission management and insufficient access control that are common in serverless platforms. Excessive permission settings and coarse-grained authorization models are the main reasons for potential unauthorized access and sensitive information leakage in serverless applications (Govind & González–Vélez, 2021). To this end, some work proposes using fine-grained security policies and dynamic authorization mechanisms to strengthen permission control in serverless platforms. Notable approaches include Valve (Datta et al., 2020), which enables fine-grained control of information flows in function workflows through network-layer monitoring, and will.iam (Sankaran, Datta & Bates, 2020), which implements a workflow-aware access control model at the point of ingress. However, traditional centralized authorization services are often tightly coupled with application code, which may affect the overall performance of the system (Sabbioni et al., 2022). Although decentralized authorization solutions can alleviate performance bottlenecks to a certain extent, they may introduce new issues such as management complexity and cross-environment compatibility when actually implemented.

Furthermore, some research attempts to enhance the security of serverless applications at the application architecture level. For example, Ouyang et al. (2023) propose combining microservice architecture with serverless computing: by splitting applications into multiple fine-grained function services and exploiting the short-lived instances and resource isolation mechanisms of the serverless platform, the security of cross-domain transactions can be enhanced. However, this architecture may further increase system complexity and raises the technical demands on development and operations personnel.

Some inherent technical characteristics of serverless computing may also introduce new security risks. For example, the automatic elastic scaling mechanism common in serverless platforms significantly improves resource utilization, but if effective monitoring and protection are lacking, it may be exploited by malicious users to launch targeted Distributed Denial of Service (DDoS) attacks (Wang et al., 2022). Additionally, to solve the problem of sensitive data sharing and protection in serverless applications, some studies propose introducing information flow control (IFC) technology (Alpernas et al., 2018). IFC achieves fine-grained security protection of data flow by security labeling data objects and computing entities and enforcing access control based on predefined flow rules. However, the actual deployment of IFC requires deep integration with the access control mechanisms of various cloud services. How to implement a unified and compatible IFC labeling system across different serverless platforms is an urgent problem to be solved.

When examining conventional security technologies in the context of serverless characteristics, fundamental limitations emerge. Serverless computing features event-driven execution, ephemeral instances, high-frequency cold starts, dynamic scaling, and multi-tenant environments. These characteristics create unique security challenges that traditional mechanisms struggle to address. For instance, JWT (JSON Web Tokens) typically operate on a time-based validity model, where tokens remain valid for predetermined periods (minutes to hours). This approach fundamentally misaligns with serverless functions’ millisecond-level execution duration, creating a significant security vulnerability window where leaked tokens remain valid long after the function execution completes. In multi-tenant serverless environments, this time-scale mismatch significantly amplifies the risk of token misuse.

Similarly, key management services (KMS) face inherent limitations when applied to serverless architectures. The per-invocation KMS API calls introduce latency that can be disproportionate to the actual function execution time, potentially becoming a performance bottleneck during rapid scaling events. This is particularly problematic in serverless’s pay-per-use economic model, where security-related overhead directly impacts operational costs. Furthermore, when applications are decomposed into dozens of fine-grained functions, configuring and managing KMS access policies for each function becomes exceedingly complex, often leading to overly permissive settings that compromise security.

Existing solutions exhibit several critical limitations when viewed through the lens of serverless computing’s unique characteristics: (1) temporal mismatch between security mechanisms designed for long-running services and the millisecond-scale execution of serverless functions; (2) inability to efficiently handle the high-frequency state changes inherent in serverless environments; (3) security overhead disproportionate to function execution time; (4) coarse-grained permission models inadequate for microfunction architectures; and (5) lack of cost-effective validation mechanisms compatible with the pay-per-use model.

A particularly significant limitation of traditional approaches is their reliance on time-based rather than invocation-based security controls. Time-based tokens like JWT remain valid for extended periods, creating unnecessary exposure windows, while serverless functions typically complete execution in milliseconds. In contrast, an invocation-based approach where tokens are generated per request and immediately invalidated after use would align more naturally with serverless execution patterns and significantly reduce the potential impact of token leakage.
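The contrast between the two validity models can be made concrete with a small Python sketch. The class names are hypothetical, and the 1-hour lifetime, 0.05 s execution time, and 10-minute replay delay are illustrative assumptions rather than measurements from this article.

```python
import secrets


class TimeBasedToken:
    """JWT-style validity: any holder can reuse the token until it expires."""

    def __init__(self, issued_at: float, lifetime_s: float) -> None:
        self.expires_at = issued_at + lifetime_s

    def is_valid(self, now: float) -> bool:
        return now < self.expires_at


class InvocationToken:
    """Invocation-scoped validity: the first successful use consumes the token."""

    def __init__(self) -> None:
        self.value = secrets.token_hex(16)
        self._used = False

    def redeem(self) -> bool:
        if self._used:
            return False
        self._used = True
        return True


# Hypothetical timeline: a 1-hour token for a function that finishes after 0.05 s,
# leaked and replayed 10 minutes later.
jwt_like = TimeBasedToken(issued_at=0.0, lifetime_s=3600)
print(jwt_like.is_valid(now=0.05 + 600))  # True: the leaked token still works

per_call = InvocationToken()
print(per_call.redeem())  # True: legitimate use during the invocation
print(per_call.redeem())  # False: a replayed copy is rejected
```

In the time-based model, the leaked token remains usable for almost the entire hour after a function that ran for a fraction of a second; in the invocation-based model, the exposure window closes at first use.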

Future research needs to explore a new paradigm of cloud-native security protection that is flexible, scalable, and consistent across platforms, grounded in the unique technical characteristics of serverless computing itself (Li, Leng & Chen, 2022). Such a paradigm should address the fundamental disconnect between existing security models and the serverless execution environment by incorporating execution context into security decisions, implementing fine-grained request-level authorization, and providing strong protection against credential theft and misuse, all while maintaining the performance benefits that make serverless computing attractive. This is also the focus of this article’s work. Only security mechanisms designed around the core architecture and working mechanisms of serverless computing can truly enable its trusted development.

Implementation principles of dynamic tokens

Design philosophy

Dynamic token generation mechanism

The dynamic token encryption and decryption mechanism consists of two main components: the generation of encrypted tokens and the verification of decrypted tokens. The generation of encrypted tokens is based on user-defined encryption rules, while the verification of decrypted tokens depends not only on user-defined encryption rules but also on additional information such as random strings and timestamps. The entire process involves dynamic and unpredictable authentication information exchanges, which is why this method is called the dynamic token encryption and decryption mechanism.

The core of the encrypted token generation mechanism is to provide a flexible and secure way to generate encrypted tokens, aiming to enhance the security of function invocations in serverless architecture. This mechanism allows for user-defined rules, including the integration of built-in dynamic parameters and custom static parameters, to achieve accurate verification and release of sensitive operations, further ensuring the security of serverless applications.

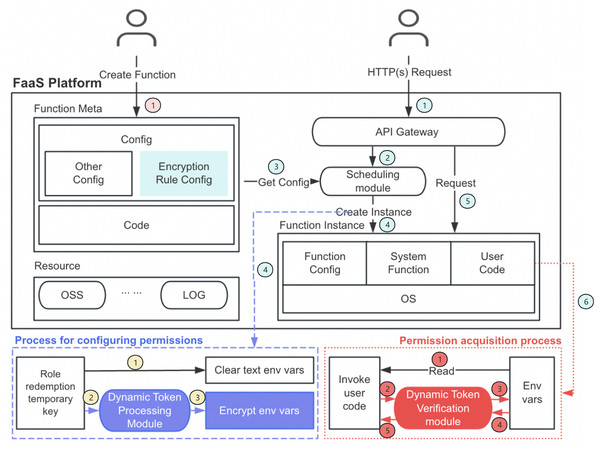

Specifically, as shown in Fig. 1, the token generation process lets users dynamically generate signature strings by combining built-in parameters of the serverless environment (such as request ID, instance ID, and timestamp) with custom rules. For example, users can set the generation rule for the signature string to the expression `$md5($requestid, $instanceid)`. This is merely one example of how dynamic parameters can be combined; users can define various rules based on their security requirements and choose different cryptographic algorithms according to their needs. Because the request ID and instance ID vary, this rule yields different token values in different instances of the same function, and even for different requests to the same function instance.
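A minimal Python sketch of evaluating such a rule follows. The rule grammar (one hash function applied to `$`-prefixed parameters), the parameter names, and the `|` separator used to join values are assumptions for illustration, not the platform’s actual syntax; `evaluate_rule` is a hypothetical helper.

```python
import hashlib
import re

# Hypothetical grammar: "$<algorithm>($param1, $param2, ...)".
RULE_PATTERN = re.compile(r"^\$(\w+)\((.*)\)$")


def evaluate_rule(rule: str, context: dict[str, str], sep: str = "|") -> str:
    """Evaluate a signature rule against built-in runtime parameters.

    `context` maps parameter names (without '$') to values supplied by the
    platform, e.g. the current request ID and instance ID.
    """
    match = RULE_PATTERN.match(rule.strip())
    if match is None:
        raise ValueError(f"unsupported rule: {rule!r}")
    algorithm, arg_list = match.groups()
    names = [arg.strip().lstrip("$") for arg in arg_list.split(",") if arg.strip()]
    try:
        message = sep.join(context[name] for name in names)
    except KeyError as missing:
        raise ValueError(f"unknown parameter: {missing}") from None
    # hashlib.new accepts names such as "md5", "sha256", "sha3_256", "blake2b".
    return hashlib.new(algorithm, message.encode("utf-8")).hexdigest()


# Same instance, two different requests.
ctx_a = {"requestid": "req-001", "instanceid": "inst-A"}
ctx_b = {"requestid": "req-002", "instanceid": "inst-A"}
rule = "$md5($requestid, $instanceid)"
print(evaluate_rule(rule, ctx_a) != evaluate_rule(rule, ctx_b))  # True
```

Because the request ID differs, the two invocations produce different signatures even though they run in the same instance.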

Figure 1: Dynamic token encryption architecture diagram.

Figure 1’s architecture diagram illustrates the complete function lifecycle and permission management process. This process can be divided into three main phases:

First is the function creation and configuration phase (pink section, step 1): Users create functions and provide metadata and code, while defining encryption rules in the configuration. The function metadata, including encryption rule configuration, is stored in the platform for subsequent processing.

Next is the cold start process (blue section, steps 1–4), which includes the permission configuration phase (blue dashed box): When an HTTP(s) request arrives (step 1), the API gateway forwards it to the scheduling module (step 2). The scheduling module retrieves the configuration information (step 3) and creates the corresponding function instance (step 4). During instance creation, the system performs role redemption to obtain temporary keys and immediately clears plaintext environment variables to eliminate direct exposure risks (yellow section, step 1). Subsequently, the dynamic token processing module processes these temporary keys according to predefined encryption rules (yellow section, steps 2–3), generating encrypted environment variables that are securely stored. This process ensures that sensitive information is protected from the very beginning, rather than existing in plaintext form in the function environment.
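The cold-start steps (redeem keys, clear the plaintext variables, keep only an encrypted copy) can be sketched as follows. This is a minimal illustration under stated assumptions: the variable names are hypothetical, a plain `dict` stands in for `os.environ`, and the “encryption” is a one-time pad from `secrets.token_bytes`, used only because Python’s standard library has no authenticated cipher. A real deployment would use an authenticated cipher such as AES-GCM.

```python
import secrets
from collections.abc import MutableMapping


class SealedStore:
    """Instance-private holder for credentials removed from the environment."""

    def __init__(self) -> None:
        self._sealed: dict[str, tuple[bytes, bytes]] = {}  # name -> (ciphertext, pad)

    def seal(self, name: str, value: str) -> None:
        plaintext = value.encode("utf-8")
        pad = secrets.token_bytes(len(plaintext))  # one-time pad: stand-in for a real cipher
        ciphertext = bytes(p ^ k for p, k in zip(plaintext, pad))
        self._sealed[name] = (ciphertext, pad)

    def unseal(self, name: str) -> str:
        # Intended to be called only by the verification module.
        ciphertext, pad = self._sealed[name]
        return bytes(c ^ k for c, k in zip(ciphertext, pad)).decode("utf-8")

    def __contains__(self, name: object) -> bool:
        return name in self._sealed


def seal_credentials(env: MutableMapping[str, str], names: list[str],
                     store: SealedStore) -> None:
    """Move the named temporary keys out of `env` so no plaintext copy remains."""
    for name in names:
        value = env.pop(name, None)
        if value is not None:
            store.seal(name, value)


env = {"TMP_ACCESS_KEY_ID": "AKID-example",
       "TMP_ACCESS_KEY_SECRET": "secret-example",
       "PATH": "/usr/bin"}
store = SealedStore()
seal_credentials(env, ["TMP_ACCESS_KEY_ID", "TMP_ACCESS_KEY_SECRET"], store)
print(sorted(env))  # ['PATH']
```

After sealing, user code that enumerates its environment no longer sees the temporary keys; they are only reachable through the store.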

Finally, there is the permission acquisition phase (red dotted box, steps 1–4) that occurs after the cold start completes (step 5 in the blue section initiates normal function invocation, leading to step 6 for sensitive information acquisition): When the user code is invoked and needs to access protected resources (red section, step 1), it attempts to read environment variables. The dynamic token verification module intercepts this request (red section, step 2), comprehensively validates the provided verification information (red section, step 3), and only after all security checks pass does it return the decrypted environment variable values (red section, step 4), allowing the code to securely access the required resources.
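The permission acquisition phase can be pictured as an accessor that releases a value only after the verification module approves the request. In this sketch, `ProtectedEnvironment`, `VerificationInfo`, and the pluggable `verifier` callback are hypothetical names, and the lambda verifier is a placeholder; the fields mirror the verification information the scheme requires (token, nonce, timestamp, and requested key).

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass(frozen=True)
class VerificationInfo:
    token: str
    nonce: str
    timestamp: int
    key: str  # name of the protected variable being requested


class ProtectedEnvironment:
    """Releases a protected value only after the verifier approves the request."""

    def __init__(self, protected: dict[str, str],
                 verifier: Callable[[VerificationInfo], bool]) -> None:
        self._protected = dict(protected)
        self._verify = verifier

    def get(self, info: VerificationInfo) -> str:
        # Verify first, so a rejected caller learns nothing about which keys exist.
        if not self._verify(info):
            raise PermissionError(f"verification failed for {info.key!r}")
        return self._protected[info.key]  # KeyError if the key is not protected here


# Placeholder verifier; a real one would recompute and check the token.
env = ProtectedEnvironment({"DB_PASSWORD": "example-secret"},
                           verifier=lambda info: info.token == "expected-token")
print(env.get(VerificationInfo("expected-token", "n-1", 1_700_000_000, "DB_PASSWORD")))
```

Keeping the verifier pluggable separates the interception point (step 2) from the validation logic (step 3).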

When requesting sensitive information, users need to provide the decryption token, a non-repeatable random string within the instance, a timestamp, and the key values of the required parameters. More formally, if we denote H as the cryptographic hash function chosen by the user (such as MD5, SHA-256, or any other algorithm based on security requirements), and | as the concatenation operator, the decryption token can be represented as:

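$$\mathrm{Token} = H\left(\mathrm{RequestID} \,|\, \mathrm{InstanceID} \,|\, \mathrm{Nonce} \,|\, \mathrm{Timestamp} \,|\, \mathrm{Key}\right) \tag{1}$$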

This formulation shows how multiple dynamic factors are combined to create a unique, context-bound token. Note that this is a flexible framework rather than a fixed implementation. The parameters in the equation represent a recommended combination, and users can adjust the specific components to their security needs, adding further contextual factors or simplifying the combination for performance. Similarly, the concatenation operation shown here can be replaced with more complex combining methods if desired. The essential requirement is that token generation and verification use exactly the same construction.

The components of this equation are defined in Table 1.

| Component | Description |
|---|---|
| RequestID | Identifies the specific request being processed, providing request-level uniqueness |
| InstanceID | Binds the token to a particular function instance, preventing cross-instance usage |
| Nonce | A random string that ensures one-time use, preventing replay attacks |
| Timestamp | Establishes the token’s validity period, ensuring tokens cannot be used indefinitely |
| Key | Represents the specific resource or data being accessed, binding the token to particular sensitive information |
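Equation (1) translates directly into code. The Python sketch below is one possible reading: it joins the fields with a literal `|` delimiter (which avoids ambiguous concatenations such as `ab` + `c` versus `a` + `bc`), encodes the timestamp as integer Unix seconds, and defaults to SHA-256. All three choices are assumptions rather than details fixed by the scheme, and `make_token` is a hypothetical name.

```python
import hashlib
import secrets
import time


def make_token(request_id: str, instance_id: str, nonce: str, timestamp: int,
               key: str, algorithm: str = "sha256") -> str:
    """Token = H(RequestID | InstanceID | Nonce | Timestamp | Key), per Eq. (1)."""
    message = "|".join([request_id, instance_id, nonce, str(timestamp), key])
    return hashlib.new(algorithm, message.encode("utf-8")).hexdigest()


# Caller side: a fresh nonce and the current time for every request.
nonce = secrets.token_hex(16)
timestamp = int(time.time())
token = make_token("req-001", "inst-A", nonce, timestamp, "DB_PASSWORD")
print(len(token))  # 64 hex characters for SHA-256
```

Changing any single field, for example the nonce, produces an unrelated digest, which is what makes each token request-specific.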

The system recomputes the expected token from the submitted parameters using the same formula and compares it with the provided decryption token. To prevent replay attacks, the system also verifies that the random string (Nonce) has not been used before and that the timestamp falls within a reasonable validity period. This anti-replay check is straightforward to implement: the function instance process typically maintains a simple list of used random strings for the lifetime of the instance.
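The verification side can be sketched in the same way. In this hypothetical `TokenVerifier`, the checks run in a fixed order (timestamp window, nonce reuse, token match), and the 10 s window is the example threshold this section uses. Comparing with `hmac.compare_digest` (constant-time) and consuming a nonce only after a successful match are design choices of this sketch, not requirements stated in the text.

```python
import hashlib
import hmac
import time
from typing import Optional

WINDOW_S = 10  # example threshold: reject if |now - timestamp| > 10 s


class TokenVerifier:
    """Per-instance verifier: recompute the token, then apply anti-replay checks."""

    def __init__(self, instance_id: str, algorithm: str = "sha256") -> None:
        self.instance_id = instance_id
        self.algorithm = algorithm
        self.used_nonces: set[str] = set()  # lives only as long as the instance

    def expected(self, request_id: str, nonce: str, timestamp: int, key: str) -> str:
        # Same formula as generation: H(RequestID | InstanceID | Nonce | Timestamp | Key).
        message = "|".join([request_id, self.instance_id, nonce, str(timestamp), key])
        return hashlib.new(self.algorithm, message.encode("utf-8")).hexdigest()

    def verify(self, token: str, request_id: str, nonce: str, timestamp: int,
               key: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        if abs(now - timestamp) > WINDOW_S:
            return False  # outside the validity window
        if nonce in self.used_nonces:
            return False  # nonce already used in this instance: replay
        candidate = self.expected(request_id, nonce, timestamp, key)
        if not hmac.compare_digest(token.encode("utf-8"), candidate.encode("utf-8")):
            return False  # submitted token does not match the recomputed one
        self.used_nonces.add(nonce)  # consume the nonce only after a successful match
        return True


verifier = TokenVerifier("inst-A")
ts = int(time.time())
tok = verifier.expected("req-001", "n-1", ts, "DB_PASSWORD")
print(verifier.verify(tok, "req-001", "n-1", ts, "DB_PASSWORD"))  # True
print(verifier.verify(tok, "req-001", "n-1", ts, "DB_PASSWORD"))  # False: replay
```

Because the instance ID is part of the recomputation, a token issued for one instance also fails verification in any other instance.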

In the dynamic token mechanism, the management of random numbers (Nonce) and timestamps is key to building replay protection. This scheme adopts a concise and efficient anti-replay mechanism, designed around the lifecycle characteristics of each function instance. Specifically, the system requires each token request to include a unique random number and current timestamp, and during verification, it checks whether this random number has been used before in the current function instance.

In implementation, the system only needs to maintain a simple list of used random numbers in the function instance’s memory, without requiring persistent storage or a global registry. This design leverages the instance-level isolation of serverless functions: each function instance is a request-scoped or limited-lifecycle execution environment with a predictable maximum execution time. Because the list gains at most one entry per token verification, its size is bounded by the number of verifications an instance can perform during its limited lifetime, so even in extreme cases it will not cause memory leaks or resource exhaustion.
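The text keeps every used nonce for the whole instance lifetime. A possible refinement, which is an assumption of this sketch rather than part of the described scheme, is to forget nonces whose tokens are already outside the validity window: such tokens would fail the timestamp check anyway, provided the token binds the nonce and timestamp together as Eq. (1) does. The cache then holds only nonces whose timestamps are still inside the window. `NonceCache` is a hypothetical name.

```python
class NonceCache:
    """In-memory record of used nonces for one function instance."""

    def __init__(self, window_s: float = 10.0) -> None:
        self.window_s = window_s
        self._seen: dict[str, float] = {}  # nonce -> timestamp carried by its token

    def check_and_add(self, nonce: str, timestamp: float, now: float) -> bool:
        """Return True and record the nonce if unseen; return False on reuse."""
        self._prune(now)
        if nonce in self._seen:
            return False
        self._seen[nonce] = timestamp
        return True

    def _prune(self, now: float) -> None:
        # A token older than the window fails the timestamp check anyway,
        # so remembering its nonce no longer adds protection.
        expired = [n for n, ts in self._seen.items() if now - ts > self.window_s]
        for n in expired:
            del self._seen[n]

    def __len__(self) -> int:
        return len(self._seen)


cache = NonceCache(window_s=10)
print(cache.check_and_add("a", 100, now=100))  # True: first use
print(cache.check_and_add("a", 100, now=105))  # False: replay within the window
```

The pruning scan is linear in the cache size, which is acceptable for a sketch whose cache is itself bounded by the window.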

Token validity is determined by timestamp comparison rather than by a fixed, long-lived expiry. At verification time, the system compares the timestamp in the token with the current time and rejects the request if the difference exceeds the configured threshold (e.g., plus or minus 10 s). Because tokens are generated as needed, this time window considerably narrows the period in which a token can be misused, which suits the brief, high-frequency operations typical of serverless workloads. Users can configure the window to match their business and security requirements; by default, the system recommends a short window to maximize security.

It is important to emphasize that the core value of the dynamic token scheme proposed in this article lies in its overall encryption architecture design and dynamic context integration mechanism, rather than in any specific hash algorithm selection. In implementation, the hash algorithm is merely a configurable component of the scheme, and users can choose appropriate algorithms based on their security requirements and performance considerations.

In our example implementation, we demonstrate the possibility of using lightweight algorithms such as MD5, primarily to provide a reference for performance-sensitive scenarios. However, the system fully supports users in employing any hash algorithm they deem appropriate, including but not limited to more modern and secure choices like SHA-256, SHA-3, and BLAKE2. In fact, our framework design allows users to explicitly specify the desired algorithm in the encryption rule configuration, and even combine multiple algorithms to create more complex signature logic.
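The configurable-algorithm idea can be illustrated with Python's standard `hmac` and `hashlib` modules, which accept algorithm names such as `"md5"`, `"sha256"`, `"sha3_256"`, and `"blake2b"`. The rule format (a list of names applied in sequence), the `SUPPORTED` set, and `sign_with_rule` are hypothetical, and keyed HMAC is our substitution for a plain hash of the factors. MD5 appears only to mirror the text. It is no longer collision-resistant and may be unavailable on FIPS-restricted Python builds.

```python
import hashlib
import hmac

# Hypothetical allow-list of algorithm names a rule may reference.
SUPPORTED = {"md5", "sha256", "sha3_256", "blake2b"}


def sign_with_rule(secret: bytes, message: bytes, algorithms: list[str]) -> str:
    """Apply a keyed hash for each configured algorithm in turn.

    A single-entry list yields a plain HMAC; longer lists chain the
    digests, as one possible way of combining multiple algorithms.
    """
    if not algorithms:
        raise ValueError("at least one algorithm must be configured")
    digest = message
    for name in algorithms:
        if name not in SUPPORTED:
            raise ValueError(f"unsupported algorithm: {name}")
        digest = hmac.new(secret, digest, digestmod=name).digest()
    return digest.hex()
```

A one-element rule such as `["sha256"]` gives ordinary HMAC-SHA256, while a rule like `["sha256", "sha3_256"]` chains two digests.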

This flexibility lets the scheme adapt to different application scenarios and security requirements while retaining its core advantage. It creates highly dynamic, one-time access tokens by combining the function execution context, request parameters, timestamps, random numbers, and other factors. Provided the chosen hash algorithm is itself sound, the dynamic nature of this multi-factor combination provides strong security assurances and effectively prevents unauthorized access and token abuse.

A request is considered legitimate, and the caller receives the corresponding key-value information, only if all of the following hold. The token must verify successfully, and the random string must appear for the first time in the current instance. The timestamp must also fall within the validity period, and the requested key-value information must match the decryption token. Otherwise, the request is rejected as illegitimate.

Compared with the traditional method of directly storing and retrieving keys in plain text in environment variables, the dynamic token encryption and decryption mechanism provides significant security advantages. In the traditional method, the keys and sensitive information stored in plain text are extremely vulnerable to capture and exploitation by malware or insider threats, leading to security risks and data leakage. The dynamic token mechanism combines the built-in parameters of the serverless environment and user-defined encryption rules, greatly enhancing the security and verification stringency of temporary keys and other permission-related information.

Because the encrypted temporary key information is generated from the request context and random factors, it resists unauthorized access and abuse. In addition, the anti-replay check, combined with the decryption token's validity period, ensures that even a maliciously intercepted decryption token cannot be reused. This stands in stark contrast to traditional plaintext storage, which lacks effective protection. By encrypting permission information and deriving tokens from unpredictable parameters, the dynamic token mechanism makes security verification more complex and tamper-resistant. It not only closes the vulnerability of reading plaintext keys directly but also establishes multiple lines of defense, significantly enhancing the robustness of the overall security architecture.

In practical scenarios like the WebIDE platform mentioned earlier, this mechanism effectively prevents sensitive information such as platform-level keys from being leaked through improper code printing or network transmission. By requiring proper token verification before accessing sensitive data, even if malicious code manages to execute within the function environment, it cannot directly access protected credentials without generating valid tokens that incorporate the correct context parameters.

The optimized dynamic token encryption and decryption mechanism provides a flexible and secure access control and data protection solution suitable for serverless application scenarios of various security levels. This mechanism ensures that applications can provide highly secure protection while maintaining efficient and stable service responses. By implementing this mechanism, serverless applications can provide dual assurance for users and developers, ensuring data security and system reliability.

Function-level token management policy

Building upon the dynamic token generation mechanism described previously, the function-level token management policy provides an implementation pathway that transforms the theoretical framework into a practical deployment solution. This strategy is founded on the principle of separation of concerns, achieving effective segregation of policy definition and execution by configuring encryption rules at the function level while performing actual encryption and decryption operations at the instance or request level.

This policy avoids the complex inheritance relationships common in traditional hierarchical permission systems and instead adopts the principle of function independence. Each function independently maintains its encryption rules, and every token operation executes in an isolated environment without depending on the state of other functions. This design aligns closely with the stateless nature of serverless computing while providing stronger security isolation. The boundaries between functions are clearly delineated, preventing permission leakage and propagation: even if a single function is compromised, the security architecture of the rest of the application remains intact.

In practical implementation, the function-level policy can be divided into three critical phases: configuration, initialization, and runtime. During the configuration phase, developers declare sensitive information requiring protection and corresponding encryption rules in the function definition. In the initialization phase, the system automatically executes an initialization function that encrypts sensitive environment variables according to predefined rules, replacing original plaintext values with ciphertext. In the runtime phase, function code accesses protected resources by generating valid tokens containing necessary contextual parameters, with the system returning decrypted information only after successful verification. This multi-phase implementation ensures that sensitive information remains protected throughout its entire lifecycle.

A core security design decision in function-level token policy is the principle of non-transferable tokens across functions. Each function constitutes an independent security domain responsible for its own token generation and verification processes, eliminating security risks that might arise from token transmission in function call chains. This decision is based on in-depth threat model analysis: in complex microservice architectures, if token transfer were allowed, any compromised function could intercept and misuse valid tokens, creating a path for privilege escalation attacks. By enforcing independent verification for each function, the system establishes a security model approaching zero trust architecture, maintaining overall security even when certain components are compromised.
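One way to enforce non-transferable tokens is to include the verifying function's own identity in the signed material, so that a token issued for one function does not verify in any other. In the sketch below, `FunctionVerifier`, the payload format, and the use of HMAC-SHA256 are assumptions for illustration. Each function is assumed to hold its own name and secret.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class FunctionVerifier:
    """One function's security domain: its own name and its own secret."""
    function_name: str
    secret: bytes

    def _sign(self, payload: str) -> str:
        # The function name is part of the signed message, binding every
        # token to the function that issued it.
        message = f"{self.function_name}|{payload}".encode("utf-8")
        return hmac.new(self.secret, message, hashlib.sha256).hexdigest()

    def issue(self, payload: str) -> str:
        return self._sign(payload)

    def verify(self, token: str, payload: str) -> bool:
        # Recomputed with this function's own name and secret, so a token
        # issued by a different function will not match.
        return hmac.compare_digest(self._sign(payload).encode("utf-8"),
                                   token.encode("utf-8"))
```

Because the name is bound into the signature, tokens stay separated even if two functions were accidentally configured with the same secret.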

From an engineering perspective, this strategy integrates naturally with modern serverless deployment tools (such as Serverless Devs), significantly reducing integration complexity. Deployment tools can automatically inject the necessary initialization code during function deployment, making the security enhancement nearly transparent to developers. This design allows security policies to be managed separately from application code, ensuring consistent security implementation without interfering with normal application development. Using Alibaba Cloud Function Compute as an example, developers can mark which information needs protection through environment variables (such as ENCRYPTION_KEYS: "DB_PASSWORD,API_KEY") and specify an initialization function for security processing, keeping the entire process independent of business logic development.
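The initialization phase can be sketched as a function that reads the comma-separated `ENCRYPTION_KEYS` list and overwrites each named variable with ciphertext before business code runs. The function name `protect_environment` is hypothetical. The `encrypt` callable is deliberately pluggable: any real cipher or platform key service could be supplied, and none is implied here. The function accepts any mutable mapping, so `os.environ` or a plain dict can be passed.

```python
from collections.abc import Callable, MutableMapping


def protect_environment(env: MutableMapping[str, str],
                        encrypt: Callable[[str, str], str],
                        list_var: str = "ENCRYPTION_KEYS") -> list[str]:
    """Replace each variable named in env[list_var] with encrypt(name, value).

    Returns the names that were protected. Names listed but missing from
    the environment are skipped rather than aborting start-up.
    """
    names = [n.strip() for n in env.get(list_var, "").split(",") if n.strip()]
    protected = []
    for name in names:
        if name in env:
            # Overwrite the plaintext so it can no longer be read from the
            # environment by dependency code.
            env[name] = encrypt(name, env[name])
            protected.append(name)
    return protected
```

With `ENCRYPTION_KEYS="DB_PASSWORD,API_KEY"`, both variables are replaced while unrelated ones, such as a region setting, are left untouched. Failing start-up on a missing name would be an equally reasonable design.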

The value of the function-level token management policy lies not only in its technical design but also in its close alignment with organizational security governance. By allowing different functions to adopt differentiated security policies, this method supports organizations in implementing risk-based security controls, concentrating limited security resources on the most critical system components. High-sensitivity functions like payment processing and authentication can be configured with stricter token generation rules (such as using the SHA-256 algorithm combined with more contextual parameters), while low-sensitivity features like logging can adopt more lightweight configurations to optimize performance. This fine-grained security resource allocation model significantly improves overall protection efficiency.
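A per-function policy table of the kind described can be expressed as plain configuration. The function names, field names, and values below (algorithms, factor lists, window sizes) are illustrative assumptions rather than settings from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPolicy:
    algorithm: str               # hash algorithm name, e.g. "sha256"
    factors: tuple[str, ...]     # context fields bound into the token
    window_seconds: int          # allowed |now - timestamp|


DEFAULT = TokenPolicy("sha256", ("data_key", "nonce", "timestamp"), 10)

# Hypothetical per-function policies keyed by function name.
POLICIES = {
    # High sensitivity: more context factors and a shorter window.
    "payment-processor": TokenPolicy(
        "sha256",
        ("data_key", "request_id", "instance_id", "nonce", "timestamp"),
        5,
    ),
    # Low sensitivity: fewer factors and a longer window.
    "log-writer": TokenPolicy("blake2b", ("data_key", "nonce", "timestamp"), 30),
}


def policy_for(function_name: str) -> TokenPolicy:
    """Look up a function's own policy; unknown functions get DEFAULT."""
    return POLICIES.get(function_name, DEFAULT)
```

Each function looks up only its own entry, consistent with the function-independence principle.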

This strategy also provides a rich data source for security analysis through its audit logging mechanism. The system records all token verification attempts, including detailed reasons for verification failures (such as nonce reuse, timestamp expiration, or token mismatch), giving security teams the ability to precisely identify potential attacks. These audit records not only support post-event analysis and forensics but can also be integrated into real-time security monitoring systems, building anomaly detection capabilities based on dynamic token behavior.

In conclusion, the function-level token management policy reconciles centralized management with distributed execution by defining security rules at the function level while performing verification at the request level. This design satisfies both the technical characteristics of serverless architecture and the best-practice principles of modern application security, providing a scalable, efficient, and implementable security framework for building large-scale serverless applications with diverse security requirements.

Security enhancement mechanisms

Encryption and decryption process

The encryption and decryption process builds upon the dynamic token generation mechanism outlined earlier, implementing a comprehensive security workflow that spans the entire lifecycle of sensitive data in serverless environments. While the previous section established the theoretical foundation of token generation, this section delves into the practical aspects of how encrypted data is processed, stored, and accessed within the system architecture.

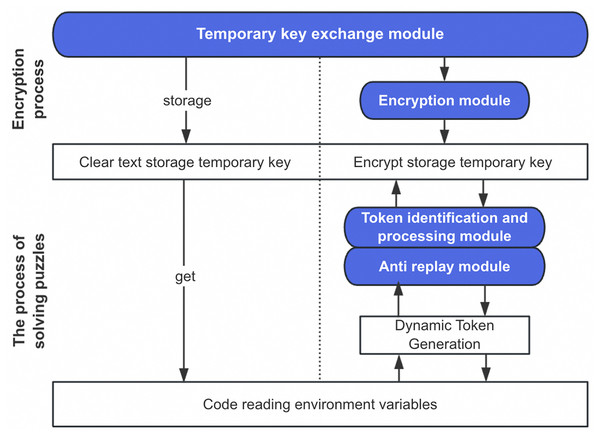

As illustrated in Fig. 2, the process implements a separation between encryption policy and execution. The encryption rules configured at the function level are stored alongside function metadata in the platform’s control plane, creating a robust association between the function definition and its security policy. This architectural decision enhances security by ensuring that encryption rules remain isolated from the function’s runtime environment, preventing potential extraction through code vulnerabilities.

Figure 2: Principle diagram of dynamic token encryption process.

From a storage perspective, the system takes a dual-layer approach to protecting sensitive data. The first layer involves replacing plaintext environment variables with their encrypted counterparts during the initialization phase. The second layer implements access control through the dynamic token verification process, creating an effective defense-in-depth strategy. This approach significantly mitigates the risk of sensitive data exposure even if an attacker gains access to the function’s environment variables, as extracting the actual values would require successfully navigating the token verification process.

The verification process follows a strict multi-step validation sequence, as shown in Algorithm 1. The system first checks the Nonce parameter against the instance's used-nonce registry, immediately rejecting any request that reuses a previously seen random string. Next, it verifies that the timestamp falls within the configured validity window. Only after these preliminary checks does the system generate the comparison token using the same rules defined for the function. This progressive validation fails fast on invalid requests, avoiding unnecessary cryptographic operations.

| Algorithm 1 |
|---|
| Require: User request containing data identifier DataKey, decryption token DecryptToken, random string Nonce, and timestamp Timestamp |
| Ensure: Allow access to sensitive information if the request is legitimate |
| 1: Verify the uniqueness of Nonce to prevent replay attacks |
| 2: Verify Timestamp to ensure DecryptToken is within the validity period |
| 3: Generate encrypted token EncryptToken using the same rules as the user |
| 4: if EncryptToken = DecryptToken then |
| 5: Grant access to the requested data |
| 6: Record the successful access attempt for auditing |
| 7: else |
| 8: Deny access and record the anomaly event for further analysis |
| 9: end if |
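Algorithm 1 can be rendered as the runnable sketch below, which follows the fail-fast order described in the text: nonce first, then timestamp, then token regeneration. The following are assumptions for illustration: the HMAC-SHA256 token construction, the factor order, the hypothetical `request_id` and `instance_id` factors, the 10 s default window, and the reason codes. Audit entries deliberately omit the token value.

```python
import hashlib
import hmac
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Request:
    data_key: str
    decrypt_token: str
    nonce: str
    timestamp: float
    request_id: str    # hypothetical context factor
    instance_id: str   # hypothetical context factor


@dataclass
class InstanceVerifier:
    """Per-instance verifier following the step order of Algorithm 1."""
    secret: bytes
    window_seconds: float = 10.0
    used_nonces: set = field(default_factory=set)
    audit: list = field(default_factory=list)

    def expected_token(self, r: Request) -> str:
        message = "|".join([r.data_key, r.request_id, r.instance_id,
                            r.nonce, str(r.timestamp)])
        return hmac.new(self.secret, message.encode("utf-8"),
                        hashlib.sha256).hexdigest()

    def verify(self, r: Request, now: Optional[float] = None) -> tuple[bool, str]:
        now = time.time() if now is None else now
        # Step 1: cheapest check first; reject a reused nonce.
        if r.nonce in self.used_nonces:
            return self._record(r, False, "nonce_reuse")
        # Step 2: the timestamp must lie within +/- window of the current time.
        if abs(now - r.timestamp) > self.window_seconds:
            return self._record(r, False, "timestamp_expired")
        # Steps 3-4: regenerate the token and compare in constant time.
        expected = self.expected_token(r)
        if not hmac.compare_digest(expected.encode("utf-8"),
                                   r.decrypt_token.encode("utf-8")):
            return self._record(r, False, "token_mismatch")
        # Step 5: accept; the nonce is remembered only now (sketch's choice).
        self.used_nonces.add(r.nonce)
        return self._record(r, True, "ok")

    def _record(self, r: Request, ok: bool, reason: str) -> tuple[bool, str]:
        # Steps 6 and 8: audit trail with data key, nonce and outcome only.
        self.audit.append((r.data_key, r.nonce, reason))
        return ok, reason
```

This sketch records the nonce only after full success, so forged requests cannot fill the registry. Algorithm 1 itself does not specify when the nonce is stored.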

A distinctive characteristic of this encryption system is its low performance overhead. Traditional encryption solutions often introduce significant latency, but our experimental evaluations (detailed in “Discussion”) show minimal overhead. This efficiency stems from several design decisions: (1) the verification process is lightweight and executed in memory, (2) the function-local nature of nonce verification eliminates cross-service communication delays, and (3) the time-bound nature of tokens aligns well with the ephemeral execution model of serverless functions. Together, these factors yield a security layer that provides robust protection without requiring additional caching mechanisms or cold-start optimizations.

The system implements a comprehensive anomaly detection and response strategy based on two primary failure categories. Function-level failures, where the instance itself encounters issues, are handled through platform-level recovery mechanisms. More critical from a security perspective are signature verification failures, which may indicate attempted unauthorized access. The system records each verification failure and implements a progressive response strategy—after a configurable threshold of consecutive failures, the instance is terminated and replaced, eliminating any potentially compromised execution environment. This self-healing approach creates a moving-target defense that significantly raises the difficulty of sustained attacks.
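The progressive response can be sketched as a counter that resets on any successful verification and fires a hook once a configurable number of consecutive failures is reached. How an instance is actually terminated and replaced is platform-specific. `on_trip` is therefore a hypothetical callback, and `ConsecutiveFailureGuard` is an illustrative name.

```python
from collections.abc import Callable


class ConsecutiveFailureGuard:
    """Track consecutive verification failures within one function instance."""

    def __init__(self, threshold: int, on_trip: Callable[[int], None]) -> None:
        if threshold < 1:
            raise ValueError("threshold must be at least 1")
        self.threshold = threshold
        self.on_trip = on_trip
        self.consecutive = 0
        self.tripped = False

    def record(self, success: bool) -> None:
        if success:
            self.consecutive = 0  # a successful verification resets the streak
            return
        self.consecutive += 1
        if self.consecutive >= self.threshold and not self.tripped:
            self.tripped = True
            # e.g. flag the instance for replacement (platform-specific).
            self.on_trip(self.consecutive)
```

The guard fires only once, since the instance is expected to be replaced after tripping.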

For open-source implementations or cross-platform deployments, the system can be extended to interface with cloud-native KMS. However, it is important to recognize the potential limitations of directly depending on platform-specific KMS solutions. These limitations include increased latency due to external API calls, potential throttling during high-concurrency scenarios, and added complexity in local development environments. The current design intentionally maintains independence from cloud provider-specific services, allowing for greater portability while still offering substantial security improvements over plaintext credential storage.

By binding each token to a specific data identifier (DataKey), the system enables fine-grained access control at the level of individual data elements. This granularity supports precise security policies that follow the principle of least privilege: each token grants access only to the specific piece of data required for the current operation. The token's tight coupling with the request context (the request and function-instance identifiers), temporal factors (Timestamp), and uniqueness guarantees (Nonce) draws a security boundary around each sensitive data access operation. Even in the unlikely event of token interception, the combination of timestamp validation and replay prevention renders the token useless outside its intended context and timeframe.

The comprehensive audit logging mechanism captures the entire lifecycle of sensitive data access, recording both successful and failed access attempts with contextual details. These logs serve multiple critical functions: they enable security teams to detect abnormal access patterns, provide evidence for forensic investigation following security incidents, and satisfy compliance requirements for sensitive data handling. The structured nature of these logs facilitates integration with security information and event management (SIEM) systems for real-time threat monitoring and automated response.

In conclusion, the encryption and decryption process implements a security model specifically tailored to the unique characteristics of serverless architectures. By combining function-level encryption policies with request-level verification, the system creates a balanced approach that delivers strong security guarantees with minimal performance impact. This approach represents a significant advancement over traditional static credential management techniques, addressing the unique security challenges of dynamic, ephemeral computing environments.

Audit log recording

The audit log recording mechanism extends the security foundation of our dynamic token system by providing comprehensive visibility and accountability across all security-relevant operations. Rather than implementing a custom logging infrastructure, our approach leverages cloud platforms’ native logging and auditing services, creating an efficient integration that maximizes both security coverage and operational efficiency.

This architectural decision offers several distinct advantages in serverless environments. First, cloud-native audit services provide built-in scalability that automatically adjusts to workload fluctuations, ensuring consistent log capture even during extreme traffic spikes. Second, platform-managed log services eliminate the persistence challenges inherent in ephemeral function instances, where locally stored logs would vanish upon function termination. Third, these services typically offer advanced features such as tamper-evident storage, encrypted transmission, and configurable retention policies that would be resource-intensive to implement independently.

To address the potential performance impact of audit logging, our implementation employs an asynchronous logging pattern specifically optimized for serverless execution models. When a security-relevant event occurs (such as token verification), the system generates a structured audit record containing all pertinent contextual information but delegates the actual transmission of this record to an asynchronous process. This approach prevents logging operations from blocking the main execution thread or extending function execution time, effectively decoupling security observability from application performance (Algorithm 2). Benchmark testing confirms that this implementation introduces negligible overhead (typically less than 1 ms per function invocation), even when recording detailed contextual data.

| Algorithm 2 |
|---|
| Require: Security event details including EventType, RequestContext, TokenData (sanitized), Result, Timestamp |
| Ensure: Event is recorded in the audit system without blocking function execution |
| 1: Create structured audit record with all security-relevant parameters |
| 2: Sanitize sensitive data from audit record (e.g., remove actual token values) |
| 3: Add function context metadata (function name, version, etc.) |
| 4: Append record to in-memory buffer with configurable size limit |
| 5: Trigger asynchronous flush if buffer threshold reached or on critical events |
| 6: Return control to main execution flow immediately |
| 7: Background process: Transmit buffered records to cloud audit service |
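Algorithm 2 can be approximated in Python with a bounded in-memory queue and a background thread that sends batches. `send_batch` stands in for a cloud audit service client (hypothetical here). The sanitization rule (dropping any field named `token`), the buffer limits, and the set of critical event types are illustrative assumptions.

```python
import queue
import threading
import time
from collections.abc import Callable

CRITICAL = {"consecutive_failures"}  # hypothetical critical event types


class AsyncAuditLogger:
    def __init__(self, send_batch: Callable[[list], None], function_name: str,
                 version: str, flush_at: int = 50, max_buffer: int = 1000) -> None:
        self._send = send_batch
        self._meta = {"function": function_name, "version": version}
        self._flush_at = flush_at
        self._buf: "queue.Queue[dict]" = queue.Queue(maxsize=max_buffer)
        self._wake = threading.Event()
        self._stop = threading.Event()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def log(self, event_type: str, **details) -> None:
        # Steps 1-3: build the record, drop token values, add function metadata.
        record = {k: v for k, v in details.items() if k != "token"}
        record.update(self._meta, event_type=event_type, logged_at=time.time())
        try:
            self._buf.put_nowait(record)  # step 4: bounded buffer
        except queue.Full:
            pass  # drop rather than block the handler (sketch's choice)
        if event_type in CRITICAL or self._buf.qsize() >= self._flush_at:
            self._wake.set()  # step 5: trigger an asynchronous flush
        # Step 6: return immediately; transmission happens in the worker.

    def _drain(self) -> None:
        batch = []
        while True:
            try:
                batch.append(self._buf.get_nowait())
            except queue.Empty:
                break
        if batch:
            self._send(batch)  # step 7: background transmission

    def _run(self) -> None:
        while not self._stop.is_set():
            self._wake.wait(timeout=1.0)
            self._wake.clear()
            self._drain()
        self._drain()  # final flush after close()

    def close(self) -> None:
        """Stop the worker after flushing any remaining records."""
        self._stop.set()
        self._wake.set()
        self._worker.join()
```

When the buffer is full, this sketch drops records rather than blocking the handler, trading audit completeness for latency. Some platforms freeze an instance between invocations, so background threads may not run after the handler returns. Calling `close()`, or adding an explicit flush, before returning may therefore be necessary.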

The audit system implements differentiated logging based on event criticality to balance comprehensive security visibility with storage efficiency. Standard operations capture essential metadata for routine successful token verifications, while security exceptions record detailed contextual information for all verification failures. For critical security events such as consecutive verification failures—a potential indicator of brute force attempts—the system employs enhanced logging that captures additional environmental variables and request details that might otherwise be omitted from standard audit records.

A key security enhancement involves integration with cloud platform alerting systems. The system defines several alert conditions based on audit patterns, particularly focusing on consecutive verification failures from the same source. When predefined thresholds are exceeded, the system automatically triggers platform-level alerts through cloud monitoring services, enabling rapid security response. This integration creates a security feedback loop where audit data drives automated defensive actions—such as temporary IP blocking or instance replacement—significantly reducing the window of opportunity for potential attackers.

For organizations operating across multiple cloud environments, our implementation provides a standardized audit schema that maintains consistent security observability regardless of the underlying platform. This approach creates a unified security view across hybrid deployments while still leveraging each platform’s native audit services for optimal performance and reliability. The audit schema includes standardized fields for event type, function context, verification parameters, result codes, and temporal information, ensuring that security analysts can correlate events across diverse environments without manual field mapping.
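A standardized schema covering the field groups listed above could be expressed as a frozen dataclass serialized to JSON, so that every platform adapter emits identical keys. The concrete field names and the example platform label are assumptions, not the article's schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AuditEvent:
    event_type: str           # e.g. "token_verification"
    platform: str             # underlying cloud, kept as data, not schema
    function_name: str        # function context
    function_version: str
    instance_id: str
    data_key: str             # verification parameters (never the token)
    nonce: str
    result_code: str          # e.g. "ok", "nonce_reuse", "timestamp_expired"
    request_timestamp: float  # temporal information
    verified_at: float

    def to_json(self) -> str:
        # sort_keys gives a stable field order for cross-platform correlation.
        return json.dumps(asdict(self), sort_keys=True)
```

Keeping the platform as a data field, rather than varying the schema per cloud, is what lets events be correlated without manual field mapping.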

To maximize the security value of audit data, the system supports integration with common security information and event management (SIEM) platforms through standardized log formats and export mechanisms. This integration enables advanced security analytics including behavioral baseline analysis to identify abnormal token usage patterns, geographic and network path anomaly detection for potential token theft, correlation of token verification activities with other security telemetry, and automated compiling of evidence for post-incident forensic investigation.

The audit system’s design also accounts for regulatory compliance requirements, with configurable retention policies and selective field encryption to address data protection regulations. For industries with specific compliance mandates (such as finance or healthcare), the system provides predefined compliance templates that automatically adjust logging detail and retention parameters to meet regulatory standards while maintaining security effectiveness.

In production environments, this comprehensive audit mechanism has demonstrated significant value in detecting sophisticated attacks that might otherwise evade detection. The detailed contextual information captured in verification failure logs enables security teams to distinguish between legitimate application errors and potential security threats, significantly reducing false positive alerts while maintaining high detection sensitivity for actual attack scenarios.

By leveraging cloud-native audit services while implementing serverless-specific optimizations, our approach creates a robust security observability layer that enhances the overall security posture of the dynamic token system without compromising the performance advantages inherent to serverless architectures.

Performance optimization considerations

In serverless computing environments, balancing performance and security is particularly crucial. The characteristics of serverless architecture (millisecond-level billing, sharp fluctuations in concurrency, and resource-constrained execution environments) impose strict performance requirements on security mechanisms. Through systematic analysis, this study implemented multi-layered performance optimizations for the dynamic token mechanism, significantly improving system responsiveness and resource utilization while maintaining security.

We first optimized the execution order of verification logic, constructing an efficient tiered verification process. This optimization is based on an analysis of the computational complexity of each verification step, reorganizing the verification process into a progressive structure from low to high computational cost: first performing simple operations such as nonce uniqueness checking and timestamp validity verification, followed by computationally intensive token generation and comparison. This “fail-fast” strategy allows invalid requests to be identified and rejected at an early stage, avoiding unnecessary high-cost cryptographic operations and significantly improving the system’s processing efficiency when facing numerous requests.

At the system architecture level, we implemented an instance-level distributed processing model, embedding token verification decision logic into each function instance. This design fundamentally changes the traditional centralized verification approach, eliminating network latency and potential bottlenecks from cross-service calls. In the technical implementation, each function instance maintains its own verification state (such as a list of used nonces) and completes the entire verification process locally, without depending on external services. This distributed architecture allows the system’s verification capability to scale linearly with the number of function instances, making it particularly suitable for the elastic scaling characteristics of serverless environments.

To provide a clear understanding of our optimization strategies, Table 2 summarizes the key improvements implemented in the dynamic token mechanism.

| Optimization aspect | Original approach | Optimized version | Purpose/Motivation |
|---|---|---|---|
| Verification order | Sequential processing of all checks | Tiered verification with fail-fast logic | Reduce unnecessary computation by early rejection of invalid requests |
| System architecture | Centralized verification service | Instance-level distributed processing | Eliminate network latency and improve scalability |
| Hash function selection | Fixed SHA-256 for all operations | Configurable algorithms based on sensitivity levels | Balance security strength with performance requirements |
| State management | External state storage | Function-local nonce registry | Reduce cross-service calls and improve response time |
| Token validation | Full validation for all requests | Progressive validation based on operation criticality | Optimize for common cases while maintaining security |

Building on the proposed code structure adjustments, we also optimized the implementation details of token verification. These optimizations include reducing unnecessary data conversions, simplifying intermediate state management during the verification process, and adopting efficient data structures for storing verification information. Although each of these optimizations may seem minor, their cumulative effect is significant in high-frequency verification scenarios, effectively reducing CPU usage and memory consumption.

In terms of integration with cloud platforms, our design fully considers the unique operating mode of serverless functions. Unlike traditional server environments, serverless function instances have short and unpredictable lifecycles, making the management of verification state particularly critical. Our optimization strategy adapts to this characteristic by efficiently managing verification state within the instance lifecycle, avoiding issues of state loss or redundant storage.

Practical deployment provides important evidence of the effectiveness of our optimization strategy. The dynamic token mechanism was successfully applied in the anycodes online programming platform, which is built on a serverless architecture and provides multi-tenant WebIDE services characterized by high concurrency and security sensitivity. The performance overhead introduced by the verification process remained within an acceptable range while sensitive information leakage was effectively prevented, even when user code contained potentially malicious behavior. This example demonstrates that the optimized dynamic token scheme is practical and effective in real production environments.

Compared to existing security solutions, our optimized approach demonstrates clear advantages. Traditional centralized verification methods (such as API gateway verification) introduce additional network calls with each request, increasing latency and limiting system scalability. Static token-based approaches (such as long-lived JWTs) offer fast verification but significantly reduced security, lacking the ability to dynamically bind to request contexts. Our approach achieves a balance between the two, combining the high performance of distributed architecture with the strong security of dynamic tokens.

In terms of adaptability to changing system loads, the optimized scheme exhibits good stability. Traditional verification methods often experience performance cliffs as load increases, while our distributed design allows the system to scale smoothly with load. Particularly in function chain call scenarios, the performance overhead of traditional methods accumulates with call depth, while our approach effectively mitigates this problem by reducing the overhead of each verification.

From both theoretical and practical perspectives, our optimization strategies achieve good performance levels within the constraints of serverless environments. While maintaining necessary security features (replay prevention, time-bound validity, context binding), the verification overhead of the system approaches the practical lower limit. Future performance improvements may primarily depend on optimizations in the underlying platform and more efficient cryptographic primitives, rather than major improvements in the verification logic itself.

The “Discussion” section will provide detailed performance test results, quantitatively demonstrating optimization effects from multiple dimensions. These data will further validate the practical value of the optimization strategies described in this section, proving that the dynamic token mechanism can meet both the performance and security requirements of serverless environments.

In conclusion, through verification process optimization, instance-level distributed processing, and code structure improvements, we have successfully addressed the performance challenges of dynamic token mechanisms in serverless environments. These optimizations not only improve system response speed and resource utilization efficiency but also maintain the security advantages of dynamic tokens, making them an ideal choice for serverless applications requiring both security and high performance. These optimization strategies and design principles are not only applicable to the specific scenarios in this research but also provide valuable references for performance optimization of other serverless security mechanisms.

Security and performance evaluation

Security analysis

Attack model analysis

In the security analysis of the dynamic token encryption and decryption method, a systematic attack model is essential for understanding potential security threats in depth. The attack model established in this research describes the actions attackers may take, their targets, and the conditions under which an attack can be carried out. It pays particular attention to security risks unique to serverless architectures. Building on the inherent defects in serverless permission management analyzed previously, this research divides attack models into two main types: generic attacks and scenario-based attacks.

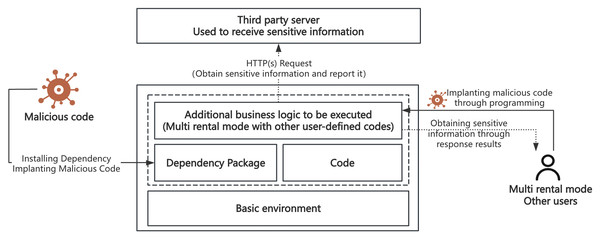

Generic attacks primarily involve security issues in open-source dependency packages. As shown on the left side of Fig. 3, attackers inject malicious code into widely used open-source dependency packages. When serverless applications reference these contaminated packages, the malicious code gains the opportunity to execute within the function environment. Such attacks are particularly dangerous in serverless environments because function execution environments typically store sensitive information such as temporary keys. Once the malicious code runs, it can send this information to attacker-controlled servers via HTTP requests. This attack pattern corresponds directly to A9 (Using Components with Known Vulnerabilities) in the OWASP Serverless Top 10. Its consequences are more severe in serverless environments, however, because leaked temporary keys may carry extensive cross-service permissions.

Figure 3: Schematic diagram of generic and scenario-based attacks.

Scenario-based attacks target specific application scenarios, especially multi-tenant environments that execute third-party code. As illustrated on the right side of Fig. 3, these attacks primarily occur in two scenarios: online programming platforms and automated deployment tools. Alibaba Cloud Function Compute WebIDE is one example. Such services allow users to edit and test code online and typically run under serverless accounts with broad permissions. If environment variables contain sensitive data and are handled improperly, platform-level key information may be leaked. Similarly, when automated deployment tools such as Serverless Devs process resource description files, maliciously crafted components may lead to key information leakage or unauthorized access. These attacks reflect serious security issues that can arise from improper configuration or insufficient security measures in specific application scenarios. They are closely related to A5 (Broken Access Control) and A6 (Security Misconfiguration) in the OWASP Serverless Top 10.

Notably, these two attack models differ significantly from attacks in traditional applications. In traditional server architectures, attackers typically need to first breach network boundaries and then attempt to gain server privileges. In serverless architectures, however, functions can be triggered by various events, each function potentially becoming an independent attack entry point, and functions are often configured with execution roles allowing access to multiple cloud services. These architectural characteristics blur permission boundaries and significantly expand the attack surface.

In response to these specific attack models, the dynamic token method proposed in this research adopts security strategies fundamentally different from traditional static authorization mechanisms. By tightly binding sensitive information with function execution context and request parameters, the dynamic token mechanism ensures that even if function code is maliciously injected, attackers cannot obtain valid keys without meeting strict verification conditions. This request-level dynamic authorization model counters the unique permission leakage risks in serverless environments, demonstrating significant advantages particularly when handling high-risk scenarios involving third-party code execution.

Constructing these targeted attack models provides a framework for the subsequent security evaluation. More importantly, it reveals the limitations of traditional security methods in serverless environments. By understanding attackers’ objectives, methods, and constraints in serverless environments, we can design more precise and effective defense strategies. These strategies strengthen the security resilience of serverless applications and support their robust operation in complex threat environments.

Qualitative evaluation of defense effect

Evaluating the defense effect is a key step in measuring the effectiveness of the dynamic token encryption and decryption method. Building on the attack models defined earlier, this section establishes a systematic framework for evaluating the defensive capabilities of the dynamic token mechanism in practical application scenarios. The framework covers four security dimensions: confidentiality protection, integrity assurance, availability maintenance, and anti-forgery/non-repudiation. Using this framework, we assess how the dynamic token method performs under different threat scenarios. We also map each scenario to the corresponding security risk categories in the OWASP Serverless Top 10.

Against token theft and abuse, the dynamic token encryption and decryption method sharply reduces the value of a stolen token by limiting its validity period and enforcing one-time use. Even if an attacker steals a token, it expires quickly and is invalidated after its first use. This leaves the attacker little opportunity to complete unauthorized access with it. This directly addresses the A2 (Broken Authentication) risk in the OWASP Serverless Top 10 (OWASP Foundation, 2018), which specifically points out: “In serverless architectures, with multiple potential entry points, services, events and triggers and no continuous flow, things can get even more complex.” The dynamic token addresses this complexity by creating a unique authentication context for each function instance and request.
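The two properties invoked above, a short validity window and single use, can be illustrated with a minimal in-memory sketch. The class name, the default two-second TTL, and the dictionary store are hypothetical choices made for illustration. They are not the article's implementation, which presumably runs across distributed instances. A token is removed the moment it is redeemed, so a replayed copy fails even inside its window, and a token redeemed after its window has passed is rejected.

```python
import secrets
import time


class OneTimeTokenStore:
    """Issues random tokens that expire quickly and can be redeemed only once.

    Hypothetical sketch: the in-memory dict and the default TTL are
    illustrative assumptions, not the article's implementation.
    """

    def __init__(self, ttl_seconds=2.0, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._issued = {}  # token -> absolute expiry time

    def issue(self):
        token = secrets.token_urlsafe(32)  # 32 random bytes, URL-safe encoded
        self._issued[token] = self.clock() + self.ttl
        return token

    def redeem(self, token):
        # pop() deletes the entry, so presenting the same token again fails
        expiry = self._issued.pop(token, None)
        if expiry is None:
            return False  # unknown, forged, or already used
        return self.clock() <= expiry  # reject if the validity window has passed


# Usage with a controllable clock
now = [0.0]
store = OneTimeTokenStore(ttl_seconds=2.0, clock=lambda: now[0])
t1 = store.issue()
print(store.redeem(t1))  # True: first use within the validity window
print(store.redeem(t1))  # False: replay of an already-used token
t2 = store.issue()
now[0] = 5.0
print(store.redeem(t2))  # False: stolen but expired before use
```

Injecting the clock keeps the example deterministic. A production verifier would also need a store shared across function instances and periodic purging of tokens that are never redeemed.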

Against token forgery attempts, token generation involves cryptographic algorithms and dynamic context information, so unauthorized users find it difficult to replicate or generate valid tokens. Security tests demonstrate that the dynamic token mechanism identifies and rejects forged token requests, which prevents forgery attacks from succeeding. In existing serverless security solutions, the principle of least privilege helps reduce unnecessary permission grants. It cannot, however, eliminate the risk of permission leakage caused by static permission configuration. This relates directly to A5 (Broken Access Control) in the OWASP Serverless Top 10 (OWASP Foundation, 2018), which clearly states: “In serverless, we do not own the infrastructure, so removing admin/root access to endpoints, servers, network and other accounts (SSH, logs, etc.,) is not an issue. Rather, granting functions access to unnecessary resources or excessive permissions on resources is a potential backdoor to the system.” By introducing dynamic tokens, the optimized scheme makes temporary keys significantly harder to obtain. The scheme does not adjust permissions dynamically. Even so, this enhancement effectively limits the risk that temporary keys are leaked or maliciously acquired when permissions are configured too broadly.
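Why forgery fails can be shown with a keyed signature over the token's context fields. The article does not specify its token algorithm or format. The following sketch therefore assumes HMAC-SHA256 over a hypothetical `instance|request|expiry` payload, and every name in it is illustrative. Without the issuer's key an attacker cannot produce a matching signature. Editing any field breaks the signature, and a genuine token presented for a different instance or request is also refused.

```python
import hashlib
import hmac
import secrets


def make_token(key: bytes, instance_id: str, request_id: str, expires_at: int) -> str:
    # Hypothetical layout "instance|request|expiry|signature"; IDs must not contain "|"
    payload = f"{instance_id}|{request_id}|{expires_at}"
    sig = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}|{sig}"


def verify_token(key: bytes, token: str, instance_id: str, request_id: str, now: int) -> bool:
    parts = token.split("|")
    if len(parts) != 4:
        return False  # malformed token
    inst, req, exp, sig = parts
    expected = hmac.new(key, f"{inst}|{req}|{exp}".encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig.encode(), expected.encode()):
        return False  # forged or tampered: signature does not match
    if (inst, req) != (instance_id, request_id):
        return False  # genuine token presented outside its bound context
    return now <= int(exp)  # expiry is covered by the signature, so int() is safe


key = secrets.token_bytes(32)  # held by the token issuer, never by function code
tok = make_token(key, "inst-1", "req-42", expires_at=1000)
print(verify_token(key, tok, "inst-1", "req-42", now=900))  # True
print(verify_token(key, tok.replace("req-42", "req-43"), "inst-1", "req-43", now=900))  # False: tampered
print(verify_token(secrets.token_bytes(32), tok, "inst-1", "req-42", now=900))  # False: wrong key
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many leading characters of a guessed signature are correct.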

The dynamic token method also provides effective defense against the dependency package security issues (generic attacks) analyzed earlier. This problem corresponds directly to A9 (Using Components with Known Vulnerabilities) in the OWASP Serverless Top 10 (OWASP Foundation, 2018), which states: “Serverless functions are usually small and used for micro-services. To be able to execute the desired tasks, they make use of many dependencies and 3rd-party libraries.” In serverless environments, functions frequently depend on external libraries. If these libraries are maliciously modified or “poisoned,” they may become sources of security vulnerabilities. The OWASP document describes a typical case in which attackers contaminate the url-parse library to carry out server-side request forgery (SSRF) attacks. The optimized dynamic token mechanism applies a second layer of encryption to the credentials that grant permissions for sensitive operations. As a result, even if dependent libraries are poisoned, malicious code cannot directly exploit the temporary keys in environment variables. Concretely, the system replaces sensitive information in the original environment variables with encrypted versions. When the function executes, injected malicious code that reads the environment variables obtains only encrypted content. Decrypting and using this information requires a valid dynamic token, which can only be generated under specific execution context conditions and after strict verification. Token generation requires dynamic parameters such as the function instance ID and request ID. Malicious code typically cannot satisfy these conditions and therefore cannot generate valid tokens to decrypt sensitive information.
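The defense described above can be sketched as follows, under several assumptions the article does not state. A trusted platform component holds a master key outside the function's environment. It seals each secret before placing it in an environment variable, and it releases the plaintext only to a caller whose token matches the live instance ID and request ID. The Python standard library has no block cipher, so this sketch swaps in HMAC-SHA256 in counter mode with an encrypt-then-MAC tag. A real deployment would use an authenticated cipher such as AES-GCM from a vetted library. All function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def _subkey(master: bytes, label: bytes) -> bytes:
    # Derive separate keys for encryption, integrity, and token signing
    return hmac.new(master, label, hashlib.sha256).digest()


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # HMAC-SHA256 used as a PRF in counter mode (illustrative; prefer AES-GCM in production)
    blocks = [
        hmac.new(key, nonce + i.to_bytes(4, "big"), hashlib.sha256).digest()
        for i in range((length + 31) // 32)
    ]
    return b"".join(blocks)[:length]


def seal(master: bytes, plaintext: str) -> str:
    """Encrypt-then-MAC a secret so only ciphertext sits in the environment."""
    nonce = secrets.token_bytes(16)
    data = plaintext.encode()
    stream = _keystream(_subkey(master, b"enc"), nonce, len(data))
    ct = bytes(a ^ b for a, b in zip(data, stream))
    tag = hmac.new(_subkey(master, b"mac"), nonce + ct, hashlib.sha256).digest()
    return (nonce + tag + ct).hex()


def issue_token(master: bytes, instance_id: str, request_id: str) -> str:
    # Hypothetical token: a MAC over the live instance ID and request ID
    msg = f"{instance_id}:{request_id}".encode()
    return hmac.new(_subkey(master, b"tok"), msg, hashlib.sha256).hexdigest()


def unseal_with_token(master: bytes, sealed_hex: str, token: str,
                      instance_id: str, request_id: str) -> str:
    """Decrypt only when the token matches the current execution context."""
    expected = issue_token(master, instance_id, request_id)
    if not hmac.compare_digest(token.encode(), expected.encode()):
        raise PermissionError("dynamic token does not match this instance/request")
    raw = bytes.fromhex(sealed_hex)
    nonce, tag, ct = raw[:16], raw[16:48], raw[48:]
    check = hmac.new(_subkey(master, b"mac"), nonce + ct, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, check):
        raise ValueError("sealed value failed its integrity check")
    stream = _keystream(_subkey(master, b"enc"), nonce, len(ct))
    return bytes(a ^ b for a, b in zip(ct, stream)).decode()


# The master key stays with a trusted platform component; function code sees only `env`
master = secrets.token_bytes(32)
env = {"DB_PASSWORD": seal(master, "s3cr3t")}  # all that injected code could read
tok = issue_token(master, "inst-7", "req-1")
print(unseal_with_token(master, env["DB_PASSWORD"], tok, "inst-7", "req-1"))  # s3cr3t
```

Code that reads `env` obtains only a hex string. Code that replays a token under another request ID receives a `PermissionError`, because the expected token is recomputed from the live context.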

For the scenario-based attacks defined earlier, the dynamic token method shows significant advantages in multi-tenant environments. These attacks correspond to A3 (Sensitive Data Exposure) and A6 (Security Misconfiguration) in the OWASP Serverless Top 10 (OWASP Foundation, 2018). Category A3 particularly emphasizes: “In serverless, writing data to the /tmp directory without deleting it after use, based on the assumption that the container will die after the execution, could lead into sensitive data leakage in case the attacker gains access to the environment.” WebIDE platforms, for example, allow users to upload and execute custom code. They therefore face the risk that user code will try to read temporary keys from environment variables. The “Poisoning the Well” attack described in the OWASP document illustrates a related threat: attackers gain long-term persistence in the application through upstream attacks and then patiently wait for the new version to make its way into cloud applications. With traditional methods, code that gains execution privileges can typically read plaintext information in environment variables directly. With the dynamic token mechanism, sensitive information in environment variables is stored in encrypted form. Even if the attacker’s code executes, it cannot directly use this information.

Category A6 (Security Misconfiguration) further points out: “Misconfiguration in serverless could lead to sensitive information leakage, money loss, DoS or in severe cases, unauthorized access to cloud resources.” The dynamic token method provides an additional security layer that effectively prevents sensitive information from being directly exploited even in cases of misconfiguration. The OWASP document describes a scenario where cloud storage is misconfigured and has public upload (write object) access, allowing users to directly upload files with their own accounts. If the upload event triggers internal functionality, an attacker could use that to manipulate the application execution flow. The dynamic token mechanism, by requiring token verification at each critical operation point, ensures that only legitimate requests can execute sensitive operations, effectively blocking such attack paths.
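Requiring token verification at each critical operation point maps naturally onto a guard that wraps every sensitive handler. The following is a minimal Python sketch using a decorator. The hard-coded signing key, the `operation:request_id` token format, and the `process_upload` handler are all hypothetical. In the misconfigured-storage scenario, an event triggered by a public upload reaches the internal function without a valid token, so the guarded operation never runs.

```python
import functools
import hashlib
import hmac

# Hypothetical key; in practice it would live in a token service, not in code
SIGNING_KEY = b"demo-signing-key"


def expected_token(operation: str, request_id: str) -> str:
    # Token bound to both the operation name and the request that invokes it
    msg = f"{operation}:{request_id}".encode()
    return hmac.new(SIGNING_KEY, msg, hashlib.sha256).hexdigest()


def requires_token(operation: str):
    """Decorator: run the wrapped sensitive operation only after token verification."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(request_id: str, token: str, *args, **kwargs):
            good = expected_token(operation, request_id)
            if not hmac.compare_digest(token.encode(), good.encode()):
                raise PermissionError(f"{operation}: token verification failed")
            return func(*args, **kwargs)
        return wrapper
    return decorator


@requires_token("process_upload")
def process_upload(object_key: str) -> str:
    # Internal functionality that a storage upload event would otherwise reach directly
    return f"processed {object_key}"


print(process_upload("req-1", expected_token("process_upload", "req-1"), "report.csv"))
# processed report.csv
try:
    process_upload("req-2", "guessed-token", "payload.bin")  # attacker-triggered event
except PermissionError as err:
    print(err)  # process_upload: token verification failed
```

Binding the token to the operation name as well as the request means a token legitimately issued for one operation cannot be reused to unlock a different one.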

Another important scenario is the CI/CD process. The OWASP document (OWASP Foundation, 2018) mentions a scenario under category A2: “To enable high velocity development, each time a pull request is created the designated manager receives an email message with the relevant information. The manager can then reply to the mail to approve/decline the request. This is done via an SES service that triggers a function with the relevant permissions to approve or close a request. However, if attackers gain knowledge of the email address as well as the required email format, they can sabotage the development or even insert backdoors into the code by sending a malicious email directly to the designated email address.” The dynamic token method, by binding authentication with function execution context and requiring multi-factor verification, can effectively prevent such cross-channel attacks.
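Multi-factor verification of this kind can be sketched as a policy check over several independent factors of the invocation. The factor set below (token validity, trigger source, and call chain) is a simplified assumption inspired by the article's mention of call chain features. Behavior-pattern factors are omitted. The trigger labels and policy values are hypothetical, and `token_valid` stands in for the outcome of a cryptographic check such as an HMAC verification. In the email-approval scenario, a spoofed message arrives through the legitimate channel but lacks the token sent with the original notification, so it fails on that factor alone.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class InvocationContext:
    trigger: str                 # event source, e.g. "ses" or "sdk" (illustrative labels)
    call_chain: Tuple[str, ...]  # upstream functions, outermost first
    token_valid: bool            # outcome of the cryptographic token check


@dataclass(frozen=True)
class Policy:
    allowed_triggers: FrozenSet[str]
    allowed_chains: FrozenSet[Tuple[str, ...]]


def failed_factors(ctx: InvocationContext, policy: Policy) -> List[str]:
    """Return every factor that fails; the request passes only if the list is empty."""
    failures = []
    if not ctx.token_valid:
        failures.append("token")
    if ctx.trigger not in policy.allowed_triggers:
        failures.append("trigger")
    if ctx.call_chain not in policy.allowed_chains:
        failures.append("call_chain")
    return failures


# Approval function: replies must arrive by email via the mail-ingest function
# and carry the token issued with the original notification (hypothetical policy)
approve_policy = Policy(frozenset({"ses"}), frozenset({("mail_ingest",)}))

legit_reply = InvocationContext("ses", ("mail_ingest",), token_valid=True)
spoofed_mail = InvocationContext("ses", ("mail_ingest",), token_valid=False)
direct_call = InvocationContext("sdk", ("unknown_fn",), token_valid=False)

print(failed_factors(legit_reply, approve_policy))   # []
print(failed_factors(spoofed_mail, approve_policy))  # ['token']
print(failed_factors(direct_call, approve_policy))   # ['token', 'trigger', 'call_chain']
```

Returning the full list of failed factors, not just a boolean, also gives the monitoring layer a record of why each request was rejected.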

Compared with traditional security mechanisms such as JSON Web Tokens (JWT) and key management services (KMS), the dynamic token method has distinct advantages in serverless environments. JWTs typically follow a time-based validity model. Their validity periods (minutes to hours) are poorly matched to the execution time of serverless functions, which is often on the order of milliseconds. Tokens therefore remain valid long after function execution completes, which increases the risk of misuse. This issue is implicit in category A2 of the OWASP document (OWASP Foundation, 2018): “On the plus side, using the infrastructure provider’s authentication services eliminates any need to handle passwords and sessions that, in many cases, were the weakest link in traditional architectures.” Dynamic tokens are bound to specific requests and function instances. They therefore achieve precise request-level control and significantly shorten the effective exposure window.
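The difference between a time-window token and a request-bound token can be illustrated by revoking the token when the invocation ends, regardless of how much of its TTL remains. In this sketch the deliberately long TTL stands in for a JWT-style `exp` claim. The class name and the single in-process registry are hypothetical simplifications. A distributed deployment would need a shared revocation store.

```python
import contextlib
import secrets
import time


class RequestScopedTokens:
    """Tokens revoked when their request ends, whatever TTL remains (sketch)."""

    def __init__(self, ttl_seconds: float = 3600.0):
        self.ttl = ttl_seconds  # a JWT-like upper bound, kept long on purpose
        self._live = {}         # token -> (request_id, absolute expiry)

    @contextlib.contextmanager
    def request(self, request_id: str):
        token = secrets.token_urlsafe(24)
        self._live[token] = (request_id, time.monotonic() + self.ttl)
        try:
            yield token
        finally:
            self._live.pop(token, None)  # revoke on completion, including on errors

    def is_valid(self, token: str, request_id: str) -> bool:
        entry = self._live.get(token)
        return (entry is not None and entry[0] == request_id
                and time.monotonic() <= entry[1])


tokens = RequestScopedTokens()
with tokens.request("req-9") as tok:
    print(tokens.is_valid(tok, "req-9"))   # True while the invocation runs
    print(tokens.is_valid(tok, "req-10"))  # False: bound to a different request
print(tokens.is_valid(tok, "req-9"))       # False: revoked, although the TTL has not elapsed
```

Under this design, the effective exposure window shrinks from the configured TTL to the duration of the invocation itself.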

The system also implements an automated response mechanism. When multiple consecutive illegal requests are detected, it performs cleanup operations, removes the relevant keys and sensitive information, and immediately triggers a security alert. This directly responds to concerns in category A10 (Insufficient Logging and Monitoring) of the OWASP Serverless Top 10 (OWASP Foundation, 2018): “Applications which do not implement a proper auditing mechanism and rely solely on their service provider probably have insufficient means of security monitoring and auditing.” The dynamic token mechanism records verification failures and abnormal behaviors. It therefore strengthens the system’s monitoring capabilities in addition to providing defensive capabilities.
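The automated response can be sketched as a small state machine that counts consecutive verification failures. The threshold of three, the dictionary used as the secret store, and the pluggable `alert` callback are hypothetical choices, because the article does not give these parameters. A successful verification resets the counter, so only an unbroken run of illegal requests triggers the purge. Every outcome is appended to an audit trail for monitoring.

```python
import logging


class FailureResponder:
    """Tracks consecutive verification failures; purges secrets and alerts at a threshold."""

    def __init__(self, secret_store: dict, threshold: int = 3, alert=None):
        self.secret_store = secret_store
        self.threshold = threshold  # hypothetical default; tune per deployment
        self.alert = alert or logging.getLogger("token-guard").warning
        self.consecutive_failures = 0
        self.events = []  # audit trail of (request_id, outcome) for monitoring

    def record(self, request_id: str, ok: bool) -> None:
        self.events.append((request_id, "accepted" if ok else "rejected"))
        if ok:
            self.consecutive_failures = 0  # only *consecutive* failures count
            return
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.threshold:
            self.secret_store.clear()  # remove keys and other sensitive values
            self.alert(f"{self.consecutive_failures} consecutive illegal requests; secrets purged")


alerts = []
store = {"DB_PASSWORD": "<sealed value>"}
guard = FailureResponder(store, threshold=3, alert=alerts.append)
for rid, ok in [("r1", False), ("r2", True), ("r3", False), ("r4", False), ("r5", False)]:
    guard.record(rid, ok)
print(store)   # {}
print(alerts)  # ['3 consecutive illegal requests; secrets purged']
```

The first failure (`r1`) does not count toward the purge, because the successful `r2` resets the counter.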