Employing SAE-GRU deep learning for scalable botnet detection in smart city infrastructure

- Academic Editor

- Kübra Seyhan

- Subject Areas

- Artificial Intelligence, Computer Networks and Communications, Data Mining and Machine Learning, Security and Privacy, Internet of Things

- Keywords

- Botnet detection, IoT security, Smart city networks, Deep learning models, Temporal pattern recognition

- Copyright

- © 2025 Tariq and Ahanger

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2025. Employing SAE-GRU deep learning for scalable botnet detection in smart city infrastructure. PeerJ Computer Science 11:e2869 https://doi.org/10.7717/peerj-cs.2869

Abstract

The proliferation of Internet of Things (IoT) devices in smart cities has revolutionized urban infrastructure while escalating the risk of botnet attacks that threaten essential services and public safety. This research addresses the critical need for intrusion detection and mitigation systems by introducing a novel hybrid deep learning model, Stacked Autoencoder–Gated Recurrent Unit (SAE-GRU), specifically designed for IoT networks in smart cities. The study targets the dual challenges of processing high-dimensional data and recognizing temporal patterns to identify and mitigate botnet activities in real time. The methodology integrates stacked autoencoders for dimensionality reduction and gated recurrent units for sequential data analysis to ensure both accuracy and efficiency. An emulated smart city environment with diverse IoT devices and communication protocols provided a realistic testbed for evaluating the model. Results demonstrate significant improvements in detection performance, with an average accuracy of 98.65 percent and consistently high precision and recall. These findings advance the understanding of IoT security by offering a scalable and resource-efficient solution for botnet detection. This investigation also establishes a foundation for future research into adaptive security mechanisms that address emerging threats, and it highlights the practical potential of advanced deep learning techniques for safeguarding next-generation smart city ecosystems.

Introduction

The growth of Internet of Things (IoT) devices in urban environments has enabled significant advances in urban management and improved residents' quality of life. However, it has also introduced substantial security and privacy challenges (Hazman et al., 2024). As smart cities evolve, their dependence on IoT devices calls for robust systems capable of managing and safeguarding these interconnected technologies. Because IoT applications extend across traffic control, public safety, and environmental monitoring, the security of these devices is vital to the effective operation of modern urban systems (Bhardwaj et al., 2024).

The increasing integration of IoT devices has been accompanied by a parallel rise in security threats, particularly from IoT botnets that exploit device vulnerabilities to carry out malicious activities (Krishnan & Shrinath, 2024). Recent high-profile incidents, such as the 2016 Mirai botnet attacks that hijacked poorly secured IoT devices to launch large-scale distributed denial-of-service (DDoS) attacks, highlight the urgent need for stronger security measures. Botnets can take over large numbers of IoT devices, turning them into networks of remotely controlled bots that launch large-scale attacks, disrupt city operations, and compromise sensitive data.

These escalating threats have made the need for effective intrusion detection systems (IDS) apparent (Indra et al., 2024). IDS are critical in detecting and neutralizing threats originating from IoT botnets, thereby preserving the integrity and reliability of smart city infrastructures. Without such robust IDS, smart city initiatives face potential disruptions that can undermine public trust and jeopardize essential services.

Traditional IDS, despite their importance, show significant limitations in IoT environments, including high false positive rates, poor scalability, limited real-time analysis, inflexibility, lack of contextual awareness, protocol incompatibility, and reliance on signature-based detection. The heterogeneous and dynamic nature of interconnected sensing devices, coupled with scalability and resource constraints, presents challenges that conventional IDS solutions cannot adequately address (Li et al., 2023). Techniques that once sufficed for simpler networks are therefore no longer effective against the diverse security requirements of complex networks, such as real-time threat detection, scalability to large networks, low-latency response, adaptability to diverse protocols, and data integrity assurance.

To address these limitations, deep learning (DL) technologies offer a promising approach to IoT security challenges (Zakariyya, Kalutarage & Al-Kadri, 2023). These methods can detect complex, subtle patterns that traditional approaches often miss, which makes them well suited to the intricacies of smart city networks. Deep learning models have demonstrated their capacity to improve detection and to adapt more effectively to evolving threats. Nonetheless, integrating these models into IoT intrusion detection poses its own challenges: the need for real-time processing and the diverse data types found in IoT networks demand highly efficient, flexible models that operate effectively within typical device constraints, such as restricted processing power and limited energy resources.

Despite the progress of DL-based IDS, significant gaps remain (Shahin et al., 2024). Many current systems fail to meet real-time processing needs or to adequately handle the range of node-specific threats. These deficiencies underscore the need for more advanced models that address the unique challenges of IoT security. Motivated by these limitations, this research develops and evaluates a novel deep learning-based IDS designed specifically for IoT environments in smart cities, advancing the state of the art through a new hybrid architectural approach.

Significant contributions

The primary objective of this research is to advance IoT security by introducing a novel deep learning architecture. The proposed Stacked Autoencoder–Gated Recurrent Unit (SAE-GRU) model aims to enhance both the accuracy and the efficiency of intrusion detection in IoT networks. This article details the design, implementation, and deployment of the proposed model in a smart city context and evaluates its performance across several metrics to validate its effectiveness. The main contributions of this research are as follows:

Introduced a hybrid deep learning model, SAE-GRU, that combines Stacked Autoencoders for dimensionality reduction and gated recurrent units for temporal pattern recognition to detect botnet activities in smart city IoT networks.

Implemented model pruning and weight quantization techniques to reduce computational complexity and improve the efficiency of the intrusion detection system without compromising accuracy.

Developed an emulation environment replicating a smart city network using diverse IoT devices and communication protocols, which enabled comprehensive testing of the proposed system.

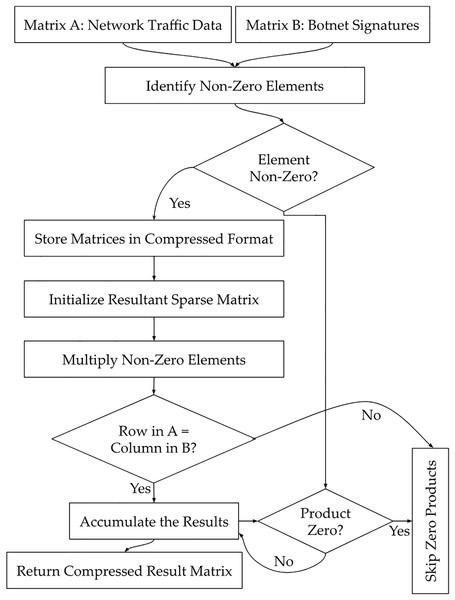

Integrated sparse matrix multiplication and batch processing to optimize inference, minimizing computational overhead and supporting real-time detection.

Applied truncated backpropagation through time (TBPTT) in the GRU to process sequential data efficiently and reduce latency in real-time applications.

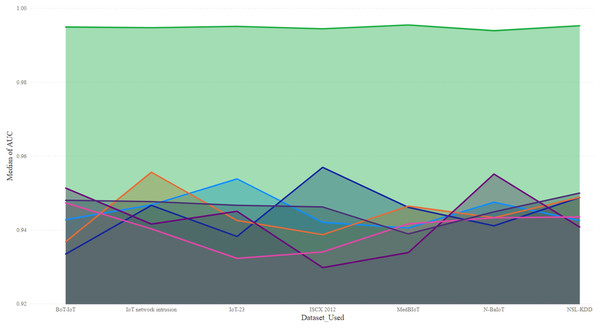

Conducted rigorous performance evaluations using k-fold cross-validation and various datasets to demonstrate superior accuracy, precision, recall, and AUC values compared to traditional models.

Employed feature importance analysis techniques, including SHapley Additive exPlanations (SHAP) values, to identify the key features, such as packet size and traffic volume, that contribute most to detection accuracy.

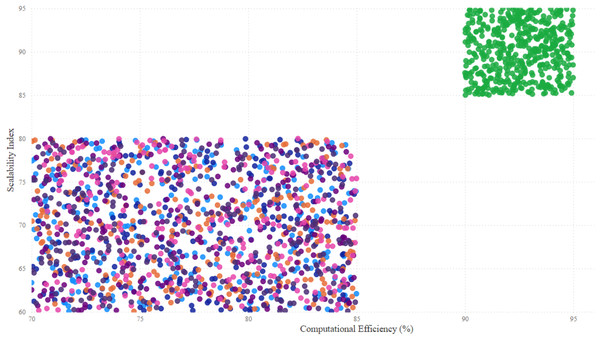

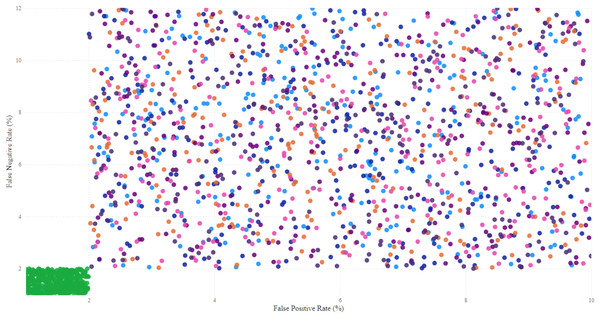

Demonstrated the model’s scalability and robustness in handling high data volumes and diverse network conditions, making it suitable for large-scale smart city deployments.

Highlighted the model’s resilience against zero-day attacks and its ability to adapt to evolving botnet strategies through advanced deep learning techniques.

Provided practical insights for future research by suggesting methods to enhance computational efficiency and extend the system’s capability to handle more complex IoT network environments.
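As an illustration of the first contribution, the sketch below shows one plausible SAE-GRU forward pass in plain Python: the encoder half of a stacked autoencoder compresses each per-time-step traffic feature vector, a GRU cell consumes the resulting sequence of codes, and a logistic output turns the final hidden state into a botnet score. The layer sizes (8 → 6 → 4 features, 3 hidden units), ReLU encoder activations, random initialization, and the helper names (`make_encoder`, `encode`, `gru_step`, `sae_gru_score`) are illustrative assumptions rather than the authors' configuration, and training (autoencoder pretraining and end-to-end fine-tuning) is omitted.

```python
import math
import random

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def add(a, b):
    return [ai + bi for ai, bi in zip(a, b)]

def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-x)) for x in v]

def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def make_encoder(sizes, rng):
    """Encoder half of a stacked autoencoder, e.g. sizes=[8, 6, 4] (assumed)."""
    return [(rand_matrix(o, i, rng), [0.0] * o) for i, o in zip(sizes, sizes[1:])]

def encode(layers, x):
    """Pass x through each dense encoder layer with a ReLU activation."""
    for W, b in layers:
        x = [max(0.0, v) for v in add(matvec(W, x), b)]
    return x

def make_gru(n_in, n_hidden, rng):
    """Input (W), recurrent (U) and bias (b) parameters for gates z, r and candidate h."""
    p = {}
    for gate in ("z", "r", "h"):
        p["W" + gate] = rand_matrix(n_hidden, n_in, rng)
        p["U" + gate] = rand_matrix(n_hidden, n_hidden, rng)
        p["b" + gate] = [0.0] * n_hidden
    return p

def gru_step(p, x, h):
    """One GRU update using h_new = (1 - z) * h + z * h_candidate."""
    z = sigmoid(add(add(matvec(p["Wz"], x), matvec(p["Uz"], h)), p["bz"]))
    r = sigmoid(add(add(matvec(p["Wr"], x), matvec(p["Ur"], h)), p["br"]))
    rh = [ri * hi for ri, hi in zip(r, h)]
    cand = [math.tanh(v) for v in add(add(matvec(p["Wh"], x), matvec(p["Uh"], rh)), p["bh"])]
    return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]

def sae_gru_score(encoder, gru, w_out, b_out, sequence):
    """Encode each time step, run the GRU, and return a botnet probability."""
    h = [0.0] * len(w_out)
    for features in sequence:
        h = gru_step(gru, encode(encoder, features), h)
    logit = sum(w * hi for w, hi in zip(w_out, h)) + b_out
    return 1.0 / (1.0 + math.exp(-logit))

rng = random.Random(0)
encoder = make_encoder([8, 6, 4], rng)          # 8 raw features -> 4-dim code
gru = make_gru(n_in=4, n_hidden=3, rng=rng)
w_out = [rng.uniform(-0.1, 0.1) for _ in range(3)]
window = [[rng.random() for _ in range(8)] for _ in range(5)]  # 5 time steps
print(sae_gru_score(encoder, gru, w_out, 0.0, window))
```

GRU formulations differ in which term the update gate z weights; this sketch uses h_t = (1 − z) ⊙ h_{t−1} + z ⊙ h̃_t, whereas some libraries use the mirrored form. Either choice is a valid GRU as long as it is applied consistently.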
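The pruning and quantization contribution can be illustrated with one common pairing: unstructured magnitude pruning (zeroing the smallest-magnitude weights) followed by symmetric per-tensor 8-bit quantization. The article does not state which pruning criterion or quantization scheme was used, so both choices, the 50% sparsity level, and the function names below are assumptions for illustration.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero the fraction `sparsity` of weights with the smallest magnitudes."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]

weights = [0.8, -0.05, 0.3, -0.9, 0.01, 0.4, -0.2, 0.07]
pruned = prune_by_magnitude(weights, sparsity=0.5)  # keeps the 4 largest |w|
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
print(pruned)
print(q)
```

Each restored weight differs from its pruned value by at most half a quantization step (scale / 2), while storing 8-bit codes instead of 32-bit floats cuts weight memory roughly fourfold.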
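The sparse-multiplication and batching contribution can be illustrated with a compressed sparse row (CSR) layout: after pruning, only the non-zero weights are stored, and a whole batch of input vectors is pushed through the layer in one call that skips the zero entries. The pure-Python helpers `to_csr` and `csr_matmul_batch` are a hypothetical sketch; a production system would rely on an optimized sparse library or hardware kernels instead.

```python
def to_csr(dense):
    """Convert a dense matrix (list of rows) to CSR arrays."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0.0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr

def csr_matmul_batch(csr, batch):
    """Compute W @ x for every x in batch, touching only stored (non-zero) weights."""
    values, col_idx, row_ptr = csr
    out = []
    for x in batch:
        y = []
        for r in range(len(row_ptr) - 1):
            s = 0.0
            for k in range(row_ptr[r], row_ptr[r + 1]):
                s += values[k] * x[col_idx[k]]
            y.append(s)
        out.append(y)
    return out

W = [[0.8, 0.0, 0.0, -0.9],
     [0.0, 0.3, 0.0, 0.0],
     [0.0, 0.0, 0.4, 0.0]]   # a pruned 3x4 weight matrix
batch = [[1.0, 2.0, 3.0, 4.0],
         [0.5, 0.0, -1.0, 2.0]]
csr = to_csr(W)              # stores 4 values instead of 12
print(csr)
print(csr_matmul_batch(csr, batch))
```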
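Truncated backpropagation through time can be shown on a deliberately tiny recurrence. The sketch below substitutes a scalar linear RNN, h_t = w·h_{t−1} + u·x_t with squared-error loss, for the GRU so that gradients can be derived by hand; this substitution, the window length `k`, the learning rate, and all function names are assumptions for illustration. The sequence is split into non-overlapping windows of length k: the hidden state is carried from one window to the next, but its gradient is not, which bounds the cost of each update.

```python
import random

def forward(x_chunk, h0, w, u):
    """Run h_t = w*h_{t-1} + u*x_t from h0; return [h0, h1, ..., hT]."""
    hs = [h0]
    for x in x_chunk:
        hs.append(w * hs[-1] + u * x)
    return hs

def chunk_loss(x_chunk, y_chunk, h0, w, u):
    hs = forward(x_chunk, h0, w, u)
    return 0.5 * sum((h - y) ** 2 for h, y in zip(hs[1:], y_chunk))

def chunk_gradients(x_chunk, y_chunk, h0, w, u):
    """Backpropagate through one window only, treating h0 as a constant."""
    hs = forward(x_chunk, h0, w, u)
    grad_w = grad_u = 0.0
    carry = 0.0  # dL/dh_t flowing back from later steps of this window
    for t in reversed(range(len(x_chunk))):
        dh = (hs[t + 1] - y_chunk[t]) + carry
        grad_w += dh * hs[t]
        grad_u += dh * x_chunk[t]
        carry = dh * w
    # `carry` now equals dL/dh0; truncation discards it instead of
    # propagating it into the previous window.
    return grad_w, grad_u

def tbptt_epoch(xs, ys, w, u, k, lr):
    """One pass of TBPTT with non-overlapping windows of length k."""
    h = 0.0
    for start in range(0, len(xs), k):
        xc, yc = xs[start:start + k], ys[start:start + k]
        grad_w, grad_u = chunk_gradients(xc, yc, h, w, u)
        h = forward(xc, h, w, u)[-1]  # carry the state, not its gradient
        w, u = w - lr * grad_w, u - lr * grad_u
    return w, u

rng = random.Random(1)
xs = [rng.uniform(-1.0, 1.0) for _ in range(200)]
ys, h = [], 0.0
for x in xs:  # targets from a reference recurrence with w=0.5, u=1.0
    h = 0.5 * h + 1.0 * x
    ys.append(h)

w, u = 0.0, 0.0
for _ in range(50):
    w, u = tbptt_epoch(xs, ys, w, u, k=10, lr=0.05)
print(round(w, 3), round(u, 3))
```

Each update costs O(k) regardless of sequence length, at the price of ignoring dependencies longer than one window when computing gradients.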
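The k-fold evaluation listed among the contributions can be sketched as follows: indices are shuffled once, split into k nearly equal folds, and each fold serves as the test set exactly once while the model is refit on the remaining folds. The majority-class "model" and all function names are hypothetical stand-ins for the SAE-GRU training and prediction steps; a stratified split, which preserves class ratios per fold and is a common refinement for imbalanced botnet data, is omitted here.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, test
        start += size

def cross_validate(xs, ys, k, fit, predict):
    """Return mean accuracy over folds and the per-fold accuracies."""
    accs = []
    for train, test in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        correct = sum(predict(model, xs[i]) == ys[i] for i in test)
        accs.append(correct / len(test))
    return sum(accs) / len(accs), accs

def fit_majority(train_x, train_y):   # hypothetical stand-in for training
    return max(set(train_y), key=train_y.count)

def predict_majority(model, x):       # hypothetical stand-in for inference
    return model

xs = list(range(10))
ys = [0] * 7 + [1] * 3                # 0 = benign, 1 = botnet (toy labels)
mean_acc, per_fold = cross_validate(xs, ys, 5, fit_majority, predict_majority)
print(mean_acc, per_fold)
```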
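SHAP values are Shapley values from cooperative game theory applied to a model's prediction. For a handful of features they can be computed exactly by enumerating feature subsets, as in the sketch below, where "absent" features are replaced by a baseline value (one of several conventions). The three-feature scoring function is an invented stand-in for a trained detector, with hypothetical normalized features packet size, traffic volume, and flow duration; practical tools approximate these values because exact enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial

def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x; absent features take baseline values."""
    n = len(x)

    def value(subset):
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for s in combinations(others, size):
                s = set(s)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

# Hypothetical detector score over [packet_size, traffic_volume, flow_duration],
# with an interaction between the first two features.
def score(z):
    return 0.6 * z[0] + 0.3 * z[1] + 0.2 * z[0] * z[1]

x = [1.0, 1.0, 0.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(score, x, baseline)
print([round(p, 3) for p in phi])
```

Here the attributions are approximately (0.7, 0.4, 0): they sum to score(x) − score(baseline), and the 0.2 interaction term is split evenly between the two interacting features.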

The remainder of this article is organized to provide a detailed exploration of each component of the proposed IDS. Following this introduction, the article presents a review of related works to set the context for this study, followed by an explanation of fundamental concepts essential for understanding IoT botnet detection. The subsequent sections describe the proposed methodology, emulation setup, results, and an in-depth analysis of the key features identified during the study. The article concludes with a summary of findings and discusses their implications for future research and practical applications.

Related works

An IoT botnet within a smart city network is a collection of compromised IoT devices embedded in urban infrastructure and orchestrated by malicious actors to conduct synchronized cyber-attacks that jeopardize the confidentiality, integrity, and availability of critical municipal services (Alshahrani, 2023). These botnets exploit intrinsic vulnerabilities such as insecure firmware, inadequate authentication, and unencrypted communication over protocols like Message Queuing Telemetry Transport (MQTT) and Constrained Application Protocol (CoAP), allowing them to infiltrate devices ranging from environmental sensors to satellite communication modules. Advanced botnet attacks employ sophisticated methods, including decentralized peer-to-peer command-and-control (C&C) architectures, polymorphic malware that alters its code to evade detection, and machine learning algorithms that tailor attack vectors dynamically (Kornyo et al., 2023). Attack strategies may combine cross-layer exploits that target the physical, network, and application layers simultaneously, or manipulate satellite communication pathways to spread malicious payloads across large numbers of IoT devices, amplifying the attack’s scale and complexity. Extensive botnet datasets (Kalakoti, Bahsi & Nõmm, 2024), such as MedBIoT and IoT-23, provide researchers with empirical data to analyze network traffic anomalies, model malware behavior, and develop machine learning-based intrusion detection systems, ultimately strengthening defenses against evolving botnet threats.

Hazman et al. (2024) proposed an IDS that combines deep learning with feature engineering to improve threat detection in IoT-based smart cities. The core of the framework is a long short-term memory (LSTM) model enhanced by autoencoders (AEs), genetic algorithms (GAs), and information gain (IG) for dimensionality reduction and input optimization. This integration improved classification efficiency on high-dimensional, imbalanced IoT data. The IDS was validated on datasets such as BoT-IoT, Edge-IIoT, and NSL-KDD, where it demonstrated high accuracy, precision, and recall with notably low false positive and false negative rates. A key strength of this research was the use of Tensor Processing Units (TPUs), which significantly reduced training and classification time in real-time deployment. Furthermore, the feature engineering pipeline enhanced data quality and detection performance, especially against complex threats like Distributed Denial of Service (DDoS) and reconnaissance attacks. The model’s limitations included potential overfitting due to reliance on labeled data and reduced generalizability across varied IoT infrastructures. Despite these limitations, the study marked a meaningful step toward improving IDS performance in smart city environments.
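Information gain, one of the feature-selection criteria in this pipeline, scores a discrete feature X by how much it reduces the entropy of the class label Y: IG(Y; X) = H(Y) − Σ_v P(X = v) H(Y | X = v). A minimal sketch, assuming the features have already been discretized, is shown below; the feature names and toy records are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(feature, labels):
    """IG(Y; X) = H(Y) - sum_v P(X=v) * H(Y | X=v) for a discrete feature X."""
    n = len(labels)
    conditional = 0.0
    for v, count in Counter(feature).items():
        subset = [y for x, y in zip(feature, labels) if x == v]
        conditional += (count / n) * entropy(subset)
    return entropy(labels) - conditional

def rank_features(columns, labels):
    """Return feature names sorted by decreasing information gain, plus the scores."""
    scores = {name: information_gain(col, labels) for name, col in columns.items()}
    return sorted(scores, key=scores.get, reverse=True), scores

labels = ["bot", "bot", "benign", "benign"]
columns = {
    "protocol": ["mqtt", "mqtt", "coap", "coap"],          # hypothetical
    "pkt_size_bin": ["small", "large", "small", "large"],  # hypothetical
}
ranking, scores = rank_features(columns, labels)
print(ranking, scores)
```

In this toy data `protocol` separates the classes perfectly (IG of 1 bit), while `pkt_size_bin` carries no information about the label (IG of 0).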

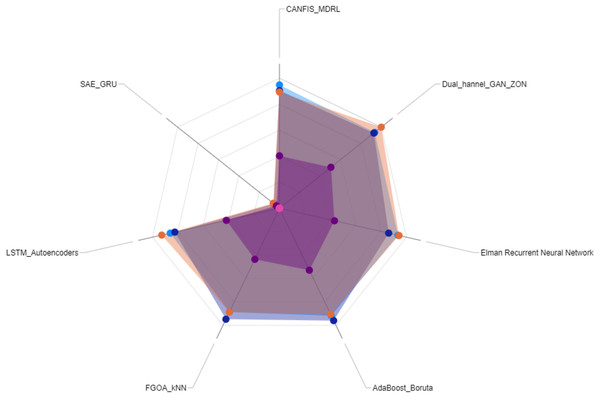

Almasri & Alajlan (2023) introduced a two-phase deep learning model for detecting and isolating cyber-attacks in IoT-based smart city systems. The first phase used a cascaded adaptive neuro-fuzzy inference system (CANFIS) to identify malicious traffic, detect compromised devices, and isolate them. In the second phase, modified deep reinforcement learning (MDRL) blocked the communication channels of infected devices to prevent further threats. The model achieved 98.7% detection accuracy, outperforming LSTM, support vector machine (SVM), and standard deep reinforcement learning (DRL) models in precision, recall, and F1-score. Its significantly reduced detection time supported real-time threat mitigation, and validation on the IoT network intrusion and ISCX 2012 datasets confirmed its generalizability. CANFIS’s sequential feature selection improved detection precision, while MDRL contributed adaptive response strategies. A key limitation was the model’s high computational demand, which made deployment on resource-constrained edge devices challenging. Despite this, its high accuracy and low false positive rate make it a strong candidate for smart city security, with further potential in industrial and healthcare IoT if its efficiency is optimized.

Taher et al. (2023) proposed a machine learning framework to improve botnet detection in Industrial IoT (IIoT) systems. The approach integrates a hybrid feature selection method, FGOA-kNN, which combines the Fisher score, the Grasshopper Optimization algorithm, and k-nearest neighbors to identify relevant features and eliminate redundancy, improving both accuracy and computational efficiency. The model also incorporates an optimized neural network, IHHO-NN, fine-tuned with an enhanced Harris Hawks Optimization algorithm to classify multiclass botnet attacks with high precision. Validated on the N-BaIoT dataset, the model outperformed traditional classifiers in accuracy, recall, and precision for both known and novel attacks. Its combination of unsupervised clustering with supervised learning strengthened robustness on high-dimensional, complex IIoT data, and convergence improvements via chaotic maps and Random Opposition-Based Learning allowed real-time use in constrained settings. Our review identified a key limitation: the dependence on the N-BaIoT dataset raises concerns about generalizability across varied IoT systems and evolving threats.
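The Fisher-score stage of FGOA-kNN ranks each feature j by the ratio of between-class to within-class spread, F_j = Σ_c n_c (μ_{c,j} − μ_j)² / Σ_c n_c σ²_{c,j}, where n_c is the number of samples in class c, μ_{c,j} and σ²_{c,j} are the mean and variance of feature j within class c, and μ_j is its overall mean. The sketch below covers only this filter step; the Grasshopper Optimization and k-NN wrapper stages are not shown, and the toy data are invented.

```python
from collections import defaultdict

def fisher_scores(X, y):
    """Fisher score per column of X (list of rows) given class labels y."""
    n_features = len(X[0])
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    scores = []
    for j in range(n_features):
        overall = sum(row[j] for row in X) / len(X)
        between = within = 0.0
        for rows in by_class.values():
            vals = [r[j] for r in rows]
            mu = sum(vals) / len(vals)
            var = sum((v - mu) ** 2 for v in vals) / len(vals)
            between += len(vals) * (mu - overall) ** 2
            within += len(vals) * var
        scores.append(between / within if within > 0 else float("inf"))
    return scores

X = [[1.0, 5.0], [1.2, 3.0], [3.0, 4.0], [3.2, 6.0]]
y = [0, 0, 1, 1]
print(fisher_scores(X, y))  # feature 0 separates the classes far better
```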

Manickam et al. (2023) presented a novel integration of Billiard Based Optimization (BBO) and deep learning for anomaly detection in IoT-enabled smart cities, with an emphasis on sustainable applications. The model employed Binary Pigeon Optimization (BPEO) for feature selection, an Elman recurrent neural network (ERNN) for anomaly detection, and BBO for hyperparameter tuning to achieve high accuracy in anomaly classification. Its main contribution lies in handling the complexity of IoT data and identifying patterns in resource-constrained environments, with the aim of improving both detection accuracy and computational efficiency. The model achieved an accuracy exceeding 99% on benchmark datasets, highlighting its ability to capture temporal and spatial patterns while reducing false positives. Nonetheless, its reliance on centralized data processing is a limitation, as it may cause latency and increase vulnerability to network congestion in large-scale IoT systems. Furthermore, the model’s dependence on specific datasets raises concerns about its ability to generalize across diverse and evolving IoT environments.

Hazman et al. (2023) demonstrated significant advancements in IDS by adopting an ensemble learning approach that combined AdaBoost with Boruta feature selection. They implemented dimensionality reduction techniques such as PCA to address the challenges of IoT-based smart environments. This approach aimed to improve detection performance through optimized feature selection and reduced data redundancy. The model achieved high accuracy and low false alarm rates on benchmark datasets like NSL-KDD and BoT-IoT. By removing outliers and identifying the most relevant features, the model enhanced computational efficiency and reduced both training and prediction durations. The integration of CatBoost improved the model’s ability to detect anomalies in highly imbalanced datasets which made it suitable for real-time applications in IoT networks. Despite its strengths, the research faced challenges in generalizing results across diverse IoT environments due to the limited representation of threats in the datasets used.
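PCA, used here for dimensionality reduction, projects centered data onto the directions of greatest variance, that is, the leading eigenvectors of the sample covariance matrix. The sketch below finds only the first principal component by power iteration, assuming a dominant eigenvalue, non-constant data, and a starting vector that is not orthogonal to that eigenvector; library implementations typically use a singular value decomposition instead, and the toy data are invented.

```python
import math

def first_principal_component(X, iters=200):
    """Leading eigenvector of the sample covariance matrix via power iteration."""
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in X]
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    v = [1.0] * d  # starting vector (assumed not orthogonal to the top eigenvector)
    for _ in range(iters):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v, means

def project(X, component, means):
    """1-D coordinates of each centered row along the component."""
    return [sum((row[j] - means[j]) * component[j] for j in range(len(row))) for row in X]

X = [[t, 2.0 * t] for t in range(-3, 4)]  # points on the line y = 2x
v, means = first_principal_component(X)
print(v)                     # direction proportional to (1, 2)
print(project(X, v, means))
```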

Ahmed, Beyioku & Yousefi (2024) examined the integration of honeypot data with machine learning to enhance cyber-attack detection in smart city IoT environments. Using high-interaction honeypots deployed over extended periods, the study captured authentic real-world attack data to improve relevance and reliability. Several algorithms were evaluated against IoT-specific attacks: naive Bayes, decision tree, k-nearest neighbor (KNN), sequential neural network (SNN), and LSTM. A key contribution was a comprehensive assessment of feature selection techniques, particularly Information Gain and One Rule (OneR), which improved model efficiency. Decision tree and LSTM models achieved high accuracy, with LSTM excelling at identifying the temporal patterns critical for intrusion detection. Limitations included inconsistent performance from naive Bayes and missing data that required preprocessing. Although feature selection enhanced performance, our assessment found that maintaining efficiency on larger datasets remained difficult. The study also emphasized the limited availability of diverse public datasets and the complexity of scaling detection systems across heterogeneous IoT architectures, underscoring the need for further work on honeypot-driven machine learning approaches for IoT security.
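OneR ("One Rule") builds, for each discrete feature, a rule that maps every feature value to its majority class, and keeps the feature whose rule makes the fewest errors on the training data; the per-feature error counts can also serve as a feature ranking. A minimal sketch with hypothetical, pre-discretized features follows; the function name and data are illustrative only.

```python
from collections import Counter, defaultdict

def one_r(columns, labels):
    """Return (feature, rule, errors) for the single best one-feature rule."""
    best = None
    for name, values in columns.items():
        counts = defaultdict(Counter)
        for v, y in zip(values, labels):
            counts[v][y] += 1
        rule = {v: c.most_common(1)[0][0] for v, c in counts.items()}
        errors = sum(rule[v] != y for v, y in zip(values, labels))
        if best is None or errors < best[2]:
            best = (name, rule, errors)
    return best

labels = ["bot", "bot", "benign", "benign", "bot"]
columns = {
    "protocol": ["mqtt", "mqtt", "coap", "coap", "mqtt"],  # hypothetical
    "port_bin": ["low", "high", "low", "high", "low"],     # hypothetical
}
name, rule, errors = one_r(columns, labels)
print(name, rule, errors)
```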

Shareef et al. (2024) introduced an IDS that combined the Zebra Optimization algorithm (ZOA) for feature selection with a dual-channel graph attention network (DGAN) for classification. This system addressed the structural and semantic challenges of IoT communications by incorporating node and semantic attention networks to identify intricate patterns in device interactions. Hyperparameter optimization using the Sooty Tern Optimization algorithm (STOA) further enhanced detection accuracy, which reached 99.87 percent and surpassed traditional models. The system processed large, noisy datasets while improving feature extraction efficiency, and it effectively captured temporal and relational patterns in real-time traffic. The authors emphasized high classification precision and adaptability to evolving botnet behaviors, achieved through deep learning architectures tailored to IoT traffic. Nonetheless, the computationally intensive architecture posed scalability issues in resource-constrained IoT environments, and the study therefore stressed the need for future research on balancing detection accuracy and system efficiency.

Table 1 presents a comparative analysis of recent intrusion detection models designed for smart city infrastructure, focusing on scalability, real-time processing, and efficiency in handling IoT threats. Each model is evaluated on its core techniques, optimization strategies, dataset sources, and performance metrics to highlight advancements and practical applicability in securing IoT-based environments.

| Model (Ref) | Properties (Scalability, efficiency, Real-time, Adaptability) | Techniques used (Algorithms, Methods) | Basic primitives (Datasets, classification approach, Evaluation metrics) | Scalability (Large-scale readiness) | Real-time processing | Optimization methods (Pruning, FS, etc.) | Dataset source | Performance metrics (Accuracy, precision, recall, F1, AUC) |
|---|---|---|---|---|---|---|---|---|
| Hazman et al. (2024) | High accuracy and low false alarms; handles high-dimensional, imbalanced IoT data; optimized for efficiency (TPU acceleration)–suitable for large-scale, real-time deployment | Deep LSTM network combined with Autoencoder-based feature extraction and Genetic Algorithm + Information Gain for feature selection. | Evaluated on multiple IoT intrusion datasets (BoT-IoT, Edge-IIoT, NSL-KDD) in both binary and multi-class modes; used accuracy, precision, recall as metrics (focused on minimizing FPR/FNR). | Yes–Designed for large-scale IoT networks; uses TPU hardware to handle high data volumes (though model generalization to all IoT environments is a noted challenge). | Yes–Capable of real-time detection due to greatly reduced training/inference time (TPU acceleration). | Dimensionality reduction via Autoencoders; feature selection with GA and IG to optimize input features; TPU-based acceleration for faster processing (no explicit pruning/quantization reported). | BoT-IoT, Edge-IIoT, NSL-KDD (public IoT/ICS intrusion datasets) | ~99% accuracy (high precision & recall) with negligible FPR/FNR on all tested datasets. |
| Almasri & Alajlan (2023) | Two-phase detection & mitigation system; offers real-time threat response with high accuracy and low false positives; adaptable (fuzzy logic adjusts to traffic changes); some computational overhead (heavy model) noted for resource-limited devices | Cascaded Adaptive Neuro-Fuzzy Inference System (CANFIS) for attack detection, followed by modified Deep Reinforcement Learning (MDRL) to isolate/block compromised devices. Also employs sequential feature selection during CANFIS phase to refine detection rules | Evaluated on two datasets: an IoT network intrusion dataset and the ISCX-2012 dataset; supervised classification of normal vs. attacks (comparisons made to LSTM, SVM, standard DRL); metrics include accuracy, precision, recall, F1-score | Moderate–Demonstrated generalizability by testing on multiple datasets, but the model’s high memory/CPU requirements could hinder deployment at IoT edge scale (cloud resources likely needed). | Yes–Achieves significantly reduced detection time for immediate threat response (meets real-time detection needs in testing). | Feature selection (sequential) integrated to optimize CANFIS detection accuracy; combination of neuro-fuzzy and deep RL provides adaptive learning (no special pruning/quantization noted beyond architectural optimization). | Custom IoT intrusion traffic dataset + ISCX 2012 (benchmark IDS dataset) | Accuracy = 98.7%, outperforming baseline LSTM, SVM, and single-phase DRL models; high precision, recall, F1 (>0.95 reported) achieving low false-positive rate. |
| Taher et al. (2023) | Focused on IIoT botnet detection; robust to high-dimensional data and diverse attacks (detects known and unknown botnet activities); improves efficiency by removing redundant features; suitable for real-time use in resource-constrained environments (fast convergence). | Hybrid feature selection FGOA-kNN (Fisher Score + Grasshopper Optimization + k-NN) to identify relevant features; optimized neural network (IHHO-NN) fine-tuned via improved Harris Hawks Optimization; uses chaotic maps and opposition-based learning to avoid local minima and speed up training. | Evaluated on N-BaIoT dataset (IoT device botnet traffic); multiclass classification of various botnet attack types vs. normal behavior; evaluated by accuracy, precision, recall–significantly higher than conventional classifiers (SVM, etc.) on this dataset. | Good–Effective in complex, high-volume IIoT data (demonstrated on large feature space). Nevertheless, reliance on a single dataset means broader scalability/generalization to all IoT scenarios is unproven; the optimization algorithms add overhead that could tax extremely constrained devices. | Yes–Emphasizes real-time applicability; fast convergence and reduced feature set make it viable for timely detection in IIoT networks. | Bio-inspired optimization throughout: Grasshopper Optimization for feature selection, improved HHO for network parameter tuning; chaotic initialization and Random Opposition-Based Learning enhance search efficiency. (No specific model pruning mentioned, but feature elimination serves to streamline computation.) | N-BaIoT (Network-Based IoT) botnet dataset. | Outperformed baseline methods on N-BaIoT–higher accuracy, precision, recall (exact values not given, but substantially improved detection rates); low false alarm rate due to optimized feature set and tuning. |
| Manickam et al. (2023) | Anomaly detection model for IoT smart cities; achieves very high accuracy (~99%); captures temporal patterns via RNN; reduces false positives. Yet, it relies on centralized data processing, which may introduce latency and limit efficiency at scale; adaptability across highly diverse IoT setups not fully verified. | Binary Pigeon Evolution Optimization (BPEO) for feature selection; Elman Recurrent Neural Network (ERNN) for anomaly detection (sequence learning); Billiard Based Optimization (BBO) for hyperparameter tuning of the deep model. | Evaluated on benchmark IoT intrusion dataset(s) (not explicitly named in summary); supervised classification distinguishing normal vs malicious events; main evaluation metric is accuracy (along with false positive rate reduction). | Limited–While the approach is effective on the test dataset, the need to funnel data to a central node can become a bottleneck in large-scale deployments (network congestion, latency). Model generalization to other IoT datasets/environments remains a concern due to possible overfitting to specific data. | Not explicitly–The centralized architecture could impede real-time performance in expansive networks (due to processing delays). In smaller-scale scenarios or with sufficient infrastructure, it can operate with low latency, but real-time capability isn’t a primary proven feature. | BBO meta-heuristic optimizes the RNN’s hyperparameters; BPEO selects a minimal relevant feature subset to reduce complexity. These optimizations jointly maximize detection accuracy and efficiency. (No mention of model pruning or quantization.) | Not specified (likely standard IDS datasets such as NSL-KDD, KDD99, or IoT-23). | Accuracy >99% on test data; low false-positive rate achieved (high precision) due to effective pattern learning and feature selection. |
| Hazman et al. (2023) | Ensemble-based IDS for smart environments; high accuracy and low false alarm rates on tests; improved computational efficiency via aggressive feature reduction and outlier removal (faster training/inference); suitable for real-time IoT deployment. Generalization may be limited by the narrow range of attacks in training data. | Ensemble learning with AdaBoost (and CatBoost) classifiers, combined with Boruta feature selection and PCA for dimensionality reduction. Data pre-processing included removing outliers to improve model focus. This pipeline optimizes the feature set and leverages boosting to handle class imbalance. | Evaluated on NSL-KDD and BoT-IoT datasets; performed binary/multi-class classification of normal vs various attacks; measured by accuracy and false alarm rate (and likely precision/recall). The inclusion of CatBoost aimed at maintaining performance on imbalanced data. | Moderate–By reducing data dimensionality and focusing on key features, the model is more scalable to larger datasets than unoptimized approaches. Nonetheless, its true scalability across different smart city setups is uncertain, as the training data covers limited threat types (potentially requiring retraining for new attack patterns). | Yes–The optimizations (feature reduction, faster ensemble learning) make it feasible for real-time intrusion detection in IoT networks, with quick model responses and manageable computational load. | Boruta algorithm and PCA prune irrelevant and redundant features, reducing complexity; outlier removal streamlines the training data. Uses ensemble boosting (AdaBoost + CatBoost) to optimize learning especially on imbalanced classes. No specific mention of network pruning/quantization, focusing instead on input feature optimization. | NSL-KDD (classic IDS dataset) and BoT-IoT (IoT botnet traffic). | High accuracy (near 99%) on both datasets with very low false positive rates; improved precision/recall in detecting attacks due to feature optimization and ensemble methods (exact metrics not given, but false alarms notably reduced). |
| Ahmed, Beyioku & Yousefi (2024) | Leverages real-world IoT attack data via honeypots, improving authenticity of detection; evaluated multiple ML algorithms for intrusion detection; feature selection enhanced efficiency. Highlights challenges in consistency (some classifiers like NB underperformed) and data quality (missing values) for IoT security. Primarily an offline analysis (not yet optimized for live deployment). | Deploys high-interaction honeypots over an extended period to gather genuine IoT attack traffic. Applies various machine learning classifiers – Naïve Bayes, Decision Tree, k-NN, Sequential NN, LSTM – to detect attacks. Utilizes Information Gain and OneR feature selection to identify important features and improve model accuracy/efficiency. | Dataset is a custom IoT attack dataset derived from the honeypot logs (captures real attack attempts on IoT devices). Framed as supervised classification of malicious vs normal events; evaluated using accuracy (and implicitly precision/recall via analysis of false positives/negatives). Decision Tree and LSTM showed consistently high accuracy in identifying IoT-specific attacks. | Limited–While using real attack data increases relevance, the approach is tested on a specific collected dataset. Scaling this method to a city-wide IoT deployment (with continuous data streams from many honeypots or live traffic) is non-trivial, as noted by the need for more diverse attack data and improved handling of large data volumes. | No (offline)–The study analyzes stored attack data rather than performing live monitoring. Real-time detection in practice would require addressing the processing of streaming data and model deployment on IoT infrastructure, which the authors note as a challenge (due to data volume and varying performance of algorithms). | Feature selection (IG, OneR) is used to reduce feature space and improve classification speed/accuracy. Otherwise, standard ML optimizations per algorithm were applied; no custom pruning or model compression beyond data preprocessing. | IoT honeypot-derived dataset (authentic attack traffic collected from deployed traps). | High accuracy achieved by Decision Tree and LSTM models (best performers) in detecting captured attacks. NB was less effective. Precise metrics not given in summary, but results indicate strong detection capability for DT/LSTM (likely >90% accuracy) and improved efficiency after feature selection. |
| Shareef et al. (2024) | Graph neural network-based botnet detector for IoT; extremely high accuracy (99.87%) and precision; captures complex structural and temporal patterns in network traffic via dual attention mechanisms; adaptable to evolving botnet behaviors. Computationally intensive architecture (Graph Attention Network + optimization algorithms) may impede deployment on constrained IoT devices | Zebra Optimization Algorithm (ZOA) for feature selection (identifies salient network traffic features); Dual-channel Graph Attention Network (DGAN) for classification, with separate node-level and semantic attention sub-networks to learn intricate communication patterns; Sooty Tern Optimization (STOA) for hyperparameter tuning of the DGAN. | Evaluated on a large-scale IoT botnet traffic dataset with substantial noise and diversity (dataset name not given, likely a recent IoT/CPS dataset). Performs binary classification (botnet vs normal traffic) with graph-based approach. Key metrics: accuracy = 99.87%, with very high precision and strong recall (surpassing traditional IDS models). | Mixed–Capable of handling large, noisy data inputs (demonstrated on big dataset), indicating good data scalability. Though, the method’s heavy computational demands raise scalability issues for real-world deployment: it may not scale down well to many low-power devices without further optimization. Likely suited for centralized analysis or powerful edge servers in a smart city. | Partial–The system is designed to analyze streaming IoT traffic and did capture real-time patterns effectively in testing. Yet, due to its complexity, achieving low-latency inference on typical IoT hardware is challenging; real-time performance is attainable only if sufficient computational resources are available. | Feature selection via ZOA reduces input complexity; STOA tunes hyperparameters for optimal model performance. The dual-attention GNN architecture itself is an optimization for capturing both structural and temporal features of traffic. No mention of model pruning/quantization–the focus is on algorithmic optimization (bio-inspired search for features and params). | Large IoT network traffic dataset with botnet attacks (name not specified). | Accuracy = 99.87%; very high precision (near-perfect classification performance) reported, with strong recall and AUC presumably close to 1.0 given the accuracy. Significantly outperforms conventional IDS benchmarks. |

To further strengthen the argument, we have also compiled Table 2, which provides a detailed comparison of key IoT datasets, outlining their characteristics and differences to help identify features suited to the proposed methodology.

| Dataset | Year | Attack types | Features | Devices | Real/Sim | Labelled | Related works reference |
|---|---|---|---|---|---|---|---|
| MedBIoT (Hao et al., 2024) | 2020 | IoT botnets (Mirai, Bashlite, Torii–causing DDoS, C&C traffic) | 115 (Network flow stats) | Mixed IoT (83 devices) | Real | Yes | Ahmed, Beyioku & Yousefi (2024) |
| IoT-23 (Sharma & Babbar, 2024) | 2020 | Various IoT malware (20 captures incl. Mirai variants, C&C traffic, DDoS) | 20+ (Zeek flow fields) | Various IoT (Raspberry Pi + real IoT devices) | Real | Yes | Ahmed, Beyioku & Yousefi (2024) |
| BoT-IoT (Alosaimi & Almutairi, 2023) | 2018 | DDoS, DoS, scanning (Reconnaissance), keylogging & data exfiltration (Theft) | 47 (Extracted flow features) | Smart home | Sim | Yes | Hazman et al. (2024, 2023) |
| Edge-IIoT (Nuaimi et al., 2023) | 2022 | 14 IoT/IIoT attacks in five categories (DoS/DDoS, information gathering, injection, MITM, malware) | 84 (Selected features from 1,176) | Industrial | Sim | Yes | Hazman et al. (2024) |
| NSL-KDD (Zakariah et al., 2023) | 2009 | Classic attacks (DoS, R2L, U2R, Probe) | 41 (Connection features) | General network | Sim | Yes | Hazman et al. (2024, 2023) |
| IoT network intrusion (Smart Home) (Kaur et al., 2023) | 2019 | Various (e.g., host scan, botnet malware, MITM, DDoS) | 115 (46 (Traffic features)) | Smart home | Real | Yes | Almasri & Alajlan (2023) |
| ISCX 2012 (Shiravi et al., 2012) | 2012 | Multi-stage attacks (SSH brute force, HTTP DoS/DDoS, infiltration) | 20 (flow metrics) | General network | Real | Yes | Almasri & Alajlan (2023) |
| N-BaIoT (Naveed, 2020) | 2018 | IoT botnet malware (Mirai, BASHLITE; multiple attack vectors) | 115 | Smart home | Real | Yes | Taher et al. (2023) |
| CIC-IoT 2023 (Canadian Institute for Cybersecurity, 2023) | 2023 | 33 large-scale IoT attacks in seven classes (DDoS, DoS, Recon, Web, Brute Force, Spoofing, Mirai) | ~80 (Network-flow features, CSV) | Diverse IoT (105 devices) | Real | Yes | – |
| ACI-IoT 2023 (Army Cyber Institute, 2023) | 2023 | Scanning (Reconnaissance), flooding (DoS), password cracking (Brute Force), ARP spoofing | NetFlow records (e.g., 15 fields) | Smart home IoT (lab setup) | Real | Yes | – |

Preliminaries

Vulnerability context for smart city networks

Smart city infrastructures are attractive targets for cyberattacks because they concentrate critical services and interconnected devices, which makes it crucial to understand the potential attack vectors, targets, and impacts. Prominent attack vectors include DDoS, which overwhelms systems with excessive traffic; Command and Control (C&C) exploits, which manipulate devices for malicious activities; and Advanced Persistent Threats (APTs), which involve prolonged infiltration to compromise network security and disrupt essential services. These attacks often target critical infrastructure such as power grids and emergency services, aiming to cause significant disruptions and compromise public safety. Botnets, a key component in many attacks, are networks of compromised devices controlled by attackers. They can be structured in centralized, decentralized, or hybrid architectures, each of which influences their resilience and control mechanisms. Malware employed in these attacks includes polymorphic malware that changes its code to evade detection, while techniques such as cross-layer exploits target multiple layers of a system. Infection vectors, such as vulnerability exploitation, supply chain compromise, and social engineering, are used to infiltrate devices, and attackers also use defense evasion techniques to conceal their malicious activities.

Challenges in IoT botnet detection

Our investigation revealed several limitations and challenges. One of the major challenges in IoT botnet detection is the extreme heterogeneity of IoT devices, which introduces a wide variety of communication protocols, processing capabilities, and security vulnerabilities across networks. Each IoT device can behave differently depending on its configuration, creating a highly diverse attack surface that complicates the modeling of normal and malicious behavior. Another significant challenge arises from the resource constraints inherent in IoT devices, such as limited computational power, memory, and energy. These limitations obstruct the deployment of advanced security measures such as encryption and real-time anomaly detection. As a result, IDS must depend on lightweight solutions that balance detection accuracy against computational efficiency.

IoT devices also generate a substantial volume of network traffic due to their continuous operation, producing massive data streams that must be processed and analyzed in real time. This high data throughput places a significant computational load on detection systems, which must operate efficiently to handle the traffic without introducing latency. A further challenge lies in obtaining the labeled datasets needed to train machine learning models. IoT botnets often exhibit subtle behavioral changes that are difficult to distinguish from legitimate traffic, so the absence of comprehensive labeled datasets limits the effectiveness of supervised learning methods in detecting emerging threats. Modern IoT botnets add to this complexity by frequently adapting their attack strategies to evade detection. Techniques such as encryption, polymorphism, and traffic obfuscation render IDS ineffective when they rely solely on signature-based or traditional anomaly detection methods.

Traditional IDS limitations

Traditional IDS face several limitations (Hajiheidari et al., 2019; Khraisat & Alazab, 2021; Najafli, Haghighat & Karasfi, 2024) when applied to modern IoT environments. For instance:

They lack the capability to manage the evolving and diverse nature of IoT devices and protocols, which results in significant gaps in detecting targeted and complex attacks.

High false positive rates are a common issue due to rigid rule-based detection methods that fail to adapt to varying traffic patterns.

These systems often exhibit poor scalability in large-scale IoT environments. This leads to performance degradation and delays in threat detection.

Limited real-time analysis capabilities arise because most traditional IDS rely on batch processing methods, which are unsuited to the continuous flow of IoT data.

Resource-constrained IoT devices are incompatible with traditional IDS due to the high computational demands of these systems.

Signature-based detection methods in traditional IDS cannot identify novel or previously unknown threats effectively.

Contextual awareness is insufficient in traditional IDS. They fail to recognize the holistic behavior of IoT devices within their operational settings, resulting in incomplete assessments of threats.

Integration challenges occur with IoT-specific communication protocols and encryption mechanisms, leading to undetected vulnerabilities in traffic analysis.

Features of ideal DL architectures for intrusion detection

Our investigation identified the following advantages of well-designed DL architectures for intrusion detection (Najafli, Haghighat & Karasfi, 2024; Muneer et al., 2024), including but not limited to:

Ability to extract features directly from raw network traffic without requiring manual feature engineering, which makes these architectures suitable for high-dimensional IoT datasets.

The capability to identify and interpret intricate patterns within data, enabling detection of sophisticated and evolving attack strategies that traditional methods often miss.

High adaptability to diverse IoT devices and communication protocols. This ensures robustness across heterogeneous network environments.

Scalability that allows effective handling of large data volumes. This makes these methods ideal for real-time intrusion detection in smart city networks.

Potential to significantly reduce false positives by identifying subtle traffic deviations. This improves alerting precision and operational effectiveness.

Resilience against adversarial tactics through advanced modeling of non-linear relationships and the integration of diverse data sources.

Ability to perform well even with limited labeled data by employing semi-supervised or unsupervised approaches. This addresses challenges in scenarios where labeled datasets are scarce.

Applied preprocessing and optimization techniques

To ensure the integrity, efficiency, and effectiveness of the proposed intrusion detection framework, a combination of data preprocessing and model optimization techniques was employed throughout the design and training phases. Each technique was selected to address specific challenges associated with high dimensionality, data sparsity, computational efficiency, and model generalization in the considered network environment. The following definitions briefly explain the methods used in this study to support feature representation, model training, and real-time inference:

→ Min-max normalization: A scaling technique that transforms numerical features into a fixed range, typically [0, 1], to ensure uniform contribution to the model’s learning process.

→ Mutual information and variance thresholding techniques: Feature selection methods used to retain informative attributes and eliminate low-variance or weakly relevant features, thereby reducing redundancy in high-dimensional IoT traffic data.

→ Imputation techniques: Methods applied to handle missing data by substituting null values with statistical estimates, such as mean for numerical and mode for categorical features, to maintain dataset consistency.

→ Feature scale normalization: A transformation approach that adjusts the magnitude of numerical features to a common scale, preventing dominant attributes from skewing model training.

→ One-hot encoding: A categorical encoding scheme that converts non-numeric variables into binary vectors which allow the models to process protocol types and device states without assuming ordinal relationships.

→ Weight decay: A regularization technique that penalizes large weight values during training to prevent overfitting and promote model generalization in noisy IoT environments.

→ L2 regularization: A specific form of weight decay that adds the squared magnitude of weights to the loss function to discourage complexity and improve robustness.

→ Dropout and early stopping: Regularization strategies in which dropout randomly disables neurons during training and early stopping halts training once validation performance ceases to improve; both reduce overfitting.

→ Pruning optimization: A technique that removes non-contributing or weakly active neural connections to reduce model size and improve inference speed without compromising detection accuracy.

→ Weight quantization: The process of converting model weights from high-precision to lower-precision formats (e.g., 32-bit to 8-bit) to decrease memory footprint and accelerate computations.

→ Sparse matrix multiplication: An inference-time optimization that exploits the sparsity of zero-valued weights to skip unnecessary computations, enhancing real-time performance on edge devices.
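The pruning and weight-quantization entries above can be illustrated with a minimal NumPy sketch. It assumes simple magnitude-based pruning (zeroing the smallest-magnitude weights) and symmetric per-tensor int8 quantization; the function names, the random layer, and the 50% sparsity level are illustrative assumptions, since the study does not report its pruning ratio or quantization scheme.

```python
import numpy as np

def magnitude_prune(weights, sparsity):
    """Zero out (at least) the given fraction of weights with the smallest absolute values."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)  # number of weights to remove
    if k == 0:
        return weights.copy()
    # k-th smallest magnitude; ties at the threshold can remove slightly more than k weights
    threshold = np.partition(np.abs(weights).ravel(), k - 1)[k - 1]
    return np.where(np.abs(weights) <= threshold, 0.0, weights).astype(weights.dtype)

def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each weight is approximated by scale * q."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to float32 values for inspection or fallback inference."""
    return q.astype(np.float32) * np.float32(scale)

rng = np.random.default_rng(0)
W = rng.normal(size=(64, 32)).astype(np.float32)  # hypothetical layer weights
W_pruned = magnitude_prune(W, sparsity=0.5)
q, scale = quantize_int8(W_pruned)
W_restored = dequantize(q, scale)
print(f"zero fraction: {np.mean(W_pruned == 0):.2f}")
print(f"int8 bytes: {q.nbytes}, float32 bytes: {W_pruned.nbytes}")
print(f"max abs error: {np.max(np.abs(W_restored - W_pruned)):.4f}")
```

Pruned weights stay exactly zero after quantization, so the sparse-multiplication optimization can still skip them, and the int8 codes occupy a quarter of the float32 storage.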

Proposed methodology

It is evident that the presence of botnets in IoT-equipped smart city networks can lead to disastrous consequences, undermining the functionality and security of critical infrastructure systems. These networks, designed to manage everything from traffic lights and public transportation to utilities and public safety systems, are particularly vulnerable to botnet attacks, which can harness compromised IoT devices to launch coordinated disruptions. Such attacks can cripple urban operations, cause widespread service outages, and expose sensitive public and personal data.

Data collection and preprocessing

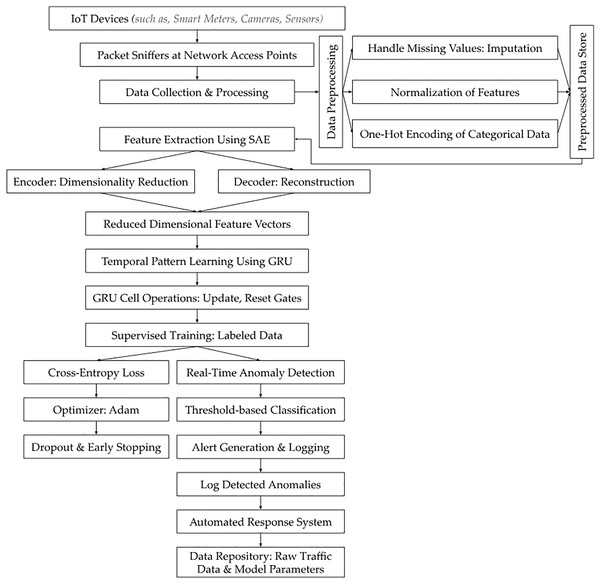

Raw network traffic data were collected from a diverse set of IoT devices operating within the smart city infrastructure, including but not limited to smart meters, environmental sensors, traffic monitoring systems, and surveillance cameras. These devices were selected for their heterogeneous communication protocols (e.g., Zigbee, Wi-Fi, LoRaWAN, and RTSP (Real-Time Streaming Protocol)) and varying data generation rates, which represent the complexity of an interconnected smart city ecosystem. The collection process spanned 4 weeks to ensure temporal diversity and capture variations in network behavior. Data capture was conducted using network monitoring tools (Wireshark and tcpdump) capable of passive traffic analysis, which allowed continuous recording without interfering with device operations. Packet sniffers (as illustrated in Fig. 1) were deployed at multiple network access points across the emulated smart city setup to capture all inbound and outbound traffic, ensuring that the dataset reflected both normal and anomalous behavior from different sectors of the emulated city. This approach allowed monitoring of device-level communications, network congestion patterns, and potential cyber threats such as DDoS attempts while maintaining data integrity. To ensure comprehensive representation, traffic from high-traffic nodes such as public Wi-Fi hotspots was included alongside traffic from low-traffic IoT devices such as smart parking meters, capturing a broad spectrum of traffic patterns and device interactions. The collection framework incorporated various IoT protocols integral to smart city infrastructure, including MQTT, CoAP, and HTTP. Random sampling was applied across different time slots and network segments to avoid overrepresentation of any specific device type or traffic pattern.

In this context, we observed that data bias may arise from unequal representation of certain patterns or features within the dataset, potentially leading to skewed or inaccurate model outcomes. For example, overrepresentation of traffic from high-traffic IoT devices such as public Wi-Fi hotspots could hinder the detection of attacks targeting less common devices. Similarly, we observed an imbalance in protocol representation (e.g., MQTT traffic outweighing HTTP traffic), which can reduce the model's capacity to detect threats in underrepresented communication types.
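One way to implement random sampling across time slots and network segments, and thereby limit the overrepresentation described above, is to shuffle the flow records and keep at most a fixed number per (time slot, segment) stratum. The sketch below uses pandas; the column names `time_slot` and `segment`, the toy data, and the per-stratum cap are hypothetical, since the study does not report its exact sampling scheme.

```python
import pandas as pd

def sample_per_stratum(df, keys, n_per_group, seed=42):
    """Randomly keep at most n_per_group rows from each stratum defined by `keys`."""
    shuffled = df.sample(frac=1.0, random_state=seed)            # random order first
    return shuffled.groupby(keys, sort=False).head(n_per_group)  # then cap each stratum

# Toy flow table in which the "hotspot" segment is heavily overrepresented.
flows = pd.DataFrame({
    "time_slot": ["night"] * 6 + ["day"] * 6,
    "segment": ["hotspot"] * 5 + ["parking"] + ["hotspot"] * 5 + ["parking"],
    "bytes": range(12),
})
balanced = sample_per_stratum(flows, ["time_slot", "segment"], n_per_group=2)
print(balanced.groupby(["time_slot", "segment"]).size())
```

Capping rather than resampling to equal sizes keeps small strata (such as parking meters) intact while trimming the high-traffic ones; oversampling minority strata would be an alternative if exact balance were required.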

Figure 1: SAE-GRU based intrusion detection system for IoT botnets.

In our investigation, data preprocessing was essential for maintaining the quality and usability of the collected IoT network traffic data for intrusion detection. To preserve the integrity and statistical reliability of the dataset, mean imputation was used for numerical features, replacing null entries with the arithmetic average of the observed values. This preserved each feature's mean and prevented distortion in features such as packet size, byte counts, and flow duration, attributes that are critical for distinguishing volumetric and behavioral anomalies in botnet detection.

To further strengthen the data preprocessing, we also applied mode imputation to categorical features such as protocol type or device state, substituting the most frequent class to retain consistency in the distribution of discrete attributes. These strategies prevented information loss while maintaining model input uniformity. Numerical values are especially important in botnet detection because they capture subtle deviations in traffic volume, session timing, and data transfer behavior that often precede or accompany coordinated botnet activity, allowing the model to learn patterns that categorical inspection alone cannot reveal. Feature selection, in turn, improved model efficiency by eliminating irrelevant attributes while preserving essential patterns in network behavior.
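The mean and mode imputation described above can be sketched with pandas. The column names and values are hypothetical stand-ins for the packet size, flow duration, and protocol fields mentioned in the text.

```python
import numpy as np
import pandas as pd

def impute(df, numeric_cols, categorical_cols):
    """Mean-impute numeric columns and mode-impute categorical columns."""
    out = df.copy()
    for col in numeric_cols:
        out[col] = out[col].fillna(out[col].mean())
    for col in categorical_cols:
        # mode() can return several values on ties; take the first for determinism
        out[col] = out[col].fillna(out[col].mode().iloc[0])
    return out

raw = pd.DataFrame({
    "packet_size": [512.0, np.nan, 1024.0, 256.0],
    "flow_duration": [0.5, 1.5, np.nan, 1.0],
    "protocol": ["MQTT", "MQTT", None, "CoAP"],
})
clean = impute(raw, ["packet_size", "flow_duration"], ["protocol"])
print(clean)
```

In a deployed pipeline, the means and modes would be computed on the training split only and reused for validation, test, and live traffic, so that no information leaks from evaluation data into preprocessing.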

We combined mutual information ranking with variance thresholding at a fixed threshold of 0.01. The variance filter eliminated features exhibiting low variability across observations, since such features contribute minimal discriminative power and can introduce noise into the learning process, while mutual information retained the attributes most informative about the traffic class. This dual-step approach selected highly informative attributes that improve classification accuracy by focusing the model on relevant patterns and that reduce computational complexity by removing redundant or static input dimensions. The dataset covered various intrusion types, including DDoS attacks, Command and Control activities, traffic injection attempts, data exfiltration, spoofing events, malware propagation, credential harvesting, brute force attacks, firmware exploitation, and eavesdropping on communication channels.
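The two-step selection can be sketched with scikit-learn's `VarianceThreshold` and `mutual_info_classif`. The 0.01 threshold comes from the text; the number of features kept after mutual information ranking is not reported, so `top_k` is a hypothetical parameter, and the synthetic features only illustrate the mechanics.

```python
import numpy as np
from sklearn.feature_selection import VarianceThreshold, mutual_info_classif

def select_features(X, y, var_threshold=0.01, top_k=10, seed=0):
    """Step 1: drop near-constant features. Step 2: keep the top_k by mutual information."""
    vt = VarianceThreshold(threshold=var_threshold)
    X_var = vt.fit_transform(X)
    kept = np.flatnonzero(vt.get_support())  # original indices surviving the variance filter
    mi = mutual_info_classif(X_var, y, random_state=seed)
    order = np.argsort(mi)[::-1][: min(top_k, len(mi))]  # highest mutual information first
    return kept[order], mi[order]

rng = np.random.default_rng(0)
n = 500
y = rng.integers(0, 2, size=n)               # toy labels: 0 = benign, 1 = botnet
informative = y + 0.3 * rng.normal(size=n)   # correlated with the label
noise = rng.normal(size=n)                   # unrelated to the label
constant = np.full(n, 0.5)                   # zero variance, removed in step 1
X = np.column_stack([informative, noise, constant])
idx, scores = select_features(X, y, top_k=2)
print(idx, scores.round(3))
```

Because variance depends on feature scale, whether this filter runs before or after min-max normalization changes which features a fixed 0.01 threshold removes.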

The heterogeneous nature of IoT devices, as presented in Table 3, introduced variations in data formats that required specific methods for handling missing values. Imputation techniques were therefore applied to maintain dataset integrity, with mean imputation for numerical features and mode imputation for categorical attributes to address data sparsity. Feature scale normalization was applied to account for differences in magnitude, including packet sizes and traffic volumes. Min-max normalization mapped feature values onto a unified scale, typically between 0 and 1, ensuring consistency across the dataset and improving the effectiveness of model training.

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{1}$$

| IoT device name | Vendor | Purpose | Specifications | Communication protocols | Vulnerabilities |
|---|---|---|---|---|---|
| Smart meter | Siemens (INHEM1216) | Monitor and report energy usage | Communication: ZigBee, Protocol: IEC 62056, Power: Low Power (Battery) | ZigBee, LoRaWAN | Data tampering, Unauthorized access |
| Traffic monitoring camera | Bosch (MIC inteox 7100i) | Track traffic flow and violations | Resolution: 1080p, Connectivity: 4G/5G, Power: Wired | 4G/5G, Wi-Fi | Eavesdropping, DDoS |
| Environmental sensor | Honeywell (C7355A1050) | Monitor air quality and environmental conditions | Sensor Type: Temp./Hum./CO2/TVOC/PM, Connectivity: LPWAN, Power: Solar/Battery | LPWAN, MQTT | Spoofing, Data tampering |
| Smart streetlight | GE Lighting (ERL1-ERLH-ERL2) | Control and manage street lighting | Connectivity: LoRaWAN, Power: Solar, Control: Remote Dimming | LoRaWAN | Unauthorized access, Remote exploitation |
| Public Wi-Fi hotspot | Cisco (240AC, Catalyst IW9165E) | Provide public wireless internet access | Standard: IEEE 802.11ac, Range: 90 m, Power: Power over Ethernet (PoE) | Wi-Fi, IEEE 802.11ac | Man-in-the-Middle (MITM), Data Interception |
| Smart parking meter | Presto (Presto 1000), ParkingBOXX | Monitor parking spaces and payment systems | Communication: NB-IoT, Payment: Contactless, Power: Battery | NB-IoT, LTE | Spoofing, Data exfiltration |
| Surveillance drone | DJI (Mavic Pro Platinum) | Provide aerial surveillance | Range: 10 km, Camera: 4K, Power: Rechargeable Battery | 4G/5G, Wi-Fi | GPS Spoofing, Signal jamming |
| Smart waste management bin | Bigbelly (Smart) | Manage waste levels in urban areas | Sensor: Ultrasonic, Communication: LoRa, Power: Solar | LoRaWAN | Denial of service, Sensor spoofing |
| Smart water monitoring system | Xylem (DIQ/S 281–WTW, pH 298-WTW) | Monitor water levels and quality | Sensor Type: pH, Turbidity, Connectivity: LTE-M, Power: Battery | LTE-M | Data exfiltration, Device hijacking |

As per Eq. (1), $x'$ is the normalized value, $x$ is the original feature value, and $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of the feature, respectively. This normalization is crucial in preventing features with larger ranges from dominating the training process.
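Eq. (1) can be checked numerically with a short NumPy sketch that scales each column independently. The two toy features (packet size in bytes and flow duration in seconds) and the guard for constant columns are illustrative assumptions.

```python
import numpy as np

def min_max_normalize(X, eps=1e-12):
    """Column-wise min-max scaling to [0, 1], following Eq. (1)."""
    X = np.asarray(X, dtype=float)
    x_min = X.min(axis=0)
    x_max = X.max(axis=0)
    # Constant columns would divide by zero; use a span of 1 so they map to 0.
    span = np.where(x_max - x_min > eps, x_max - x_min, 1.0)
    return (X - x_min) / span, x_min, x_max

# Packet size (bytes) and flow duration (s) live on very different scales.
X = np.array([[64.0, 0.2], [1500.0, 3.0], [782.0, 1.6]])
X_norm, x_min, x_max = min_max_normalize(X)
print(X_norm)
```

In deployment, `x_min` and `x_max` would be computed on training data only and reused for live traffic, so unseen values may fall slightly outside [0, 1]; scikit-learn's `MinMaxScaler` implements the same transformation with separate `fit` and `transform` steps.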

To convert categorical variables, such as protocol types, into numerical formats, one-hot encoding (Rezvan et al., 2024) was employed. This method transformed each categorical feature into a binary vector in which each category was represented as a distinct dimension, allowing the model to interpret the categorical information without imposing artificial ordinality. One-hot encoding was particularly appropriate given the non-hierarchical nature of many categorical features in IoT traffic, where no intrinsic ranking exists between different protocols or device types.
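A minimal pandas sketch of the one-hot step, assuming hypothetical `protocol` and `device_state` columns:

```python
import pandas as pd

flows = pd.DataFrame({
    "protocol": ["MQTT", "CoAP", "HTTP", "MQTT"],
    "device_state": ["active", "idle", "active", "active"],
    "bytes": [120, 80, 950, 130],
})
# Each category becomes its own 0/1 column, so no ordering between protocols is implied.
encoded = pd.get_dummies(flows, columns=["protocol", "device_state"], dtype=int)
print(encoded.columns.tolist())
print(encoded)
```

`pd.get_dummies` derives its columns from the categories present in the frame it receives. For live traffic, scikit-learn's `OneHotEncoder(handle_unknown="ignore")` keeps the column layout fixed and encodes an unseen protocol as all zeros.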

We selected the Pandas and Scikit-learn libraries for preprocessing because of their proven efficiency and scalability, which were critical for handling the large volume and high dimensionality of IoT data. The preprocessing steps (missing value imputation, normalization of feature scales, one-hot encoding of categorical variables, data cleaning, standardization, feature extraction, and feature dimensionality reduction) standardized the dataset and eliminated inconsistencies, providing a robust foundation for feature extraction and effective model training.

Rationale for employing stacked autoencoder

The adoption of SAEs in this research stems from their capacity to process high-dimensional data effectively while retaining essential features. The emulated IoT environment generated extensive network traffic containing a mix of significant and redundant information. The SAE proved effective in this context because of its ability to uncover latent patterns, such as recurring traffic bursts, synchronized connection attempts, and consistent session durations, by encoding them into compact, information-rich representations (e.g., a protocol activity footprint or a connection uniformity pattern). Through dimensionality reduction, the autoencoder transformed correlated features such as packet size, flow duration, and byte count into a unified latent variable capturing their combined variance. This abstraction enabled the model to detect subtle yet coordinated anomalies in communication behavior, especially those indicative of botnet activity, that are often obscured in the original high-dimensional feature space. The compression minimized noise and preserved critical characteristics without compromising analytical depth (i.e., feature importance analysis, performance metrics evaluation, temporal pattern recognition, model generalization assessment, real-time detection capability, false positive and false negative analysis, cross-validation, and dataset diversity evaluation). In our resource-constrained IoT system, this dimensionality reduction enabled computational efficiency and facilitated real-time analysis. The compressed data produced by the SAE reduced the computational demands of subsequent tasks, thereby improving the overall performance of the implemented intrusion detection model.

In the proposed emulated setting, the SAE encoder transforms the high-dimensional input data $x$ into a lower-dimensional hidden representation $h$. This procedure (Eq. (2)) was important for reducing redundancy and emphasizing essential feature interactions, which enabled the model to focus on the patterns most relevant for distinguishing between normal and malicious traffic behavior.

$$h = f(Wx + b) \tag{2}$$

where $W$ is the weight matrix, $b$ is the bias term, and $f$ is the activation function, typically ReLU (Rectified Linear Unit). The hidden representation $h$ encapsulates the essential features in a compressed form. The decoder then attempts to reconstruct the input from $h$ using Eq. (3):

$$\hat{x} = f(W'h + b') \tag{3}$$

where $W'$ and $b'$ are the decoder's weight matrix and bias term, respectively. The objective of training the SAE is to minimize the reconstruction error, which measures the difference between the original input $x$ and its reconstruction $\hat{x}$. The reconstruction error is commonly expressed as the mean squared error (MSE) and is minimized as expressed in Eq. (4):

$$\mathcal{L}(x, \hat{x}) = \frac{1}{n}\sum_{i=1}^{n}\left(x_i - \hat{x}_i\right)^2 \tag{4}$$

where $\mathcal{L}$ is the loss function, $n$ is the number of input features, and $x_i$ and $\hat{x}_i$ represent the original and reconstructed values of the $i$-th input feature, respectively. Minimizing the MSE during SAE training ensured that the reconstructed output closely matched the original input, preserving critical traffic patterns. A lower MSE indicates that the encoder has captured the most informative features needed to differentiate normal traffic from botnet-induced anomalies. In short, the encoder compresses input data into a lower-dimensional space and the decoder reconstructs the original input; the objective is to retain the most relevant features while discarding noise, which facilitates efficient pattern recognition in high-dimensional datasets.
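The encoder and decoder passes of Eqs. (2)-(4) can be sketched in NumPy for a two-layer stack. The layer widths (40 → 16 → 8), He-style random initialization, and ReLU at every layer are illustrative assumptions, and the weights are untrained. `x @ W + b` is the row-vector form of Eq. (2), so each `W` has shape (inputs, units).

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def init_sae(sizes, rng):
    """Random weights for a stacked encoder sizes[0] -> ... -> sizes[-1] and a mirrored decoder."""
    def layer(a, b):
        return rng.normal(0.0, np.sqrt(2.0 / a), size=(a, b)), np.zeros(b)
    enc = [layer(a, b) for a, b in zip(sizes[:-1], sizes[1:])]
    rev = sizes[::-1]
    dec = [layer(a, b) for a, b in zip(rev[:-1], rev[1:])]
    return enc, dec

def encode(x, enc):
    h = x
    for W, b in enc:
        h = relu(h @ W + b)      # Eq. (2), applied layer by layer
    return h

def decode(h, dec):
    out = h
    for W, b in dec:
        out = relu(out @ W + b)  # Eq. (3), applied layer by layer
    return out

def mse(x, x_hat):
    """Eq. (4), additionally averaged over the samples in the batch."""
    return float(np.mean((x - x_hat) ** 2))

rng = np.random.default_rng(1)
enc, dec = init_sae([40, 16, 8], rng)
X = rng.random((32, 40))         # 32 min-max-scaled flow records with 40 features
H = encode(X, enc)
X_hat = decode(H, dec)
print(H.shape, X_hat.shape, round(mse(X, X_hat), 4))
```

Training would adjust `enc` and `dec` to minimize `mse`; after training, only `encode` is needed to produce the compact features passed to the GRU.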

The SAE was trained in an unsupervised manner by optimizing the loss function with the gradient-based optimization algorithm Adam (Reyad, Sarhan & Arafa, 2023). Adam was selected because it combines the benefits of AdaGrad and RMSProp (two optimization algorithms that update model parameters during training) by adaptively adjusting the learning rate of each parameter using first and second moment estimates of the gradients. This makes it well suited to the sparse features and non-stationary objectives common in high-dimensional IoT traffic data. Weight decay (L2 regularization) was applied to the weight matrices during training to mitigate overfitting; our investigation revealed that this is particularly critical when handling the large and noisy datasets common in IoT networks. Weight decay adds a penalty to the loss function based on the magnitude of the weights, which prevents the model from becoming overly complex and improves its ability to generalize to unseen data. The regularized loss function is expressed in Eq. (5):

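$$\mathcal{L}_{\text{reg}} = \mathcal{L}(x, \hat{x}) + \lambda\left(\lVert W \rVert_F^2 + \lVert W' \rVert_F^2\right) \tag{5}$$

where $\lambda$ is the regularization parameter and $\lVert \cdot \rVert_F^2$ is the squared L2 (Frobenius) norm of the weight matrices.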
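To make Eq. (5) and the Adam update concrete, the sketch below implements the L2-penalized objective and the standard bias-corrected Adam rule in NumPy, and applies them to a toy least-squares problem that stands in for one SAE layer rather than the full model. The learning rate (0.02), λ = 1e-3, and iteration count are illustrative assumptions, not the study's settings.

```python
import numpy as np

def l2_regularized_loss(recon_loss, weights, lam):
    """Eq. (5): reconstruction loss plus lam times the squared Frobenius norm of each weight matrix."""
    return recon_loss + lam * sum(float(np.sum(W ** 2)) for W in weights)

class Adam:
    """Standard Adam update with bias-corrected first and second moment estimates."""

    def __init__(self, shape, lr=0.02, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = np.zeros(shape)  # running mean of gradients (first moment)
        self.v = np.zeros(shape)  # running mean of squared gradients (second moment)
        self.t = 0

    def step(self, param, grad):
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad ** 2
        m_hat = self.m / (1 - self.beta1 ** self.t)  # correct the bias from zero initialization
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return param - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)

# Toy stand-in for one layer: fit W in y = X @ W under the penalty of Eq. (5).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
W_true = np.array([1.0, -2.0, 0.5, 0.0, 3.0])
y = X @ W_true
lam = 1e-3
W = np.zeros(5)
opt = Adam(W.shape, lr=0.02)
for _ in range(3000):
    residual = X @ W - y
    grad = 2 * X.T @ residual / len(y) + 2 * lam * W  # gradient of MSE + lam * ||W||^2
    W = opt.step(W, grad)
final = l2_regularized_loss(float(np.mean((X @ W - y) ** 2)), [W], lam)
print(W.round(2), round(final, 4))
```

Here the penalty is added to the loss, matching Eq. (5). With Adam this is not identical to decoupled weight decay (as in AdamW), where the decay is applied directly to the weights outside the adaptive update; the text does not state which variant was used.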

After training, the SAE encoder processed high-dimensional input data to generate lower-dimensional feature representations. These compact feature vectors retained the most critical information for subsequent analysis while minimizing computational complexity in later stages of intrusion detection. This reduction in dimensionality enhanced computational efficiency and focused the model on the most crucial patterns, which led to improved accuracy in identifying malicious activities.

Underlying principle for employing the gated recurrent unit network

The GRU network was implemented to detect temporal patterns in IoT network traffic data (e.g., repeated connection intervals, synchronized packet bursts, periodic data uploads, uniform session durations, cyclic protocol switching, timed beacon signals, consistent idle-to-active transitions, recurring port access sequences, repetitive handshake patterns, and scheduled command transmissions). The gated recurrent units handled sequential data efficiently (e.g., time-stamped network flows, session-wise traffic logs, packet arrival sequences, protocol exchange orders, connection attempt histories, authentication sequences, port scanning timelines, device communication intervals, traffic burst timings, and malware propagation traces), maintaining long-term dependencies while reducing computational demands. Temporal patterns and sequential data were important because they capture the timing and order of network activities, which are critical for identifying behaviors characteristic of botnet operations. Such patterns revealed coordinated actions, delayed triggers, and repetitive access sequences that static feature analysis could not detect.

Our comprehensive evaluation showed that GRUs mitigate the vanishing gradient problem that frequently affects traditional recurrent neural networks, which strengthens their effectiveness for real-time intrusion detection in IoT environments. Cross-validation confirmed that GRUs capture sequential dependencies with greater stability, whereas conventional recurrent networks struggled to retain long-range information. This implementation therefore enabled the emulated system to learn patterns efficiently and identify both normal and malicious activities within the traffic. Normal activities included regular data transmission intervals, periodic updates, scheduled maintenance tasks, consistent communication behaviors, synchronized sensor readings, uniform packet sizes, and steady bandwidth utilization. Malicious activities, by contrast, encompassed sudden surges in traffic volume, irregular packet timings, anomalous data flows, unscheduled device interactions, inconsistent protocol behaviors, rapid data bursts, significant packet size deviations, coordinated surges across multiple devices, repeated connection attempts to external servers, frequent contact with command-and-control servers, brute force login attempts, high-frequency port scans, periodic data exfiltration bursts, prolonged DDoS attacks, unexpected increases in device-to-device communication, synchronized anomalies from geographically dispersed devices, abnormal encryption patterns, frequent traffic destination changes, and suspicious outbound connections to unverified domains. We also observed that GRUs, which require fewer parameters than LSTMs, incurred lower computational overhead, which was vital in environments with limited resources. This efficiency, together with their robust ability to process sequential dependencies, established GRUs as an ideal solution for intrusion detection within the proposed architecture.
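The parameter advantage of GRUs over LSTMs follows from counting weights: a GRU layer has three (W, U, b) blocks, for the update gate, reset gate, and candidate, while an LSTM has four, for the input, forget, and output gates and the cell candidate. The sketch assumes one bias vector per block and hypothetical sizes (32 SAE-encoded input features, 64 hidden units).

```python
def gru_params(input_dim: int, hidden_dim: int) -> int:
    """Three blocks, each with W (hidden x input), U (hidden x hidden), and one bias (hidden)."""
    return 3 * (hidden_dim * (input_dim + hidden_dim) + hidden_dim)

def lstm_params(input_dim: int, hidden_dim: int) -> int:
    """Four blocks with the same structure as the GRU blocks."""
    return 4 * (hidden_dim * (input_dim + hidden_dim) + hidden_dim)

d, h = 32, 64  # hypothetical layer sizes
print(gru_params(d, h), lstm_params(d, h))  # 18624 24832
```

Framework counts can be somewhat higher because some implementations keep two bias vectors per block (PyTorch does this for both layer types), but the GRU remains at roughly three quarters of the LSTM's size for the same dimensions.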

In our emulated setting, the functionality of a GRU (as shown in Fig. 1) is controlled by two primary gates, the update gate and the reset gate, which govern how much past information is passed forward. The update gate determines how much of the previously observed network behavior (e.g., normal or suspicious traffic patterns) is carried forward for further analysis in the detection process. For botnet detection, this helped track long-term anomalies, such as coordinated attacks that evolve over time. The reset gate, in turn, decides how much of the past information should be ignored, enabling the model to focus on immediate and relevant traffic patterns. In our detection setting, this allowed the GRU to disregard irrelevant or benign variations, such as normal fluctuations in traffic, while concentrating on identifying emerging botnet behavior.

Accordingly, the update gate, denoted as $z_t$, determines the extent to which the hidden state $h_{t-1}$ from the previous time step should be carried forward, as represented in Eq. (6):

$$z_t = \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) \tag{6}$$

where $W_z$ and $U_z$ are the weight matrices for the input $x_t$ and the previous hidden state $h_{t-1}$, respectively, and $b_z$ is the bias term. The sigmoid function $\sigma$ ensures that the values of $z_t$ remain between 0 and 1, effectively controlling the weight of the previous hidden state in the current computation.

The reset gate, denoted as $r_t$, is responsible for deciding how much of the previous hidden state should be forgotten. As stated earlier, this gate plays a critical role in allowing the GRU to selectively reset portions of its memory when modeling sequential dependencies, as expressed in Eq. (7):

(7)

Here, $W_r$ and $U_r$ are the weight matrices, and $b_r$ is the bias associated with the reset gate. By modulating the reset gate, the GRU can ignore parts of the past sequence that are not useful for future predictions. The candidate activation, denoted as $\tilde{h}_t$, represents the new memory content to be added to the hidden state, as given in Eq. (8). It is computed from both the current input $x_t$ and the reset-gated hidden state $r_t \odot h_{t-1}$, which allows the GRU to conditionally forget or remember parts of the sequence:

$$\tilde{h}_t = \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right) \tag{8}$$

Here, $W_h$, $U_h$, and $b_h$ are the corresponding weights and bias for the candidate activation, and $\odot$ denotes element-wise multiplication. The reset gate ensures that only relevant historical information influences the new candidate activation. Finally, the hidden state $h_t$ at time step $t$ is updated as a linear interpolation between the previous hidden state $h_{t-1}$ and the candidate activation $\tilde{h}_t$, controlled by the update gate $z_t$:

$$h_t = z_t \odot h_{t-1} + \left(1 - z_t\right) \odot \tilde{h}_t \tag{9}$$

Equation (9) determines the output of the GRU unit at each time step by balancing the new candidate activation $\tilde{h}_t$ against the previous hidden state $h_{t-1}$ according to the value of the update gate $z_t$: when $z_t$ is close to 1 the previous state is largely preserved, and when it is close to 0 the candidate dominates. This allowed the GRU to adaptively retain relevant information over long sequences, which proved to be an important property for identifying patterns of malicious activity in sequential IoT network traffic.
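As a reading aid, the following minimal NumPy sketch implements one GRU time step directly from Eqs. (6) to (9) and iterates it over a sequence from $h_0 = 0$. Parameter names mirror the symbols above; the random initialization, the dimensions, and the helper names (`init_params`, `run_gru`) are hypothetical and do not represent the trained model.

```python
import numpy as np


def sigmoid(x):
    """Logistic function used by the update and reset gates."""
    return 1.0 / (1.0 + np.exp(-x))


def gru_step(x_t, h_prev, p):
    """Compute h_t from x_t and h_{t-1} following Eqs. (6) to (9).

    p maps names to arrays: W_* multiply the input x_t (hidden x input),
    U_* multiply the previous state h_prev (hidden x hidden), b_* are biases.
    """
    z_t = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev + p["b_z"])  # Eq. (6)
    r_t = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev + p["b_r"])  # Eq. (7)
    h_cand = np.tanh(p["W_h"] @ x_t
                     + p["U_h"] @ (r_t * h_prev) + p["b_h"])      # Eq. (8)
    return z_t * h_prev + (1.0 - z_t) * h_cand                    # Eq. (9)


def init_params(input_dim, hidden_dim, seed=0):
    """Small random weights and zero biases (hypothetical initialization)."""
    rng = np.random.default_rng(seed)
    p = {}
    for gate in ("z", "r", "h"):
        p[f"W_{gate}"] = rng.normal(0.0, 0.1, (hidden_dim, input_dim))
        p[f"U_{gate}"] = rng.normal(0.0, 0.1, (hidden_dim, hidden_dim))
        p[f"b_{gate}"] = np.zeros(hidden_dim)
    return p


def run_gru(sequence, p, hidden_dim):
    """Iterate over time steps starting from h_0 = 0; return h_T."""
    h = np.zeros(hidden_dim)
    for x_t in sequence:
        h = gru_step(x_t, h, p)
    return h
```

Because Eq. (9) is a convex combination of $h_{t-1}$ and a `tanh` output, every hidden state stays inside $(-1, 1)$ when starting from $h_0 = 0$. Note that some libraries use a slight variant of Eq. (8); for example, Keras's `GRU` with `reset_after=True` applies the reset gate after the recurrent matrix product.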

Table 4 provides a structured, algorithmic breakdown of the SAE-GRU-based intrusion detection workflow, detailing each step from data preprocessing to real-time threat classification.

| Step | Algorithmic pseudocode |
|---|---|
| 1. Data collection and preprocessing | Input: Raw network traffic data |
| | For each network traffic record in the input: |
| | End For |
| 2. Feature extraction using stacked autoencoder (SAE) | Input: Preprocessed network traffic features |
| | Encode: map each feature vector to a lower-dimensional code |
| | Decode: reconstruct the feature vector from its code |
| | Compute Loss: reconstruction error between the input and its reconstruction |
| 3. Temporal pattern recognition using GRU | Input: Compressed feature vectors from the SAE |
| | Initialize: GRU hidden state $h_0$ |
| | For each time step $t$: |
| | Compute $z_t$ (Eq. 6), $r_t$ (Eq. 7), and $\tilde{h}_t$ (Eq. 8) |
| | Update $h_t$ (Eq. 9) |
| | End For |
| | Output: Final hidden state $h_T$ for classification |
| 4. Intrusion classification using thresholding | Input: GRU output; compute classification score $s$ |
| | If $s > \tau$ (decision threshold) then: |
| | Classify as malicious |
| | Else: classify as normal |
| 5. Optimization for real-time processing | Apply: |
| 6. Alert generation and response | If attack is detected: |
| | End If |
| 7. Model training and performance evaluation | Train model: |
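Step 2 of Table 4 (Encode, Decode, Compute Loss) can be illustrated with a single-hidden-layer autoencoder forward pass. This is a minimal NumPy sketch under stated assumptions: the 128-unit ReLU code layer follows the architecture described for the SAE, while the linear decoder, mean-squared reconstruction error, random initialization, and class name `TinyAutoencoder` are our own choices. Training (gradient descent on the loss) is omitted.

```python
import numpy as np


class TinyAutoencoder:
    """Hypothetical single-hidden-layer autoencoder: encode, decode, loss."""

    def __init__(self, input_dim, code_dim=128, seed=0):
        rng = np.random.default_rng(seed)
        self.W_enc = rng.normal(0.0, 0.05, (input_dim, code_dim))
        self.b_enc = np.zeros(code_dim)
        self.W_dec = rng.normal(0.0, 0.05, (code_dim, input_dim))
        self.b_dec = np.zeros(input_dim)

    def encode(self, X):
        # ReLU code layer: rows of X are preprocessed traffic feature vectors.
        return np.maximum(0.0, X @ self.W_enc + self.b_enc)

    def decode(self, Z):
        # Linear reconstruction layer (assumption).
        return Z @ self.W_dec + self.b_dec

    def reconstruction_loss(self, X):
        # Mean squared error between inputs and their reconstructions.
        X_hat = self.decode(self.encode(X))
        return float(np.mean((X - X_hat) ** 2))
```

After training, only `encode` would be used at inference time; its outputs are the compressed feature vectors that Step 3 feeds to the GRU.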

Temporal pattern recognition in IoT network traffic

The compressed feature vectors generated by the SAE were passed to the GRU network to model temporal dependencies³. These lower-dimensional vectors, which are condensed and denoised versions of the original high-dimensional network traffic, encapsulated the information relevant to identifying both normal and malicious activities. The GRU processed these sequential inputs while maintaining a hidden state that summarized previous time steps, which allowed it to learn long-term dependencies and temporal correlations in the data. At each time step, the update and reset gates determined how much past information should be retained or discarded. Through this modulation, the GRU dynamically adjusted its memory of past events, which was essential for distinguishing normal traffic patterns from anomalies indicative of botnet activity. As the GRU iterated through the sequence of feature vectors, it identified patterns that signaled malicious behavior, such as coordinated traffic spikes or abnormal data flows, while filtering out routine network variations. Its ability to maintain context over extended periods enabled it to detect botnet activities that unfold over long time frames. This temporal modeling was crucial for differentiating benign from malicious behavior because it accounted for both short-term fluctuations and long-term trends.
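The text does not say how the stream of compressed vectors is cut into GRU input sequences. One common option, shown here purely as an assumption, is a sliding window of fixed length over time-ordered vectors; the function name `make_windows` and its `window`/`stride` parameters are hypothetical.

```python
from typing import List, Sequence


def make_windows(vectors: Sequence[Sequence[float]], window: int,
                 stride: int = 1) -> List[List[Sequence[float]]]:
    """Group consecutive, time-ordered feature vectors into GRU sequences.

    Each returned window is one input sequence (oldest vector first).
    Trailing vectors that cannot fill a complete window are dropped.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    return [list(vectors[i:i + window])
            for i in range(0, len(vectors) - window + 1, stride)]


# Usage: six 2-dimensional codes, windows of three steps.
codes = [[0.1 * i, 0.2 * i] for i in range(6)]
overlapping = make_windows(codes, window=3)          # stride 1
disjointish = make_windows(codes, window=3, stride=2)
```

A stride of 1 gives maximally overlapping windows (one prediction per new vector), which suits continuous monitoring; larger strides trade temporal resolution for fewer GRU evaluations.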

Supervised training process of the GRU network

The supervised training process of the GRU network was conducted using labeled data, where each sequence of input feature vectors derived from the SAE was associated with a corresponding class label indicating whether the traffic pattern represented normal behavior or malicious activity. The use of labeled data allowed the GRU to learn from ground-truth examples and distinguish between benign and anomalous patterns in network traffic. The cross-entropy loss function was employed during training to quantify the difference between the predicted class probabilities and the actual class labels. This loss function was suitable for binary classification tasks, such as detecting botnet attacks, and is represented in Eq. (10):

$$\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log \hat{y}_i + \left(1 - y_i\right)\log\left(1 - \hat{y}_i\right)\right] \tag{10}$$

where $y_i$ is the true label, $\hat{y}_i$ is the predicted probability, and $N$ is the number of samples. We used the Adam optimizer, which computes individual adaptive learning rates for each parameter and combines the benefits of the AdaGrad and RMSProp algorithms (Table 5); this supported stable convergence even in complex, high-dimensional parameter spaces.
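Eq. (10) can be checked numerically with a few lines of plain Python. This sketch averages the per-sample loss over $N$ samples; the clipping constant `eps` is our addition (a common safeguard so that $\log 0$ never occurs) and is not stated in the text.

```python
import math
from typing import Sequence


def binary_cross_entropy(y_true: Sequence[int], y_prob: Sequence[float],
                         eps: float = 1e-12) -> float:
    """Average binary cross-entropy over N samples, as in Eq. (10).

    Predicted probabilities are clipped to [eps, 1 - eps] before the log.
    """
    if len(y_true) != len(y_prob) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)
```

A maximally uncertain prediction (0.5 for every sample) costs $\ln 2 \approx 0.693$ per sample, while confident correct predictions push the loss toward 0; confident wrong predictions are penalized heavily.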

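| Optimizer | Algorithmic pseudocode |
|---|---|
| AdaGrad | 1. Initialize: learning rate $\eta$, small constant $\epsilon$ |
| | Accumulator $G_0 = 0$ |
| | 2. For each parameter $\theta$ at time $t$: |
| | $G_t = G_{t-1} + g_t^2$, where $g_t$ is the gradient of the loss with respect to $\theta$ |
| | $\theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{G_t} + \epsilon}\, g_t$ |
| | 3. End For. |
| RMSProp | 1. Initialize: learning rate $\eta$, decay rate $\rho$, small constant $\epsilon$ |
| | Running average $E[g^2]_0 = 0$ |
| | 2. For each parameter $\theta$ at time $t$: |
| | $E[g^2]_t = \rho\, E[g^2]_{t-1} + (1 - \rho)\, g_t^2$ |
| | $\theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{E[g^2]_t} + \epsilon}\, g_t$ |
| | 3. End For. |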

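To show how Adam combines the two schemes in Table 5, the sketch below performs one Adam update for a scalar parameter: an exponential average of gradients (momentum), an RMSProp-style exponential average of squared gradients for per-parameter scaling, and bias correction of both. The default hyperparameters are the commonly cited Adam defaults, used here as assumptions; the values used in this study are not given in this section.

```python
import math


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a scalar parameter.

    m: running mean of gradients (first moment, momentum term).
    v: running mean of squared gradients (second moment, RMSProp-style term).
    t: 1-based step counter used for bias correction.
    Returns the updated (theta, m, v).
    """
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)      # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)      # bias-corrected second moment
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# Usage: minimize f(theta) = (theta - 3)^2, whose gradient is 2 * (theta - 3).
theta, m, v = 0.0, 0.0, 0.0
for t in range(1, 1001):
    theta, m, v = adam_step(theta, 2 * (theta - 3), m, v, t, lr=0.1)
```

Because the update divides by $\sqrt{\hat{v}}$, the first step has magnitude close to the learning rate regardless of the raw gradient scale, which is the per-parameter adaptivity inherited from AdaGrad and RMSProp.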

To improve the generalization of the GRU network and prevent overfitting, two techniques were applied: dropout and early stopping. Dropout randomly set a fraction of the hidden-layer units to zero at each training iteration, forcing the network to learn redundant representations and reducing its dependence on specific neurons. The dropout rate was selected to balance underfitting against overfitting and typically fell in the range 0.3 to 0.5. Early stopping monitored the model's loss on a validation set and halted training when the validation loss stopped improving, so that training ended near the best-performing state instead of continuing to fit the training data. With these strategies, the GRU network achieved high accuracy and robustness in detecting anomalies across diverse and complex IoT traffic patterns and generalized well to unseen data during deployment.
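The early-stopping rule described above can be sketched as a small patience-based tracker. The `patience` and `min_delta` parameters are assumptions (the text does not report their values); the class also records the best epoch so that the corresponding weights could be restored.

```python
class EarlyStopping:
    """Signal a stop once validation loss fails to improve for `patience` epochs.

    min_delta is the smallest decrease in loss that counts as an improvement.
    """

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.wait = 0  # epochs since the last improvement

    def update(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.wait = 0
        else:
            self.wait += 1
        return self.wait >= self.patience


# Usage with illustrative validation losses.
es = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate([0.9, 0.6, 0.5, 0.52, 0.51, 0.53, 0.4]):
    if es.update(epoch, loss):
        stopped_at = epoch
        break
```

In TensorFlow, the built-in `tf.keras.callbacks.EarlyStopping` callback offers the same behavior through its `monitor`, `patience`, `min_delta`, and `restore_best_weights` arguments.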

Architecture of the combined SAE-GRU model

Building on the preceding components, the SAE-GRU model was designed to process high-dimensional IoT network traffic data and extract temporal dependencies for intrusion detection. The SAE acts as a dimensionality-reduction layer that suppresses noise and compresses the raw high-dimensional input into lower-dimensional feature vectors. These feature vectors are then passed to the GRU network, which models the temporal relationships across sequences of traffic data. We designed the SAE as a three-layer architecture: an input layer, a hidden layer, and an output (reconstruction) layer. Layer sizes were determined empirically; the encoder's hidden layer has 128 units to balance expressiveness and computational efficiency, and the rectified linear unit (ReLU) introduces non-linearity so that the model can learn complex patterns. The GRU component comprises two recurrent layers of 64 hidden units each and uses the tanh activation function⁴, which keeps hidden-state values bounded and helps stabilize gradient propagation over long sequences. This architecture allows the model to capture both spatial (feature-level) and temporal patterns in network traffic while remaining computationally feasible for real-time deployment.
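The data flow through the combined model can be traced with a shape-level NumPy sketch: a 128-unit ReLU encoder applied at every time step, two stacked 64-unit GRU layers, and a sigmoid output on the final hidden state. The layer widths follow the description above; the input dimension, the random weights, the sigmoid head, and all function names are hypothetical. The code is batch-first, so weight matrices are stored transposed relative to Eqs. (6) to (9).

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def dense(in_dim, out_dim):
    return rng.normal(0.0, 0.05, (in_dim, out_dim)), np.zeros(out_dim)


def gru_layer_params(in_dim, units):
    # (input weights, recurrent weights, bias) for the z, r and h blocks.
    return {g: (rng.normal(0.0, 0.05, (in_dim, units)),
                rng.normal(0.0, 0.05, (units, units)),
                np.zeros(units)) for g in "zrh"}


def gru_layer(X, p, units):
    """Run a GRU over X of shape (batch, time, features); return all states."""
    (Wz, Uz, bz), (Wr, Ur, br), (Wh, Uh, bh) = p["z"], p["r"], p["h"]
    h = np.zeros((X.shape[0], units))
    outputs = []
    for t in range(X.shape[1]):
        x = X[:, t, :]
        z = sigmoid(x @ Wz + h @ Uz + bz)              # update gate
        r = sigmoid(x @ Wr + h @ Ur + br)              # reset gate
        h_cand = np.tanh(x @ Wh + (r * h) @ Uh + bh)   # candidate activation
        h = z * h + (1.0 - z) * h_cand                 # interpolation
        outputs.append(h)
    return np.stack(outputs, axis=1)                   # (batch, time, units)


def build_model(input_dim, code_dim=128, gru_units=64):
    return {"enc": dense(input_dim, code_dim),
            "gru1": gru_layer_params(code_dim, gru_units),
            "gru2": gru_layer_params(gru_units, gru_units),
            "head": dense(gru_units, 1),
            "units": gru_units}


def forward(model, X):
    """X: (batch, time, input_dim) -> one malicious-class score per sequence."""
    W, b = model["enc"]
    codes = np.maximum(0.0, X @ W + b)                 # SAE encoder, per step
    seq1 = gru_layer(codes, model["gru1"], model["units"])
    seq2 = gru_layer(seq1, model["gru2"], model["units"])
    Wo, bo = model["head"]
    return sigmoid(seq2[:, -1, :] @ Wo + bo).ravel()   # scores in (0, 1)
```

Only the encoder half of the SAE appears here, since the decoder is needed during SAE training but not at inference time.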

The trained SAE-GRU model was deployed for real-time intrusion detection in the emulated network using TensorFlow, a Python-based machine learning library with efficient implementations of deep learning architectures. The model was integrated into the existing network monitoring system through RESTful APIs to enable real-time input and analysis of data streams. Real-time inference was optimized by batching incoming network packets and processing each batch through the SAE for compression, followed by GRU-based temporal analysis. The system ran on a cluster of edge computing nodes with moderate GPU support, which distributed the computational workload of IoT traffic processing across multiple nodes. This hardware configuration allowed the model to meet the strict low-latency requirements of real-time anomaly detection.
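Batching for real-time inference usually bounds both batch size and waiting time. The sketch below is one hypothetical way to do this for time-stamped records; `max_size`, `max_wait`, and the `(arrival_time, payload)` record shape are assumptions, since the text does not describe its batching policy.

```python
from typing import Any, Iterable, Iterator, List, Tuple

Record = Tuple[float, Any]  # (arrival time in seconds, feature payload)


def micro_batches(stream: Iterable[Record], max_size: int,
                  max_wait: float) -> Iterator[List[Record]]:
    """Group time-stamped records into batches for SAE-GRU inference.

    A batch is emitted when it reaches max_size records, or when a record
    arrives more than max_wait seconds after the batch's first record.
    """
    batch: List[Record] = []
    for record in stream:
        if batch and record[0] - batch[0][0] > max_wait:
            yield batch          # latency bound reached: flush early
            batch = []
        batch.append(record)
        if len(batch) == max_size:
            yield batch          # size bound reached
            batch = []
    if batch:
        yield batch              # flush the remainder at end of stream


# Usage: two records per batch at most, 100 ms latency bound.
records = [(0.0, "a"), (0.01, "b"), (0.02, "c"), (0.5, "d"), (0.51, "e")]
batches = list(micro_batches(records, max_size=2, max_wait=0.1))
```

This version only checks the latency bound when a new record arrives; a deployed service would also flush on a timer so that a lone record is not held during a quiet period.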

Anomaly detection relied on comparing incoming data streams with the normal behavior patterns learned during training. Once a sequence of compressed feature vectors passed through the GRU, the model output a score $s \in [0, 1]$ representing the likelihood that the sequence was malicious. A thresholding rule was then applied to classify network behavior. The decision threshold $\tau$ was set empirically by analyzing the Receiver Operating Characteristic (ROC) curve during validation, choosing the value that balanced false positives against false negatives and minimized the total classification error. If the output score exceeded $\tau$, the behavior was classified as malicious, as expressed in Eq. (11):

$$\text{class}(s) = \begin{cases} \text{malicious}, & s > \tau \\ \text{normal}, & s \leq \tau \end{cases} \tag{11}$$
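The threshold-selection criterion (minimize false positives plus false negatives on validation data) and the decision rule of Eq. (11) can be sketched as follows. Taking the observed scores, plus negative infinity, as candidate thresholds is our simplification; the function names are hypothetical.

```python
from typing import Sequence, Tuple


def select_threshold(scores: Sequence[float],
                     labels: Sequence[int]) -> Tuple[float, int]:
    """Choose tau minimizing FP + FN under the rule 'malicious if s > tau'.

    labels: 1 = malicious, 0 = normal. Returns (tau, total errors).
    """
    candidates = [float("-inf")] + sorted(set(scores))  # -inf flags everything
    best_tau, best_err = candidates[0], len(labels) + 1
    for tau in candidates:
        fp = sum(1 for s, y in zip(scores, labels) if s > tau and y == 0)
        fn = sum(1 for s, y in zip(scores, labels) if s <= tau and y == 1)
        if fp + fn < best_err:
            best_tau, best_err = tau, fp + fn
    return best_tau, best_err


def classify(score: float, tau: float) -> str:
    """Decision rule of Eq. (11)."""
    return "malicious" if score > tau else "normal"


# Usage on a toy validation set.
val_scores = [0.1, 0.3, 0.35, 0.8, 0.9]
val_labels = [0, 0, 1, 1, 1]
tau, errors = select_threshold(val_scores, val_labels)
```

The same minimum can be read from an ROC curve: at each operating point, FP equals FPR times the number of normal samples and FN equals (1 - TPR) times the number of malicious samples.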

The model also handled false positives and false negatives by maintaining an alert threshold buffer, which reduced the likelihood of false alarms caused by benign fluctuations in network traffic. Upon detecting suspicious patterns indicative of botnet activity, the SAE-GRU model automatically triggered an alert mechanism designed to inform network administrators and initiate response protocols. This mechanism was integrated directly into the IDS to ensure swift and effective responses.
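The text does not specify how the alert threshold buffer works. One plausible interpretation, sketched here as an assumption, is a k-of-n rule: an alert is raised only when at least `k` of the last `n` scores exceed the decision threshold, so a single benign spike is not enough. The class name and default values are hypothetical.

```python
from collections import deque


class AlertBuffer:
    """Alert only if at least k of the last n scores exceed tau (hypothetical)."""

    def __init__(self, tau: float, k: int = 3, n: int = 5):
        if not 1 <= k <= n:
            raise ValueError("require 1 <= k <= n")
        self.tau = tau
        self.k = k
        self.recent = deque(maxlen=n)  # rolling above-threshold flags

    def observe(self, score: float) -> bool:
        """Add one score; return True if an alert should be raised now."""
        self.recent.append(score > self.tau)
        return sum(self.recent) >= self.k


# Usage: an isolated spike is ignored, two close spikes trigger an alert.
buf = AlertBuffer(tau=0.5, k=2, n=3)
results = [buf.observe(s) for s in [0.9, 0.1, 0.2, 0.8, 0.7]]
```

Larger `k` suppresses more false alarms at the cost of a short detection delay, which is the trade-off such a buffer is meant to tune.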

When the SAE-GRU model identifies an anomaly whose score exceeds the predefined decision threshold, it triggers an alert generation process. This process forms an alert packet containing detailed information about the detected anomaly, such as the time of detection, the affected network segments, and a risk assessment score. The packet is then sent to the IDS over a secure communication channel so that the information reaches network administrators promptly and securely.
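An alert packet with the fields listed above can be represented as a small serializable record. In this sketch the field names, the `anomaly_type` default, the JSON encoding, and the use of the model score as the risk score are all assumptions; the secure transport to the IDS (for example, a TLS-protected connection) is outside its scope.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class AlertPacket:
    """Hypothetical alert payload sent to the IDS."""
    detected_at: str               # ISO-8601 detection time (UTC)
    affected_segments: List[str]   # network segments implicated
    risk_score: float              # risk assessment score in [0, 1]
    anomaly_type: str = "botnet"

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


def make_alert(score: float, tau: float,
               segments: List[str]) -> Optional[AlertPacket]:
    """Build an AlertPacket if the score exceeds tau, otherwise return None."""
    if score <= tau:
        return None
    now = datetime.now(timezone.utc).isoformat()
    return AlertPacket(detected_at=now, affected_segments=list(segments),
                       risk_score=round(score, 4))
```

Serializing with sorted keys keeps the payload deterministic, which simplifies signing or hashing the packet before transmission.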

The alert mechanism also included an automated logging system that records every detected event in a centralized log database. The database stores comprehensive details of all alerts to facilitate subsequent analysis and forensic investigation, including timestamps, sensor IDs, the type of detected anomaly, severity levels, and the actions taken in response. This logging is essential for tracking the effectiveness of the detection system, auditing system responses, and refining detection strategies over time.
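A minimal version of the centralized log can be sketched with Python's built-in `sqlite3` module, which stands in here for whatever database the deployment used (the text does not name it). The table and column names are hypothetical and mirror the fields listed above; the sample rows are illustrative only.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS alert_log (
    id        INTEGER PRIMARY KEY AUTOINCREMENT,
    ts        TEXT NOT NULL,   -- detection timestamp (ISO-8601)
    sensor_id TEXT NOT NULL,
    anomaly   TEXT NOT NULL,   -- type of detected anomaly
    severity  TEXT NOT NULL,   -- e.g. high / medium / low
    action    TEXT NOT NULL    -- response taken
)
"""


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def log_event(conn, ts, sensor_id, anomaly, severity, action):
    # Parameterized insert; the connection context manager commits on success.
    with conn:
        conn.execute(
            "INSERT INTO alert_log (ts, sensor_id, anomaly, severity, action) "
            "VALUES (?, ?, ?, ?, ?)",
            (ts, sensor_id, anomaly, severity, action),
        )


def events_by_severity(conn, severity):
    cur = conn.execute(
        "SELECT ts, sensor_id, anomaly, action FROM alert_log "
        "WHERE severity = ? ORDER BY ts", (severity,))
    return cur.fetchall()


# Usage with illustrative events.
conn = open_log()
log_event(conn, "2024-01-01T00:00:05Z", "s-17", "port_scan", "medium", "enhanced monitoring")
log_event(conn, "2024-01-01T00:00:01Z", "s-03", "c2_contact", "high", "isolate node")
log_event(conn, "2024-01-01T00:00:09Z", "s-21", "ddos", "high", "block source IPs")
```

Queries such as `events_by_severity` support the auditing and forensic uses mentioned above; a production deployment would use a shared server-based store rather than an in-memory database.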

The IDS was programmed to parse incoming alerts and categorize them by severity. Depending on the severity and the specific characteristics of the detected anomaly, predefined mitigation strategies are initiated automatically. These strategies include, but are not limited to, autonomically reconfiguring firewalls to block malicious traffic, segmenting the network to isolate compromised devices, and deploying additional monitoring to the affected zones. The system employs a rule-based decision algorithm, outlined in Table 6, to determine the appropriate response from the risk assessment score and the type of anomaly detected.
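The tiered decision logic of Table 6 can be sketched as a simple threshold cascade. The numeric thresholds are hypothetical (the section does not state them), the action strings paraphrase the table's high- and moderate-priority rows, and the lowest tier is a hypothetical default to be replaced with the low-priority actions from Table 6; type-specific routing by anomaly is omitted.

```python
HIGH_RISK = 0.8    # hypothetical threshold values; not stated in the text
MEDIUM_RISK = 0.5

HIGH_ACTIONS = [
    "isolate affected network nodes",
    "block suspected malicious IP addresses",
    "deploy security patches to vulnerable systems",
    "terminate unauthorized connections",
    "alert security operations centers",
]
MEDIUM_ACTIONS = [
    "enhance monitoring of suspected traffic",
    "temporarily restrict access for suspicious accounts",
    "update firewall rules for unusual traffic patterns",
    "run vulnerability scans on affected segments",
    "start detailed forensic analysis",
]


def select_response(anomaly_type: str, risk_score: float) -> dict:
    """Map a risk score to a response tier and its actions (cf. Table 6)."""
    if risk_score > HIGH_RISK:
        tier, actions = "high", HIGH_ACTIONS
    elif risk_score > MEDIUM_RISK:
        tier, actions = "moderate", MEDIUM_ACTIONS
    else:
        # Hypothetical default; substitute the low-priority actions of Table 6.
        tier, actions = "low", ["log and continue monitoring"]
    return {"anomaly": anomaly_type, "tier": tier, "actions": list(actions)}
```

Keeping the thresholds and action lists as data rather than code makes it straightforward to tune the policy without touching the decision logic.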

| Input: Anomaly type, Risk score |

| Output: Mitigation action |

| begin |

| if Risk score > High risk threshold then |

| Execute high-priority response protocol |

| Actions: Immediate isolation of affected network nodes, automated blocking of suspected malicious IP addresses, rapid autonomic deployment of security patches to vulnerable systems, forceful termination of unauthorized connections, and real-time alerts to security operations centers. |

| else if Risk score > Medium risk threshold then |

| Execute moderate-priority response protocol |

| Actions: Enhanced monitoring of suspected traffic, temporary restriction of network access privileges for suspicious accounts, autonomic updating of firewall rules to restrict unusual traffic patterns, automated vulnerability scans on potentially affected segments, and initiation of detailed forensic analysis of the gathered intelligence. |

| else |