An online intelligent detection method for slurry density in concept drift data streams based on collaborative computing

- Published

- Accepted

- Received

- Academic Editor

- Paulo Jorge Coelho

- Subject Areas

- Algorithms and Analysis of Algorithms, Artificial Intelligence, Real-Time and Embedded Systems, Neural Networks

- Keywords

- Concept drift, Slurry density, Sliding window, Forgetting mechanism, Stochastic configuration network

- Copyright

- © 2025 Wang et al.

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2025. An online intelligent detection method for slurry density in concept drift data streams based on collaborative computing. PeerJ Computer Science 11:e2683 https://doi.org/10.7717/peerj-cs.2683

Abstract

In industrial environments, slurry density detection models often suffer from performance degradation due to concept drift. To address this, this article proposes an intelligent detection method tailored for slurry density in concept drift data streams. The method begins by building a model using Gaussian process regression (GPR) combined with regularized stochastic configuration. A sliding window-based online GPR is then applied to update the linear model’s parameters, while a forgetting mechanism enables online recursive updates for the nonlinear model. Network pruning and stochastic configuration techniques dynamically adjust the nonlinear model’s structure. These approaches enhance the mechanistic model’s ability to capture dynamic relationships and reduce the data-driven model’s reliance on outdated data. By focusing on recent data to reflect current operating conditions, the method effectively mitigates concept drift in complex process data. Additionally, the method is applied in industrial settings through collaborative computing, ensuring real-time slurry density detection and model adaptability. Experimental results on industrial data show that the proposed method outperforms other algorithms in all density estimation metrics, significantly improving slurry density detection accuracy.

Introduction

The mineral processing workflow comprises several stages, including raw ore transportation, crushing and screening, grinding and classification, beneficiation, and dewatering (Hodouin et al., 2001). Among these, grinding and classification serve as a critical link between crushing and beneficiation, significantly influencing the overall workflow. Key equipment in grinding operations includes ball mills and hydrocyclones, whose performance directly affects grinding efficiency (Mukhitdinov et al., 2024; Bradley, 2013). The hydrocyclone feed density is a vital parameter impacting its overflow particle size. Higher feed density increases slurry viscosity and resistance, resulting in coarser overflow particles and reduced classification efficiency. On the other hand, lower feed density improves classification efficiency but reduces throughput while increasing water and power consumption. Therefore, accurate monitoring and control of hydrocyclone feed density are essential for optimizing grinding and classification efficiency (Reddy et al., 2023).

Slurry density is a key metric in grinding and classification, directly influencing metal recovery rates, concentrate grades, production efficiency, and process stability (Whitworth et al., 2022). Current detection methods primarily rely on manual laboratory techniques and densitometers, with limited exploration of artificial intelligence (AI) applications. The pycnometer method is the most common manual technique, where a pycnometer is filled with slurry, weighed, and its density calculated using a formula. Densitometers, on the other hand, use precise instruments to determine material density based on physical principles. Recently, advances in AI technologies have enabled some innovative approaches for slurry density detection. For example, the combination of Prompt Gamma Neutron Activation Analysis (PGNAA) technology and artificial neural networks (ANN) has been proposed for online detection (Huang et al., 2024). Similarly, an approach based on closed-loop input error and deep learning offers a novel method for real-time slurry concentration prediction (Han et al., 2024).

In mineral processing, operational fluctuations such as variations in feed rate and water addition often lead to concept drift, causing slurry density detection models to degrade in performance (Bayram, Ahmed & Kassler, 2022). To address this issue, researchers have developed methods to enhance model adaptability to changing data distributions. These include selecting training samples that represent recent data distributions (Fan, 2004), employing online learning algorithms to update model parameters continuously, dynamically adjusting model structures for new data features (Yang & Fong, 2015), and applying weighted updates to reduce the influence of outdated data (Sen, 2014; Martínez-Rego et al., 2011). These techniques ensure model accuracy and adaptability in dynamic environments. This study investigates a modeling approach that combines mechanistic and data-driven methods to address the challenges of concept drift and meet the demands for accurate, real-time slurry density detection in mineral processing (Cui et al., 2024). We propose an online intelligent detection method for slurry density in concept drift data streams, leveraging collaborative computing. This approach is not limited to slurry density detection and can be extended to monitor other industrial process variables, enhancing the accuracy of industrial parameter detection and improving production efficiency (Wang et al., 2023).

Process description and characteristics analysis

Grinding and classification are among the most critical stages in mineral processing (Yuan et al., 2020). These stages typically involve a closed grinding circuit comprising ball mills, hydrocyclones, and slurry pumps. The primary grinding circuit includes a ball mill and a spiral classifier, while the secondary circuit consists of a ball mill, hydrocyclone, and pump sump. In the primary circuit, ore is mixed with water and ground in the ball mill, after which the slurry is classified by the spiral classifier. Coarse particles are returned to the ball mill for further grinding, while finer particles proceed to the secondary circuit. In the sump, additional water is added, and the slurry is pumped into the hydrocyclone. The hydrocyclone uses centrifugal force to separate the slurry, discharging coarse particles for further grinding and sending finer particles to subsequent beneficiation processes (Wang & Chai, 2019).

The mechanistic analysis of slurry flow in pipelines focuses on selecting auxiliary variables and building a comprehensive model for slurry density detection (Ma, Wang & Peng, 2024). Resistance losses are categorized based on boundary conditions. For smooth boundaries, frictional resistance arises from boundary-fluid interactions and fluid viscosity. Local resistance losses occur due to sudden boundary changes, such as pipe bends, valves, or cross-sectional variations, which can alter flow paths and velocities, potentially causing vortices. Since slurry density detection is performed in vertical pipelines, local resistance losses are negligible, and only frictional resistance losses are included in calculations (Peet, Sagaut & Charron, 2009).

In an ideal scenario without resistance losses, the pressure difference is given by:

(1)

In Eq. (1), is the slurry density, g is gravitational acceleration, and is the height difference of the liquid surface. During slurry flow, frictional resistance losses occur, which are described by:

(2)

In Eq. (2), is the frictional resistance coefficient, L is the pipe length, D is the pipe diameter, V is the average flow velocity, and g is gravitational acceleration. In actual industrial processes, the total pressure difference can be expressed as:

(3)

In industrial production, density measurement commonly relies on pressure differential signals from sensors placed at different heights. However, directly using these signals as inputs for detection models may reduce accuracy (Li et al., 2020). According to Bernoulli’s principle, the total pressure in a fluid remains constant; as flow velocity increases, static pressure decreases. Slurry pressure meters, however, measure only static pressure.

As inlet velocity rises, the dynamic pressure difference between two points also increases. Traditional pressure sensors convert pressure into electrical signals by inducing deformation in a force-sensitive element, which changes resistance in a Wheatstone bridge and generates a potential difference output (Xu et al., 2018). While effective for measuring static pressure differences, this method cannot capture dynamic pressure changes, potentially reducing measurement accuracy if differential signals are used directly in density models. Additionally, system and random errors in pressure measurements necessitate corrections to the high-pressure side absolute pressure and the low-pressure side absolute pressure . The pressure difference measured at time t is adjusted as follows:

(4)

In Eq. (4), A and B are correction coefficients for high pressure and low pressure, respectively; C is the offset term, and represents unknown nonlinear errors in pressure measurement. The average flow velocity V has a nonlinear relationship with the slurry pump current i and frequency f:

(5)

This relationship can be expressed as follows:

(6)

In Eq. (6),

where includes measurement errors and unknown nonlinear terms in the slurry flow process.

Mechanism and data-driven online intelligent detection method for slurry

Classification and handling methods of concept drift

In industrial environments, slurry density detection models face the challenge of concept drift, which refers to dynamic changes in data distribution or characteristics over time. Concept drift often arises from external factors such as variations in raw material properties, production processes, or equipment aging. To maintain prediction accuracy, detection models must adapt continuously to these evolving conditions.

Concept drift is generally categorized as follows:

-

1)

Sudden drift: This involves rapid and significant changes in data features over a short time, often caused by abrupt shifts in raw material properties, equipment failures, or emergency adjustments. Such changes can lead to sudden prediction errors, requiring models to quickly adapt.

-

2)

Gradual drift: Gradual drift occurs when data features evolve slowly over time, such as equipment aging or long-term fine-tuning of process parameters. Although these changes may not immediately affect data distribution, model performance will degrade if left unaddressed. Dynamic update mechanisms are commonly used to adapt to these gradual changes.

-

3)

Incremental drift: This refers to stable, cumulative changes in data distribution, such as progressive variations in slurry concentration across production batches. While each change is small, the cumulative effect can shift the data distribution, necessitating models capable of incremental learning.

-

4)

Recurrent drift: Recurrent drift arises from cyclical factors like periodic equipment cleaning or routine production adjustments. Handling this type of drift requires models to recognize and leverage cyclical patterns to make appropriate adjustments.

In industrial slurry density detection, concept drift is common and often involves multiple drift types coexisting, placing high demands on model robustness and adaptability. In this study, the dataset primarily exhibits sudden and gradual drift. Sudden drift arises from abrupt changes in raw material properties, equipment failures, or emergency operational adjustments, leading to rapid shifts in data features. Gradual drift, in contrast, involves slow changes over time due to equipment aging or minor adjustments in process parameters. To address these drift types, the proposed detection model incorporates a sliding window mechanism and a forgetting mechanism to dynamically update model parameters. For sudden drift, the sliding window mechanism focuses on recent data, discarding outdated information to enable quick adaptation to abrupt changes. The window size is dynamically adjusted to promptly capture new feature distributions during drift events. Recursive formulas are also used to update key parameters online, ensuring the model responds without delays. For gradual drift, the forgetting mechanism reduces the weight of historical data over time, enhancing the model’s sensitivity to current data. By dynamically adjusting the forgetting factor, the model ensures smooth updates for gradual changes while avoiding overreactions to short-term fluctuations. By combining these mechanisms, the proposed model effectively handles diverse types of concept drift in complex industrial environments, significantly improving the accuracy and stability of slurry density detection.

Establishing and calibrating the comprehensive model for slurry density

In dynamic data environments, concept drift occurs when the statistical properties of data change over time, posing challenges for density detection models. This article proposes an intelligent detection algorithm for streaming data, combining a mechanistic model based on Gaussian process regression (GPR) and a data-driven model (Wei et al., 2022) based on a regularized stochastic configuration (RSC) Network for offline learning (Zhang & Wang, 2021). Initially, a subset of the data is selected to establish the initial model. Subsequently, the linear and nonlinear models are updated with streaming data, and the results of both models are combined to obtain the final slurry density detection value (Zhang et al., 2024). As new samples arrive, the linear model parameters are updated online using a recursive formula, yielding a linear model estimate and its variance . The nonlinear model’s output weights are updated online using the teacher signal and the variance of the linear estimate as labels, without altering the model structure. This provides the nonlinear model’s density estimate . If the estimate falls outside the confidence interval , the nonlinear model structure is dynamically adjusted to improve generalization performance. Otherwise, the overall model is updated.

Mechanism-based model using online Gaussian process regression

The mechanistic model, representing the linear component, is based on the physical principles of slurry flow in pipelines (Lui, Liu & Xie, 2022). Using Gaussian process regression with a sliding window mechanism (OGPRSWM), the linear model updates its parameters in real-time. This approach reduces the influence of outdated data, improves parameter estimation, and ensures the model remains accurate and up-to-date (Gu, Fei & Sun, 2020).

Initially, GPR is employed to identify the linear component of slurry density (Cao et al., 2023). When input data at time is provided, the probability distribution of the mechanism model’s output can be obtained as follows:

(7)

In Eq. (7), , represents the total number of training samples in the data pool. and denote the input and output data used for training the linear model up to a given time, respectively. and represent the input and output data for training the linear model at a specific time; is the estimated result of the linear model, is the variance estimated by the Gaussian process regression.

Subsequently, an online Gaussian process regression with a sliding window mechanism is applied. During the initialization phase, dataset is used to construct the initial linear model, and the posterior distribution of parameters is estimated using , the number of training samples in the data pool. and denote the input and output data for training the linear model up to time , while and represent the input and output data at time :

(8)

In Eq. (8),

After adding new samples and discarding older historical data, dataset is updated to , The newly added sample is , and the discarded sample is denoted as ; Following the update of the data samples, is updated to . The key issue in updating the linear model is to update to , where is:

(9)

The computational complexity of primarily stems from matrix multiplication, with the original expression having a complexity of . Let represent the sliding window size; the complexity of the recursive computation is . Based on , the derived recursive formula is used for online updates of the linear model to reduce computational load and improve efficiency. By performing matrix inversion on , the updated parameters are obtained, and the estimated density value and variance for the new incoming data sample are calculated as follows:

(10)

Data-driven model based on online regularized stochastic configuration networks

This article presents a novel learning algorithm, the Forgetting Mechanism Regularized Stochastic Configuration (FMRSC) algorithm, to address concept drift and enable online learning for data-driven models based on Regularized Stochastic Configuration (RSC) Networks (Luo et al., 2022). Unlike the Online Sequential Stochastic Configuration (OSSC) algorithm (Chen & Li, 2022), the proposed FMRSC method processes streaming data without requiring the retraining of the entire historical dataset. It achieves this by integrating regularization and forgetting mechanisms into the OSSC algorithm. Additionally, FMRSC dynamically adjusts the model structure using network pruning and stochastic configuration to handle concept drift effectively. This approach leverages recent data, minimizes reliance on outdated information, and enhances processing efficiency and adaptability (Dai, Liu & Wang, 2024).

Online parameter update strategy

The RSC algorithm, used as the data-driven method in this study, is an improved version of the Stochastic Configuration Network (SCN) (Wang & Li, 2017). By incorporating regularization techniques, RSC effectively mitigates overfitting, producing more robust and generalized neural network models.

The output of the hidden layer node and the supervision mechanism , q = 1, 2 are defined as follows:

(11)

(12)

In Eqs. (11) and (12), . Given , let and C be the regularization coefficients. Then, perform stochastic configurations. In each configuration, randomly select the input weights and bias for the hidden layer node within a certain range, and compute . If is satisfied, store , , ; if none of the configurations meet the condition, choose a larger value and reconfigure. After completing the random configurations, select the and corresponding to the largest as the input weights and bias for the node.

The estimated value of the data-driven model at time is expressed as:

(13)

Next, the parameters are updated online using a forgetting mechanism. Given dataset , during the initialization phase of the nonlinear model, is used to construct the initial nonlinear model. Here, and denote the input and output data for training the nonlinear model up to time , and and represent the input and output data at time . If a regularized random configuration network with hidden layer nodes is constructed based on these sets of training data, the optimization objective for the output layer weights is as follows:

(14)

Let represent the output of the hidden layer nodes of the nonlinear model initialized with training data from Group . Let denote the regularization term coefficient. The solution can be obtained as follows:

(15)

When data from Group reaches the model, it is necessary to update the weights with the latest data. To mitigate the influence of past data on the model parameter updates, a forgetting mechanism has been introduced. Given the fixed structure of the neural network and the constant weights of the input layer, the optimization objective for obtaining new output layer weights is as follows:

(16)

Let represent the forgetting factor, represent the hidden layer node outputs calculated from the Group dataset, and be given.

The solution can be obtained as follows:

(17)

In Eq. (17), .

Let , then we obtain:

(18)

From this, we can derive the recursive formula for the output weights that incorporates a forgetting mechanism. Similarly, when the dataset is fed into the model, we have:

(19)

In Eq. (19), .

The recursive formula for is:

(20)

In Eq. (20), .

Dynamic adjustment strategy for model structure

Online adjustment of output layer parameters helps the model adapt to new data. However, as operational conditions change and data distributions shift, the neural network may struggle to handle new data characteristics. To address this, a dynamic structural adjustment strategy based on network pruning is proposed. This strategy optimizes the model structure and parameters, enhancing the adaptability of Stochastic Configuration Networks.

Assuming that a regularized stochastic configuration network with L hidden layer nodes has been constructed based on the training data set , the output of the neural network is given by:

(21)

In Eq. (21), represents the output of a regularized stochastic configuration network without network structure adjustment, while represents the output weight of the hidden node , the activation function of the hidden node , the input weight of the hidden node , and the bias of the hidden node , respectively. When new data flows into the model and the accuracy remains unsatisfactory after updating the parameters of both the linear and nonlinear models, an adjustment of the structure of the nonlinear model is necessary. The adjustment criterion can be described as follows:

(22)

When the difference between the estimated values of the nonlinear model and the nonlinear labels exceeds three standard deviations, the model structure requires a dynamic adjustment. After pruning the hidden node, the model output is:

(23)

Thus, the change in network residual can be expressed as:

(24)

By comparing the impact of each hidden layer node on the change in model output residuals and sorting them by , we select and prune the nodes with the least impact. The value of satisfies and , where is the pruning coefficient that determines the number of nodes to be pruned. After pruning, the output of the nonlinear model is represented as , and the current network residual is as follows:

(25)

Incorporating new nodes based on the supervision mechanism. Subsequently, compute the output weights as follows:

(26)

, denotes the hidden layer output of the nonlinear model at the hidden node trained using dataset . After incorporating the new nodes, the network output is:

(27)

Next, determine if the network output error meets the predefined error criteria. If the criteria are satisfied, the model construction is complete; otherwise, new hidden layer nodes will be added based on a supervisory mechanism to minimize the output error until the termination condition is met.

Adaptive intelligent detection method for slurry density based on collaborative computing

With the rapid advancement of Internet of Things (IoT) technology, we have entered an era of ubiquitous connectivity. Innovations such as cloud computing, big data, and artificial intelligence are transforming industrial applications through Internet platforms. In this context, edge-cloud collaboration has emerged as a crucial technology. Unlike traditional frameworks, edge computing enhances data processing by performing initial tasks near the data source (e.g., equipment or sensors). Edge devices handle data acquisition and preliminary analysis, while edge control systems conduct initial data processing. This reduces the burden on central cloud servers, improving processing speed and efficiency. By addressing the limitations of traditional edge-cloud collaboration in real-time data processing, this approach enables efficient, real-time analysis and decision-making (Zhou et al., 2021). Edge-cloud collaboration has advanced industrial automation and intelligence, laying a strong foundation for Industry 4.0.

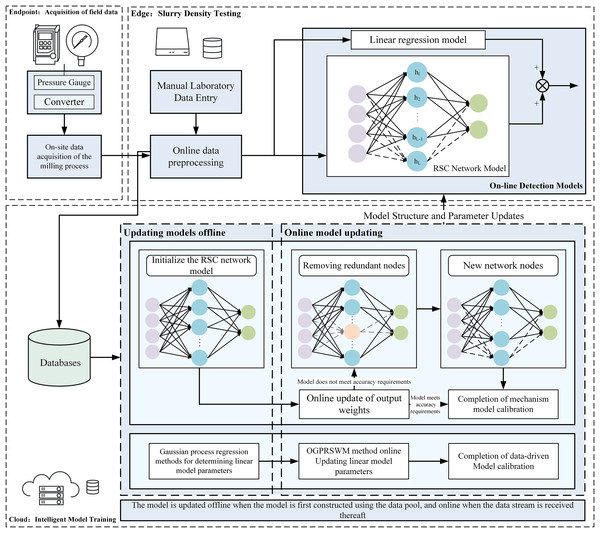

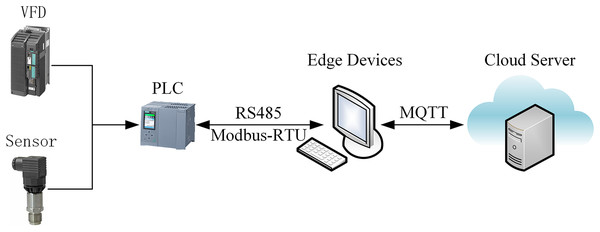

As illustrated in Fig. 1, the proposed online intelligent detection method for slurry density uses an edge-cloud collaborative framework to enhance real-time monitoring and intelligent analysis. Edge devices acquire and preprocess data, ensuring system stability and responsiveness. The edge control system processes data, runs online detection models, and allows operators to monitor key parameters such as slurry pump current, frequency, pressure, and density in real time. Operators can also input manual assay values via an interactive interface for model updates. The edge system’s low latency and real-time capabilities meet the demands of industrial environments. Meanwhile, the cloud platform provides centralized computing power, managing databases and running slurry density detection software. It updates the initial model offline or online and deploys the updated model back to the edge for real-time detection. This architecture leverages the cloud’s robust resources for iterative model optimization and centralized data management.

Figure 1: Collaborative computing-based pulp density intelligent detection system structure diagram.

Experimental analysis

The process data in this study were collected from the grinding and classification stages of an actual mineral processing operation using industrial instruments. High-pressure sensors, low-pressure sensors, motor current, and motor voltage transmitted data via 4-20 mA signals to a Siemens S7-1500 PLC. The PLC used the Modbus-RTU protocol to communicate with edge servers, transferring real-time field data. These data captured various operating conditions, such as changes in raw ore properties, equipment aging, and fluctuations in process parameters. Manual data were obtained through periodic on-site assays, covering slurry densities ranging from 1,000 to 1,500 kg/m3. Variations were influenced by operational changes, such as the addition of ore or water. Measurement errors, caused by instrument limitations and environmental factors, were inevitable. To improve model performance, significant outliers were removed by cross-referencing with manual assay results. The cleaned dataset contained 800 samples, split in a 1:3 ratio for initial model training and streaming data. Min-Max Normalization was applied to remove dimensional unit interference and standardize the data for model training. Let , and the data were processed using Eq. (28):

(28)

The dataset exhibited both sudden and gradual concept drift. Sudden drift resulted from abrupt changes, such as ore property variations, equipment failures, or emergency operational adjustments. Gradual drift arose from factors like equipment aging or long-term parameter fine-tuning. During the evaluation phase, the dataset was fed sequentially into the model as a data stream, maintaining the chronological order of collection. After processing each data point, the estimation error was calculated, and the model was updated. RMSE and MAE were computed cumulatively to compare different models’ performance, demonstrating the proposed method’s robustness under various drift scenarios.

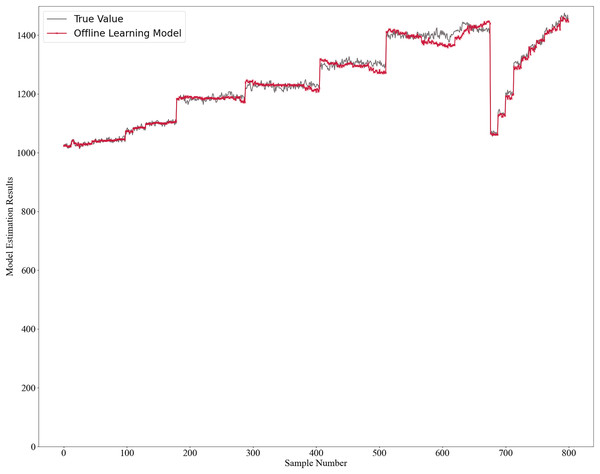

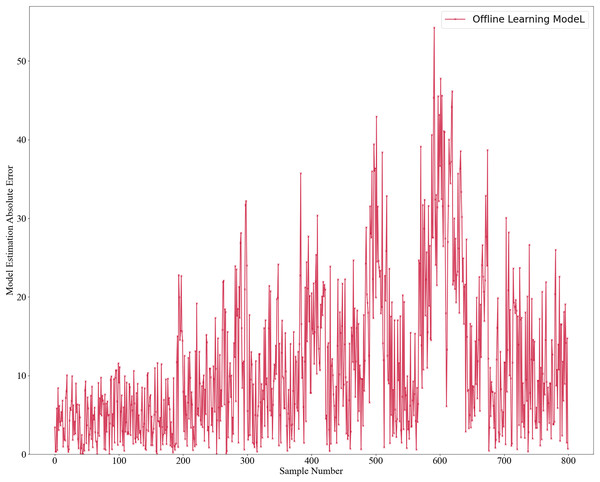

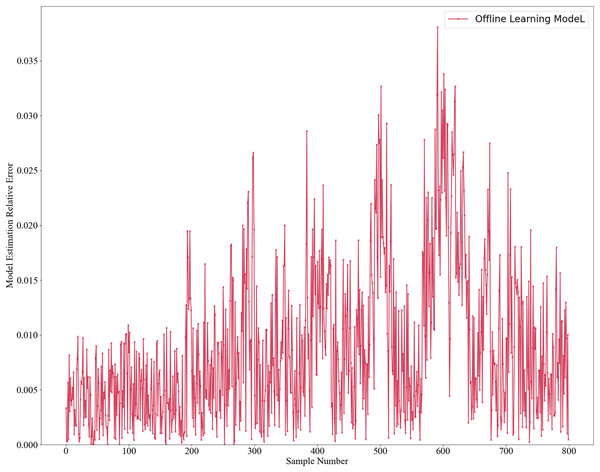

The initial model was trained using two offline learning methods: GPR for the mechanistic model and RSC Network for the data-driven model. Once trained, the model is not further updated. The model estimates’ results are shown in Fig. 2, with absolute errors in Fig. 3 and relative errors in Fig. 4. In the first 180 samples, conditions were relatively stable, and the model achieved high accuracy, with most absolute errors under 10 and relative errors below 1%. However, for samples 180–200, significant operational changes led to poor estimates, suggesting the model failed to capture new data distribution features. In the remaining dataset, the model’s performance deteriorated further, highlighting the need for continuous learning to address frequent changes in operational conditions. This degradation reflects a concept drift phenomenon. To mitigate this, we propose an algorithm enabling online updates to adapt quickly to new distributions, ensuring high performance in industrial applications.

Figure 2: Offline learning model estimation results.

Figure 3: Absolute error estimation of offline learning model.

Figure 4: Relative error estimation of offline learning model.

To demonstrate the effectiveness and superiority of the proposed intelligent detection method for concept drift data streams, we compared our method, OGPRSWM-FMRSC, with several other models. The linear model used is the online Gaussian process regression with sliding window mechanism (OGPRSWM), and the nonlinear model is a Regularized Stochastic Configuration Network (RRCN) with a forgetting mechanism. The alternative models evaluated include OGPR-FMRSC, which uses a standard Online Gaussian Process Regression (OGPR) without the sliding window, retaining historical data. The key parameter update formula is shown in Eq. (29), and the nonlinear model is the same as our proposed algorithm.

(29)

OGPRSWM-OSSC uses OGPRSWM for the linear model and an Online Sequential Stochastic Configuration Network (OSSC) for the nonlinear model. OGPRSWM-OSRSC utilizes OGPRSWM for the linear model and an Online Sequential Regularized Stochastic Configuration Network (OSRSC) for the nonlinear model. The output weights are updated online as follows:

(30)

In Eq. (30), ; OGPRSWM-FWRSC incorporates OGPRSWM for the linear model and updates the output weights of the nonlinear model using the proposed online update method without dynamic structural adjustments. We evaluated the models using metrics such as R2, minimum error frequency, , MAE, RMSE, true positive rate (TPR), true negative rate (TNR), and mean relative error (MRE).

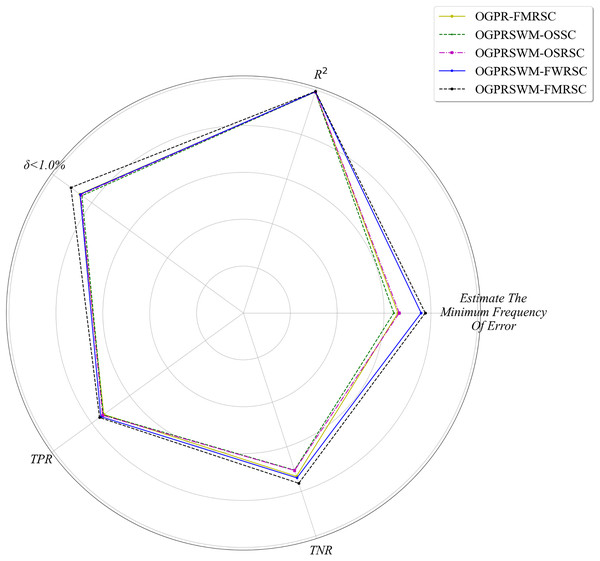

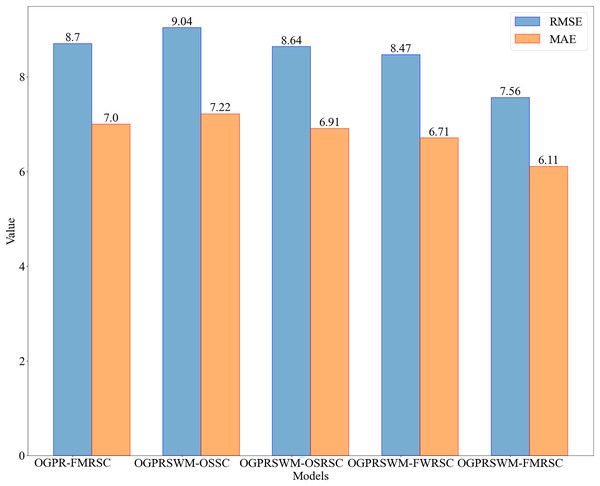

Figure 5 compares R2, minimum error frequency, TPR, TNR, and A across different models, while Fig. 6 compares RMSE and MAE. Overall, OGPRSWM-FMRSC outperformed the other models in all metrics. Specifically, the sliding window mechanism in OGPRSWM-FMRSC proved effective in handling concept drift, as evidenced by its superior performance compared to OGPR-FMRSC. The comparative performance of OGPRSWM-FMRSC, OGPRSWM-FWRSC, OGPRSWM-OSRSC, and OGPRSWM-OSSC sequentially declined, highlighting the importance of dynamic structure adjustment and the combination of the forgetting mechanism with regularized least squares in enhancing model performance.

Figure 5: Comparison of different models in terms of R2, minimum estimation error frequency, TPR, TNR and Pδ<1.0%.

Figure 6: Comparison of RMSE and MAE among different models.

Table 1 presents the performance evaluation metrics of the five models for slurry density detection. The initial condition of the test dataset is labeled as Condition 1, with subsequent significant density changes due to sample addition labeled as Conditions 2, 3, and 4. Condition 5 begins around sample number 680, reflecting multiple sample additions over a short period. Table 2 shows the MRE of each model under different conditions. OGPRSWM-FMRSC demonstrated superior performance, with the lowest MAE and RMSE of 6.11 and 7.56, respectively. Its R2 value reached 99.40%, indicating a high fit between the model’s estimates and actual data. The OGPRSWM-FMRSC model also had 91% of samples with a relative error below 1.0%, and its TPR and TNR were 75.88% and 76.39%, respectively, outperforming the other models.

| Model | Estimate the minimum frequency of error | MAE | RMSE | TPR% | TNR% | R2% | Pδ<1.0% |

|---|---|---|---|---|---|---|---|

| OGPRSWM-FMRSC | 22.17% | 6.11 | 7.56 | 75.88% | 76.39% | 99.40% | 91.00% |

| OGPR-FMRSC | 18.83% | 7.00 | 8.70 | 73.63% | 73.26% | 99.20% | 86.33% |

| OGPRSWM-OSSC | 18.33% | 7.22 | 9.04 | 73.95% | 70.49% | 99.14% | 85.16% |

| OGPRSWM-OSRSC | 19.00% | 6.91 | 8.64 | 74.28% | 70.64% | 99.22% | 85.83% |

| OGPRSWM-FWRSC | 21.67% | 6.55 | 8.27 | 75.24% | 73.96% | 99.28% | 86.16% |

| Model | Operating conditions 1 | Operating conditions 2 | Operating conditions 3 | Operating conditions 4 | Operating conditions 5 |

|---|---|---|---|---|---|

| Offline Model | 0.7983% | 1.3347% | 1.6825% | 2.0699% | 2.4299% |

| OGPRSWM-FMRSC | 0.5269% | 0.5072% | 0.4656% | 0.4383% | 0.4507% |

| OGPR-FMRSC | 0.6253% | 0.4927% | 0.5720% | 0.4864% | 0.5691% |

| OGPRSWM-OSSC | 0.6276% | 0.5428% | 0.5614% | 0.5315% | 0.5499% |

| OGPRSWM-OSRSC | 0.5975% | 0.5076% | 0.5694% | 0.5087% | 0.5133% |

| OGPRSWM-FWRSC | 0.5439% | 0.6166% | 0.5499% | 0.4394% | 0.4954% |

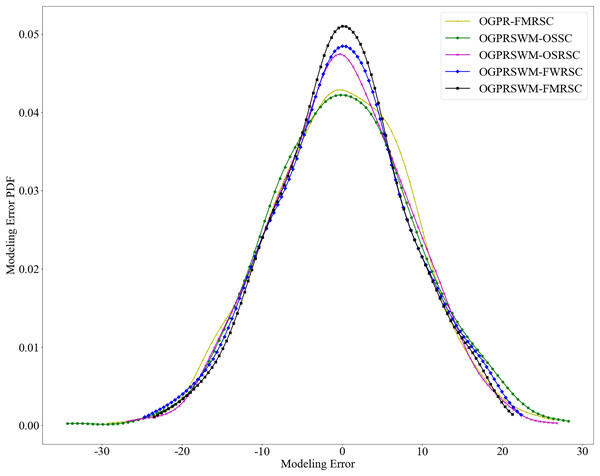

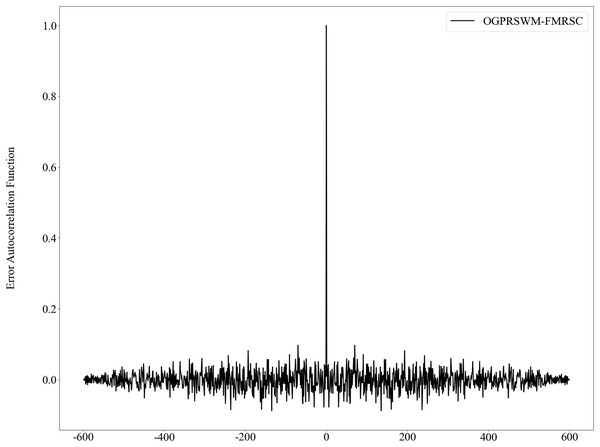

Figure 7 presents the probability density function of estimation errors for the five models. The error distribution for OGPRSWM-FMRSC is centered around zero and exhibits a unimodal peak consistent with Gaussian distribution characteristics, suggesting that the error sequence approximates randomness. Figure 8 presents the autocorrelation function of OGPRSWM-FMRSC’s error, indicating that it approaches white noise levels, with errors primarily attributed to random factors rather than poor model generalization. This suggests that OGPRSWM-FMRSC has superior estimation and generalization capabilities, making it more suitable for dynamic industrial environments and potentially more stable under specific conditions compared to the other models.

Figure 7: Comparison of estimation error PDFs for different models.

Figure 8: Self-correlation function of estimation error for the OGPRSWM-FMRSC model.

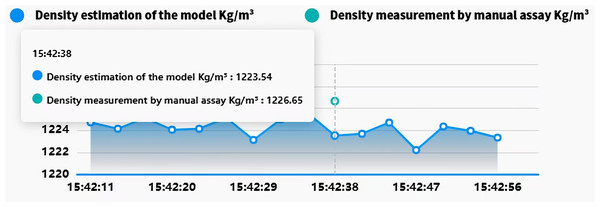

Industrial application analysis

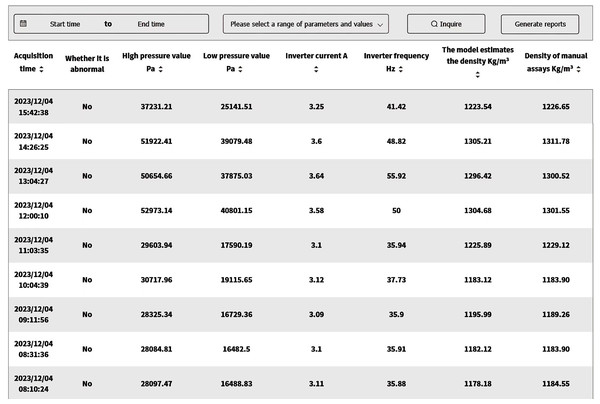

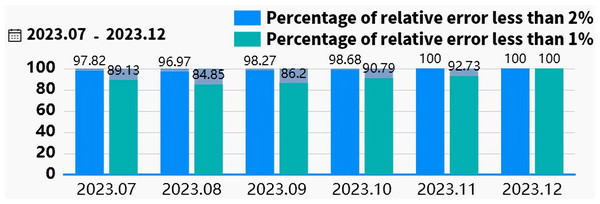

In industrial applications, the Siemens S7-1500 PLC interfaces with edge devices via the RS485 bus and Modbus-RTU protocol to collect and transmit real-time field data. Edge devices utilize TIAV16 and Modscan32 software to simulate Modbus communication, enabling remote monitoring and control of field equipment. The edge data is transmitted to the cloud for analysis and storage using a proprietary cloud protocol, as illustrated in Fig. 9. To enable online slurry density detection and provide a intuitive interface, a software application based on Vue, Spring Boot, and Flask frameworks was developed. This software supports data visualization, storage, and query functions. It has been deployed for over 5 months at a beneficiation plant in Shenyang. The interface design and human-machine interaction prioritize efficiency and ease of use, optimizing operational procedures, reducing operational difficulty, and significantly reducing the frequency of operator errors, thereby enhancing operational safety and production efficiency. As shown in Fig. 10, the real-time slurry density detection module displays the slurry density trend calculated by the intelligent detection model alongside scatter points representing manually obtained density values. By hovering the mouse over any data point reveals the specific slurry density value at that point. Figure 11 illustrates a table from the software interface, showing the most recent nine sets of comparison values obtained through random sampling and testing post-system deployment. In these nine sets, the relative error between the estimated slurry density and the actual test results did not exceed 1%. Figure 12 illustrates a bar chart distribution of the slurry density estimation errors over the 5 months of operation, and Table 3 details the corresponding error analysis data. With an acceptable relative error threshold of less than 2%, all months showed a qualification rate above 95%, indicating that the proposed adaptive intelligent detection system based on collaborative computing performed effectively in industrial settings, significantly enhancing production efficiency.

Figure 9: Hardware platform framework.

Figure 10: Pulp density real-time monitoring module demonstration.

Figure 11: Sampling inspection result table.

Figure 12: Bar chart of the pulp density estimation error after more than 5 months of operation.

| Month | Monthly Laboratory Tests | Average Density (Manual Laboratory Test) |

Average Absolute Error | Maximum Absolute Error | Pδ<1.0% | Pδ<2.0% (Satisfactory Rate) |

|---|---|---|---|---|---|---|

| 2023.07 | 92 | 1,311.52 | 7.32 | 30.04 | 89.13% | 97.82% |

| 2023.08 | 33 | 1,295.63 | 6.98 | 27.43 | 84.85% | 96.97% |

| 2023.09 | 58 | 1,310.46 | 7.54 | 34.76 | 86.20% | 98.27% |

| 2023.10 | 76 | 1,255.34 | 6.91 | 25.78 | 90.79% | 98.68% |

| 2023.11 | 55 | 1,287.64 | 7.11 | 19.94 | 92.73% | 100.00% |

| 2023.12 | 9 | 1,234.58 | 3.98 | 6.73 | 100.00% | 100.00% |

Conclusion

This study addresses concept drift in slurry density detection models within industrial environments and proposes an intelligent detection algorithm for concept drift data streams. Operational changes over time often lead to a gradual decline in model performance. To address this, a sliding window mechanism is incorporated into the linear model, with recursive formulas derived for real-time parameter updates. This approach minimizes the impact of outdated data on current model accuracy. For nonlinear models, a forgetting mechanism is introduced, with recursive formulas developed for online updating of output weights, reducing the influence of historical data on new detections. Additionally, network pruning and stochastic configuration methods are used to optimize the model structure, enhancing its adaptability to new data distributions. Weighted least squares and regularization methods are integrated during the stochastic configuration process to evaluate output weights, improving the model’s generalization capabilities. Experimental results show that the proposed method achieves superior accuracy and stability when handling concept drift, significantly improving the reliability of slurry density detection. This research has both academic significance and industrial value. The real-time update algorithm enhances slurry density detection precision and stability, providing an efficient monitoring tool for production processes. Accurate slurry density detection is vital for optimizing process parameters, improving coal preparation accuracy, and minimizing resource waste. By quickly responding to operational changes, the proposed method prevents fluctuations in concentrate quality caused by detection errors, improving production controllability. Its low computational cost makes it suitable for real-time industrial applications, while also reducing resource waste and the need for frequent manual adjustments or shutdown maintenance in high-frequency production scenarios. Beyond slurry density detection, the proposed model framework is versatile and can be applied to other industrial domains. For example, it can be used for sensor data monitoring in equipment fault prediction and dynamic load regulation in energy management. By addressing concept drift effectively, this method adapts to various complex industrial scenarios, providing robust support for intelligent manufacturing in the Industry 4.0 era.

Supplemental Information

Datasets.

The results of the offline model running on the test set; the raw data for the tables in the article; the processed dataset used in this article; the experimental results.

Code.

The algorithm can be executed using ‘the_proposed_algorithm.py’ function. The experimental results are visualized using two scripts: ‘visualization_of_experimental_results_1.py’ and ‘visualization_of_experimental_results_2.py’.

The ‘tabular_data.py’ script corresponds to the data used for the tables.