Qualitative reachability for open interval Markov chains

- Academic Editor

- Joost-Pieter Katoen

- Subject Areas

- Theory and Formal Methods, Software Engineering

- Keywords

- Interval Markov chains, Model checking, Probabilistic systems

- Copyright

- © 2023 Sproston

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2023. Qualitative reachability for open interval Markov chains. PeerJ Computer Science 9:e1489 https://doi.org/10.7717/peerj-cs.1489

Abstract

Interval Markov chains extend classical Markov chains with the possibility to describe transition probabilities using intervals, rather than exact values. While the standard formulation of interval Markov chains features closed intervals, previous work has considered open interval Markov chains, in which the intervals can also be open or half-open. In this article we focus on qualitative reachability problems for open interval Markov chains, which consider whether the optimal (maximum or minimum) probability with which a certain set of states can be reached is equal to 0 or 1. We present polynomial-time algorithms for these problems for both of the standard semantics of interval Markov chains. Our methods do not rely on the closure of open intervals, in contrast to previous approaches for open interval Markov chains, and can address situations in which probability 0 or 1 can be attained not exactly but arbitrarily closely.

Introduction

The development of modern computer systems can benefit substantially from a verification phase, in which a formal model of the system is verified exhaustively in order to identify undesirable errors or inefficiencies. In this article we consider the verification of probabilistic systems, in which state-to-state transitions are accompanied by probabilities that specify the relative likelihood with which the transitions occur, using model-checking techniques; see Baier & Katoen (2008), Forejt et al. (2011) and Baier et al. (2018) for general overviews of this field. One drawback of classical formalisms for probabilistic systems is that they typically require the specification of exact probability values for transitions: in practice, it is likely that such precise information concerning the probability of system behaviour is not available. A solution to this problem is to associate intervals of probabilities with transitions, rather than exact probability values, leading to interval Markov chains (IMCs) or interval Markov decision processes. IMCs have been studied in Jonsson & Larsen (1991) and Kozine & Utkin (2002), and considered in the qualitative and quantitative model-checking context in Sen, Viswanathan & Agha (2006), Chatterjee, Sen & Henzinger (2008) and Chen, Han & Kwiatkowska (2013). Qualitative model checking concerns whether a property is satisfied by the system model with probability (equal to or strictly greater than) 0 or (equal to or strictly less than) 1, whereas quantitative model checking considers whether a property is satisfied with probability (strictly or non-strictly) above or below some threshold in the interval [0, 1], and generally involves the computation of the probability of property satisfaction, which is then compared to the aforementioned threshold.

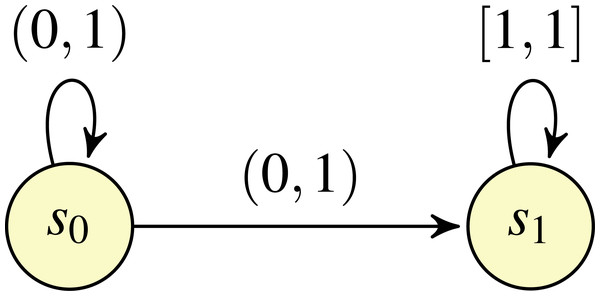

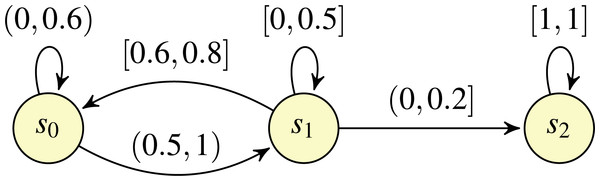

In Sen, Viswanathan & Agha (2006), Chatterjee, Sen & Henzinger (2008) and Chen, Han & Kwiatkowska (2013), the intervals associated with transitions are closed. This limitation was addressed in Chakraborty & Katoen (2015), which considered the possibility of utilising open (and half-open) intervals, in addition to closed intervals. Examples of such open IMCs are shown in Figs. 1 and 2. In Chakraborty & Katoen (2015), it was shown that the probability of the satisfaction of a property in an open IMC can be approximated arbitrarily closely by a standard, closed IMC obtained by changing all (half-)open intervals featured in the model to closed intervals with the same endpoints. However, as noted in Chakraborty & Katoen (2015), closing the intervals can involve the loss of information concerning exact solutions. Take, for example, the open IMC in Fig. 1: changing the intervals from (0, 1) to [0, 1] on both of the transitions leaving state s0 means that the minimum probability of reaching the state s1 after starting in state s0 becomes 0, whereas the probability of reaching s1 from s0 is strictly greater than 0 for all ways of assigning probabilities to the transitions in the original IMC.

In this article we propose verification methods for qualitative reachability properties of open IMCs. We consider both of the standard semantics for IMCs. The uncertain Markov chain (UMC) semantics associated with an IMC comprises an infinite number of standard Markov chains, where each Markov chain is obtained from the IMC by fixing a probability for each transition, chosen from the transition’s interval. In contrast, the interval Markov decision process (IMDP) semantics associates a single Markov decision process (MDP) with the IMC, where the state set is identical to that of the IMC, and where an uncountable number of choices is generally available from each state, each of which corresponds to an assignment of probabilities belonging to the intervals of the transitions leaving the associated IMC state. The key difference between the two semantics can be summarised by considering the behaviour from a particular state of the IMC: for a Markov chain of the UMC semantics, the same probability distribution over outgoing transitions must always be used from the state, whereas in the IMDP semantics the outgoing probability distribution may change for each visit to the state. We show that we can obtain exact (not approximate) solutions to qualitative reachability problems for both semantics in polynomial time in the size of the open IMC.

For the UMC semantics, and for three of the four classes of the qualitative reachability problem in the IMDP semantics, the algorithms presented are inspired by methods for qualitative reachability problems of finite MDPs. Certain cases can be dealt with by straightforward reachability analysis on the underlying graph of the IMC. Other cases require the construction of a finite MDP that represents sufficient information regarding the qualitative properties of the IMC. Recall that the classical definition of MDPs (see, for example, Puterman (1994) and Baier & Katoen (2008)) specifies that an MDP comprises a set of states and a transition relation that associates a number of distributions with each state. A transition from state to state consists of two phases: first a nondeterministic choice between the distributions associated with the source state is made, and second a probabilistic choice to determine the target state is made according to the distribution chosen in the first phase. The set of states of the finite MDP that we construct equals that of the IMC and, for each state s and each set X of states, a unique distribution that assigns positive probability to exactly the states in X is associated with s if and only if there exists at least one probability assignment for target states in the IMC available in s that assigns positive probability to exactly the states in X. Intuitively, a distribution associated with s and X in the finite MDP can be regarded as the representative distribution of all probability assignments of the IMC that assign positive probability to the transitions from s to states in X. For example, in an MDP constructed for the IMC of Fig. 2, there will be two distributions associated with state s1: one distribution assigns positive probability to s0 and s2 (corresponding to the assignment of probability 0.8 to s0, probability 0 to s1, and probability 0.2 to s2), and the other assigns positive probability to s0, s1 and s2 (corresponding to all other possible assignments of probability to the transitions leaving s1, given by the intervals labelling those transitions). Unfortunately, such a finite MDP construction does not yield polynomial-time algorithms in the size of the open IMC, because the presence of transitions having zero as their left endpoint means that the number of representative distributions can be exponential in the number of IMC transitions. In our methods, apart from addressing the differences between closed and open intervals and their implications for qualitative reachability problems, we avoid such an exponential blow-up. In particular, we show how the predecessor operations used by some qualitative reachability algorithms for MDPs can be applied directly to the IMC.

Figure 1: An open IMC O1.

Figure 2: An open IMC O2.

The remaining, fourth class of reachability problems in the IMDP semantics concerns determining whether the probability of reaching a certain set of states from the current state is equal to 1 for all schedulers, where a scheduler resolves nondeterminism by choosing an outgoing probability distribution from a state on the basis of the choices made so far. For this class of problems, retaining the memory of previous choices can be important for showing that the problem is not satisfied, i.e., that there exists a scheduler such that the reachability probability is strictly less than 1. As an example, we can take the open IMC in Fig. 1. Consider the memoryful scheduler that assigns probability to the i-th attempt to take a transition from s0 to s1, meaning that the overall probability of reaching s1 when starting in s0 under this scheduler is . In contrast, a memoryless scheduler reaches s1 with probability 1: for any λ ∈ (0, 1) representing the (constant) probability of taking the transition from s0 to s1, the overall probability of reaching s1 is $\lim_{k \to \infty} \left(1 - (1 - \lambda)^k\right) = 1$. Similar reasoning can be used to conclude that any finite-memory scheduler also reaches s1 with probability 1.¹ Hence our results for this class of reachability problems take the inadequacy of memoryless and finite-memory schedulers into account; indeed, while the algorithms presented for all other classes of problems (and all problems for the UMC semantics) proceed in a manner similar to that introduced in the literature for finite MDPs, for this class we present an ad hoc algorithm, based on an adaptation of the classical notion of end components (de Alfaro, 1997).
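The contrast between memoryless and memoryful schedulers can be checked numerically. The sketch below assumes, consistently with the discussion above, that the two (0, 1)-labelled transitions leaving s0 in Fig. 1 are a transition to s1 and a self-loop on s0, so that every visit to s0 is one attempt to reach s1. The memoryful schedule λ_i = 2^−(i+1) is a hypothetical choice for illustration only: any sequence of values in (0, 1) with a finite sum keeps the probability of failing forever positive.

```python
def reach_memoryless(lam: float, attempts: int) -> float:
    """P(reach s1 within `attempts` visits to s0) when every visit assigns the
    same probability lam in (0, 1) to the transition s0 -> s1."""
    if not 0.0 < lam < 1.0:
        raise ValueError("lam must lie in the open interval (0, 1)")
    return 1.0 - (1.0 - lam) ** attempts


def reach_memoryful(lams) -> float:
    """P(reach s1) when the i-th visit to s0 assigns probability lams[i] to s0 -> s1.

    s1 is missed only if every attempt fails, so the result is 1 - prod(1 - lam_i).
    """
    all_fail = 1.0
    for lam in lams:
        if not 0.0 < lam < 1.0:
            raise ValueError("each lam_i must lie in the open interval (0, 1)")
        all_fail *= 1.0 - lam
    return 1.0 - all_fail


# Memoryless: any fixed lam in (0, 1) drives the probability to 1.
for lam in (0.5, 0.1, 0.01):
    print(lam, reach_memoryless(lam, 10_000))  # 1.0 in floating point for each lam

# Memoryful (hypothetical schedule): lam_i = 2^-(i+1) for i = 1, ..., 60.
schedule = [2.0 ** -(i + 1) for i in range(1, 61)]
print(reach_memoryful(schedule))  # about 0.4224, strictly below 1
```

Truncating the schedule at 60 attempts changes the result only beyond double precision, since the omitted factors are all within 2^−60 of 1.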

Our results also allow us to show that, for both of the considered semantics, the same set of IMC states satisfy analogous reachability problems (i.e., problems with the same quantification over Markov chains in the UMC semantics and over schedulers in the IMDP semantics, and the same kind of comparison with 0 or 1), except in the case of the reachability problem concerning whether all Markov chains in the UMC semantics or all schedulers in the IMDP semantics reach a certain set of states with probability 1.

After introducing open IMCs in Section ‘Open Interval Markov Chains’, the algorithms for the UMC semantics and the IMDP semantics are presented in Section ‘Qualitative Reachability: UMC semantics’ and Section ‘Qualitative Reachability: IMDP semantics’, respectively.

Related work. Model checking of qualitative properties of Markov chains (see, for example, Vardi (1985) and Courcoubetis & Yannakakis (1995)) relies on the fact that transition probability values are fixed throughout the behaviour of the system, and does not require that exact probability values are taken into account during analysis. The majority of work on model checking for IMCs considers the more general quantitative problems: Sen, Viswanathan & Agha (2006) and Chatterjee, Sen & Henzinger (2008) present algorithms utilising a finite MDP construction based on encoding extremal probabilities allowed from a state (known as the state’s basic feasible solutions) within distributions available from that state. Such a construction results in an exponential blow-up, which is also incurred in Chatterjee, Sen & Henzinger (2008) for qualitative properties (when transitions can have 0 as their left endpoint). Chen, Han & Kwiatkowska (2013) and Puggelli et al. (2013) improve on these results to present polynomial-time algorithms for reachability problems based on linear or convex programming. Haddad & Monmege (2018) includes polynomial-time methods for computing (maximal) end components, and for computing a single step of value iteration, for interval MDPs. We note that IMCs are a special case of constraint Markov chains (Caillaud et al., 2011), and that the UMC semantics of IMCs corresponds to a special case of parametric Markov chains (Daws, 2004; Lanotte, Maggiolo-Schettini & Troina, 2007; Junges et al., 2021). In particular, the polynomial-time algorithms for positive probability qualitative reachability problems for parametric Markov decision processes in Junges et al. (2021) have similarities with our algorithms for the analogous problems for the UMC semantics of IMCs: both are based on the principle that assigning probability 0 to transitions, as opposed to positive probability, can never be beneficial from the point of view of satisfying a positive probability reachability problem, an observation which allows us to avoid the aforementioned exponential blow-up. Bart et al. (2018) present an IMDP semantics that uses finite-memory schedulers, which (as indicated above) is a key difference from our work. As far as we are aware, only Chakraborty & Katoen (2015) consider open IMCs. This article is a revised and extended version of the conference paper (Sproston, 2018a) and the preprint (Sproston, 2018b), and includes a detailed treatment of the connections between assignments of probabilities to intervals and syntactic properties of open IMCs, in addition to full proofs of all results.

Preliminaries

A (probability) distribution over a countable set Q is a function μ:Q → [0, 1] such that ∑q∈Qμ(q) = 1. Let Dist(Q) be the set of distributions over Q. We use support(μ) = {q ∈ Q∣μ(q) > 0} to denote the support set of μ, i.e., the set of elements assigned positive probability by μ, and use {q↦1} to denote the distribution that assigns probability 1 to the single element q. Given a binary function f:Q × Q → [0, 1] and element q ∈ Q, we denote by f(q, ⋅):Q → [0, 1] the unary function such that f(q, ⋅)(q′) = f(q, q′) for each q′ ∈ Q.

Let ℚ be the set of rational numbers. We let ℐ denote the set of (open, half-open or closed) intervals that are subsets of [0, 1] and that have rational endpoints (i.e., endpoints in ℚ∩[0, 1]). Given an interval I ∈ ℐ, we let left(I) (respectively, right(I)) be the left (respectively, right) endpoint of I. The set of closed (respectively, left-open, right-closed; left-closed, right-open; open) intervals in ℐ is denoted by ℐ[⋅,⋅] (respectively, ℐ(⋅,⋅]; ℐ[⋅,⋅); ℐ(⋅,⋅)). Note that ℐ[⋅,⋅], ℐ(⋅,⋅], ℐ[⋅,⋅) and ℐ(⋅,⋅) form a partition of ℐ. Let ℐ〈⋅,⋅] be the set of right-closed intervals in ℐ, and let ℐ〈0,⋅〉 be the set of intervals in ℐ with left endpoint equal to 0. We partition ℐ〈0,⋅〉 into the set ℐ[0,⋅〉 of left-closed intervals with left endpoint equal to 0, and the set ℐ(0,⋅〉 of left-open intervals with left endpoint equal to 0. Furthermore, let ℐ〈+,⋅〉 = ℐ∖ℐ〈0,⋅〉 be the set of intervals whose left endpoint is positive.
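The interval classes above translate directly into a small data type. The following Python sketch is an illustration of ours, not part of the article: it stores rational endpoints as `fractions.Fraction` values together with two hypothetical flags, `left_closed` and `right_closed`, and derives membership and membership of the classes ℐ〈0,⋅〉, ℐ[0,⋅〉, ℐ(0,⋅〉, ℐ〈+,⋅〉 and ℐ〈⋅,⋅] from them.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Interval:
    """An interval in I: a subset of [0, 1] with rational endpoints.

    The flags record whether each endpoint belongs to the interval, so
    Interval(0, 1, False, False) is the open interval (0, 1).
    """
    left: Fraction
    right: Fraction
    left_closed: bool = True
    right_closed: bool = True

    def __post_init__(self) -> None:
        if not (0 <= self.left <= self.right <= 1):
            raise ValueError("endpoints must satisfy 0 <= left <= right <= 1")

    def __contains__(self, x: Fraction) -> bool:
        above_left = self.left < x or (self.left_closed and x == self.left)
        below_right = x < self.right or (self.right_closed and x == self.right)
        return above_left and below_right

    def shape(self) -> str:
        """One of '[.,.]', '(.,.]', '[.,.)', '(.,.)': the partition of I."""
        return ("[" if self.left_closed else "(") + ".,." + ("]" if self.right_closed else ")")

    def in_zero_left(self) -> bool:          # member of I<0,.>
        return self.left == 0

    def in_zero_left_closed(self) -> bool:   # member of I[0,.>
        return self.left == 0 and self.left_closed

    def in_zero_left_open(self) -> bool:     # member of I(0,.>
        return self.left == 0 and not self.left_closed

    def in_positive_left(self) -> bool:      # member of I<+,.> = I \ I<0,.>
        return self.left > 0

    def in_right_closed(self) -> bool:       # member of I<.,.]
        return self.right_closed


open_unit = Interval(Fraction(0), Fraction(1), left_closed=False, right_closed=False)
print(Fraction(1, 2) in open_unit, Fraction(0) in open_unit)  # True False
print(open_unit.shape(), open_unit.in_zero_left_open())       # (.,.) True
```

Exact rationals are used so that endpoint comparisons such as `x == self.left` are not affected by floating-point rounding.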

A discrete-time Markov chain (DTMC) D is a pair (S, P) where S is a set of states, and P:S × S → [0, 1] is a transition probability matrix, such that, for each state s ∈ S, we have ∑s′∈SP(s, s′) = 1. Note that P(s, ⋅) is a distribution, for each state s ∈ S. Intuitively, given states s, s′ ∈ S, a transition from s to s′ is made with probability P(s, s′). A path of DTMC D is a sequence s0s1⋯ such that P(si, si+1) > 0 for all i ≥ 0. Given a path ρ = s0s1⋯ and i ≥ 0, we let state[ρ](i) = si be the (i + 1)-th state along ρ. The set of paths of D starting in state s ∈ S is denoted by . In the standard manner (see, for example, Baier & Katoen (2008) and Forejt et al. (2011)), given a state s ∈ S, we can define a probability measure over .

A Markov decision process (MDP) ℳ is a pair (S, Δ) where S is a finite set of states and Δ:S → 2^{Dist(S)} is a transition function such that Δ(s) ≠ ∅ for all s ∈ S. An MDP is finite if Δ(s) is finite for all s ∈ S. Intuitively, a transition from state s to some target state is chosen according to two phases: first, a nondeterministic choice is made between distributions in Δ(s); second, a probabilistic choice between target states is made according to the distribution chosen in the first phase.

A(n infinite) path of an MDP ℳ is a sequence s0μ0s1μ1⋯ such that μi ∈ Δ(si) and μi(si+1) > 0 for all i ≥ 0. Given a path ρ = s0μ0s1μ1⋯ and i ≥ 0, we let state[ρ](i) = si be the (i + 1)-th state along ρ, and let dist[ρ](i) = μi be the (i + 1)-th distribution along ρ. A finite path is a sequence r = s0μ0s1μ1⋯μn−1sn such that μi ∈ Δ(si) and μi(si+1) > 0 for each 0 ≤ i < n. Let last(r) = sn denote the final state of r. For distribution μ ∈ Δ(sn) and state s′ ∈ S such that μ(s′) > 0, we write rμs′ to denote the finite path s0μ0s1μ1⋯μn−1snμs′. We say that r is a prefix of the infinite path ρ if state[ρ](i) = si for each 0 ≤ i ≤ n, and dist[ρ](i) = μi for each 0 ≤ i < n. Let be the set of finite paths of the MDP ℳ. Let and be the sets of infinite paths and finite paths, respectively, of ℳ starting in state s ∈ S.

A scheduler is a mapping such that σ(r) ∈ Dist(Δ(last(r))) for each . Let Σℳ be the set of schedulers of the MDP ℳ. Given a state s ∈ S and a scheduler σ, we can define a countably infinite-state DTMC that corresponds to the behaviour of the scheduler σ from state s. Formally, the DTMC has as its state set, and where its transition probability matrix is defined in the following way: for finite path , distribution μ ∈ Δ(last(r)) and state s′ ∈ S, we have that . The DTMC can be used to define a probability measure over in the standard manner (see Baier & Katoen (2008) and Forejt et al. (2011)).

A scheduler σ ∈ Σℳ is memoryless if, for finite paths such that last(r) = last(r′), we have σ(r) = σ(r′). In the sequel, a memoryless scheduler will generally be written as the mapping σ:S → Dist(⋃s∈SΔ(s)). Let be the set of memoryless schedulers of ℳ. Note that, for a memoryless scheduler , we can construct a finite DTMC with : we call this DTMC the folded DTMC of σ. The probability measures and assign the same probabilities to measurable sets of paths. This can be seen by considering the following. Recall that the scheduler σ and state s induce a DTMC . Now consider the smallest relation such that (r, s′) ∈ ℛ if last(r) = s′. Note that, for a given and s′ ∈ S, we have . This allows us to conclude that ℛ induces a probabilistic bisimulation (Larsen & Skou, 1991) (more formally, the equivalence relation induced by ℛ on the “combined DTMC” obtained by the union of the state spaces of and and the standard transition function that corresponds directly from and ). Given that probabilistically bisimilar states of DTMCs induce probability measures that assign the same probability to measurable sets of paths (see, for example, Chapter 10 of Baier & Katoen, 2008), we conclude that and assign the same probabilities to measurable sets of paths.
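The folded DTMC of a memoryless scheduler can be computed row by row. The sketch below assumes the standard construction, in which the probability of moving from s to s′ is ∑μ∈Δ(s) σ(s)(μ)·μ(s′). For illustration it restricts Δ(s) to an explicitly listed, finite set of distributions: the MDP is a dictionary from states to a list of distributions, and σ(s) is a list of weights aligned with that list. The encoding and the function name `folded_dtmc` are hypothetical.

```python
from fractions import Fraction


def folded_dtmc(mdp, sigma):
    """Folded DTMC of a memoryless scheduler.

    mdp:   dict mapping each state s to a list of distributions (dicts state -> prob),
           i.e. an explicit, finite Delta(s).
    sigma: dict mapping each state s to a list of weights, one per distribution
           in mdp[s], forming a distribution over Delta(s).
    Returns P with P[s][t] = sum over mu in Delta(s) of sigma(s)(mu) * mu(t).
    """
    P = {}
    for s, choices in mdp.items():
        weights = sigma[s]
        if len(weights) != len(choices) or sum(weights) != 1:
            raise ValueError(f"sigma({s}) is not a distribution over Delta({s})")
        row = {}
        for w, mu in zip(weights, choices):
            for t, p in mu.items():
                row[t] = row.get(t, Fraction(0)) + w * p
        P[s] = {t: p for t, p in row.items() if p > 0}  # keep only the support
    return P


half = Fraction(1, 2)
mdp = {
    "s0": [{"s0": half, "s1": half}, {"s1": Fraction(1)}],
    "s1": [{"s1": Fraction(1)}],
}
sigma = {"s0": [half, half], "s1": [Fraction(1)]}
print(folded_dtmc(mdp, sigma))
```

Here σ randomises evenly between the two distributions available in s0, so the folded DTMC moves from s0 to s1 with probability 1/2·1/2 + 1/2·1 = 3/4.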

Remark 1 Note that the classical definition of MDPs (Puterman, 1994; Baier & Katoen, 2008) features actions and a transition function mapping states and actions to distributions over states. As above, a transition consists of two phases: the first phase regards the nondeterministic choice of an action, and the second phase regards the probabilistic choice of a target state according to the unique distribution associated with the current state and the action chosen in the first phase. In our setting, in which we use MDPs to define the IMDP semantics of IMCs, actions have no explicit significance. For this reason, and in accordance with other papers on IMCs (Sen, Viswanathan & Agha, 2006; Chatterjee, Sen & Henzinger, 2008; Chen, Han & Kwiatkowska, 2013), we choose to omit actions and present the transition function as a mapping from states to sets of distributions. □

Open Interval Markov Chains

In this section, we recall the definition of (open) interval Markov chains, and introduce notions such as assignments of probability to transitions, well-formed interval Markov chains and edges of interval Markov chains, together with the relationships between these concepts that will be necessary for subsequent technical results. Portions of this text were previously published as part of a preprint (Sproston, 2018b).

Definition 1: IMCs

An (open) interval Markov chain (IMC) O is a pair (S, δ), where S is a finite set of states, and δ:S × S → ℐ is an interval-based transition function. □

Intuitively, the probability of making a transition from state s to state s′ of an IMC (S, δ) is a value from the interval δ(s, s′). Given a state s ∈ S, a distribution a ∈ Dist(S) is an assignment for s if a(s′) ∈ δ(s, s′) for each state s′ ∈ S.
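Checking whether a given distribution is an assignment for s is a direct transcription of this definition. In the following sketch (ours, not the article's) an IMC is a list of states plus a dictionary `delta` from state pairs to intervals; as a hypothetical convenience, pairs missing from `delta` are treated as [0, 0]. The example IMC assumes that the two transitions leaving s0 in Fig. 1 are a self-loop and a transition to s1, both labelled (0, 1), with s1 absorbing.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Interval:
    left: Fraction
    right: Fraction
    left_closed: bool = True
    right_closed: bool = True

    def __contains__(self, x) -> bool:
        return ((self.left < x or (self.left_closed and x == self.left))
                and (x < self.right or (self.right_closed and x == self.right)))


ZERO = Interval(Fraction(0), Fraction(0))  # [0, 0]: used for pairs absent from delta


def is_assignment(states, delta, s, a) -> bool:
    """True iff a (dict state -> Fraction) is a distribution over states with
    a(t) in delta(s, t) for every state t."""
    values = {t: a.get(t, Fraction(0)) for t in states}
    return (sum(values.values()) == 1
            and all(values[t] in delta.get((s, t), ZERO) for t in states))


OPEN = Interval(Fraction(0), Fraction(1), left_closed=False, right_closed=False)
states = ["s0", "s1"]
delta = {("s0", "s0"): OPEN, ("s0", "s1"): OPEN, ("s1", "s1"): Interval(Fraction(1), Fraction(1))}

print(is_assignment(states, delta, "s0", {"s0": Fraction(1, 2), "s1": Fraction(1, 2)}))  # True
print(is_assignment(states, delta, "s0", {"s1": Fraction(1)}))  # False: 0 is not in (0, 1)
```

The second call fails precisely because the open interval (0, 1) forbids probability 0 on the self-loop, which is the information lost when (0, 1) is closed to [0, 1].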

Well-formed IMCs

In the sequel, we will consider only IMCs for which there exists at least one assignment for each state. This restriction can be captured by the following syntactic conditions on the transition function. Let s ∈ S be a state. The well-formedness conditions for s are defined as follows:

- (1) ∑s′∈S left(δ(s, s′)) ≤ 1,
- (2) ∑s′∈S left(δ(s, s′)) = 1 implies that δ(s, s′) is left-closed for all s′ ∈ S,
- (3) ∑s′∈S right(δ(s, s′)) ≥ 1, and
- (4) ∑s′∈S right(δ(s, s′)) = 1 implies that δ(s, s′) is right-closed for all s′ ∈ S.
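The four conditions only involve endpoint sums and closedness flags, so they can be checked in time linear in the number of states. The sketch below reuses a hypothetical encoding (a dictionary from state pairs to intervals, with missing pairs read as [0, 0]); the comments map each test to conditions (1) to (4).

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Interval:
    left: Fraction
    right: Fraction
    left_closed: bool = True
    right_closed: bool = True


ZERO = Interval(Fraction(0), Fraction(0))  # [0, 0]


def well_formed(states, delta, s) -> bool:
    """Check the well-formedness conditions (1)-(4) for state s."""
    ivs = [delta.get((s, t), ZERO) for t in states]
    lsum = sum((iv.left for iv in ivs), Fraction(0))
    rsum = sum((iv.right for iv in ivs), Fraction(0))
    return (lsum <= 1                                              # (1)
            and (lsum != 1 or all(iv.left_closed for iv in ivs))    # (2)
            and rsum >= 1                                          # (3)
            and (rsum != 1 or all(iv.right_closed for iv in ivs)))  # (4)


half = Fraction(1, 2)
states = ["u", "v"]
# Two copies of (0, 1/2): the right endpoints sum to exactly 1 but are open,
# so no assignment exists and condition (4) fails.
bad = {("u", "u"): Interval(Fraction(0), half, False, False),
       ("u", "v"): Interval(Fraction(0), half, False, False)}
# Two copies of (0, 1/2]: now (1/2, 1/2) is an assignment.
good = {("u", "u"): Interval(Fraction(0), half, False, True),
        ("u", "v"): Interval(Fraction(0), half, False, True)}
print(well_formed(states, bad, "u"), well_formed(states, good, "u"))  # False True
```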

The following proposition establishes the correspondence between the well-formedness conditions and the existence of an assignment, and generalises to open IMCs similar assertions for IMCs with closed intervals only (for example, in Section 4 of Haddad & Monmege (2018)).

Proposition 1 Let (S, δ) be an IMC and s ∈ S be a state. Then the well-formedness conditions for s are satisfied if and only if there exists an assignment for s. □

(⇒) Assume that the well-formedness conditions for s are satisfied. We proceed by defining a function f:S → [0, 1] such that:

- (a) left(δ(s, s′)) + f(s′) ∈ δ(s, s′) for all s′ ∈ S, and
- (b) ∑s′∈S (left(δ(s, s′)) + f(s′)) = 1.

Intuitively, the value f(s′) is an “offset” from left(δ(s, s′)) towards right(δ(s, s′)). Then we let a:S → [0, 1] be the function defined by setting a(s′) = left(δ(s, s′)) + f(s′) for all s′ ∈ S. The function a is an assignment: condition (a) in the definition of f establishes that a(s′) ∈ δ(s, s′) for each state s′ ∈ S, and condition (b) establishes that a ∈ Dist(S).

In order to define an appropriate f, we consider the following cases.

Case ∑s′∈Sleft(δ(s, s′)) = ∑s′∈Sright(δ(s, s′)) = 1. Given that left(δ(s, s′)) ≥ 0, right(δ(s, s′)) ≥ 0 and left(δ(s, s′)) ≤ right(δ(s, s′)) for all s′ ∈ S, we have that left(δ(s, s′)) = right(δ(s, s′)) (i.e., δ(s, s′) is the degenerate interval [left(δ(s, s′)), right(δ(s, s′))]) and therefore δ(s, s′) is left- and right-closed for all s′ ∈ S. Let f(s′) = 0 for all s′ ∈ S. Hence left(δ(s, s′)) + f(s′) = left(δ(s, s′)) for all s′ ∈ S. Given that δ(s, s′) being left-closed implies that left(δ(s, s′)) ∈ δ(s, s′), and that ∑s′∈Sleft(δ(s, s′)) = 1 for this case, conditions (a) and (b) in the definition of f are satisfied.

Case ∑s′∈Sleft(δ(s, s′)) = 1 and ∑s′∈Sright(δ(s, s′)) > 1. We let f(s′) = 0 for all s′ ∈ S. Given that the antecedent of the well-formedness condition (2) is satisfied for this case, we have that δ(s, s′) is left-closed for all s′ ∈ S. Following the reasoning presented for the previous case, conditions (a) and (b) in the definition of f are satisfied.

Case ∑s′∈Sleft(δ(s, s′)) < 1 and ∑s′∈Sright(δ(s, s′)) = 1. We let f(s′) = right(δ(s, s′)) − left(δ(s, s′)) for all s′ ∈ S. Hence left(δ(s, s′)) + f(s′) = right(δ(s, s′)) for all s′ ∈ S. We then proceed similarly to the previous cases: well-formedness condition (4) implies that, for all s′ ∈ S, the interval δ(s, s′) is right-closed, and therefore right(δ(s, s′)) ∈ δ(s, s′), in turn establishing condition (a) in the definition of f. Given that ∑s′∈Sright(δ(s, s′)) = 1 in this case, condition (b) in the definition of f is satisfied trivially.

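Case ∑s′∈Sleft(δ(s, s′)) < 1 < ∑s′∈Sright(δ(s, s′)). Given s′ ∈ S, let width(δ(s, s′)) = right(δ(s, s′)) − left(δ(s, s′)). Then, for each s′ ∈ S, we let:

$$f(s') = \mathrm{width}(\delta(s,s')) \cdot \frac{1 - \sum_{s'' \in S} \mathrm{left}(\delta(s,s''))}{\sum_{s'' \in S} \mathrm{width}(\delta(s,s''))}.$$

(The denominator is positive, because ∑s′′∈S width(δ(s, s′′)) = ∑s′′∈S right(δ(s, s′′)) − ∑s′′∈S left(δ(s, s′′)) > 0 in this case.) First we show that condition (a) in the definition of f is satisfied. Our task is to show that left(δ(s, s′)) + f(s′) ∈ δ(s, s′) for all s′ ∈ S. Let s′ ∈ S, and consider the following two cases.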

Sub-case left(δ(s, s′)) = right(δ(s, s′)). In this sub-case width(δ(s, s′)) = 0 and hence f(s′) = 0. Showing that left(δ(s, s′)) + f(s′) ∈ δ(s, s′) then reduces to showing that left(δ(s, s′)) ∈ δ(s, s′). Given that this sub-case specifies that δ(s, s′) is the degenerate interval [left(δ(s, s′)), right(δ(s, s′))], we have established that left(δ(s, s′)) + f(s′) ∈ δ(s, s′).

Sub-case left(δ(s, s′)) < right(δ(s, s′)). To show that the choice of f(s′) satisfies left(δ(s, s′)) + f(s′) ∈ δ(s, s′), it suffices to show that 0 < f(s′) < width(δ(s, s′)). First, we show that f(s′) > 0. From the definition of the case we have ∑s′′∈Sleft(δ(s, s′′)) < 1, and hence 1 − ∑s′′∈Sleft(δ(s, s′′)) > 0. The definition of the sub-case specifies that width(δ(s, s′)) > 0. Combining these two facts with the fact that width(δ(s, s′′)) ≥ 0 for all s′′ ∈ S establishes that f(s′) > 0.

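Second, we show that f(s′) < width(δ(s, s′)). From our choice of f(s′), and given that width(δ(s, s′)) > 0 in this sub-case, we need to show that

$$\frac{1 - \sum_{s'' \in S} \mathrm{left}(\delta(s,s''))}{\sum_{s'' \in S} \mathrm{width}(\delta(s,s''))} < 1.$$

Noting that the definition of the case specifies that 1 < ∑s′′∈S right(δ(s, s′′)), we obtain the following equivalent statements from that fact and the definition of width(δ(s, s′′)), where the last step divides by ∑s′′∈S width(δ(s, s′′)) ≥ width(δ(s, s′)) > 0:

$$\begin{aligned}
1 < \sum_{s'' \in S} \mathrm{right}(\delta(s,s''))
&\iff 1 - \sum_{s'' \in S} \mathrm{left}(\delta(s,s'')) < \sum_{s'' \in S} \big(\mathrm{right}(\delta(s,s'')) - \mathrm{left}(\delta(s,s''))\big) \\
&\iff 1 - \sum_{s'' \in S} \mathrm{left}(\delta(s,s'')) < \sum_{s'' \in S} \mathrm{width}(\delta(s,s'')) \\
&\iff \frac{1 - \sum_{s'' \in S} \mathrm{left}(\delta(s,s''))}{\sum_{s'' \in S} \mathrm{width}(\delta(s,s''))} < 1.
\end{aligned}$$

Hence we have established that the choice of f(s′) satisfies left(δ(s, s′)) + f(s′) ∈ δ(s, s′).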

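Next we show that condition (b) of the definition of f is satisfied. From the choice of f and straightforward rearranging, we have:

$$\sum_{s' \in S} \big(\mathrm{left}(\delta(s,s')) + f(s')\big) = \sum_{s' \in S} \mathrm{left}(\delta(s,s')) + \Big(1 - \sum_{s'' \in S} \mathrm{left}(\delta(s,s''))\Big) \cdot \frac{\sum_{s' \in S} \mathrm{width}(\delta(s,s'))}{\sum_{s'' \in S} \mathrm{width}(\delta(s,s''))} = 1.$$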

Hence we have established the (⇒) direction of the proposition.

(⇐) Assume that there exists an assignment a for state s. Recall that, from the definition of assignments, we have a(s′) ∈ δ(s, s′) for all s′ ∈ S. Hence a(s′) ≥ left(δ(s, s′)) and a(s′) ≤ right(δ(s, s′)) for all s′ ∈ S. Furthermore, given that an assignment is a distribution, we have ∑s′∈Sa(s′) = 1. From these facts, we obtain ∑s′∈Sleft(δ(s, s′)) ≤ ∑s′∈Sa(s′) = 1 and ∑s′∈Sright(δ(s, s′)) ≥ ∑s′∈Sa(s′) = 1, establishing conditions (1) and (3) of well-formedness for s. Now consider the case in which ∑s′∈Sleft(δ(s, s′)) = 1. Given that ∑s′∈Sa(s′) = 1 and a(s′) ≥ left(δ(s, s′)) for all s′ ∈ S, we must have a(s′) = left(δ(s, s′)) for all s′ ∈ S. From the fact that a(s′) ∈ δ(s, s′) for all s′ ∈ S, it must be the case that δ(s, s′) is left-closed for all s′ ∈ S. Hence condition (2) of well-formedness for s is established. Following similar reasoning, we can show that, in the case in which ∑s′∈Sright(δ(s, s′)) = 1, the interval δ(s, s′) is right-closed for all s′ ∈ S, thereby establishing condition (4) of well-formedness. ■
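The (⇒) direction of the proof is constructive. The following sketch, under a hypothetical encoding (a dictionary from state pairs to intervals, missing pairs read as [0, 0]), computes the offsets f(s′) case by case as in the proof and returns the resulting assignment; in the fourth case it distributes the slack 1 − ∑ left(δ(s, s′′)) over the successors in proportion to the widths of their intervals. Exact rational arithmetic avoids rounding at the interval endpoints. The function name is ours.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Interval:
    left: Fraction
    right: Fraction
    left_closed: bool = True
    right_closed: bool = True

    def __contains__(self, x) -> bool:
        return ((self.left < x or (self.left_closed and x == self.left))
                and (x < self.right or (self.right_closed and x == self.right)))


ZERO = Interval(Fraction(0), Fraction(0))  # [0, 0]


def assignment_from_offsets(states, delta, s):
    """Build a(t) = left(delta(s, t)) + f(t) for a well-formed state s,
    following the four cases of the proof of Proposition 1."""
    ivs = {t: delta.get((s, t), ZERO) for t in states}
    lsum = sum((iv.left for iv in ivs.values()), Fraction(0))
    rsum = sum((iv.right for iv in ivs.values()), Fraction(0))
    if lsum > 1 or rsum < 1:
        raise ValueError(f"state {s} violates condition (1) or (3)")
    width = {t: iv.right - iv.left for t, iv in ivs.items()}
    wsum = sum(width.values(), Fraction(0))
    if lsum == 1:                 # cases 1 and 2: f = 0 (intervals left-closed)
        f = {t: Fraction(0) for t in states}
    elif rsum == 1:               # case 3: f = width (intervals right-closed)
        f = dict(width)
    else:                         # case 4: lsum < 1 < rsum, hence wsum > 0
        f = {t: width[t] * (1 - lsum) / wsum for t in states}
    a = {t: ivs[t].left + f[t] for t in states}
    # Fails only if a closedness condition, (2) or (4), is violated.
    if sum(a.values()) != 1 or not all(a[t] in ivs[t] for t in states):
        raise ValueError(f"state {s} violates condition (2) or (4)")
    return a


OPEN = Interval(Fraction(0), Fraction(1), left_closed=False, right_closed=False)
states = ["s0", "s1"]
delta = {("s0", "s0"): OPEN, ("s0", "s1"): OPEN, ("s1", "s1"): Interval(Fraction(1), Fraction(1))}
print(assignment_from_offsets(states, delta, "s0"))
print(assignment_from_offsets(states, delta, "s1"))
```

For s0 both intervals are (0, 1), so the slack 1 is split evenly and each successor receives 1/2, strictly inside its open interval as the proof requires.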

Henceforth we assume that the IMCs that we consider satisfy the well-formedness conditions for each of their states.

Edges of IMCs

In the following, we refer to edges as those state pairs to which the transition function can associate positive probability. There are two situations in which the transition function enforces the association of probability 0 to a state pair (s, s′) ∈ S × S: first, when the right endpoint of δ(s, s′) is equal to 0 (and hence δ(s, s′) is the interval [0, 0]); second, when the sum of the left endpoints of transitions from s that do not have s′ as target state is equal to 1 (thereby preventing the assignment of positive probability to (s, s′)). Hence we formally define the set of edges E of O as the smallest set such that (s, s′) ∈ E if (1) right(δ(s, s′)) > 0 and (2) ∑s′′∈S∖{s′}left(δ(s, s′′)) < 1.²
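Because both conditions are syntactic, the edge set can be computed without enumerating assignments. The sketch below (a hypothetical encoding, with pairs missing from `delta` read as [0, 0]) computes the sum of left endpoints once per source state and subtracts the current target's contribution to evaluate condition (2).

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Interval:
    left: Fraction
    right: Fraction
    left_closed: bool = True
    right_closed: bool = True


ZERO = Interval(Fraction(0), Fraction(0))  # [0, 0]


def edges(states, delta):
    """Edge set E: (s, t) is an edge iff (1) right(delta(s, t)) > 0 and
    (2) the left endpoints of the other intervals leaving s sum to less than 1."""
    E = set()
    for s in states:
        lsum = sum((delta.get((s, u), ZERO).left for u in states), Fraction(0))
        for t in states:
            iv = delta.get((s, t), ZERO)
            if iv.right > 0 and lsum - iv.left < 1:
                E.add((s, t))
    return E


one = Interval(Fraction(1), Fraction(1))
states = ["u", "v", "w"]
delta = {
    ("u", "v"): one,                                     # [1, 1]
    ("u", "w"): Interval(Fraction(0), Fraction(1, 2)),   # [0, 1/2]: positive right endpoint,
    ("v", "v"): one,                                     # but the other left endpoints from u
    ("w", "w"): one,                                     # already sum to 1
}
print(sorted(edges(states, delta)))  # [('u', 'v'), ('v', 'v'), ('w', 'w')]
```

The pair (u, w) is excluded by condition (2) alone, illustrating the second situation described above.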

We now formalise the intuition that edges are those state pairs which can be assigned positive probability by relating the notions of edges and assignments.

Proposition 2 Let (S, δ) be an IMC and s, s′ ∈ S be states. Then (s, s′) ∈ E if and only if there exists an assignment a for s such that a(s′) > 0. □

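(⇒) Assume that (s, s′) ∈ E. From the definition of the set E of edges, we have right(δ(s, s′)) > 0 and ∑s′′∈S∖{s′}left(δ(s, s′′)) < 1. We now show that there exists an assignment a for s such that a(s′) > 0 by adopting the construction of a from the (⇒) direction of Proposition 1; in particular, we note that a(s′) = left(δ(s, s′)) + f(s′) where

$$f(s') = \mathrm{width}(\delta(s,s')) \cdot \frac{1 - \sum_{s'' \in S} \mathrm{left}(\delta(s,s''))}{\sum_{s'' \in S} \mathrm{width}(\delta(s,s''))}$$

if ∑s′′∈S width(δ(s, s′′)) > 0, and f(s′) = 0 otherwise.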

Consider the following three cases:

Case left(δ(s, s′)) = right(δ(s, s′)). Given that width(δ(s, s′)) = 0 in this case, we have f(s′) = 0. Hence a(s′) = left(δ(s, s′)) + f(s′) = left(δ(s, s′)) = right(δ(s, s′)). From right(δ(s, s′)) > 0, we conclude that a(s′) > 0.

Case left(δ(s, s′)) < right(δ(s, s′)) and ∑s′′∈Sleft(δ(s, s′′)) = 1. By the definition of edges, we have ∑s′′∈S∖{s′}left(δ(s, s′′)) < 1. Given that ∑s′′∈Sleft(δ(s, s′′)) = 1 in this case, we have left(δ(s, s′)) > 0. Given that f(s′) ≥ 0, we conclude that a(s′) > 0.

Case left(δ(s, s′)) < right(δ(s, s′)) and ∑s′′∈Sleft(δ(s, s′′)) < 1. In this case, width(δ(s, s′)) > 0 and 1 − ∑s′′∈Sleft(δ(s, s′′)) > 0. From the fact that width(δ(s, s′′)) ≥ 0 for all s′′ ∈ S, we have that f(s′) > 0, and, given that left(δ(s, s′)) ≥ 0, we conclude that a(s′) > 0.

(⇐) We prove the contrapositive, i.e., we show that (s, s′) ∉ E implies that all assignments a for s are such that a(s′) = 0. Assume (s, s′) ∉ E. Hence (1′) right(δ(s, s′)) = 0 or (2′) ∑s′′∈S∖{s′}left(δ(s, s′′)) = 1 (note that, by well-formedness, we cannot have ∑s′′∈S∖{s′}left(δ(s, s′′)) > 1). We consider these two cases in turn:

Case (1′): right(δ(s, s′)) = 0. For all assignments a for s, given that a(s′) ∈ δ(s, s′), we have a(s′) ≤ right(δ(s, s′)) = 0.

Case (2′): ∑s′′∈S∖{s′}left(δ(s, s′′)) = 1. Consider an (arbitrary) assignment a for s. Recall that a(s′′) ≥ left(δ(s, s′′)) ≥ 0 for all s′′ ∈ S. Hence ∑s′′∈S∖{s′}left(δ(s, s′′)) = 1 means that ∑s′′∈S∖{s′}a(s′′) ≥ 1. Then, given that assignments are distributions, and hence that ∑s′′∈Sa(s′′) = 1, we must have a(s′) = 0. Because we chose a to be an arbitrary assignment for s, we have shown that a(s′) = 0 for all assignments a for s.

Hence we have established that the existence of an assignment a for s such that a(s′) > 0 implies that (s, s′) ∈ E. ■

We use edges to define the notion of path for IMCs: a path of an IMC O = (S, δ) is a sequence s0s1⋯ such that (si, si+1) ∈ E for all i ≥ 0. Given a path ρ = s0s1⋯ and i ≥ 0, we let state[ρ](i) = si be the (i + 1)-th state along ρ. We use to denote the set of paths of O, to denote the set of finite paths of O, and and to denote the sets of paths and finite paths starting in state s ∈ S. We refer to (S, E) as the graph of O.

We define the size of an IMC O = (S, δ) as the size of the representation of δ, which is the sum over all states s, s′ ∈ S of the binary representation of the endpoints of δ(s, s′), where rational numbers are encoded as the quotient of integers written in binary.

IMCs are presented typically with regard to two semantics, which we consider in turn. Given an IMC O = (S, δ), the uncertain Markov chain (UMC) semantics of O, denoted by [O]U, is the smallest set of DTMCs such that (S, P) ∈ [O]U if, for each state s ∈ S, the distribution P(s, ⋅) is an assignment for s. The interval Markov decision process (IMDP) semantics of O, denoted by [O]I, is the MDP (S, Δ) where, for each state s ∈ S, we let Δ(s) be the set of assignments for s.

Let T⊆S be a set of states of IMC O = (S, δ). We define to be the set of paths of O that reach at least one state in T. Formally, . In the following we assume without loss of generality that states in T are absorbing in all the IMCs that we consider, i.e., δ(s, s) = [1, 1] for all states s ∈ T.
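As noted in the introduction, certain qualitative cases can be handled by reachability analysis on the graph (S, E). A minimal sketch of the basic graph primitive, computing the states from which some path of O reaches T, is a backward breadth-first search over the edge set. Which qualitative problems this settles is established in the later sections; the function name `backward_reachable` is hypothetical.

```python
from collections import deque


def backward_reachable(states, E, T):
    """States from which some path of the graph (S, E) reaches a state in T."""
    preds = {s: set() for s in states}
    for (u, v) in E:
        preds[v].add(u)
    reached = set(T)
    frontier = deque(T)
    while frontier:
        v = frontier.popleft()
        for u in preds[v]:
            if u not in reached:
                reached.add(u)
                frontier.append(u)
    return reached


states = ["a", "b", "c", "d"]
E = {("a", "b"), ("b", "c"), ("c", "c"), ("d", "d")}
print(sorted(backward_reachable(states, E, {"c"})))  # ['a', 'b', 'c']
```

Each edge is inspected a bounded number of times, so the search is linear in |S| + |E|.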

Valid edge sets

Let O = (S, δ) be an IMC with edge set E, and let s ∈ S be a state of O. Edge (s′, s′′) ∈ E has source s if s = s′ and target s if s = s′′. Let E(s) = {(s, s′) ∈ E∣s′ ∈ S} be the set of edges of O with source s. Given ⋆ ∈ {[⋅, ⋅], (⋅, ⋅], [⋅, ⋅), (⋅, ⋅), 〈 + , ⋅〉, [0, ⋅〉, (0, ⋅〉, 〈0, ⋅〉, 〈⋅, ⋅]}, let E⋆ = {(s, s′) ∈ E∣δ(s, s′) ∈ ℐ⋆}. Given X⊆S, and given s and ⋆ as defined above, let E(s, X) = {(s, s′) ∈ E(s)∣s′ ∈ X} be the set of edges with source s and target in X, and let E⋆(s, X) = E(s, X)∩E⋆.

In the sequel, we will be interested in identifying, for a state s ∈ S, the sets of edges with source s whose target states are exactly the states assigned positive probability by some assignment for s. This will allow us to reason about sets of edges with certain characteristics, rather than reasoning directly about assignments. We consider two characteristics of a set of edges with the same source state: the first, called largeness, requires that the right endpoints of the intervals of the set's edges sum to at least 1, with all of these intervals right-closed if the sum is exactly 1; the second, called realisability, requires that every edge not included in the set can be assigned probability 0. The formal definition of these characteristics follows.

Definition 2: Large, realisable and valid edge sets.

Let B⊆E(s) be a set of edges with source state s ∈ S. The set B is:

-

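large if either (a) $\sum_{e \in B} \mathit{right}(\delta(e)) > 1$ or (b) $\sum_{e \in B} \mathit{right}(\delta(e)) = 1$ and $B \subseteq E_{\langle\cdot,\cdot]}$;

-

realisable if $E(s) \setminus B \subseteq E_{[0,\cdot\rangle}$;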

-

valid if it is large and realisable. □
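Definition 2 can be checked directly from interval endpoints. The Python sketch below is a minimal rendering under assumptions of our own: an edge set with source s is represented by the set of its target states, `row[t]` stands for δ(s, t), and the hypothetical helper `edge_targets` takes (s, t) to be an edge when right(δ(s, t)) > 0 and the left endpoints of the other intervals leaving s sum to strictly less than 1, which is how the proofs below use the definition of edges.

```python
from fractions import Fraction as F
from typing import NamedTuple


class Interval(NamedTuple):
    left: F
    right: F
    left_closed: bool = True
    right_closed: bool = True


ZERO = Interval(F(0), F(0))  # targets missing from a row are read as [0, 0]


def edge_targets(row, states):
    """Targets t such that (s, t) is an edge (assumed reading, see lead-in)."""
    total_left = sum(iv.left for iv in row.values())
    return {t for t in states
            if row.get(t, ZERO).right > 0
            and total_left - row.get(t, ZERO).left < 1}


def is_large(row, B):
    """Largeness: right endpoints over B sum to > 1, or to exactly 1 with
    every interval of B right-closed."""
    total = sum(row[t].right for t in B)
    return total > 1 or (total == 1 and all(row[t].right_closed for t in B))


def is_realisable(row, B, states):
    """Realisability: every edge from s outside B has an interval of the
    form [0, .>, so it can be given probability 0."""
    rest = edge_targets(row, states) - set(B)
    return all(row[t].left_closed and row[t].left == 0 for t in rest)


def is_valid(row, B, states):
    return is_large(row, B) and is_realisable(row, B, states)


states = ["s0", "s1", "s2", "s3"]
row = {"s1": Interval(F(0), F(1, 2)),                # [0, 1/2]
       "s2": Interval(F(0), F(1, 2), True, False),   # [0, 1/2)
       "s3": Interval(F(0), F(1), False, True)}      # (0, 1]
for B in [{"s1", "s2"}, {"s1", "s3"}, {"s3"}]:
    print(sorted(B), is_valid(row, B, states))  # False, True, True in turn
```

In this example {s1, s2} is not large, because its right endpoints sum to exactly 1 while [0, 1/2) is right-open; it is not realisable either, because (0, 1] cannot be given probability 0. Every valid set must therefore contain s3.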

The following lemma states that the valid sets of edges with source state s characterise exactly the supports of the assignments for s. In its statement and proof we use the following notation. Given s ∈ S and B ⊆ E(s), we partition S into the following sets:

-

TB = {s′∣(s, s′) ∈ B} denotes the target states of edges in B,

-

TE(s)∖B = {s′∣(s, s′) ∈ E(s)∖B} denotes the target states of edges with source s that are not in B, and

-

S¬E(s) = S∖{s′∣(s, s′) ∈ E(s)} denotes states that are not target states of the edges that have source state s.

Lemma 1. Let s ∈ S and B ⊆ E(s). Then B is valid if and only if there exists an assignment a for s such that support(a) = TB. □

(⇒) Let B be a valid subset of E(s). We have $\sum_{e \in B} \mathit{left}(\delta(e)) \leq \sum_{e \in E(s)} \mathit{left}(\delta(e)) \leq \sum_{s' \in S} \mathit{left}(\delta(s, s')) \leq 1$, from B ⊆ E(s) and condition (1) of well-formedness. Furthermore, $\sum_{e \in B} \mathit{right}(\delta(e)) \geq 1$ because B is large.

First we identify conditions that are analogues of well-formedness conditions restricted to the set B of edges. Then, using these conditions, we proceed as in the (⇒) direction of Proposition 1 to define an assignment that assigns positive probability only to target states of edges in B.

Well-formedness conditions (1) and (2), together with the fact that B ⊆ E(s), establish the first two of the following conditions, whereas the largeness of B establishes the last two:

-

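$\sum_{e \in B} \mathit{left}(\delta(e)) \leq 1$,

-

$\sum_{e \in B} \mathit{left}(\delta(e)) = 1$ implies that δ(e) is left-closed for all e ∈ B,

-

$\sum_{e \in B} \mathit{right}(\delta(e)) \geq 1$, and

-

$\sum_{e \in B} \mathit{right}(\delta(e)) = 1$ implies that δ(e) is right-closed for all e ∈ B.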

It will be useful to consider the following strengthening of the second of these conditions:

-

$\sum_{e \in B} \mathit{left}(\delta(e)) = 1$ implies that δ(s, s′) is left-closed for all s′ ∈ S.

This strengthened condition is justified as follows. Assume that $\sum_{e \in B} \mathit{left}(\delta(e)) = 1$. From this fact, from $\sum_{s' \in S} \mathit{left}(\delta(s, s')) \leq 1$ (that is, condition (1) of well-formedness) and from the fact that B ⊆ {s} × S, we have $\sum_{s' \in S} \mathit{left}(\delta(s, s')) = 1$. Then, by condition (2) of well-formedness, δ(s, s′) is left-closed for all s′ ∈ S. We note that an analogous strengthening of the fourth condition cannot be obtained in general, because there may exist a pair (s, s′) ∈ ({s} × S) ∖ B such that right(δ(s, s′)) > 0 without contradicting well-formedness condition (3).

Next, we define an assignment in a similar manner to that featured in the (⇒) direction of Proposition 1, taking care to guarantee that the assignment we define assigns positive probability only to target states of edges in B. As in the (⇒) direction of Proposition 1, we define a function f:S → [0, 1] such that:

-

(a) $\mathit{left}(\delta(s, s')) + f(s') \in \delta(s, s')$ for all s′ ∈ S, and

-

(b) $\sum_{s' \in S} \big(\mathit{left}(\delta(s, s')) + f(s')\big) = 1$.

We define a:S → [0, 1] by setting a(s′) = left(δ(s, s′)) + f(s′) for all s′ ∈ S. As in the proof of Proposition 1, the function a is an assignment, because condition (a) in the definition of f establishes that a(s′) ∈ δ(s, s′) for each state s′ ∈ S, and condition (b) establishes that a ∈ Dist(S).

In order to define an appropriate f, we consider the following cases.

Case $\sum_{e \in B} \mathit{left}(\delta(e)) = 1$. As in the analogous cases of the proof of Proposition 1, we let f(s′) = 0 for all s′ ∈ S. Condition (a) in the definition of f is satisfied for the following reason. Given that $\sum_{e \in B} \mathit{left}(\delta(e)) = 1$, the antecedent of the strengthened condition above is satisfied, and hence δ(s, s′) is left-closed (i.e., left(δ(s, s′)) ∈ δ(s, s′)) for all s′ ∈ S; the choice of f(s′) = 0 then means that left(δ(s, s′)) + f(s′) ∈ δ(s, s′) for all s′ ∈ S, which establishes condition (a).

We now establish condition (b) in the definition of f. Given that f(s′) = 0 for all s′ ∈ S, showing condition (b) reduces to showing that $\sum_{s' \in S} \mathit{left}(\delta(s, s')) = 1$. Given that $\sum_{e \in B} \mathit{left}(\delta(e)) = 1$, our task reduces in turn to showing that left(δ(s, s′)) = 0 for all $s' \in S \setminus T_B$. Recall that $S \setminus T_B = T_{E(s) \setminus B} \cup S_{\neg E(s)}$, because $T_B$, $T_{E(s) \setminus B}$ and $S_{\neg E(s)}$ form a partition of S. We first show that left(δ(s, s′)) = 0 for all $s' \in T_{E(s) \setminus B}$: this follows from $E(s) \setminus B \subseteq E_{[0,\cdot\rangle}$ (by the realisability of B) and from the fact that left(δ(e)) = 0 for all $e \in E_{[0,\cdot\rangle}$ by definition. Next, we show that left(δ(s, s′)) = 0 for all $s' \in S_{\neg E(s)}$. By the definition of edges, for $s' \in S_{\neg E(s)}$, either right(δ(s, s′)) = 0 or $\sum_{s'' \in S \setminus \{s'\}} \mathit{left}(\delta(s, s'')) \geq 1$. In the former case, from left(δ(s, s′)) ≤ right(δ(s, s′)) (by the definition of δ), we must have left(δ(s, s′)) = 0. In the latter case, given that well-formedness condition (1) specifies that $\sum_{s'' \in S} \mathit{left}(\delta(s, s'')) \leq 1$, we must again have left(δ(s, s′)) = 0. Hence condition (b) in the definition of f holds.

Next we establish that support(a) = $T_B$. First we show that $\mathit{support}(a) \subseteq T_B$. By the reasoning in the previous paragraph, left(δ(s, s′)) = 0 for all $s' \in S \setminus T_B$; given that f(s′) = 0 for all s′ ∈ S, we have a(s′) = left(δ(s, s′)) + f(s′) = 0 for all $s' \in S \setminus T_B$, and hence $\mathit{support}(a) \subseteq T_B$. Second, we show that $\mathit{support}(a) \supseteq T_B$. Consider (s, s′) ∈ B. On the one hand, $\sum_{s'' \in S} \mathit{left}(\delta(s, s'')) \geq \sum_{e \in B} \mathit{left}(\delta(e)) = 1$ by the definition of this case; on the other hand, $\sum_{s'' \in S \setminus \{s'\}} \mathit{left}(\delta(s, s'')) < 1$ by the definition of edges, because (s, s′) ∈ E. Combining these two facts, we conclude that left(δ(s, s′)) > 0, i.e., left(δ(e)) > 0 for all e ∈ B. Then a(s′) = left(δ(s, s′)) + f(s′) > 0 for all $s' \in T_B$, which establishes $\mathit{support}(a) \supseteq T_B$.

Case $\sum_{e \in B} \mathit{left}(\delta(e)) < 1$ and $\sum_{e \in B} \mathit{right}(\delta(e)) = 1$. We let f(s′) = right(δ(s, s′)) − left(δ(s, s′)) for all $s' \in T_B$, and f(s′) = 0 for all $s' \in S \setminus T_B$. Hence left(δ(s, s′)) + f(s′) = right(δ(s, s′)) for all $s' \in T_B$, and left(δ(s, s′)) + f(s′) = left(δ(s, s′)) for all $s' \in S \setminus T_B$. We first establish that this choice of f yields an assignment, by showing that conditions (a) and (b) in the definition of f are satisfied.

First consider condition (a). The antecedent of the fourth condition above is satisfied in this case, hence δ(e) is right-closed for all e ∈ B, i.e., right(δ(s, s′)) ∈ δ(s, s′) for all $s' \in T_B$. For $s' \in S \setminus T_B$, because f(s′) = 0, we need to show that left(δ(s, s′)) ∈ δ(s, s′), i.e., that δ(s, s′) is left-closed. Recalling that $T_{E(s) \setminus B}$ and $S_{\neg E(s)}$ form a partition of $S \setminus T_B$, we consider two sub-cases. For $s' \in T_{E(s) \setminus B}$, given that $E(s) \setminus B \subseteq E_{[0,\cdot\rangle}$ by the realisability of B, δ(s, s′) is left-closed. For $s' \in S_{\neg E(s)}$, by the definition of edges, either right(δ(s, s′)) = 0 or $\sum_{s'' \in S \setminus \{s'\}} \mathit{left}(\delta(s, s'')) \geq 1$. If right(δ(s, s′)) = 0, then δ(s, s′) = [0, 0], which is left-closed. Otherwise, given that well-formedness condition (1) specifies that $\sum_{s'' \in S} \mathit{left}(\delta(s, s'')) \leq 1$, we must have $\sum_{s'' \in S} \mathit{left}(\delta(s, s'')) = 1$, and well-formedness condition (2) then specifies that δ(s, s′) is left-closed. Hence condition (a) in the definition of f holds.

Next we turn our attention to condition (b) in the definition of f. Recall that $T_B$, $T_{E(s) \setminus B}$ and $S_{\neg E(s)}$ form a partition of S. First consider $T_B$. Given that $\sum_{e \in B} \mathit{right}(\delta(e)) = 1$, we have $\sum_{s' \in T_B} \mathit{right}(\delta(s, s')) = 1$ and hence, by the choice of f, $\sum_{s' \in T_B} \big(\mathit{left}(\delta(s, s')) + f(s')\big) = 1$. Now consider $T_{E(s) \setminus B}$. For $s' \in T_{E(s) \setminus B}$ we have chosen f(s′) = 0, and left(δ(s, s′)) = 0 because $E(s) \setminus B \subseteq E_{[0,\cdot\rangle}$ by the realisability of B; hence $\sum_{s' \in T_{E(s) \setminus B}} \big(\mathit{left}(\delta(s, s')) + f(s')\big) = 0$. Finally, for $s' \in S_{\neg E(s)}$, either right(δ(s, s′)) = 0, in which case left(δ(s, s′)) = 0, or $\sum_{s'' \in S \setminus \{s'\}} \mathit{left}(\delta(s, s'')) \geq 1$, in which case well-formedness condition (1), which specifies that $\sum_{s'' \in S} \mathit{left}(\delta(s, s'')) \leq 1$, establishes that left(δ(s, s′)) = 0. Given that f(s′) = 0 for all $s' \in S \setminus T_B$ and $S_{\neg E(s)} \subseteq S \setminus T_B$, we have $\sum_{s' \in S_{\neg E(s)}} \big(\mathit{left}(\delta(s, s')) + f(s')\big) = 0$. From the fact that $T_B$, $T_{E(s) \setminus B}$ and $S_{\neg E(s)}$ form a partition of S, we then conclude that:

$$\sum_{s' \in S} \big(\mathit{left}(\delta(s, s')) + f(s')\big) = \sum_{s' \in T_B} \big(\mathit{left}(\delta(s, s')) + f(s')\big) + \sum_{s' \in T_{E(s) \setminus B}} \big(\mathit{left}(\delta(s, s')) + f(s')\big) + \sum_{s' \in S_{\neg E(s)}} \big(\mathit{left}(\delta(s, s')) + f(s')\big) = 1 + 0 + 0 = 1.$$

Hence condition (b) in the definition of f holds, and therefore the choice of f yields an assignment.

It remains to show that support(a) = $T_B$. From the reasoning above, left(δ(s, s′)) + f(s′) = right(δ(s, s′)) for all $s' \in T_B$, and left(δ(s, s′)) + f(s′) = 0 for all $s' \in S \setminus T_B$. Hence $\mathit{support}(a) \subseteq T_B$. By the definition of edges, right(δ(s, s′)) > 0 for all (s, s′) ∈ E(s), and hence, from B ⊆ E(s), right(δ(s, s′)) > 0 for all $s' \in T_B$. Hence $\mathit{support}(a) \supseteq T_B$, and we conclude that support(a) = $T_B$.

Case $\sum_{e \in B} \mathit{left}(\delta(e)) < 1 < \sum_{e \in B} \mathit{right}(\delta(e))$. For each $s' \in T_B$, we let:

$$f(s') = \mathit{width}(\delta(s, s')) \cdot \frac{1 - \sum_{s'' \in T_B} \mathit{left}(\delta(s, s''))}{\sum_{s'' \in T_B} \mathit{width}(\delta(s, s''))}$$

and let f(s′) = 0 for each $s' \in S \setminus T_B$. Note that the denominator is positive, because $\sum_{s'' \in T_B} \mathit{width}(\delta(s, s'')) = \sum_{e \in B} \mathit{right}(\delta(e)) - \sum_{e \in B} \mathit{left}(\delta(e)) > 0$ in this case.

First we show that this definition of f satisfies condition (a), i.e., left(δ(s, s′)) + f(s′) ∈ δ(s, s′) for all s′ ∈ S. In the sub-case in which left(δ(s, s′)) = right(δ(s, s′)) (i.e., δ(s, s′) is the degenerate interval [left(δ(s, s′)), right(δ(s, s′))]), we have f(s′) = 0, because either $s' \in S \setminus T_B$, or $s' \in T_B$ and width(δ(s, s′)) = 0. As in the proof of Proposition 1, we then conclude that left(δ(s, s′)) + f(s′) ∈ δ(s, s′). Now consider the sub-case in which left(δ(s, s′)) < right(δ(s, s′)). Recall again that $T_B$, $T_{E(s) \setminus B}$ and $S_{\neg E(s)}$ form a partition of S. If $s' \in T_{E(s) \setminus B}$ or $s' \in S_{\neg E(s)}$, we conclude, by the same reasoning as in the previous case, that left(δ(s, s′)) + f(s′) ∈ δ(s, s′). If instead $s' \in T_B$, we proceed in a similar manner to the analogous case of Proposition 1: to show left(δ(s, s′)) + f(s′) ∈ δ(s, s′), we establish that 0 < f(s′) < width(δ(s, s′)). The fact that f(s′) > 0 is a consequence of the following three facts: $1 - \sum_{e \in B} \mathit{left}(\delta(e)) > 0$ (by the definition of the case), width(δ(s, s′)) > 0 (given that we are considering the sub-case in which left(δ(s, s′)) < right(δ(s, s′))), and $\sum_{s'' \in T_B} \mathit{width}(\delta(s, s'')) > 0$ (from the previous fact and the fact that width(δ(s, s′′)) ≥ 0 for all s′′ ∈ S). We now show that f(s′) < width(δ(s, s′)). By the definition of f, this requires showing that

$$\frac{1 - \sum_{s'' \in T_B} \mathit{left}(\delta(s, s''))}{\sum_{s'' \in T_B} \mathit{width}(\delta(s, s''))} < 1.$$

Given that we are considering the case in which $1 < \sum_{e \in B} \mathit{right}(\delta(e))$, i.e., $1 < \sum_{s'' \in T_B} \big(\mathit{left}(\delta(s, s'')) + \mathit{width}(\delta(s, s''))\big)$, we can conclude that $1 - \sum_{s'' \in T_B} \mathit{left}(\delta(s, s'')) < \sum_{s'' \in T_B} \mathit{width}(\delta(s, s''))$, and hence that the above fraction is strictly less than 1. Thus the choice of f(s′) satisfies left(δ(s, s′)) + f(s′) ∈ δ(s, s′) for $s' \in T_B$.

We now show that f satisfies condition (b), i.e., $\sum_{s' \in S} \big(\mathit{left}(\delta(s, s')) + f(s')\big) = 1$. Recall that $T_B$, $T_{E(s) \setminus B}$ and $S_{\neg E(s)}$ form a partition of S, and that f(s′) = 0 for each $s' \in S \setminus T_B$. Following similar reasoning to that of the previous case and of the proof of Proposition 1, we show that left(δ(s, s′)) = 0, and hence left(δ(s, s′)) + f(s′) = 0, for each $s' \in S \setminus T_B$. For $s' \in T_{E(s) \setminus B}$, we have left(δ(s, s′)) = 0 from $E(s) \setminus B \subseteq E_{[0,\cdot\rangle}$, because B is realisable. For $s' \in S_{\neg E(s)}$, we have left(δ(s, s′)) = 0 by the definition of edges: either right(δ(s, s′)) = 0 and hence left(δ(s, s′)) = 0, or the combination of $\sum_{s'' \in S \setminus \{s'\}} \mathit{left}(\delta(s, s'')) \geq 1$ and $\sum_{s'' \in S} \mathit{left}(\delta(s, s'')) \leq 1$ (well-formedness condition (1)) establishes that left(δ(s, s′)) = 0. Our aim therefore reduces to showing that $\sum_{s' \in T_B} \big(\mathit{left}(\delta(s, s')) + f(s')\big) = 1$. We proceed as for the analogous case in Proposition 1:

$$\sum_{s' \in T_B} \big(\mathit{left}(\delta(s, s')) + f(s')\big) = \sum_{s' \in T_B} \mathit{left}(\delta(s, s')) + \Big(1 - \sum_{s'' \in T_B} \mathit{left}(\delta(s, s''))\Big) \cdot \frac{\sum_{s' \in T_B} \mathit{width}(\delta(s, s'))}{\sum_{s'' \in T_B} \mathit{width}(\delta(s, s''))} = 1.$$

Next, we show that support(a) = $T_B$. The fact that $\mathit{support}(a) \subseteq T_B$ follows from left(δ(s, s′)) + f(s′) = 0 for each $s' \in S \setminus T_B$, as established above. To establish $\mathit{support}(a) \supseteq T_B$, we observe the following. For $s' \in T_B$, we have (s, s′) ∈ B ⊆ E(s), and hence right(δ(s, s′)) > 0 by the definition of edges. If width(δ(s, s′)) = 0, then left(δ(s, s′)) = right(δ(s, s′)) > 0. If instead width(δ(s, s′)) > 0, then f(s′) > 0 by the choice of f. Hence left(δ(s, s′)) + f(s′) > 0 for each $s' \in T_B$, from which we obtain $\mathit{support}(a) \supseteq T_B$. We conclude that support(a) = $T_B$.

This concludes the (⇒) direction of the proof.
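The (⇒) direction of Lemma 1 is constructive, so it can be read as an algorithm. The following Python sketch transcribes the three choices of f under the hypothetical representation used for illustration here (`row[t]` stands for δ(s, t), and B is given by its set of target states). As in the proof, it assumes a well-formed row and a valid B, and it does not re-check validity.

```python
from fractions import Fraction as F
from typing import NamedTuple


class Interval(NamedTuple):
    left: F
    right: F
    left_closed: bool = True
    right_closed: bool = True

    def contains(self, x):
        above = x > self.left or (self.left_closed and x == self.left)
        below = x < self.right or (self.right_closed and x == self.right)
        return above and below


def build_assignment(row, B):
    """Return a(t) = left(delta(s, t)) + f(t) for every target t in row,
    with f chosen as in the three cases of the (=>) direction of Lemma 1.
    Assumes a well-formed row and a valid edge set B (given by its targets)."""
    L = sum(row[t].left for t in B)    # sum of left endpoints over B
    R = sum(row[t].right for t in B)   # sum of right endpoints over B
    a = {}
    for t, iv in row.items():
        width = iv.right - iv.left
        if t not in B or L == 1:
            f = F(0)                   # outside B, or case: sum of lefts = 1
        elif R == 1:
            f = width                  # case: sum of rights = 1, use right endpoints
        else:
            f = width * (1 - L) / (R - L)  # case: sum of lefts < 1 < sum of rights
        a[t] = iv.left + f
    return a


row = {"s1": Interval(F(0), F(1, 2)),                # [0, 1/2]
       "s2": Interval(F(0), F(1, 2), True, False),   # [0, 1/2)
       "s3": Interval(F(0), F(1), False, True)}      # (0, 1]
a = build_assignment(row, {"s1", "s3"})
print({t: str(v) for t, v in a.items()})  # {'s1': '1/3', 's2': '0', 's3': '2/3'}
print(all(row[t].contains(a[t]) for t in row), sum(a.values()) == 1)  # True True
```

With B = {s1, s3} the third case applies (left endpoints sum to 0, right endpoints to 3/2), so each target in B receives its left endpoint plus a share of the remaining mass proportional to the width of its interval; because the share is strictly smaller than the width, open right endpoints are never hit.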

(⇐) Let a be an assignment for state s such that support(a) = TB. Our aim is to show that B is valid, i.e., that B is large and realisable. By the definition of assignments, a(s′) ∈ δ(s, s′), and hence a(s′) ≤ right(δ(s, s′)), for each state s′ ∈ S. From this fact, and given that a is a distribution (i.e., sums to 1) with support TB, we have:

$$1 = \sum_{s' \in S} a(s') = \sum_{(s, s') \in B} a(s') \leq \sum_{(s, s') \in B} \mathit{right}(\delta(s, s')).$$

If $\sum_{(s, s') \in B} \mathit{right}(\delta(s, s')) > 1$, then B is large by the definition of largeness. If $\sum_{(s, s') \in B} \mathit{right}(\delta(s, s')) = 1$, then the inequality above is an equality, so a(s′) = right(δ(s, s′)) for each (s, s′) ∈ B; since a(s′) ∈ δ(s, s′), each such interval contains its right endpoint, hence $B \subseteq E_{\langle\cdot,\cdot]}$ and, as a consequence, B is large. Furthermore, the fact that {(s, s′) | s′ ∈ support(a)} = B implies that a(s′) = 0 for all (s, s′) ∈ E(s) ∖ B; since a(s′) ∈ δ(s, s′), each such interval is left-closed with left endpoint 0, hence $E(s) \setminus B \subseteq E_{[0,\cdot\rangle}$, and therefore B is realisable. Because B is large and realisable, B is valid. ■

A consequence of Lemma 1, together with Proposition 1 and the fact that we consider only well-formed IMCs, is that there exists at least one valid subset of outgoing edges from each state.

For each state s ∈ S, we let Valid(s) = {B ⊆ E(s) | B is valid}. Note that $|\mathit{Valid}(s)| = 2^{|E(s)|} - 1$ in the worst case (when all edges in E(s) are associated with the interval [0, 1]). Given a valid set B ∈ Valid(s), we let ValidAssign(B) be the set of assignments a for s that witness Lemma 1, i.e., all assignments a for s such that {(s, s′) | s′ ∈ support(a)} = B. Let $\mathit{Valid} = \bigcup_{s \in S} \mathit{Valid}(s)$ be the set of valid sets of the IMC. A witness assignment function w: Valid → Dist(S) assigns to each valid set B ∈ Valid an assignment from ValidAssign(B).
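To see where the worst-case bound on |Valid(s)| comes from, the following Python sketch enumerates Valid(s) by brute force over all subsets of E(s). It is for illustration only: its running time is exponential in |E(s)|, which is precisely what the algorithms below avoid. The names `valid_sets`, `edge_targets` and `is_valid` are hypothetical, and edges are read as in the proof of Lemma 1 (positive right endpoint, other left endpoints summing to less than 1).

```python
from fractions import Fraction as F
from itertools import combinations
from typing import NamedTuple


class Interval(NamedTuple):
    left: F
    right: F
    left_closed: bool = True
    right_closed: bool = True


ZERO = Interval(F(0), F(0))


def edge_targets(row, states):
    total_left = sum(iv.left for iv in row.values())
    return {t for t in states
            if row.get(t, ZERO).right > 0
            and total_left - row.get(t, ZERO).left < 1}


def is_valid(row, B, states):
    total = sum(row[t].right for t in B)
    large = total > 1 or (total == 1 and all(row[t].right_closed for t in B))
    rest = edge_targets(row, states) - set(B)
    realisable = all(row[t].left_closed and row[t].left == 0 for t in rest)
    return large and realisable


def valid_sets(row, states):
    """Brute-force Valid(s): test every subset of E(s) (exponential)."""
    targets = sorted(edge_targets(row, states))
    return [set(B) for k in range(len(targets) + 1)
            for B in combinations(targets, k) if is_valid(row, B, states)]


states = ["t1", "t2", "t3"]
unit = {t: Interval(F(0), F(1)) for t in states}  # every interval is [0, 1]
print(len(valid_sets(unit, states)))  # 7, i.e. 2**3 - 1
```

With all intervals equal to [0, 1], every non-empty subset is large (a single right-closed endpoint already reaches 1) and realisable, while the empty set is not large, giving the bound 2^{|E(s)|} − 1.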

For the state s1 of the IMC O2 of Fig. 2, the valid edge sets are B1 = {(s1, s0), (s1, s1), (s1, s2)} and B2 = {(s1, s0), (s1, s2)}, reflecting the intuition that the edge (s1, s1) can be assigned (exactly) probability 0. Note that modifying the IMC so that the right endpoint of (s1, s0) is reduced to 0.7 would result in B1 being the only valid set associated with s1; the set B2 would not be large, because it is not possible to assign probability 0 to edge (s1, s1) and total probability of 1 to edges (s1, s0) and (s1, s2). An example of a witness assignment function w for state s1 of O2 is w(B1)(s0) = 0.7, w(B1)(s1) = 0.12 and w(B1)(s2) = 0.18, and w(B2)(s0) = 0.8 and w(B2)(s2) = 0.2. □

The qualitative MDP abstraction of O with respect to witness assignment function w is the MDP [O]w = (S, Δw), where Δw(s) = {w(B) | B ∈ Valid(s)} for each state s ∈ S.

Qualitative Reachability: UMC Semantics

Qualitative reachability problems can be classified into four categories, depending on whether we ask that the probability of reaching the target set T is 0 or 1 for some or for all ways of assigning probabilities to intervals. For the UMC semantics of IMC O = (S, δ), state s ∈ S, quantifier Q ∈ {∀, ∃} and probability λ ∈ {0, 1}, the (s, Q, λ)-reachability problem for the UMC semantics of O asks whether $Q D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = \lambda$ holds. To solve this problem, we compute the set $\{s' \in S \mid Q D \in [O]_U .\, \mathrm{Pr}^{D}_{s'}(\mathit{Reach}(T)) = \lambda\}$, then check whether s belongs to this set. Portions of this text were previously published as part of a preprint (Sproston, 2018b).

Theorem 1. Let Q ∈ {∀, ∃} and λ ∈ {0, 1}. The set $\{s \in S \mid Q D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = \lambda\}$ can be computed in polynomial time in the size of the IMC. □

A straightforward corollary of Theorem 1 is that qualitative reachability problems for the UMC semantics of IMCs are in P. The remainder of this section is dedicated to showing Theorem 1 for each of the combinations of Q ∈ {∀, ∃} and λ ∈ {0, 1}.

Computation of $\{s \in S \mid \forall D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 0\}$

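The case in which Q = ∀ and λ = 0 is straightforward. We compute the complement of $\{s \in S \mid \forall D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 0\}$, namely the set of states for which there exists a DTMC in the UMC semantics such that T is reached with positive probability, written formally as $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) > 0\}$. The computation of this set reduces to reachability on the graph of the IMC, according to the following lemma.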

Lemma 2. Let s ∈ S. There exists D ∈ [O]U such that $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) > 0$ if and only if there exists a finite path r of O starting in s such that last(r) ∈ T.

(⇒) Let D = (S, P) ∈ [O]U be a DTMC such that $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) > 0$. Then there exists at least one finite path $s_0 s_1 \cdots s_n$ of D with $s_0 = s$ such that $s_n \in T$, and $P(s_i, s_{i+1}) > 0$ for each i < n. By the definition of the UMC semantics, $P(s_i, \cdot)$ is an assignment for $s_i$ such that $P(s_i, \cdot)(s_{i+1}) > 0$, from which we obtain $(s_i, s_{i+1}) \in E$ by Proposition 1. Repeating this reasoning for each i < n, we conclude that $s_0 s_1 \cdots s_n$ is a finite path of O starting in s. Recalling that $s_n \in T$, this direction of the proof is complete.

(⇐) Let $r = s_0 s_1 \cdots s_n$ be a finite path of O starting in s such that $s_n \in T$, which we assume w.l.o.g. does not contain any cycle. By the definition of finite paths of O, $(s_i, s_{i+1}) \in E$ for each i < n. From Proposition 1, for each i < n, there exists an assignment $a_i$ for state $s_i$ such that $a_i(s_{i+1}) > 0$. Because the states $s_0, \ldots, s_{n-1}$ are pairwise distinct, there exists a DTMC D = (S, P) ∈ [O]U such that $P(s_i, \cdot) = a_i$, and hence $P(s_i, s_{i+1}) > 0$, for each i < n (for any state t ∈ S ∖ {s0, …, sn−1}, the distribution P(t, ⋅) can be chosen as an arbitrary assignment for t). Given that $s_n \in T$, we obtain $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) > 0$. ■

Hence the set $\{s \in S \mid \forall D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 0\}$ is equal to the complement of the set of states from which there exists a path reaching T in the graph of the IMC. Given that the graph of the IMC and the latter set of states can be computed in polynomial time, this set can be computed in polynomial time.
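The resulting procedure for Q = ∀ and λ = 0 is plain backward reachability on the graph (S, E). A minimal Python sketch follows, assuming the hypothetical dictionary encoding of δ used for illustration here and the same reading of the edge relation as in the proof of Lemma 1; the name `forall_zero` is ours.

```python
from fractions import Fraction as F
from typing import NamedTuple


class Interval(NamedTuple):
    left: F
    right: F
    left_closed: bool = True
    right_closed: bool = True


ZERO = Interval(F(0), F(0))


def edge_targets(row, states):
    total_left = sum(iv.left for iv in row.values())
    return {t for t in states
            if row.get(t, ZERO).right > 0
            and total_left - row.get(t, ZERO).left < 1}


def forall_zero(delta, states, T):
    """States from which every DTMC in the UMC semantics reaches T with
    probability 0: the complement of backward graph reachability to T."""
    succ = {s: edge_targets(delta.get(s, {}), states) for s in states}
    can_reach = set(T)
    changed = True
    while changed:                       # at most |S| rounds
        changed = False
        for s in states:
            if s not in can_reach and succ[s] & can_reach:
                can_reach.add(s)
                changed = True
    return set(states) - can_reach


one = Interval(F(1), F(1))
states = ["t", "u", "a", "c", "d"]
delta = {"t": {"t": one}, "u": {"u": one},
         "a": {"t": Interval(F(0), F(1, 2)), "u": Interval(F(1, 2), F(1))},
         "c": {"t": Interval(F(0), F(1, 2)), "u": one},  # (c, t) is not an edge
         "d": {"a": one}}
print(sorted(forall_zero(delta, states, {"t"})))  # ['c', 'u']
```

State c shows why the graph of the IMC is used rather than the raw interval supports: right(δ(c, t)) = 1/2 > 0, but the left endpoint of δ(c, u) is already 1, so every assignment for c gives t probability 0 and (c, t) is not an edge.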

Computation of $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 0\}$

We show that $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 0\}$ can be obtained by computing, in the qualitative MDP abstraction [O]w = (S, Δw) of O with respect to some (arbitrary) witness assignment function w, the set of states for which there exists a scheduler such that T is reached with probability 0.

To establish the correctness of this approach, we show a more general result: for λ ∈ {0, 1}, the set of states of [O]w for which there exists a scheduler such that T is reached with probability λ is equal to the set of states of O for which there exists a DTMC in [O]U such that T is reached with probability λ. The case λ = 0 will be used subsequently for the computation of $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 0\}$, whereas the case λ = 1 will be used later in the article for the computation of $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 1\}$.

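Lemma 3. Let s ∈ S and λ ∈ {0, 1}. There exists D ∈ [O]U such that $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = \lambda$ if and only if there exists a scheduler $\sigma \in \Sigma_{[O]_w}$ such that $\mathrm{Pr}^{\sigma}_{s}(\mathit{Reach}(T)) = \lambda$.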

(⇒) Let D = (S, P) ∈ [O]U be a DTMC such that $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = \lambda$. We define the memoryless scheduler $\sigma_D \in \Sigma_{[O]_w}$ of [O]w in the following way. Consider a state s′ ∈ S, and let $B_{s'} = \{(s', s'') \in E \mid P(s', s'') > 0\}$ be the set of edges with source s′ assigned positive probability by D. Note that $B_{s'} \in \mathit{Valid}(s')$: this follows from the fact that P(s′, ⋅) is an assignment for s′ and from Lemma 1 (by Proposition 1, every s′′ with P(s′, s′′) > 0 satisfies (s′, s′′) ∈ E, so that the support of P(s′, ⋅) is exactly the set of targets of $B_{s'}$). Then, for any finite path r of [O]w ending in state s′ (that is, last(r) = s′), we let $\sigma_D(r) = \{w(B_{s'}) \mapsto 1\}$, i.e., $\sigma_D$ chooses (with probability 1) the distribution obtained by applying the witness assignment function to the edge set $B_{s'}$; this is possible because $B_{s'} \in \mathit{Valid}(s')$ and $\Delta_w(s') = \{w(B) \mid B \in \mathit{Valid}(s')\}$ by definition. Because $\sigma_D$ is memoryless, we can obtain the folded DTMC of $\sigma_D$, in which each state s′ moves according to $w(B_{s'})$. Now observe that the DTMC D and the folded DTMC are graph equivalent in the following sense: for any s′, s′′ ∈ S, we have P(s′, s′′) > 0 if and only if $w(B_{s'})(s'') > 0$, which follows from the fact that $\mathit{support}(w(B_{s'})) = \mathit{support}(P(s', \cdot))$ for all states s′ ∈ S. Then, from (Chatterjee, Sen & Henzinger, 2008, Lemma 2), which specifies that the sets of states satisfying any given qualitative ω-regular (and hence also reachability) property in graph equivalent DTMCs are identical, $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = \lambda$ implies $\mathrm{Pr}^{\sigma_D}_{s}(\mathit{Reach}(T)) = \lambda$.

(⇐) Assume that there exists a scheduler $\sigma \in \Sigma_{[O]_w}$ of [O]w such that $\mathrm{Pr}^{\sigma}_{s}(\mathit{Reach}(T)) = \lambda$. Given that [O]w is a finite MDP, by standard results (de Alfaro, 1997; Baier & Katoen, 2008), we can assume that σ is (a) pure (that is, for all finite paths r of [O]w, we have σ(r) = {μ ↦ 1} for some μ ∈ Δw(last(r))) and (b) memoryless. Given (a) and (b), we can write σ as a mapping $\sigma: S \to \bigcup_{s' \in S} \Delta_w(s')$. Then consider the DTMC D = (S, P), where P(s′, ⋅) = σ(s′) for all states s′ ∈ S. For all states s′ ∈ S, σ(s′) is an assignment for s′, by the following two facts: (1) σ chooses a distribution w(B) for some B ∈ Valid(s′), and (2) w is a witness assignment function, i.e., w(B) is an assignment. Hence P(s′, ⋅) is an assignment for all s′ ∈ S, and therefore D ∈ [O]U. The folded DTMC of σ and the DTMC D are graph equivalent. Hence, given that $\mathrm{Pr}^{\sigma}_{s}(\mathit{Reach}(T)) = \lambda$, we have $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = \lambda$. ■

In particular, Lemma 3 allows us to reduce the problem of computing $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 0\}$ to that of computing the set $\{s \in S \mid \exists \sigma \in \Sigma_{[O]_w} .\, \mathrm{Pr}^{\sigma}_{s}(\mathit{Reach}(T)) = 0\}$ on [O]w, for an arbitrary witness assignment function w. As in the case of standard finite MDP techniques (see Forejt et al. (2011)), we proceed by computing the complement of this set, i.e., the set $\{s \in S \mid \forall \sigma \in \Sigma_{[O]_w} .\, \mathrm{Pr}^{\sigma}_{s}(\mathit{Reach}(T)) > 0\}$. For a state set X ⊆ S, let CPre(X) be the set of states for which there exists a distribution such that all states assigned positive probability by the distribution are in X, defined formally as CPre(X) = {s ∈ S | ∃μ ∈ Δw(s). support(μ) ⊆ X}. Furthermore, let $\widetilde{\mathit{CPre}}(X)$ be the set of states for which all available distributions make a transition to X with positive probability, defined formally as $\widetilde{\mathit{CPre}}(X) = \{s \in S \mid \forall \mu \in \Delta_w(s) .\, \mathit{support}(\mu) \cap X \neq \emptyset\}$. Note that $\widetilde{\mathit{CPre}}$ is the dual of the CPre operator, i.e., $\widetilde{\mathit{CPre}}(X) = S \setminus \mathit{CPre}(S \setminus X)$. The standard algorithm for computing the set of states of a finite MDP for which all schedulers result in reaching a set T of target states with probability strictly greater than 0 operates as follows: starting from X0 = T, we let $X_{i+1} = X_i \cup \widetilde{\mathit{CPre}}(X_i)$ for progressively larger values of i ≥ 0, until we reach a fixpoint (that is, until we obtain $X_{i^*+1} = X_{i^*}$ for some $i^*$). However, a direct application of this algorithm to [O]w results in an exponential-time algorithm, given that the size of the transition function Δw of [O]w is in general exponential in the size of O. For this reason, we propose an algorithm that operates directly on the IMC O, without requiring the explicit construction of [O]w. We proceed by establishing that CPre can be computed in polynomial time in the size of O.
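For comparison, the standard fixpoint on an explicit finite MDP can be sketched in a few lines of Python, assuming the MDP is given as a dictionary from each state to its list of distributions (a representation chosen for this example). Applied to [O]w, the inner test would range over |Valid(s)| distributions per state, up to 2^{|E(s)|} − 1 of them, which is the source of the exponential cost mentioned above.

```python
def forall_positive(mdp, T):
    """States of an explicit finite MDP from which every scheduler reaches T
    with probability > 0. mdp maps each state to a list of distributions,
    each a dict from successor state to probability."""
    X = set(T)
    while True:
        # dual of CPre: every available distribution puts positive mass on X
        dual = {s for s, dists in mdp.items()
                if all(any(p > 0 and t in X for t, p in mu.items()) for mu in dists)}
        if dual <= X:
            return X
        X |= dual


mdp = {"t": [{"t": 1.0}], "u": [{"u": 1.0}],
       "a": [{"t": 0.5, "u": 0.5}, {"u": 1.0}],   # a can avoid t by choosing u
       "b": [{"t": 0.5, "a": 0.5}],
       "c": [{"b": 1.0}, {"t": 1.0}]}
print(sorted(forall_positive(mdp, {"t"})))  # ['b', 'c', 't']
```

The complement, here {a, u}, is the set of states with a scheduler avoiding t altogether.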

Lemma 4. Let s ∈ S and X ⊆ S. Then:

-

s ∈ CPre(X) if and only if E(s, X) is valid;

-

the set CPre(X) can be computed in polynomial time in the size of O.

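The proof of the first part of Lemma 4 relies on Lemma 1 and on the following observation: for any B, B′ ⊆ E(s, X) such that B ⊆ B′, if $E(s) \setminus B \subseteq E_{[0,\cdot\rangle}$ and B is large, then $E(s) \setminus B' \subseteq E_{[0,\cdot\rangle}$ and B′ is large (intuitively, the larger the subset of E(s, X), the easier it is to satisfy realisability and largeness). Formally, the observation holds because $E(s) \setminus B' \subseteq E(s) \setminus B$, and, in the case of B ⊂ B′, for e ∈ B′ ∖ B, given that right(δ(e)) > 0 (by the definition of edges) and $\sum_{e' \in B} \mathit{right}(\delta(e')) \geq 1$ (because B is large), we must have $\sum_{e' \in B'} \mathit{right}(\delta(e')) > 1$ (that is, condition (a) in the definition of largeness holds for B′, regardless of whether condition (a) or (b) holds for B). Hence if there exists B ⊆ E(s, X) such that $E(s) \setminus B \subseteq E_{[0,\cdot\rangle}$ and B is large, then $E(s) \setminus E(s, X) \subseteq E_{[0,\cdot\rangle}$ and E(s, X) is large. We now show formally the first part of Lemma 4:

$$\begin{aligned} s \in \mathit{CPre}(X) &\iff \exists \mu \in \Delta_w(s) .\, \mathit{support}(\mu) \subseteq X \\ &\iff \exists B \in \mathit{Valid}(s) .\, T_B \subseteq X \quad \text{(definitions of } \Delta_w \text{ and of witness assignment functions)} \\ &\iff \exists B \subseteq E(s, X) .\, B \text{ is valid} \\ &\iff E(s, X) \text{ is valid} \quad \text{(observation above)} \end{aligned}$$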

The second part of Lemma 4 follows from the first part and from the fact that checking the validity of E(s, X) (that is, checking that $E(s) \setminus E(s, X) \subseteq E_{[0,\cdot\rangle}$ and that E(s, X) is large) can be done in polynomial time in the size of O. ■

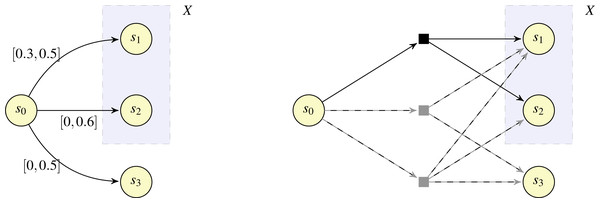

In Fig. 3, we illustrate the intuition underlying Lemma 4, making reference to an IMC fragment (left) and its corresponding qualitative MDP abstraction fragment (right; note that probabilities are not represented for the qualitative MDP abstraction to avoid clutter). Our aim is to determine whether s0 belongs to CPre(X) for X = {s1, s2}. According to Lemma 4, determining whether s0 belongs to CPre(X) is equivalent to determining whether E(s0, X) is valid. The edge set E(s0, X) is large (because right(δ(s0, s1)) + right(δ(s0, s2)) = 0.5 + 0.6 = 1.1 > 1) and realisable (because (s0, s3) ∈ E[0,⋅〉). Hence, by reasoning on the IMC, we can determine the existence of a distribution from s0 in the qualitative MDP abstraction (indicated by the black square) such that the distribution assigns positive probability only to states in X. For completeness, we also note that state s0 is associated not just with the valid edge set {(s0, s1), (s0, s2)}, but also with the valid edge sets {(s0, s1), (s0, s3)} and {(s0, s1), (s0, s2), (s0, s3)}, which correspond to the distributions illustrated with the grey squares with the support sets {s1, s3} and {s1, s2, s3}, respectively, in the qualitative MDP abstraction. □

Based on Lemma 4, instead of constructing and analysing the qualitative MDP abstraction [O]w, we propose computing directly on O the sets X0 = T and $X_{i+1} = X_i \cup (S \setminus \mathit{CPre}(S \setminus X_i))$ for increasing indices i until a fixpoint is reached. Given that a fixpoint must be reached within |S| steps, and that, by Lemma 4, CPre(S ∖ Xi), and hence $X_{i+1}$, can be computed in polynomial time in the size of O, the set $\{s \in S \mid \forall \sigma \in \Sigma_{[O]_w} .\, \mathrm{Pr}^{\sigma}_{s}(\mathit{Reach}(T)) > 0\}$ can be computed in polynomial time in the size of O. By Lemma 3, the complement of this set is equal to $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 0\}$, which can therefore be computed in polynomial time in the size of O.
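The IMC-level version replaces the enumeration of distributions by the validity test of Lemma 4. The Python sketch below uses the same hypothetical encoding as the earlier sketches (`Interval`, `edge_targets`, `is_valid`, `cpre` and `exists_zero` are names of ours, not the article's); it computes the fixpoint X0 = T, X_{i+1} = X_i ∪ (S ∖ CPre(S ∖ X_i)) and returns its complement, i.e., the states from which some DTMC in [O]U reaches T with probability 0.

```python
from fractions import Fraction as F
from typing import NamedTuple


class Interval(NamedTuple):
    left: F
    right: F
    left_closed: bool = True
    right_closed: bool = True


ZERO = Interval(F(0), F(0))


def edge_targets(row, states):
    total_left = sum(iv.left for iv in row.values())
    return {t for t in states
            if row.get(t, ZERO).right > 0
            and total_left - row.get(t, ZERO).left < 1}


def is_valid(row, B, states):
    total = sum(row[t].right for t in B)
    large = total > 1 or (total == 1 and all(row[t].right_closed for t in B))
    rest = edge_targets(row, states) - set(B)
    return large and all(row[t].left_closed and row[t].left == 0 for t in rest)


def cpre(delta, states, X):
    """Lemma 4: s is in CPre(X) iff the edge set E(s, X) is valid."""
    result = set()
    for s in states:
        row = delta.get(s, {})
        if is_valid(row, edge_targets(row, states) & X, states):
            result.add(s)
    return result


def exists_zero(delta, states, T):
    """Complement of the fixpoint X0 = T, X_{i+1} = X_i | (S - CPre(S - X_i))."""
    S = set(states)
    X = set(T)
    while True:
        X_next = X | (S - cpre(delta, states, S - X))
        if X_next == X:
            return S - X
        X = X_next


one = Interval(F(1), F(1))
states = ["t", "u", "a", "b", "c"]
delta = {"t": {"t": one}, "u": {"u": one},
         "a": {"t": Interval(F(0), F(1, 2)), "u": Interval(F(1, 2), F(1))},
         "b": {"t": Interval(F(0), F(1, 2), False, True),   # (0, 1/2]
               "u": Interval(F(1, 2), F(1))},
         "c": {"b": Interval(F(0), F(1, 2)), "u": Interval(F(0), F(1, 2))}}
print(sorted(exists_zero(delta, states, {"t"})))  # ['a', 'u']
```

In this example, b cannot avoid t because the interval (0, 1/2] forces positive probability on (b, t); c is then added in the second iteration, because the only edge set avoiding b, namely {(c, u)}, is not large.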

Figure 3: Example fragments of an IMC (left) and the corresponding qualitative MDP abstraction (right).

Computation of $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 1\}$

We proceed in a manner analogous to the case in which Q = ∃ and λ = 0. First note that, by Lemma 3, the set $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 1\}$ is equal to the set of states of [O]w for which there exists a scheduler that results in T being reached with probability 1, i.e., $\{s \in S \mid \exists \sigma \in \Sigma_{[O]_w} .\, \mathrm{Pr}^{\sigma}_{s}(\mathit{Reach}(T)) = 1\}$. Hence our aim is to compute the latter set on [O]w. We recall the standard algorithm for the computation of this set on finite MDPs (de Alfaro, 1997; de Alfaro, 1999). Given state sets X, Y ⊆ S, we let

$$\mathit{APre}(Y, X) = \{s \in S \mid \exists \mu \in \Delta_w(s) .\, \mathit{support}(\mu) \subseteq Y \wedge \mathit{support}(\mu) \cap X \neq \emptyset\}.$$

That is, APre(Y, X) is the set of states for which there exists a distribution such that (a) all states assigned positive probability by the distribution are in Y and (b) at least one state in X is assigned positive probability by the distribution. The standard algorithm for computing the set of states for which there exists a scheduler that results in T being reached with probability 1 proceeds as follows. First we set Y0 = S and $X^0_0 = T$. Then the sequence $X^0_0, X^0_1, \ldots$ is computed by letting $X^0_{i_0+1} = X^0_{i_0} \cup \mathit{APre}(Y_0, X^0_{i_0})$ for progressively larger indices i0 ≥ 0 until a fixpoint is obtained, that is, until $X^0_{i_0^*+1} = X^0_{i_0^*}$ for some $i_0^*$. Next we let $Y_1 = X^0_{i_0^*}$ and $X^1_0 = T$, and compute $X^1_{i_1+1} = X^1_{i_1} \cup \mathit{APre}(Y_1, X^1_{i_1})$ for larger i1 ≥ 0 until a fixpoint is obtained. Then we let $Y_2 = X^1_{i_1^*}$ and $X^2_0 = T$, and repeat the process. The algorithm terminates when a fixpoint is reached in the sequence Y0, Y1, …, and this fixpoint is the required set. The algorithm requires at most |S|² calls to APre. In a manner analogous to CPre in the case in which Q = ∃ and λ = 0, we show that APre can be characterised by efficiently checkable conditions on O.

Lemma 5. Let s ∈ S and let X, Y ⊆ S. Then:

-

s ∈ APre(Y, X) if and only if E(s, X ∩ Y) ≠ ∅ and E(s, Y) is valid;

-

the set APre(Y, X) can be computed in polynomial time in the size of O.

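The intuition underlying Lemma 5 is similar to that of Lemma 4. In particular, observe that if there exists B ⊆ E(s, Y) such that B ∩ (S × X) ≠ ∅, $E(s) \setminus B \subseteq E_{[0,\cdot\rangle}$ and B is large, then E(s, Y) ∩ (S × X) ≠ ∅, $E(s) \setminus E(s, Y) \subseteq E_{[0,\cdot\rangle}$ and E(s, Y) is large. We first show formally the first part of the lemma:

$$\begin{aligned} s \in \mathit{APre}(Y, X) &\iff \exists \mu \in \Delta_w(s) .\, \mathit{support}(\mu) \subseteq Y \wedge \mathit{support}(\mu) \cap X \neq \emptyset \\ &\iff \exists B \in \mathit{Valid}(s) .\, T_B \subseteq Y \wedge T_B \cap X \neq \emptyset \\ &\iff \exists B \subseteq E(s, Y) .\, B \text{ is valid} \wedge B \cap (S \times X) \neq \emptyset \\ &\iff E(s, Y) \cap (S \times X) \neq \emptyset \wedge E(s, Y) \text{ is valid} \\ &\iff E(s, X \cap Y) \neq \emptyset \wedge E(s, Y) \text{ is valid} \end{aligned}$$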

The second part of the lemma follows from the first part combined with the fact that checking whether E(s, X ∩ Y) ≠ ∅ and E(s, Y) is valid can be done in polynomial time in the size of O. ■

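Hence, by computing APre directly on O within the algorithm above, we obtain an overall polynomial-time algorithm for computing $\{s \in S \mid \exists \sigma \in \Sigma_{[O]_w} .\, \mathrm{Pr}^{\sigma}_{s}(\mathit{Reach}(T)) = 1\}$ which, by Lemma 3, equals $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 1\}$.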
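Combining Lemma 5 with the nested fixpoint recalled above gives the following Python sketch for Q = ∃ and λ = 1, again under the hypothetical encoding of the earlier sketches (`apre` and `exists_one` are names of ours). It is a direct transcription for illustration, without the bookkeeping a tuned implementation would use.

```python
from fractions import Fraction as F
from typing import NamedTuple


class Interval(NamedTuple):
    left: F
    right: F
    left_closed: bool = True
    right_closed: bool = True


ZERO = Interval(F(0), F(0))


def edge_targets(row, states):
    total_left = sum(iv.left for iv in row.values())
    return {t for t in states
            if row.get(t, ZERO).right > 0
            and total_left - row.get(t, ZERO).left < 1}


def is_valid(row, B, states):
    total = sum(row[t].right for t in B)
    large = total > 1 or (total == 1 and all(row[t].right_closed for t in B))
    rest = edge_targets(row, states) - set(B)
    return large and all(row[t].left_closed and row[t].left == 0 for t in rest)


def apre(delta, states, Y, X):
    """Lemma 5: s in APre(Y, X) iff E(s, X & Y) is non-empty and E(s, Y) is valid."""
    result = set()
    for s in states:
        row = delta.get(s, {})
        succ = edge_targets(row, states)
        if succ & X & Y and is_valid(row, succ & Y, states):
            result.add(s)
    return result


def exists_one(delta, states, T):
    """Nested fixpoint: states where some DTMC in [O]_U reaches T w.p. 1."""
    Y = set(states)
    while True:
        X = set(T)
        while True:                              # inner fixpoint from T
            X_next = X | apre(delta, states, Y, X)
            if X_next == X:
                break
            X = X_next
        if X == Y:                               # outer fixpoint reached
            return Y
        Y = X


one = Interval(F(1), F(1))
states = ["t", "u", "a", "b"]
delta = {"t": {"t": one}, "u": {"u": one},
         "a": {"t": Interval(F(0), F(1), True, False),   # [0, 1)
               "u": Interval(F(0), F(1), False, True)},  # (0, 1]
         "b": {"b": Interval(F(0), F(1, 2)),             # [0, 1/2]
               "t": Interval(F(0), F(1), False, True)}}  # (0, 1]
print(sorted(exists_one(delta, states, {"t"})))  # ['b', 't']
```

State a shows the effect of open intervals: every DTMC in [O]U reaches t from a with probability a(t) < 1, although this probability can be made arbitrarily close to 1, and the algorithm excludes a because {(a, t)} is not large.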

Computation of $\{s \in S \mid \forall D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 1\}$

We recall the standard algorithm for determining the set of states of a finite MDP for which all schedulers reach a target set with probability 1 (see Forejt et al. (2011)): from the set of states of the MDP, we first remove the states from which the target set is reached with probability 0 under some scheduler, and then successively remove the states from which some scheduler reaches a previously removed state with positive probability. For each of the remaining states, all schedulers reach the target set with probability 1.

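We propose an algorithm for IMCs that is inspired by this standard algorithm for finite MDPs. Our aim is to compute the complement of $\{s \in S \mid \forall D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 1\}$, i.e., the set $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) < 1\}$.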

Lemma 6. Let s ∈ S, and let $Z = \{s' \in S \mid \exists D' \in [O]_U .\, \mathrm{Pr}^{D'}_{s'}(\mathit{Reach}(T)) = 0\}$. There exists D ∈ [O]U such that $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) < 1$ if and only if there exists a finite path r of O starting in s such that last(r) ∈ Z.

(⇒) Let D = (S, P) ∈ [O]U be a DTMC such that $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) < 1$. A bottom strongly connected component (BSCC) V ⊆ S of D is a strongly connected component of the graph of D (that is, the graph (S, {(s′, s′′) | P(s′, s′′) > 0})) such that there is no outgoing edge from V (that is, for all states s′ ∈ V and s′′ ∈ S, if P(s′, s′′) > 0 then s′′ ∈ V). Let $\mathcal{V} \subseteq 2^S$ be the set of BSCCs of D. Given that D is a finite DTMC, by standard results for finite DTMCs (see, for example, Baier & Katoen (2008)), BSCCs are reached with probability 1, i.e., $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(\bigcup_{V \in \mathcal{V}} V)) = 1$, and once a BSCC is entered all of its states are visited with probability 1. Hence $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) < 1$ implies that there exists some BSCC $V \in \mathcal{V}$ such that V ∩ T = ∅ and $\mathrm{Pr}^{D}_{s}(\mathit{Reach}(V)) > 0$. Next we repeat the reasoning of Lemma 2 to show that there exists a finite path $s_0 s_1 \cdots s_n$ of O starting in s such that $s_n \in V$. We now show that V ⊆ Z: for any state s′ ∈ V, from V ∩ T = ∅ and the fact that BSCCs do not feature outgoing edges, we have $\mathrm{Pr}^{D}_{s'}(\mathit{Reach}(T)) = 0$, and hence s′ ∈ Z. Therefore, for the finite path $s_0 s_1 \cdots s_n$, we have $s_n \in Z$, which completes this direction of the proof.

(⇐) Let $r = s_0 s_1 \cdots s_n$ be a finite path of O starting in s such that $s_n \in Z$. We assume w.l.o.g. that $s_0 s_1 \cdots s_n$ does not contain any cycle, and that $s_i \notin Z$ for all i < n. As in the proof of Lemma 2, we can use these facts to conclude that there exists a DTMC D = (S, P) ∈ [O]U such that $P(s_i, s_{i+1}) > 0$ for each i < n. Furthermore, by the definition of Z, for any state s′ ∈ Z there exists a DTMC $D_{s'} = (S, P_{s'}) \in [O]_U$ such that $\mathrm{Pr}^{D_{s'}}_{s'}(\mathit{Reach}(T)) = 0$. In particular, from $s_n \in Z$, we have $\mathrm{Pr}^{D_{s_n}}_{s_n}(\mathit{Reach}(T)) = 0$. Note that all states on all paths of $D_{s_n}$ starting in $s_n$ are in Z (if this were not the case, that is, if such a path featured a state $s' \notin Z$, then we would also have $\mathrm{Pr}^{D_{s_n}}_{s_n}(\mathit{Reach}(T)) > 0$). The fact that the set {s0, s1, …, sn−1} (i.e., the states before sn along the finite path) and the set Z are disjoint means that we can combine the DTMCs D (along the finite path) and $D_{s_n}$ (within Z) to obtain a DTMC D′ = (S, P′); formally, $P'(s_i, \cdot) = P(s_i, \cdot)$ for i < n, $P'(s', \cdot) = P_{s_n}(s', \cdot)$ for s′ ∈ Z, and P′(s′, ⋅) is an arbitrary assignment for s′ for all other states, i.e., for $s' \in S \setminus (\{s_0, \ldots, s_{n-1}\} \cup Z)$. Given that D, $D_{s_n}$ ∈ [O]U, we have D′ ∈ [O]U. Furthermore, in D′, from s there exists a finite path with positive probability to a state of Z, after which the DTMC is confined to states in Z; by the trivial fact that T ∩ Z = ∅, we therefore have $\mathrm{Pr}^{D'}_{s}(\mathit{Reach}(T)) < 1$, completing this direction of the proof. ■

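Hence the set $\{s \in S \mid \forall D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 1\}$ can be computed by taking the complement of the set of states from which there exists a path, in the graph of O, to the set $\{s \in S \mid \exists D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 0\}$. Given that the latter set, and the set of states reaching it, can be computed in polynomial time, we have obtained a polynomial-time algorithm for computing $\{s \in S \mid \forall D \in [O]_U .\, \mathrm{Pr}^{D}_{s}(\mathit{Reach}(T)) = 1\}$. Together with the cases (Q, λ) = (∀, 0), (∃, 0) and (∃, 1), this establishes Theorem 1.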
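Putting the preceding lemma together with the earlier computation for Q = ∃ and λ = 0, the set for Q = ∀ and λ = 1 can be sketched in Python as follows, assuming the same hypothetical encoding as the earlier sketches. The function `forall_one` (a name of ours) first computes the (∃, 0) set with the CPre-based fixpoint and then removes every state that can reach it in the graph of O.

```python
from fractions import Fraction as F
from typing import NamedTuple


class Interval(NamedTuple):
    left: F
    right: F
    left_closed: bool = True
    right_closed: bool = True


ZERO = Interval(F(0), F(0))


def edge_targets(row, states):
    total_left = sum(iv.left for iv in row.values())
    return {t for t in states
            if row.get(t, ZERO).right > 0
            and total_left - row.get(t, ZERO).left < 1}


def is_valid(row, B, states):
    total = sum(row[t].right for t in B)
    large = total > 1 or (total == 1 and all(row[t].right_closed for t in B))
    rest = edge_targets(row, states) - set(B)
    return large and all(row[t].left_closed and row[t].left == 0 for t in rest)


def cpre(delta, states, X):
    return {s for s in states
            if is_valid(delta.get(s, {}),
                        edge_targets(delta.get(s, {}), states) & X, states)}


def exists_zero(delta, states, T):
    S, X = set(states), set(T)
    while True:
        X_next = X | (S - cpre(delta, states, S - X))
        if X_next == X:
            return S - X
        X = X_next


def forall_one(delta, states, T):
    """Complement of the states that can reach the (exists, 0) set in the graph of O."""
    Z = exists_zero(delta, states, T)
    succ = {s: edge_targets(delta.get(s, {}), states) for s in states}
    reach_z = set(Z)
    changed = True
    while changed:
        changed = False
        for s in states:
            if s not in reach_z and succ[s] & reach_z:
                reach_z.add(s)
                changed = True
    return set(states) - reach_z


one = Interval(F(1), F(1))
states = ["t", "u", "a", "b"]
delta = {"t": {"t": one}, "u": {"u": one},
         "a": {"t": Interval(F(1, 2), F(1), False, True),   # (1/2, 1]
               "u": Interval(F(0), F(1, 2), True, False)},  # [0, 1/2)
         "b": {"b": Interval(F(0), F(1, 2)),                # [0, 1/2]
               "t": Interval(F(1, 2), F(1), False, True)}}  # (1/2, 1]
print(sorted(forall_one(delta, states, {"t"})))  # ['b', 't']
```

State a reaches t with probability 1 under some DTMCs (those with a(u) = 0) but not under all of them, so it is excluded; from b, every assignment sends more than half of the probability to t at each step, so t is reached with probability 1.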

Qualitative Reachability: IMDP semantics

We now focus on the IMDP semantics of IMC O = (S, δ). Qualitative reachability problems in this setting take a similar form to those for the UMC semantics, the key difference being the quantification over schedulers in $\Sigma_{[O]_I}$ in the IMDP semantics, rather than over DTMCs in [O]U in the UMC semantics. For state s ∈ S, quantifier Q ∈ {∀, ∃} and probability λ ∈ {0, 1}, the (s, Q, λ)-reachability problem for the IMDP semantics of O asks whether $Q \sigma \in \Sigma_{[O]_I} .\, \mathrm{Pr}^{\sigma}_{s}(\mathit{Reach}(T)) = \lambda$ holds. To solve this problem, we compute the set $\{s' \in S \mid Q \sigma \in \Sigma_{[O]_I} .\, \mathrm{Pr}^{\sigma}_{s'}(\mathit{Reach}(T)) = \lambda\}$, then check whether s belongs to this set. Portions of this text were previously published as part of a preprint (Sproston, 2018b). This section is dedicated to showing the following result. We note that the cases (Q, λ) = (∀, 0), (∃, 0) and (∃, 1) proceed in a manner similar to the UMC case (using either graph reachability or reasoning based on the qualitative MDP abstraction), whereas the case (∀, 1) requires substantially different techniques.

Theorem 2. Let Q ∈ {∀, ∃} and λ ∈ {0, 1}. The set can be computed in polynomial time in the size of the IMC.

A corollary of Theorem 2 is the membership in P of qualitative reachability problems for the IMDP semantics of IMCs. In the rest of this section, we establish Theorem 2 for Q ∈ {∀, ∃} and λ ∈ {0, 1}.

Computation of

As in the case of UMCs, the computation of relies on a straightforward reachability analysis on the graph of the IMC O to obtain the complement of . The correctness of this approach is based on the following lemma.

Lemma 7. Let s ∈ S. There exists σ ∈ Σ[O]I such that if and only if there exists a path such that last(r) ∈ T. □

(⇒) Let σ ∈ Σ[O]I be a scheduler of [O]I such that . Recall that the DTMC is defined as : the state set of comprises the finite paths resulting from choices of σ from state s, and, for finite paths , we have if r′ = rμs′ such that μ ∈ Δ(last(r)), σ(μ) > 0 and μ(s′) > 0, otherwise . Furthermore, we say that a path ρ of is in Reach(T) if there exists a finite path in , with last state in T, that is visited along ρ. For a path ρ of , we can obtain a path ρ′ of O simply by extracting the sequence of the final states for all finite prefixes of ρ: that is, from the path s0(s0μ0s1)(s0μ0s1μ1s2)⋯ of we can obtain the path s0s1s2⋯ of O, because μi(si+1) > 0 implies that (si, si+1) ∈ E for all i ∈ ℕ (from the definition of [O]I and Lemma 1). Observe that ρ ∈ Reach(T) implies that ρ′ ∈ Reach(T). To conclude, implies that there exists such that ρ ∈ Reach(T), which in turn implies that there exists such that ρ′ ∈ Reach(T), i.e., there exists a finite prefix r of ρ′ (and therefore ) such that last(r) ∈ T.

(⇐) Let be a finite path of O such that sn ∈ T. As in the UMC case, we assume w.l.o.g. that s0s1⋯sn does not contain any cycle, so that the states s0, …, sn−1 are pairwise distinct. By the definition of finite paths of O, we have (si, si+1) ∈ E for each i < n, which, by Lemma 1, implies in turn that there exists an assignment ai to si such that ai(si+1) > 0. We consider a scheduler σ ∈ Σ[O]I of [O]I defined in the following way: for each i < n and all finite paths such that last(r) = si, we let σ(r) = ai (this is well defined because the states si are pairwise distinct; σ can be defined in an arbitrary manner for all other finite paths). Observe that the finite path r = s0(s0a0s1)(s0a0s1a1s2)⋯(s0a0⋯an−1sn) is a finite path of such that all infinite paths of that have r as a prefix are in Reach(T), and hence . ■
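The (⇐) direction only needs one finite path of O from s to T. A breadth-first search returns a shortest such path, which never repeats a state, so a scheduler that depends only on last(r) = si is unambiguous along it. The following is a hypothetical Python sketch over an explicit edge set: `witness_path` and `step_index` are names introduced here, and the assignments ai supplied by Lemma 1 are not modelled, only the index i at which each would be used.

```python
from collections import deque
from typing import Dict, Hashable, List, Optional, Set, Tuple

State = Hashable


def witness_path(s: State, edges: Set[Tuple[State, State]], target: Set[State]) -> Optional[List[State]]:
    """Shortest path s = s_0 s_1 ... s_n of the graph with s_n in `target`, or None."""
    succ: Dict[State, List[State]] = {}
    for u, v in edges:
        succ.setdefault(u, []).append(v)
    parent: Dict[State, Optional[State]] = {s: None}
    queue = deque([s])
    while queue:
        u = queue.popleft()
        if u in target:
            # Follow parent pointers back to s, then reverse.
            path = [u]
            while parent[path[-1]] is not None:
                path.append(parent[path[-1]])
            return path[::-1]
        for v in succ.get(u, []):
            if v not in parent:
                parent[v] = u
                queue.append(v)
    return None


def step_index(path: List[State]) -> Dict[State, int]:
    """Map each s_i (i < n) to i; well defined because a shortest path repeats no state."""
    return {state: i for i, state in enumerate(path[:-1])}


# Usage: 0 -> 1 -> 2 is the only route from 0 to the target {2}.
E = {(0, 1), (1, 0), (1, 2), (0, 3)}
path = witness_path(0, E, {2})
print(path, step_index(path))  # [0, 1, 2] {0: 0, 1: 1}
```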

Therefore, to obtain , we compute the state set , which reduces to reachability on the graph of the IMC according to Lemma 7, and then take the complement of the resulting set.

Computation of and

In the following we fix an arbitrary witness assignment function w of O. Lemma 8 establishes that (respectively, ) equals the set of states of the qualitative MDP abstraction [O]w with respect to w for which there exists some scheduler such that T is reached with probability 0 (respectively, probability 1).

Lemma 8. Let s ∈ S and λ ∈ {0, 1}. There exists a scheduler σ ∈ Σ[O]I such that if and only if there exists a scheduler σ′ ∈ Σ[O]w such that . □

We first deal comprehensively with the case of λ = 0, and then consider the case of λ = 1.

Case: λ = 0. (⇒) Let σ ∈ Σ[O]I be a scheduler of [O]I such that . We show how we can define a scheduler 𝔣(σ) ∈ Σ[O]w such that .

For any finite path , let be the set of edges assigned positive probability by σ after r. Formally: We now define the partial function , which associates with a finite path of [O]w some finite path of [O]I (more precisely, of σ) that features the same sequence of states. For , let such that:

- for all i ≤ n;
- for all i < n;
- and for all i < n.

The first and third conditions, and s0 = s, ensure that ; if these conditions do not hold, then 𝔤(r) is undefined. The second condition ensures that 𝔤 maps paths of to paths of in a consistent manner (for example, the condition ensures that, if there are two finite paths r1 and r2 of [O]w with a common prefix of length i, then 𝔤(r1) and 𝔤(r2) will also have a common prefix of length i). We are now in a position to define the scheduler 𝔣(σ) of [O]w: let for each finite path (recall that is an assignment that witnesses Lemma 1 for ).
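The only information that 𝔣(σ) retains from the choice of σ after 𝔤(r) is the set of edges used with positive probability, to which the witness assignment function w is then applied. The hypothetical Python sketch below assumes that a randomised choice is a list of (weight, distribution) pairs and represents w as an arbitrary function from edge sets to distributions; the uniform witness used in the example is purely illustrative and need not respect the intervals of an actual IMC.

```python
from fractions import Fraction
from typing import Callable, Dict, FrozenSet, Hashable, List, Tuple

State = Hashable
Dist = Dict[State, Fraction]
Choice = List[Tuple[Fraction, Dist]]  # randomised choice: (weight, distribution) pairs
EdgeSet = FrozenSet[Tuple[State, State]]


def edges_used(last: State, choice: Choice) -> EdgeSet:
    """Edges (last, t) given positive probability by a distribution chosen with positive weight."""
    return frozenset(
        (last, t)
        for weight, mu in choice
        if weight > 0
        for t, p in mu.items()
        if p > 0
    )


def abstract_choice(last: State, choice: Choice, witness: Callable[[EdgeSet], Dist]) -> Dist:
    """Choice of f(sigma) after r: the witness assignment for the edges used by sigma after g(r)."""
    return witness(edges_used(last, choice))


def uniform_witness(edge_set: EdgeSet) -> Dist:
    """Hypothetical witness: uniform over the targets of the edge set (ignores the intervals)."""
    targets = {t for _, t in edge_set}
    return {t: Fraction(1, len(targets)) for t in targets}


# Two different choices of sigma from state "a" with the same support {a, b}.
c1: Choice = [(Fraction(1), {"a": Fraction(1, 3), "b": Fraction(2, 3)})]
c2: Choice = [
    (Fraction(1, 2), {"a": Fraction(1, 2), "b": Fraction(1, 2)}),
    (Fraction(1, 2), {"a": Fraction(9, 10), "b": Fraction(1, 10)}),
    (Fraction(0), {"c": Fraction(1)}),  # weight 0: contributes no edges
]
print(abstract_choice("a", c1, uniform_witness) == abstract_choice("a", c2, uniform_witness))  # True
```

Two choices of σ that differ in their probabilities but share the same support are mapped to the same choice of 𝔣(σ).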

Next we need to show that . Note that if and only if all paths are such that ρ ∉ Reach(T), and similarly if and only if all paths are such that ρ ∉ Reach(T). Hence it suffices to show that the existence of a path such that ρ ∈ Reach(T) implies the existence of a path such that ρ′ ∈ Reach(T). Assuming the existence of some such that ρ ∈ Reach(T), we will identify a path that visits the same states as ρ, and hence is such that ρ′ ∈ Reach(T). Writing ρ = s0μ0s1μ1⋯, and letting ri be the ith prefix of ρ (i.e., ri = s0μ0s1μ1⋯μi−1si), we now show the existence of ρ′ by induction on the length of the prefixes ri′ of ρ′.

(Base case.) Let .

(Inductive step.) Assume that we have constructed the finite path . We show how to extend with one transition to obtain the finite path . The transition siμisi+1 along ρ implies that . This fact then implies that there exists some μ′ ∈ Δ(si) such that σ(𝔤(ri))(μ′) > 0 and μ′(si+1) > 0. We then let .

From this construction of ρ′, we can see that (because ρ′ follows the definition of a path of scheduler σ, and because the choice of μ′ after must be consistent with the choices made along all prefixes of ). Furthermore, we have ρ′ ∈ Reach(T) (because ρ and ρ′ feature the same states, and ρ ∈ Reach(T)). Hence we have shown that the existence of such that ρ ∈ Reach(T) implies the existence of such that ρ′ ∈ Reach(T). This fact means that implies , concluding this direction of the proof.

(⇐) Let σ ∈ Σ[O]w be such that . Given that [O]w is a finite MDP, we can assume that σ is memoryless and pure. We now define σ′ ∈ Σ[O]I and show that . For a finite path , let σ′(r) = σ(last(r)) (recall that, for all states s′ ∈ S, σ(s′) = {w(B)↦1} for some B ∈ Valid(s′), where w(B) is an assignment for s′, hence σ′ is well defined). Then we have that the DTMCs and are identical, and hence implies that .

Hence the part of the proof for λ = 0 is concluded.

Case: λ = 1. (⇒) Let σ ∈ Σ[O]I be such that . We use the construction of the scheduler 𝔣(σ) of [O]w from the case of (i.e., the case λ = 0 of this proof), and show that . We show that implies by showing the contrapositive, i.e., implies . To show this property, we use end components (de Alfaro, 1997). An end component of finite MDP [O]w = (S, Δw) is a pair (C, D) where C ⊆ S and D : C → 2^Dist(S) is such that (1) ∅ ≠ D(s′) ⊆ Δw(s′) for all s′ ∈ C, (2) support(μ) ⊆ C for all s′ ∈ C and μ ∈ D(s′), and (3) the graph (C, {(s′, s′′) ∈ C × C ∣ ∃μ ∈ D(s′). μ(s′′) > 0}) is strongly connected. Let ℰ be the set of end components of [O]w. For an end component (C, D), let sa(C, D) = {(s′, μ) ∣ s′ ∈ C ∧ μ ∈ D(s′)} be the set of state-action pairs associated with (C, D). For a path , we let inf(ρ) = {(s, μ) ∣ the state-action pair (s, μ) appears infinitely often along ρ}. The fundamental theorem of end components (de Alfaro, 1997) specifies that, for any scheduler σ′ ∈ Σ[O]w, we have that .
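Conditions (1) to (3) can be checked mechanically for a candidate pair (C, D). The following Python sketch assumes that a finite MDP is given as a map from each state to the list of its distributions (dictionaries); the function names are hypothetical, and an empty C is rejected, following the usual convention.

```python
from typing import Dict, Hashable, List, Set, Tuple

State = Hashable
Dist = Dict[State, float]
MDP = Dict[State, List[Dist]]


def _reachable(start: State, adj: Dict[State, Set[State]]) -> Set[State]:
    """States reachable from `start` (depth-first search)."""
    seen, stack = {start}, [start]
    while stack:
        u = stack.pop()
        for v in adj.get(u, set()):
            if v not in seen:
                seen.add(v)
                stack.append(v)
    return seen


def strongly_connected(nodes: Set[State], edges: Set[Tuple[State, State]]) -> bool:
    """Every node is reachable from, and reaches, an arbitrary root."""
    fwd: Dict[State, Set[State]] = {}
    bwd: Dict[State, Set[State]] = {}
    for u, v in edges:
        fwd.setdefault(u, set()).add(v)
        bwd.setdefault(v, set()).add(u)
    root = next(iter(nodes))
    return nodes <= _reachable(root, fwd) and nodes <= _reachable(root, bwd)


def is_end_component(mdp: MDP, c: Set[State], d: Dict[State, List[Dist]]) -> bool:
    if not c:
        return False
    for s in c:
        actions = d.get(s, [])
        if not actions:  # (1) D(s) is non-empty ...
            return False
        for mu in actions:
            if mu not in mdp[s]:  # ... and contained in the distributions of s
                return False
            if not {t for t, p in mu.items() if p > 0} <= c:  # (2) support stays in C
                return False
    edges = {(s, t) for s in c for mu in d[s] for t, p in mu.items() if p > 0}
    return strongly_connected(c, edges)  # (3)


# Usage: state 0 can either loop within {0, 1} or jump to the absorbing state 2.
mdp: MDP = {0: [{0: 0.5, 1: 0.5}, {2: 1.0}], 1: [{0: 1.0}], 2: [{2: 1.0}]}
print(is_end_component(mdp, {0, 1}, {0: [{0: 0.5, 1: 0.5}], 1: [{0: 1.0}]}))  # True
```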

Now, given that [O]w is a finite MDP, from and the fact that states in T are absorbing, there exists an end component (C, D) ∈ ℰ such that C ∩ T = ∅ and . We observe the following property: for any finite path , we have that , due to the fact that is a valid assignment for . From the definition of , we can see that σ assigns positive probability only to those distributions that assign positive probability to states that are targets of edges in . Putting these two facts together, we conclude that, for any set X ⊆ S, we have if and only if {s′ ∈ S ∣ ∃μ ∈ Δ(last(𝔤(r))). (σ(𝔤(r))(μ) > 0 ∧ μ(s′) > 0)} ⊆ X. This means that the existence of a finite path such that all suffixes of r generated by 𝔣(σ) visit only states in C implies that all suffixes of 𝔤(r) (which we recall is a finite path of σ, i.e., ) generated by σ visit only states in C. Given that , such a finite path in exists. Then, given that there exists a finite path in such that all suffixes generated by σ visit only states in C, and from the fact that C ∩ T = ∅, we have that . Hence we have shown that implies that . This means that implies that . Hence, for σ ∈ Σ[O]I such that there exists σ′ ∈ Σ[O]w such that .

(⇐) Let σ ∈ Σ[O]w be such that . As in the case of the analogous direction of the case for λ = 0, we can show the existence of σ′ ∈ Σ[O]I such that and are identical, and hence implies that . ■

Given that we have shown in Section ‘Qualitative Reachability: UMC semantics’ that the set of states of the qualitative MDP abstraction [O]w for which there exists some scheduler such that T is reached with probability 0 (respectively, probability 1) can be computed in polynomial time in the size of O, we obtain polynomial-time algorithms for computing (respectively, ).
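For reference, the following hypothetical Python sketch shows the standard graph-based fixpoint computations on a finite MDP (see, e.g., Baier & Katoen (2008)) for the states from which some scheduler reaches T with probability 0, or with probability 1. Only the supports of distributions matter for these questions, so each action is represented by the set of states it reaches with positive probability. The sketch illustrates why such sets are computable in polynomial time; it is not a reproduction of the procedure in the UMC section.

```python
from typing import Dict, FrozenSet, Hashable, List, Set

State = Hashable
# Qualitative view of a finite MDP: each action is represented by its support.
MDP = Dict[State, List[FrozenSet[State]]]


def exists_prob0(mdp: MDP, target: Set[State]) -> Set[State]:
    """States with some scheduler reaching `target` with probability 0 (greatest fixpoint)."""
    z = set(mdp) - set(target)
    changed = True
    while changed:
        changed = False
        for s in list(z):
            # s survives if some action keeps the next state inside z with certainty.
            if not any(post <= z for post in mdp[s]):
                z.discard(s)
                changed = True
    return z


def exists_prob1(mdp: MDP, target: Set[State]) -> Set[State]:
    """States with some scheduler reaching `target` with probability 1 (nested fixpoint)."""
    u = set(mdp)
    while True:
        # Inner least fixpoint: states that can move towards r without ever leaving u.
        r = set(target) & u
        grew = True
        while grew:
            grew = False
            for s in u - r:
                if any(post <= u and post & r for post in mdp[s]):
                    r.add(s)
                    grew = True
        if r == u:
            return u
        u = r  # strictly smaller than before, so the outer loop terminates


mdp: MDP = {
    0: [frozenset({1}), frozenset({0, 2})],
    1: [frozenset({1})],  # absorbing, not a target
    2: [frozenset({2})],  # absorbing target
    3: [frozenset({1, 2})],
}
print(sorted(exists_prob0(mdp, {2})), sorted(exists_prob1(mdp, {2})))  # [0, 1] [0, 2]
```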

The following corollary summarises the relationship between the state sets satisfying analogous reachability problems in the UMC and IMDP semantics, and is obtained by combining Lemma 2, Lemma 3, Lemma 7 and Lemma 8.

, and . □

Computation of

This case is notably different from the other three cases for the IMDP semantics, because schedulers that are not memoryless may influence whether a state is included in . In particular, we recall the example of the IMC of Fig. 1: as explained in Section ‘Introduction’, we have . In contrast, for the UMC semantics, we have , and s0 would be in if we restricted the IMDP semantics to memoryless (actually finite-memory) schedulers. For this reason, a qualitative MDP abstraction is not useful for computing , because it is based on the use of witness assignment functions that assign constant probabilities to sets of edges available from states: on repeated visits to a state, the (finite) set of available distributions remains the same in a qualitative MDP abstraction. Therefore we require alternative analysis methods that are not based on the qualitative MDP abstraction. In this section we introduce an alternative notion of end components, defined solely in terms of states of the IMC, which characterise situations in which the IMC can confine its behaviour to certain state sets with positive probability in the IMDP semantics: for example, the IMC of Fig. 1 can confine itself to state s0 with positive probability in the IMDP semantics. The key characteristic of these end components is that the total probability assigned to edges that have a source state in the component but a target state outside of the component can be made to be arbitrarily small (note that such edges must have an interval with a left endpoint of 0). We now define formally our alternative notion of end components.

Definition 3: ILECs

A set C ⊆ S of states is an IMC-level end component (ILEC) if (1) E〈+,⋅〉(s, S∖C) = ∅ and (2) ∑e∈E(s,C) right(δ(e)) ≥ 1 for each state s ∈ C, and (3) the sub-graph (C, E ∩ (C × C)) is strongly connected. □
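The three conditions of Definition 3 can be checked directly from the interval endpoints. The Python sketch below is hypothetical: it represents δ as a map from edges to (left, right) pairs, ignores whether endpoints are open or closed (the conditions only use endpoint values), and reads E〈+,⋅〉 as the set of edges whose interval has a positive left endpoint, which is an assumption consistent with the examples and the proof of the ILEC lemma. The small IMC in the usage example is invented for illustration and is not one of the article's figures.

```python
from fractions import Fraction as F
from typing import Dict, Hashable, Set, Tuple

State = Hashable
Interval = Tuple[F, F]  # (left, right); open/closed ends are ignored
IMC = Dict[Tuple[State, State], Interval]  # delta restricted to the edges E


def _reachable(start: State, adj: Dict[State, Set[State]]) -> Set[State]:
    seen, stack = {start}, [start]
    while stack:
        u = stack.pop()
        for v in adj.get(u, set()):
            if v not in seen:
                seen.add(v)
                stack.append(v)
    return seen


def is_ilec(imc: IMC, c: Set[State]) -> bool:
    if not c:
        return False
    for s in c:
        out = [iv for (u, v), iv in imc.items() if u == s and v not in c]
        inside = [iv for (u, v), iv in imc.items() if u == s and v in c]
        # (1) no edge from s leaving C has a positive left endpoint
        if any(left > 0 for left, _ in out):
            return False
        # (2) the right endpoints of the edges from s into C sum to at least 1
        if sum((right for _, right in inside), F(0)) < 1:
            return False
    # (3) the sub-graph (C, E ∩ (C × C)) is strongly connected
    fwd: Dict[State, Set[State]] = {}
    bwd: Dict[State, Set[State]] = {}
    for u, v in imc:
        if u in c and v in c:
            fwd.setdefault(u, set()).add(v)
            bwd.setdefault(v, set()).add(u)
    root = next(iter(c))
    return c <= _reachable(root, fwd) and c <= _reachable(root, bwd)


# Hypothetical IMC: {a, b} can only be left via (b, x), whose left endpoint is 0.
O: IMC = {
    ("a", "a"): (F(0), F(1)),
    ("a", "b"): (F(0), F(1, 2)),
    ("b", "a"): (F(1, 2), F(1)),
    ("b", "x"): (F(0), F(1, 2)),
    ("x", "x"): (F(1), F(1)),
}
print(is_ilec(O, {"a", "b"}), is_ilec(O, {"a", "b", "x"}))  # True False
```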

In the IMC O1 of Fig. 1, the set {s0} is an ILEC: for condition (1), the edge (s0, s1) (the only edge in E(s0, S∖{s0})) is not in E〈+,⋅〉, and, for condition (2), we have right(δ(s0, s0)) = 1. In the IMC O2 of Fig. 2, the set {s0, s1} is an ILEC: for condition (1), the only edge leaving {s0, s1} has 0 as its left endpoint, i.e., δ(s1, s2) = (0, 0.2], hence E〈+,⋅〉(s0, {s2}) = E〈+,⋅〉(s1, {s2}) = ∅; for condition (2), we have right(δ(s0, s0)) + right(δ(s0, s1)) = 1.6 ≥ 1 and right(δ(s1, s0)) + right(δ(s1, s1)) = 1.3 ≥ 1. In both cases, the identified sets clearly induce strongly connected subgraphs, thus satisfying condition (3). □
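To see numerically why memory matters for confining the behaviour to {s0}, suppose (hypothetically, since the intervals of Fig. 1 are not restated here) that every visit to s0 must move to s1 with some positive probability, which the scheduler may make as small as it likes. The probability of never leaving s0 is then the product of the per-visit staying probabilities. In the sketch below, a history-dependent scheduler lets the leaving probability shrink as 1/n² on successive visits, while a memoryless scheduler uses the same leaving probability (here 1/4) on every visit; exact rational arithmetic is used.

```python
from fractions import Fraction
from typing import Iterable


def stay_probability(leave_probs: Iterable[Fraction]) -> Fraction:
    """Probability of remaining in s0 over successive visits with the given leaving probabilities."""
    prob = Fraction(1)
    for p in leave_probs:
        prob *= 1 - p
    return prob


N = 1000  # number of visits considered is N - 1
# History-dependent choice: leave with probability 1/n^2 on the visit indexed by n = 2, ..., N.
shrinking = stay_probability(Fraction(1, n * n) for n in range(2, N + 1))
# Memoryless choice: leave with the same probability 1/4 on each of the same visits.
constant = stay_probability(Fraction(1, 4) for _ in range(2, N + 1))

print(shrinking)                           # 1001/2000, i.e. (N + 1) / (2N), tending to 1/2
print(constant < Fraction(1, 10**100))     # True: (3/4)^(N - 1) tends to 0
```

The product of the factors 1 − pk stays bounded away from 0 exactly when the sum of the pk is finite, which a scheduler cannot arrange if every leaving probability it uses is bounded below by a positive constant, as is the case for a memoryless or finite-memory scheduler in this situation.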

Remark 2. Both conditions (1) and (2) are necessary to ensure that the probability of leaving C in one step can be made arbitrarily small. Consider an IMC with state s ∈ C such that E(s, C) = {e1} and E(s, S∖C) = {e2, e3}, where δ(e1) = [0.6, 0.8], δ(e2) = [0, 0.2] and δ(e3) = [0, 0.2]. Then condition (1) holds but condition (2) does not: indeed, a total probability of at least 0.2 must be assigned to the edges e2 and e3 that leave C. Now consider an IMC with state s ∈ C such that E(s, C) = {e1, e2} and E(s, S∖C) = {e3}, where δ(e1) = [0, 0.5], δ(e2) = [0, 0.5] and δ(e3) = [0.1, 0.5]. Then condition (2) holds (because the sum of the right endpoints of the intervals associated with e1 and e2 is equal to 1), but condition (1) does not (because the interval associated with e3 specifies that probability at least 0.1 must be assigned to leaving C). Note also that if E(s, C) ⊆ E[⋅,⋅) ∪ E(⋅,⋅) (all edges in E(s, C) have right-open intervals) and ∑e∈E(s,C) right(δ(e)) = 1, there must exist at least one edge in E(s, S∖C) by well-formedness. □
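The two examples can be checked with the hypothetical Python sketch below, which assumes closed intervals as in the examples. For closed intervals that admit at least one distribution, the smallest total probability that a distribution can place on the edges leaving C is the maximum of two quantities: the sum of the left endpoints of the leaving edges (forced by their lower bounds) and 1 minus the sum of the right endpoints of the edges staying in C (forced by the upper bounds). This minimum is 0 exactly when conditions (1) and (2) both hold at s.

```python
from fractions import Fraction as F
from typing import List, Tuple

Interval = Tuple[F, F]  # closed interval [left, right]


def min_leave_probability(inside: List[Interval], outside: List[Interval]) -> F:
    """Smallest total probability on edges leaving C, for closed, well-formed intervals at s."""
    forced_by_lower_bounds = sum((left for left, _ in outside), F(0))
    forced_by_upper_bounds = 1 - sum((right for _, right in inside), F(0))
    return max(forced_by_lower_bounds, forced_by_upper_bounds)


# First example: E(s, C) = {e1}, E(s, S \ C) = {e2, e3}; condition (2) fails.
ex1 = min_leave_probability(inside=[(F(6, 10), F(8, 10))], outside=[(F(0), F(2, 10)), (F(0), F(2, 10))])
# Second example: E(s, C) = {e1, e2}, E(s, S \ C) = {e3}; condition (1) fails.
ex2 = min_leave_probability(inside=[(F(0), F(1, 2)), (F(0), F(1, 2))], outside=[(F(1, 10), F(1, 2))])
print(ex1, ex2)  # 1/5 1/10
```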

Let be the set of ILECs of O. We say that an ILEC is maximal if there does not exist any such that C ⊂ C′. For a path , let infst(ρ)⊆S be the states that appear infinitely often along ρ, i.e., for ρ = s0μ0s1μ1⋯, we have infst(ρ) = {s ∈ S∣∀i ∈ ℕ.∃j > i.sj = s}. We present a result for ILECs that is analogous to the fundamental theorem of end components of de Alfaro (1997): the result specifies that, with probability 1, a scheduler of the IMDP semantics of O must confine itself to an ILEC.

For s ∈ S and σ ∈ Σ[O]I, we have . □

The proof is structured in the same manner as that for classical end components in de Alfaro (1997). Consider C⊆S such that . Our aim is to show that . Given that is a finite set, the required result then follows.

First suppose that condition (1) in the definition of ILECs does not hold, i.e., there exists (s′, s′′) ∈ E such that s′ ∈ C, s′′ ∉ C and (s′, s′′) ∈ E〈+,⋅〉. Observe that left(δ(s′, s′′)) > 0. The probability of remaining in C when visiting s′ is at most 1 − left(δ(s′, s′′)), which is strictly less than 1. Given that s′ ∈ infst(ρ) for every ρ such that infst(ρ) = C, we have that .

Suppose that condition (2) in the definition of ILECs does not hold for some s′ ∈ C, i.e., ∑e∈E(s′,C)right(δ(e)) < 1. Therefore, for all μ ∈ Δ(s′), we must have ∑s′′∈Cμ(s′′) ≤ ∑e∈E(s′,C)right(δ(e)) < 1. Hence, the probability of remaining in C when visiting s′ is strictly less than 1. Then, as in the case of the first condition in the definition of ILECs, we conclude that .
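Both cases use the same step from a per-visit bound to probability 0, which can be written out as follows. The symbols q, A_{m,k}, A_m and Pr are notation chosen for this sketch: q < 1 bounds the probability of remaining in C at any visit to s′, whatever the history chosen by σ; A_{m,k} is the event that ρ stays in C from step m onwards and visits s′ at least k times after step m; A_m is the intersection of the A_{m,k} over all k; and Pr is the probability measure induced by σ from s. Since S is finite, every path with infst(ρ) = C lies in some A_m.

```latex
\[
  q =
  \begin{cases}
    1 - \mathit{left}(\delta(s', s'')) & \text{if condition (1) fails via the edge } (s', s''),\\
    \sum_{e \in E(s', C)} \mathit{right}(\delta(e)) & \text{if condition (2) fails at } s',
  \end{cases}
  \qquad q < 1,
\]
\[
  \Pr(A_m) \le \Pr(A_{m,k}) \le q^{k} \quad \text{for every } k \in \mathbb{N},
  \qquad \text{hence } \Pr(A_m) = 0,
\]
\[
  \Pr\bigl(\mathit{infst}(\rho) = C\bigr) \le \sum_{m \in \mathbb{N}} \Pr(A_m) = 0 .
\]
```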