A topic-aware evaluation of ChatGPT’s semantic alignment with community answers using BERTScore and BERTopic

- Academic Editor

- Davide Chicco

- Subject Areas

- Artificial Intelligence, Computer Education, Data Mining and Machine Learning, Emerging Technologies

- Keywords

- Large language models (LLMs), Open-domain question answering, ChatGPT, Topic modeling, Semantic evaluation

- Copyright

- © 2026 Alsulami

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2026. A topic-aware evaluation of ChatGPT’s semantic alignment with community answers using BERTScore and BERTopic. PeerJ Computer Science 12:e3446 https://doi.org/10.7717/peerj-cs.3446

Abstract

Large language models are increasingly deployed in everyday question-answering tasks, yet their performance across diverse, real-world queries remains underexplored. This study evaluates the semantic alignment of ChatGPT’s responses with human-selected best answers in an open-domain question answering (QA) setting, using data from the Yahoo! Answers platform. Distinct from prior research that centers on domain-specific datasets, this work explores ChatGPT’s general-purpose QA performance across a wide range of topics. BERTopic is used to extract latent themes from 500 diverse full-question samples, and BERTScore metrics (precision, recall, F1) are applied to quantify semantic similarity between ChatGPT-generated responses and top-rated community answers. Results indicate that ChatGPT (GPT-3.5-turbo) achieves a strong average F1-score of 0.824, reflecting high alignment with human judgments. Topic-level analysis reveals that ChatGPT performs particularly well on factual and encyclopedia-style questions, while performance varies across more subjective or open-ended topics. This study introduces a topic-sensitive evaluation framework that enhances understanding of large language model behavior in real-world QA scenarios and supports the development of more effective and explainable conversational artificial intelligence (AI) systems.

Introduction

Natural language processing (NLP) systems increasingly rely on large language models (LLMs), such as ChatGPT, to generate fluent, contextually aware, and sensible natural language responses across a variety of domains. Community question answering (CQA) platforms, such as Yahoo! Answers, provide rich and challenging environments for evaluating these models because of the informality, breadth, and diversity of user-generated content. CQA platforms capture naturalistic information-seeking behavior across many domains and scenarios, ranging from technical problem resolution to social dilemmas. They are therefore particularly well suited to evaluating the generalization properties of LLMs in naturalistic settings (Devlin et al., 2018; Reimers & Gurevych, 2019). While prior research has demonstrated that ChatGPT can perform competitively with human annotators in structured NLP tasks such as sentiment analysis and topic classification (Liu et al., 2019; Zhang et al., 2019), its performance in replicating or improving upon human-generated responses in open-domain QA, particularly in contexts shaped by community interaction and cultural preference, remains underexplored. The Yahoo! Answers dataset, with its annotated fields for question titles, question content, and community-voted best answers, offers a grounded benchmark for such comparative evaluation (Yang & Zhang, 2017).

This study addresses two central research questions: (1) How consistent is ChatGPT’s semantic alignment with human-voted answers in a large-scale community QA setting? (2) Can topic modeling reveal patterns in ChatGPT’s answer quality across varying types of user-generated questions? Unlike conventional QA benchmarks, this study introduces a topic-sensitive diagnostic layer to uncover domain-specific strengths and weaknesses of ChatGPT.

To investigate these questions, we conduct a comprehensive evaluation of ChatGPT’s answers using a stratified sample of Yahoo! Answers posts. Semantic alignment is measured using BERTScore (Zhang et al., 2019), a contextual similarity metric that accounts for paraphrasing and lexical variation. Additionally, we employ BERTopic to extract latent thematic structures within the dataset and analyze performance differences across topics. This two-tier approach allows for a nuanced understanding of ChatGPT’s capabilities and limitations in offering factual or subjective answers. Our results shed light on the situations and contexts in which ChatGPT delivers high semantic fidelity and, more importantly, where its responses miss the mark, especially with emotionally tinged or ambiguously stated inquiries. The findings also contribute to the growing discussion of the interpretability and dependability of LLMs in real-life QA scenarios, while providing developers and researchers with tangible takeaways for leveraging or evaluating generative artificial intelligence (AI) in open-ended information retrieval environments.

Related work

LLMs in open-domain QA

The surge in studies exploring LLMs for open-domain question answering (QA) has established both their capabilities and their limits. Most of these studies have examined ChatGPT and its underlying models (e.g., GPT-3.5, GPT-4) in terms of fluency, adaptivity, and semantic understanding in the context of QA.

Earlier studies such as Bahak et al. (2023) emphasized that while ChatGPT achieves high performance on general-purpose QA benchmarks (e.g., SQuAD, NewsQA), it tends to underperform compared to task-specific models when contextual grounding is absent. Their comparative evaluation using exact match (EM) and F1 metrics showed that ChatGPT exhibits a strong bias toward simpler factual questions while struggling with inferential or “why” questions, often due to hallucination effects.

In a complementary vein, Tan et al. (2023) conducted a large-scale benchmark using over 190,000 KBQA test cases, systematically testing ChatGPT on multi-hop and compositional reasoning tasks. Their results reinforced the notion that while ChatGPT is effective in general scenarios, its accuracy declines in tasks demanding entity disambiguation or precise symbolic operations. The study also introduced a black-box diagnostic inspired by CheckList to identify model weaknesses.

In specialized domains, such as medicine, Singhal et al. (2025) explored the integration of domain-specific corpora into ChatGPT pipelines for biomedical question answering (MDQA). They emphasized the need for hybrid unimodal and multimodal methods, especially for high-risk scenarios requiring visual and textual inputs (e.g., X-rays and diagnoses). Their findings outline both the opportunities and constraints of using LLMs in expert-dependent QA domains. Additional work in healthcare, such as Zou & Wang (2024) and Du et al. (2024), further supports the need for robust and domain-aware evaluation frameworks.

Evaluation metrics for semantic alignment

Semantic evaluation of QA responses has traditionally relied on surface-level metrics like BLEU, ROUGE, and METEOR, which are primarily based on n-gram overlap and often fail to detect paraphrased but semantically equivalent content. These metrics tend to penalize fluent but lexically diverse answers and may not align well with human judgment in open-domain QA.

BERTScore (Zhang et al., 2019) addresses this gap by using contextual embeddings from BERT to assess semantic similarity between model-generated and reference answers. It aligns tokens based on cosine similarity in embedding space, enabling a more nuanced assessment of meaning preservation. Despite its strengths, BERTScore does not directly evaluate factual accuracy, fluency, or pragmatic relevance.

BLEURT (Yan et al., 2023) has been introduced as an alternative that incorporates learned representations fine-tuned on human ratings, offering better alignment with perceived answer quality. Human evaluation remains the most comprehensive method, especially when judging subjective, emotional, or multi-turn responses. In this study, we chose BERTScore for its ability to handle lexical variance, while acknowledging its limitations and the potential benefit of hybrid metrics.

Topic modeling in QA analysis

Topic modeling provides a mechanism to analyze question diversity and its relationship with LLM performance. Traditional methods like LDA have been widely used to uncover latent topics in QA datasets. However, LDA struggles with coherence when applied to informal or user-generated content.

BERTopic (Grootendorst, 2022), in contrast, leverages transformer-based sentence embeddings combined with HDBSCAN clustering, producing semantically richer and more coherent topic groupings. This makes it particularly suitable for analyzing heterogeneous QA data, like the Yahoo! Answers dataset. We use BERTopic not just for categorization but as a tool to stratify ChatGPT’s performance across different semantic domains, an application that adds explanatory power to traditional evaluation techniques.

Prompting, system types, and human evaluation in QA

Prompt design has a substantial influence on the output quality of generative models. Sasaki et al. (2025) provided a taxonomy of prompt engineering strategies, highlighting zero-shot, few-shot, and chain-of-thought prompting as distinct approaches. Their study found that instruction-tuned prompts significantly improve response accuracy and informativeness, especially in educational and reasoning domains.

Similarly, Chan, An & Davoudi (2023) demonstrated how role-based and instructive prompts impact ChatGPT’s ability to generate high-quality questions. These findings underscore the need for careful prompt construction and its role in evaluation design. Although our study uses a consistent prompt template to ensure standardization, we recognize the importance of conducting prompt sensitivity tests in future work.

In terms of model architecture, Omar et al. (2023) compared ChatGPT with symbolic knowledge-based QA systems, such as KGQAN, finding that while ChatGPT excels in fluent and natural-sounding responses, it often falls short in structured knowledge retrieval tasks requiring SPARQL or rule-based logic. This supports a complementary view where neural and symbolic systems can be integrated for improved QA performance.

Human preference studies, like the one conducted by Kabir et al. (2024), reveal that in 68% of cases, developers favored ChatGPT’s responses over those voted best by the community, citing improved fluency and clarity. These developer-in-the-loop evaluations offer important perspectives on practical deployment and user trust.

Methodology

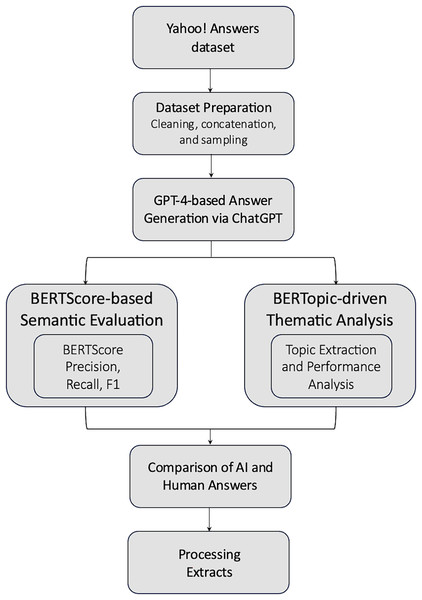

This study adopts a multi-stage evaluation pipeline to assess the performance of ChatGPT in open-domain question answering using community-sourced data. As illustrated in Fig. 1, the methodology consists of four main components: data preprocessing, ChatGPT response generation, semantic similarity evaluation, and topic-based performance analysis.

Figure 1: The workflow of the proposed methodology.

We first preprocessed the Yahoo! Answers dataset by concatenating each question’s title and content to form a unified input prompt. ChatGPT (GPT-3.5-turbo) was then prompted with these inputs to generate corresponding answers. To evaluate the semantic quality of these responses, we computed BERTScore precision, recall, and F1-score metrics by comparing the model’s output to the human-selected best answers.

To gain insight into thematic variation, we applied BERTopic to the dataset, leveraging transformer-based embeddings and density-based clustering to identify latent topics. Each question was assigned to a topic, and performance metrics were aggregated accordingly. Further analysis involved clustering high- and low-performing responses based on their semantic scores, using Term Frequency-Inverse Document Frequency (TF-IDF) and KMeans, followed by PCA for visualization.

This methodological framework enables both quantitative (semantic similarity) and qualitative (topic-based) evaluations of ChatGPT’s performance. The following subsections describe each stage in detail.

Dataset preparation

We utilized the Yahoo! Answers Topic Classification dataset, a well-known open-domain QA corpus frequently used in benchmarking classification and information retrieval systems (Yang & Zhang, 2017). The dataset was obtained from Kaggle (https://www.kaggle.com/datasets/bhavikardeshna/yahoo-email-classification), and each sample contains a question_title, question_content, and a best_answer field representing the highest-rated response provided by community users. To generate input prompts with sufficient contextual coverage, we concatenated question_title and question_content into a new field labeled full_question, which was used as the input prompt for ChatGPT. Missing or malformed values were cleaned using string-based imputation, and all text was standardized to UTF-8 encoding.
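The preprocessing step described above can be sketched as follows. This is a minimal illustration rather than the study's actual code: the helper names `clean_text` and `build_full_question` are hypothetical, replacing missing values with an empty string is only one possible reading of "string-based imputation", and the second example row's content is invented for demonstration.

```python
from typing import Optional


def clean_text(value: Optional[str]) -> str:
    """Impute missing values with an empty string and normalize whitespace."""
    if value is None:
        return ""  # assumption: missing fields are imputed as empty strings
    # Round-trip through UTF-8, dropping anything that cannot be encoded
    # (for example, lone surrogate characters).
    text = str(value).encode("utf-8", errors="ignore").decode("utf-8")
    return " ".join(text.split())


def build_full_question(title: Optional[str], content: Optional[str]) -> str:
    """Concatenate title and content, skipping whichever part is empty."""
    parts = [clean_text(title), clean_text(content)]
    return " ".join(part for part in parts if part)


# Two illustrative rows; the content of the second row is hypothetical.
rows = [
    {"question_title": "What is a procrastinator?", "question_content": None},
    {"question_title": "Are lab-created gems fake?",
     "question_content": "  Asking before I buy a ring. "},
]
for row in rows:
    row["full_question"] = build_full_question(
        row["question_title"], row["question_content"]
    )
```

Skipping empty parts avoids trailing separators when a post has a title but no body, which is common in community QA data.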

To ensure diversity while maintaining computational feasibility, we used a single 500-question sample for ChatGPT response generation, semantic evaluation, and topic modeling with BERTopic. Conducting the fine-grained response analysis and the thematic topic modeling on the same sample keeps the two analyses directly comparable. This strategy balances computational feasibility with topical diversity, providing a robust basis for assessing model performance across a wide range of open-domain questions.

ChatGPT response generation

For generating AI-based answers, we employed GPT-3.5-turbo, one of the most advanced autoregressive language models available at the time of writing (Brown et al., 2020). Following best practices in few-shot prompting and controlled evaluation (Ouyang et al., 2022), each prompt to the model included:

A system message guiding behavior: “You are a helpful question-answering assistant. Provide clear, accurate, and reliable responses.”;

A user message containing the full_question text.

All prompts followed an identical structure comprising a standardized system message and user input with full_question.

Responses were generated using the official OpenAI SDK with a temperature of 0.7 and a maximum token limit of 300 to balance linguistic creativity and factual consistency (Bergs, 2019). All responses were captured and stored in a new column, gpt_answer. Exception handling routines were added to manage rate limits, malformed inputs, and empty completions.
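As an illustration of this generation step, the sketch below assembles the request parameters reported above and wraps the call in simple retry logic. It is not the study's implementation: `build_request`, `generate_answer`, the retry count, and the backoff schedule are assumptions, and the `send` argument is a hypothetical stand-in for whatever function actually calls the API. With recent versions of the OpenAI Python SDK, `send` could plausibly wrap `client.chat.completions.create(**request)` and return `response.choices[0].message.content`.

```python
import time
from typing import Callable, Dict, List, Optional

SYSTEM_MESSAGE = (
    "You are a helpful question-answering assistant. "
    "Provide clear, accurate, and reliable responses."
)


def build_request(full_question: str) -> Dict[str, object]:
    """Assemble chat-completion arguments using the settings reported in the text."""
    messages: List[Dict[str, str]] = [
        {"role": "system", "content": SYSTEM_MESSAGE},
        {"role": "user", "content": full_question},
    ]
    return {
        "model": "gpt-3.5-turbo",
        "messages": messages,
        "temperature": 0.7,
        "max_tokens": 300,
    }


def generate_answer(
    send: Callable[[Dict[str, object]], str],
    full_question: str,
    max_retries: int = 3,          # assumption: not stated in the paper
    backoff_seconds: float = 1.0,  # assumption: not stated in the paper
) -> Optional[str]:
    """Call `send` with retries; return None for malformed input or empty output."""
    if not full_question or not full_question.strip():
        return None  # malformed or empty input: skip the API call entirely
    request = build_request(full_question.strip())
    for attempt in range(max_retries):
        try:
            answer = send(request)
        except Exception:
            # For example a rate-limit error: back off exponentially, then retry.
            time.sleep(backoff_seconds * (2 ** attempt))
            continue
        if answer and answer.strip():
            return answer.strip()
        # Empty completion: fall through and try again.
    return None
```

Passing the API call in as a function keeps the retry logic testable without network access; the returned string would then be stored in the gpt_answer column.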

Prompt sensitivity was not explored in this study and remains an area for future experimentation.

This procedure produced a structured dataset of parallel ChatGPT-human answer pairs, suitable for both quantitative evaluation and qualitative inspection.

Topic modeling with BERTopic

To gain insights into the types of questions posed within the dataset, and to later assess topic-specific variation in ChatGPT’s performance, we employed BERTopic (Nedungadi et al., 2025), a hybrid topic modeling approach combining transformer embeddings with density-based clustering (Kriegel et al., 2011). Using the all-MiniLM-L6-v2 embedding model (Chen, Jones & Brennan, 2024), we encoded each full_question in the 500-question sample and reduced the embedding dimensionality via Uniform Manifold Approximation and Projection (UMAP) (McInnes, Healy & Melville, 2020). Clustering was then performed using HDBSCAN (Rahman et al., 2016), an unsupervised density-based algorithm, to group questions into interpretable topics. Each topic was described by a ranked list of top keywords and reviewed manually to assign semantic labels. These topics later served as the basis for stratified performance comparison in the results section.

Semantic evaluation with BERTScore

To evaluate the quality of ChatGPT’s responses against community-voted best answers, we employed BERTScore (Zhang et al., 2019), a state-of-the-art semantic similarity metric based on contextual embeddings. BERTScore computes similarity by aligning tokens from both candidate and reference responses using cosine similarity in embedding space, rather than relying on exact surface word matches. We computed BERTScore Precision, Recall, and F1-score using the bert-base-uncased model for all 500 question-answer pairs. The metrics are interpreted as follows:

Precision: measures the proportion of GPT-generated content that is semantically aligned with the reference;

Recall: captures how much of the original best answer is recovered in the GPT output;

F1-score: represents the harmonic mean of Precision and Recall, indicating balanced overlap.

All metric scores were stored alongside the original dataset to support topic-wise and overall analysis. The use of BERTScore enables a more nuanced understanding of the semantic fidelity of ChatGPT’s output, especially in cases of paraphrased or differently worded but conceptually equivalent answers.
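To make the three metrics concrete, the following sketch implements the greedy matching at the core of BERTScore on toy vectors. It is a simplified illustration, not the bert-base-uncased pipeline used in the study: the two-dimensional "embeddings" are invented, and IDF weighting and baseline rescaling, which the full metric optionally applies, are omitted.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def greedy_bertscore(
    candidate: List[Vector], reference: List[Vector]
) -> Tuple[float, float, float]:
    """Precision, recall, and F1 from greedy token matching."""
    if not candidate or not reference:
        return 0.0, 0.0, 0.0
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(
        max(cosine(c, r) for r in reference) for c in candidate
    ) / len(candidate)
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(
        max(cosine(r, c) for c in candidate) for r in reference
    ) / len(reference)
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Toy example: the candidate has one extra token that only partly matches.
candidate_tokens = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
reference_tokens = [(1.0, 0.0), (0.0, 1.0)]
p, r, f1 = greedy_bertscore(candidate_tokens, reference_tokens)
```

In this toy case recall is perfect because every reference token has an exact counterpart, while precision is lower because the extra candidate token has no close match. The same asymmetry explains why a long, elaborated answer can cover a short reference completely and still lose precision.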

Clustering high and low performing questions

To explore patterns underlying ChatGPT’s strengths and weaknesses, we performed a clustering analysis on questions where ChatGPT either succeeded or struggled. This post-evaluation phase builds on prior work in NLP interpretability, where clustering is used to expose latent structure in high-dimensional language tasks (Tenney, Das & Pavlick, 2019; Liu et al., 2022). Using the BERTScore F1 metric as a proxy for semantic performance, we defined two cohorts:

High-performance questions: BERTScore F1 ≥ 0.85;

Low-performance questions: BERTScore F1 ≤ 0.78.

Thresholds (F1 ≥ 0.85 for high, F1 ≤ 0.78 for low) were chosen based on the quartile distribution and inflection points observed in the histogram of BERTScore F1 values. The high threshold captures responses in the top quartile that most closely match the community-voted best answers, indicating strong semantic fidelity. The low threshold corresponds to the lower decile, capturing weak or misaligned responses. This stratification ensured the inclusion of clearly distinct performance groups while maintaining sample size balance for meaningful clustering.
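The cohort assignment can be expressed in a few lines. In this sketch the function name `assign_cohort` is hypothetical and treating both thresholds as inclusive is an assumption; the three example scores are the minimum, median, and maximum F1 values reported in Table 1.

```python
HIGH_THRESHOLD = 0.85  # threshold for the high-performance cohort (from the text)
LOW_THRESHOLD = 0.78   # threshold for the low-performance cohort (from the text)


def assign_cohort(f1: float) -> str:
    """Label a BERTScore F1 value as 'high', 'low', or 'middle'."""
    if f1 >= HIGH_THRESHOLD:  # assumption: boundary value counts as high
        return "high"
    if f1 <= LOW_THRESHOLD:   # assumption: boundary value counts as low
        return "low"
    return "middle"


# Minimum, median, and maximum F1 from Table 1.
example_scores = [0.7501, 0.8252, 0.8978]
cohorts = [assign_cohort(score) for score in example_scores]
```

Responses in the middle band are excluded from the clustering analysis, which keeps the two cohorts clearly separated.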

We extracted the full_question field from both groups and applied TF-IDF vectorization (max features = 5,000, English stopword removal) to represent each question in vector space (Qaiser & Ali, 2018). Each set was then clustered separately using KMeans with k = 4, allowing us to identify dominant question clusters within the high- and low-performance groups. To visualize semantic separability, we applied PCA (Jolliffe, 2002) to project the TF-IDF matrix into 2D space. Cluster assignments were added as labels and stored in new columns within the dataset.
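The vectorization step can be illustrated with a small pure-Python TF-IDF sketch. This is not the study's code, which presumably relied on a library implementation; the stopword list here is a tiny illustrative stand-in, the smoothed IDF formula ln((1 + n) / (1 + df)) + 1 with L2 normalization is an assumed weighting scheme, and the KMeans and PCA steps are not shown. The three example questions are taken from Tables 2 and 3.

```python
import math
import re
from collections import Counter
from typing import Dict, List

# Tiny illustrative stopword list; a real pipeline would use a full English list.
STOPWORDS = {"a", "an", "the", "is", "are", "what", "who", "how",
             "do", "i", "at", "of", "to", "on", "s"}


def tokenize(text: str) -> List[str]:
    """Lowercase, split on non-alphanumerics, and drop stopwords."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]


def tfidf_vectors(docs: List[str], max_features: int = 5000) -> List[Dict[str, float]]:
    """Return one L2-normalized sparse TF-IDF vector (term -> weight) per document."""
    tokenized = [tokenize(doc) for doc in docs]
    # Keep only the max_features most frequent terms across the corpus.
    corpus_counts = Counter(t for doc in tokenized for t in doc)
    vocab = {term for term, _ in corpus_counts.most_common(max_features)}
    n_docs = len(docs)
    doc_freq = Counter(t for doc in tokenized for t in set(doc) if t in vocab)
    vectors = []
    for doc in tokenized:
        counts = Counter(t for t in doc if t in vocab)
        weights = {
            term: count * (math.log((1 + n_docs) / (1 + doc_freq[term])) + 1)
            for term, count in counts.items()
        }
        norm = math.sqrt(sum(w * w for w in weights.values()))
        vectors.append({t: w / norm for t, w in weights.items()} if norm else {})
    return vectors


docs = [
    "Who hit the longest home run at Jacobs Field?",
    "What ballpark’s home run balls land on Waveland Avenue?",
    "What is a procrastinator?",
]
vectors = tfidf_vectors(docs)
```

Terms shared by several questions, such as "home" and "run", receive lower weights than terms unique to one question, such as "jacobs", which is what lets the subsequent clustering separate questions by their distinctive vocabulary.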

Results

ChatGPT answer quality

To assess the semantic quality of ChatGPT-generated responses, we computed BERTScore precision, recall, and F1 metrics for each question–answer pair across a representative sample of 500 open-domain questions drawn from the Yahoo! Answers dataset. These metrics were chosen for their robustness in capturing meaning-preserving paraphrases and tolerance to lexical variation (Zhang et al., 2019). As shown in Table 1, ChatGPT achieved a mean BERTScore F1 of 0.824, with values ranging from 0.750 to 0.898. This indicates a generally strong semantic alignment between ChatGPT’s responses and the community-voted best answers.

| Metric | Precision | Recall | F1 |
|---|---|---|---|
| Count | 500 | 500 | 500 |
| Mean | 0.8200 | 0.8282 | 0.8237 |
| Std | 0.0275 | 0.0292 | 0.0229 |
| Min | 0.7140 | 0.7028 | 0.7501 |
| 25% | 0.8023 | 0.8116 | 0.8082 |
| 50% | 0.8213 | 0.8299 | 0.8252 |
| 75% | 0.8380 | 0.8479 | 0.8399 |
| Max | 0.8943 | 0.9152 | 0.8978 |

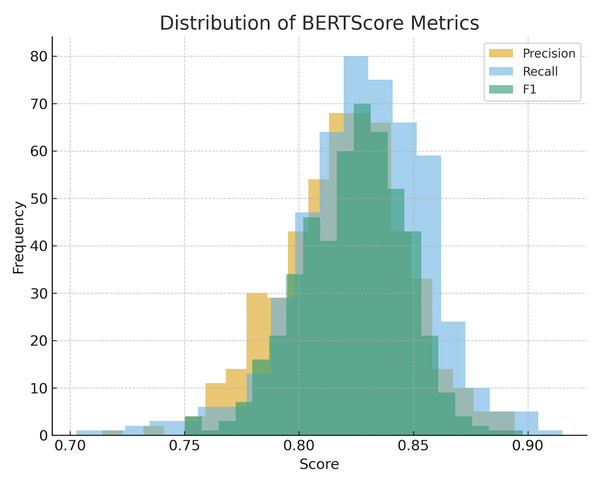

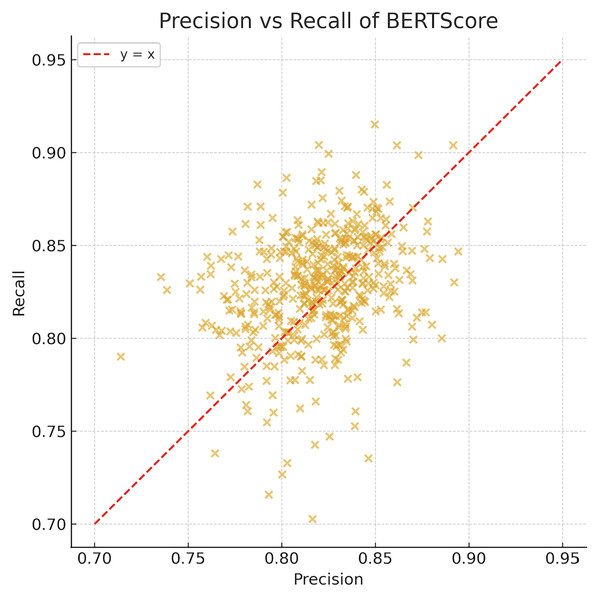

Figure 2 presents the distribution of semantic similarity metrics computed via BERTScore across 500 ChatGPT-generated responses. The mean BERTScore F1 value of 0.824 summarized in Table 1 is consistent with the concentration of scores observed in Fig. 2. For all three metrics, the majority of responses fall within the high-performance zone, with relatively few outliers in the lower tail; the means in Table 1 lie slightly below the corresponding medians, as expected for distributions with a longer lower tail. Together, the tabular and distributional results provide complementary evidence that ChatGPT maintains strong and stable semantic alignment with human-preferred answers across diverse open-domain questions. This observation is further reinforced by Fig. 3, which shows that most responses balance completeness (recall) and relevance (precision), lying close to the diagonal line between the two dimensions.

Figure 2: Distribution of BERTScore precision, recall, and F1-scores.

Figure 3: BERTScore precision vs. recall, showing balanced semantic alignment in ChatGPT responses.

Figure 3 demonstrates that most responses align closely along the diagonal between precision and recall, suggesting that ChatGPT tends to balance relevance (precision) with completeness (recall). The clustering near the top-right quadrant indicates that the majority of responses achieve simultaneously high precision and recall, reinforcing the observation that ChatGPT produces semantically consistent answers that are both accurate to the reference content and inclusive of key information. Only a small number of points deviate notably from the diagonal, highlighting rare cases where responses may be more precise than complete (or vice versa).

These quantitative trends corroborate the findings of previous research (OpenAI, 2023), demonstrating the capacity of large language models to perform reliably on open-domain queries when provided sufficient context.

Distribution of high and low performance

To explore performance variability, we stratified the dataset using two empirically determined BERTScore F1 thresholds:

High-performing responses were defined as those scoring F1 ≥ 0.85 (n = 60);

Low-performing responses were defined as those scoring F1 ≤ 0.78 (n = 16).

These thresholds were selected based on the quartile distribution and inflection points observed in the BERTScore F1 histogram (see Fig. 2). The high threshold thus captures responses within the top tail of the distribution, representing the most semantically aligned answers, while the low threshold isolates the bottom tail, highlighting cases of clear underperformance. This stratification allows for targeted downstream analysis of strongly aligned vs. weakly aligned responses.

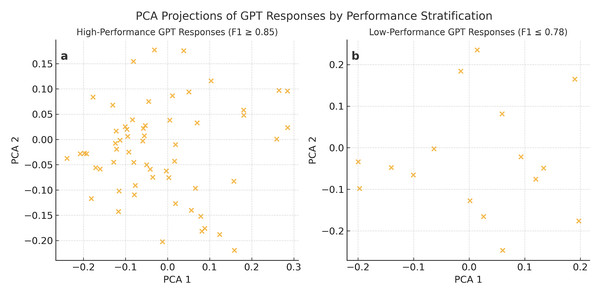

Clustering insights

To better understand patterns in model behavior, we projected the high- and low-performing subsets into a lower-dimensional space using TF-IDF vectorization followed by PCA, as outlined in ‘Clustering High and Low Performing Questions’. The resulting two-dimensional visualizations are presented in Fig. 4.

Figure 4: PCA projections of GPT responses stratified by BERTScore F1 thresholds: (A) High-performing responses (F1 ≥ 0.85); (B) Low-performing responses (F1 ≤ 0.78).

Clusters of high-performing responses exhibited strong topical cohesion. These questions were primarily factual, often involving definitions, historical facts, or stepwise process explanations. ChatGPT excelled in these areas, plausibly because its training corpus contains abundant fact-based content, which would allow it to generate responses with high semantic alignment and low ambiguity.

In contrast, clusters of low-performing responses tended to involve subjective or underspecified queries, as well as requests subject to policy constraints. Examples include emotionally framed questions (e.g., “How does it feel to?”) or requests triggering refusal mechanisms (e.g., “What are the lyrics?”). These findings suggest that breakdowns in performance typically occur when (a) insufficient contextual information is available, or (b) generative safety protocols limit content production. The clear divergence in topical cohesion across subsets provides empirical evidence that ChatGPT’s performance is shaped heavily by input clarity and task definition, consistent with recent LLM interpretability research (Bommasani et al., 2021).

Topic modeling results and analysis

To better understand the thematic distribution of questions and how topic content correlates with ChatGPT’s performance, we applied BERTopic to the full-question text of the 500 samples. BERTopic combines transformer-based embeddings (all-MiniLM-L6-v2), UMAP for dimensionality reduction, and HDBSCAN clustering to uncover coherent latent topics in unstructured text data. The model identified 15 distinct topics, each characterized by ranked keyword descriptors and representative questions. Manual inspection and qualitative labeling (Chang et al., 2009) revealed the presence of semantically coherent categories such as:

Technology and Programming Help

Medical and Health Inquiries

Relationship and Emotional Advice

Homework and School Assignments

Definitions and Encyclopedic Questions

Each question was assigned to its respective topic cluster, and BERTScore F1 values were aggregated per topic to assess performance variability. The following findings were observed:

High-performing topics (avg. F1 ) included factual domains such as definitions, science-based queries, and technical troubleshooting, where questions had well-defined scopes and ChatGPT’s output was aligned with clear reference answers.

Low-performing topics (avg. F1 ) were concentrated in subjective or underspecified categories like emotional advice, opinions, and non-specific homework help, where ChatGPT’s responses often lacked the personal nuance or contextual grounding found in the best community answers.

This performance stratification is visualized in Fig. 4, where PCA plots of clustered high- and low-performing responses show distinct thematic boundaries. Clusters of high F1-scores tend to be dense and semantically homogeneous, whereas low-performing clusters are more dispersed and ambiguous in content structure. These results support the hypothesis that ChatGPT’s QA performance is topic-sensitive. Its strengths lie in structured, knowledge-oriented queries, likely due to pretraining on factual web corpora. Conversely, its weaknesses emerge in ill-posed or subjective questions, where human answers incorporate personal experience, empathy, or implicit cultural context—dimensions not easily replicated by LLMs. By combining BERTopic and BERTScore, our methodology enables not only evaluation of QA quality but also explainability, offering a topic-level lens through which model strengths and gaps can be identified.
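The per-topic aggregation underlying this analysis can be sketched as follows. The function name `mean_f1_by_topic` is hypothetical and the F1 values in the example are invented for illustration; only the topic labels come from the list above. In practice the topic assignment for each question would come from the fitted topic model.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def mean_f1_by_topic(records: List[Tuple[str, float]]) -> List[Tuple[str, float, int]]:
    """Group (topic, F1) pairs and return (topic, mean F1, count), best topic first."""
    grouped: Dict[str, List[float]] = defaultdict(list)
    for topic, f1 in records:
        grouped[topic].append(f1)
    summary = [(topic, mean(scores), len(scores)) for topic, scores in grouped.items()]
    return sorted(summary, key=lambda item: item[1], reverse=True)


# Hypothetical scores for two of the topic labels named in the text.
records = [
    ("Definitions and Encyclopedic Questions", 0.86),
    ("Relationship and Emotional Advice", 0.79),
    ("Definitions and Encyclopedic Questions", 0.84),
]
topic_summary = mean_f1_by_topic(records)
```

Reporting the count alongside the mean matters here: with 500 questions spread over 15 topics, some topics contain few questions, and their averages should be read with corresponding caution.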

Representative examples

To provide concrete illustrations of semantic performance, we extracted the top five and bottom five ChatGPT responses based on BERTScore F1, presented in Tables 2 and 3 respectively.

| Question | Answer A (Human) | Answer B (ChatGPT) |
|---|---|---|
| Who hit the longest home run at Jacobs Field? | On July 3rd, 1999, Jim Thome hit a 511-foot shot to center field against Kansas City. | Jim Thome hit the longest home run at Jacobs Field (now Progressive Field) at 511 feet on July 3, 1999. |
| What is a procrastinator? | A person who puts off doing something, often out of carelessness or laziness. | Someone who delays tasks or responsibilities, often choosing less urgent activities, which can lead to stress and reduced productivity. |
| Are lab-created gems fake? | Lab-created gems are not fake; they are synthetic, sharing the same composition as natural gems. | Not fake—they have the same chemical and physical properties as natural gems, created in labs under similar conditions. |
| What ballpark’s home run balls land on Waveland Avenue? | Wrigley Field in Chicago, known for fans waiting outside for balls hit out of the park. | Wrigley Field in Chicago is where home run balls often land on Waveland Avenue. |
| Can earned income for one child be taken for back child support of an adult child? | Depends on state laws—consult a family lawyer. | Generally, child support is for minors, not adults. Laws vary, so consult a legal professional. |

Note: This table highlights questions where ChatGPT’s answers closely align semantically with human responses, achieving high BERTScore F1 values.

| Question | Answer A (Human) | Answer B (ChatGPT) |
|---|---|---|
| ooo00OOO000oooo00OOO00oooo…..la la la..how do I sing? | mmmm lalalalalalalalalaLA | Offers detailed singing advice including breathing techniques, vocal warm-ups, and considering a vocal coach. |
| What is the number that you divide the divisor into? | Dividend | Explains the concept of dividend with examples and a full breakdown of division terminology. |
| What are the lyrics? (Two songs: Panic! at the Disco & P. Diddy) | Full lyrics of both songs | Refuses due to copyright but suggests legal alternatives to find the lyrics. |
| What are Some BG rap links? | Long list of download links | Refuses to share download links due to copyright concerns and encourages legal alternatives. |
| I need an essay for my research plzz? (on American colonies) | No content (missing) | Provides a detailed multi-section essay outline covering early settlement, growth, conflict, and independence. |

Note: This table compares human and ChatGPT responses for the bottom 5 examples with the lowest BERTScore F1 values, illustrating significant semantic divergence.

Top-performing responses achieved F1-scores approaching 0.89 and were associated with clearly phrased, fact-based questions. For instance, the question “What is a procrastinator?” elicited a precise and textbook-style definition, closely mirroring the best human response. Conversely, low-performing responses suffered from low content alignment, often due to incomplete or generic responses. A notable example is “I need an essay for my research plzz?”, where ChatGPT responded with an essay outline rather than an actual essay and the reference answer itself was missing (Table 3). Another illustrative failure involved the question “What are the lyrics?”, for which ChatGPT declined to answer due to copyright constraints, while the best human answer included the actual lyrics. These examples highlight not only content divergence but also structural limitations of LLMs operating under strict output filters.

Discussion

This study assessed the performance of ChatGPT in answering open-domain community questions by comparing its responses to human-voted best answers using BERTScore and topic-level cluster analysis. Across 500 QA pairs, ChatGPT (GPT-3.5-turbo) showed strong semantic alignment with human responses (mean F1 = 0.824), consistent with high linguistic fluency and contextual understanding. These findings align with prior literature emphasizing ChatGPT’s strength in generating fluent responses, especially for factual queries or definition-style prompts (Bahak et al., 2023). They are also consistent with the observation of Chan, An & Davoudi (2023) that effective prompting significantly enhances ChatGPT’s performance in NLP tasks such as question generation and response evaluation.

Through topic modeling and cluster-based review, we further identified that questions with vague context, implicit expectations, or subjective tones contributed disproportionately to lower BERTScore values. This is in line with limitations reported by Pichappan, Krishnamurthy & Vijayakumar (2023), who observed that ChatGPT’s semantic accuracy deteriorates when handling abstract or evaluative prompts, often resulting in stylistically plausible but semantically imprecise answers. Our use of BERTopic also uncovered distinct performance patterns tied to content themes. Factual, technical, or procedural topics saw high alignment, while emotionally charged or ambiguous topics tended to cluster within low-performing segments. This echoes the findings of Bahak et al. (2023), where ChatGPT excelled with concrete questions but showed vulnerability to hallucination or content overreach in multi-hop reasoning or “why” questions.

Although BERTScore enabled nuanced semantic evaluation beyond surface-level overlap, it does not directly measure factual correctness, fluency, or pragmatic appropriateness. As such, the high F1-scores reported in this study may obscure failure cases involving hallucinated content, missing factual details, or stylistic mismatches, a known concern in generative QA systems (Bahak et al., 2023). Prior studies have emphasized the necessity of human evaluations or hybrid scoring frameworks to comprehensively assess LLM responses (Chan, An & Davoudi, 2023; Pichappan, Krishnamurthy & Vijayakumar, 2023). Future work should therefore consider incorporating complementary evaluation methods such as BLEURT or human judgments to provide a more comprehensive understanding of LLM answer quality.

This study is not without limitations. First, due to resource constraints, only GPT-3.5-turbo was evaluated. While it is among the most capable publicly available models, comparative insights across other models such as Claude, Gemini, or Mistral would strengthen generalizability and benchmarking robustness. Second, the evaluation relied solely on BERTScore, which, while robust, may benefit from augmentation via human annotation or model explainability techniques. Future work could involve cross-model comparisons, multilingual QA evaluations, and the integration of human preference scores or challenge sets focused on hallucination detection. Additionally, fine-grained error annotation and performance audits by topic and intent type would offer richer interpretability.
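
The point that BERTScore measures similarity rather than correctness can be illustrated with a minimal sketch of its greedy-matching computation. This is a simplified illustration, not the implementation used in the study: it assumes hand-made three-dimensional toy vectors in place of contextual BERT embeddings, and it omits the optional IDF weighting and baseline rescaling. The function names `cosine` and `greedy_bertscore` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def greedy_bertscore(candidate, reference):
    """Simplified BERTScore over lists of token vectors.

    Precision: each candidate token is matched to its most similar
    reference token. Recall: each reference token is matched to its
    most similar candidate token. F1 is their harmonic mean.
    """
    precision = sum(
        max(cosine(c, r) for r in reference) for c in candidate
    ) / len(candidate)
    recall = sum(
        max(cosine(r, c) for c in candidate) for r in reference
    ) / len(reference)
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy vectors (assumed for illustration only, not real embeddings).
# The candidate reproduces both reference tokens and adds a third
# token that has no counterpart in the reference.
reference = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
candidate = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

precision, recall, f1 = greedy_bertscore(candidate, reference)
print(f"P={precision:.3f} R={recall:.3f} F1={f1:.3f}")
```

In this toy case recall is 1.0 and F1 is 0.8 even though one third of the candidate tokens are unsupported by the reference. Nothing in the computation checks whether the extra content is true, which is why a high F1 can coexist with hallucinated or missing facts.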

Implications for model evaluation

The integration of topic modeling into QA evaluation offers novel diagnostic potential. This method enables topic-specific identification of model strengths and weaknesses. Developers and researchers can leverage such insights to guide prompt engineering, content filtering, and fallback strategies in high-risk or uncertain domains. Moreover, a topic-aware framework provides greater explainability and interpretability in model audits, which is essential for trustworthy deployment of LLMs in real-world applications.
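
As a rough sketch of how such topic-specific diagnostics might be produced, the snippet below groups per-question F1 scores by topic label and flags topics whose mean falls below a chosen cutoff. It assumes that each question already carries a topic label (for example, from BERTopic) and a BERTScore F1 value. The function name `topic_f1_report`, the topic labels, the scores, and the 0.80 threshold are all hypothetical and are not results from this study.

```python
from collections import defaultdict
from statistics import mean


def topic_f1_report(records, threshold):
    """Summarize F1 by topic and flag topics whose mean is below threshold.

    records: iterable of (topic_label, f1_score) pairs.
    Returns a list of dicts sorted from weakest to strongest topic.
    """
    by_topic = defaultdict(list)
    for topic, f1 in records:
        by_topic[topic].append(f1)

    report = []
    for topic, scores in by_topic.items():
        avg = mean(scores)
        report.append(
            {
                "topic": topic,
                "n": len(scores),
                "mean_f1": avg,
                "flagged": avg < threshold,
            }
        )
    report.sort(key=lambda row: row["mean_f1"])
    return report


# Illustrative values only; these are not scores from the study.
records = [
    ("definitions", 0.88),
    ("definitions", 0.86),
    ("relationships", 0.79),
    ("relationships", 0.77),
    ("song lyrics", 0.75),
]

report = topic_f1_report(records, threshold=0.80)
for row in report:
    status = "review" if row["flagged"] else "ok"
    print(f"{row['topic']:<15} n={row['n']} mean_f1={row['mean_f1']:.3f} {status}")
```

Sorting from weakest to strongest puts the topics most in need of prompt changes, filtering, or fallback handling at the top of the audit. In practice the threshold would have to be chosen relative to the score distribution of the dataset at hand.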

In summary, our findings reaffirm ChatGPT’s high competence in answering a wide variety of open-domain questions, particularly those grounded in factual knowledge. However, its limitations in nuanced reasoning, subjective interpretation, and question ambiguity reflect persistent challenges in the design and evaluation of general-purpose LLMs.

Conclusions

The current study investigated the semantic quality of ChatGPT responses in open-domain question answering using the Yahoo! Answers dataset. Using BERTScore, we quantified how closely ChatGPT’s answers aligned semantically with the human-selected best answers to a broad variety of user-generated questions. We then used BERTopic to identify latent themes in the dataset and analyzed ChatGPT’s performance at the topic level. We found that ChatGPT demonstrates good semantic alignment in factual, well-structured domains (e.g., definitions, technical explanations, and encyclopedic content) but performs worse on subjective, underspecified queries, especially those related to emotion, opinion, and implicit context. This indicates a limitation in ChatGPT’s ability to navigate nuanced human communication. Combining semantic similarity metrics with topic modeling provides a practical framework for assessing large language models in real-world question-answering settings. Future extensions could include human-in-the-loop scoring, multilingual QA datasets, and cross-model comparisons (e.g., Claude, Gemini) to validate generalizability. Additional directions may involve analyzing prompt formulation effects and refining topic-aware metrics for nuanced performance insights.

Supplemental Information

ChatGPT Yahoo Evaluation.

The complete code used in the study, including data preprocessing, prompt generation for ChatGPT, BERTScore evaluation, and BERTopic-based topic modeling.

Yahoo sample dataset.

Representative 100-question and 500-question samples extracted from the Yahoo! Answers dataset used in the analysis. These samples were used for ChatGPT response generation and topic modeling.