SDN-enabled adaptive security framework for multi-cloud infrastructures using deep learning-based threat detection and policy management

- Academic Editor

- Davide Chicco

- Subject Areas

- Algorithms and Analysis of Algorithms, Artificial Intelligence, Data Mining and Machine Learning, Optimization Theory and Computation, Scientific Computing and Simulation

- Keywords

- Software-defined networking (SDN), Multi-cloud security, Intrusion detection system (IDS), Dynamic security policy management, Transformer models, Swarm intelligence

- Copyright

- © 2025 Verma and Jailia

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2025. SDN-enabled adaptive security framework for multi-cloud infrastructures using deep learning-based threat detection and policy management. PeerJ Computer Science 11:e3266 https://doi.org/10.7717/peerj-cs.3266

Abstract

Background

Organizations achieve agility, scalability, and enhanced resource utilization in multi-cloud environments, but face challenges in ensuring uniform and robust security across diverse cloud platforms. Variations in configuration and security mechanisms among providers hinder consistent policy enforcement and expose systems to data breaches and evasive threats. Additionally, the dynamic and distributed nature of multi-cloud operations broadens the attack surface, making real-time threat mitigation more complex.

Methods

To address these challenges, we introduce a software-defined networking (SDN)-enabled framework that incorporates deep learning for attack detection and adaptive security policy management. The framework consists of two primary components: the Software-Defined Multicloud Defense Controller (SDMDC), which enables centralized, real-time security policy enforcement (control plane), and the Multicloud Intrusion Detection System Gateways (MCIDS-G), which facilitate distributed threat detection across cloud platforms (data plane). The SDMDC's integrated IDS is built on the Cross-Cloud Threat Transformer (CCTT) model, while the MCIDS-G's regional IDS is based on the Long Short-Term Memory (LSTM) model. In addition, the SDMDC uses the Lemurs Optimizer (LO) for cost-efficient policy management. The SDMDC enforces security standards across all cloud environments where applications operate.

Results

The proposed solution addresses long-standing cloud security issues by combining coordinated global security strategies with automated threat detection and centralized policy management. The SDMDC ensures consistent policy enforcement across cloud environments and manages ingress/egress and east–west traffic between cloud domains, including Amazon Web Services (AWS) and other service providers. The architecture supports dynamic resource orchestration and horizontal scalability, enabling adaptive performance under varying load conditions. The system also automates policy implementation across platforms and supports real-time threat response to maintain consistent security.

Conclusion

The presented framework represents a significant advancement over existing multi-cloud protection solutions. It introduces new research directions, particularly in traffic management, firewall integration, and fully qualified domain name (FQDN) policy enforcement with proxy management.

Introduction

The rising popularity of multi-cloud computing, i.e., using multiple Cloud Service Providers (CSPs), is one indicator of a profound change in enterprise computing paradigms. By adopting multi-cloud approaches, organizations can improve performance, reduce vendor lock-in, and tailor services to different operational needs (Solanki, 2018). According to recent research, nearly 82% of IT leaders have already adopted hybrid cloud strategies that combine public and private clouds, and 58% use two or more public clouds to improve the robustness and efficiency of their infrastructure (Reese, 2022). These trends indicate growing demand for distributed, robust architectures capable of supporting complex workloads.

The multi-cloud strategy represents both a prevailing trend and a necessary evolution in IT infrastructure. The worldwide multi-cloud management market is estimated to reach $50.04 billion by 2030, reflecting continued expansion driven by the need to optimize resources, ensure fault tolerance, and place workloads efficiently across disparate cloud environments (Fortune Business Insights, 2024). This expanding market highlights the crucial role of multi-cloud environments in helping organizations thrive in a highly competitive and evolving technological era (Banimfreg, 2023).

While the benefits of multi-cloud infrastructure are well recognized, it also presents challenges, primarily in securing fragmented cloud environments. Security teams have long struggled to maintain consistent policy enforcement, correct misconfigurations, protect against vulnerabilities, and achieve adequate visibility across numerous cloud platforms (Kentik, 2024). These issues are compounded by the limitations of the security solutions offered by individual CSPs, which are typically engineered for a single-cloud setup. In multi-cloud environments, such products lead to siloed security controls that do not provide the end-to-end security modern businesses require (Prithi et al., 2022). The lack of end-to-end frameworks and of interoperability among cloud vendors leaves organizations increasingly vulnerable to advanced cyber threats. This points to the need for cloud-neutral, scalable technologies that offer end-to-end visibility and immediate threat mitigation (Dawood et al., 2023).

In response to such growing complexity, Software-Defined Networking (SDN) has transformed network management by separating the data plane from the control plane. This decoupling enables centralized control, greater automation, and improved scalability, all of which are necessary for defending multi-cloud environments (Hussein et al., 2020). SDN's intrinsic flexibility makes it well suited to dynamic cloud environments. It supports capabilities such as real-time traffic management, dynamic resource allocation, and fault-tolerant policy enforcement across platforms like Amazon Web Services (AWS), Azure, and Google Cloud. As multi-cloud adoption increases, organizations increasingly recognize the need for seamless end-to-end network management to integrate these siloed infrastructures (Mulder, 2024).

Using SDN, multi-cloud architectures can achieve centralized management and uniform security across disparate platforms. The importance of such harmonization is reflected in market estimates, which forecast that the multi-cloud SDN market, valued at USD 5 billion in 2023, will grow beyond USD 19.5 billion by 2036 (Research Nester, 2025). This expansion is fueled by demand for hybrid cloud solutions, support for remote work, and secure cloud services. Nevertheless, several key bottlenecks persist. Intrusion Detection Systems (IDS) and Intrusion Prevention Systems (IPS) remain priority areas, particularly for anomaly detection and dynamic policy orchestration (Waseem et al., 2025). Resolving these problems will be critical for enabling multi-cloud SDN solutions to meet organizations' changing security needs.

SDN-enabled multi-cloud infrastructure can present a specific set of security challenges. One of these is the need for lightweight IDS that operate in real time without degrading system performance (Nizamudeen, 2023). In such fluid environments, anomaly detection is a cornerstone of detecting and neutralizing threats before they propagate across interconnected cloud infrastructures. Distinguishing legitimate from illegitimate traffic through real-time traffic analysis is crucial for preventing large-scale security threats, such as data exfiltration, Distributed Denial of Service (DDoS) attacks, and insider threats (Houda, Khoukhi & Hafid, 2020). However, providing such protection at minimal resource cost requires careful policy tuning. This is especially critical under high traffic levels, where maintaining scalability and low latency is essential for reliable service delivery (Chaudhary et al., 2022).

The emergence of Machine Learning (ML) and Artificial Intelligence (AI) has transformed how IDS and IPS are conceived and deployed. These technologies have proved extremely useful because they can identify intricate attack patterns, adapt to emerging threats, and learn from historical traffic (Gupta, 2024). However, their integration into SDN-based multi-cloud environments remains imperfect. AI/ML-based IDS models often fail to learn effectively across heterogeneous cloud environments, produce high false-positive rates, and cannot respond to varied traffic patterns (Boryło et al., 2024). These issues highlight the need for further improvements to make optimal use of AI/ML models in securing multi-cloud environments.

Although numerous studies have shown the promise of AI-based anomaly detection in cloud infrastructure (Medjek et al., 2021; Stutz et al., 2024; Upadhyay et al., 2023), multi-cloud environments remain a nascent area of study. Such environments span multiple cloud providers, each with its own configuration. Their multi-dimensional complexity requires efficient AI models to protect the data, applications, and infrastructure underlying operations (Sathupadi, 2022). These models must be embedded within a holistic security architecture that addresses the diverse requirements of cross-cloud traffic management, policy orchestration, and anomaly detection. To strengthen their multi-cloud security posture, organizations need a comprehensive plan. Such a plan involves centralizing security management for enhanced visibility, enforcing rigorous access controls to curb malicious behaviour, and applying encryption standards to ensure data integrity (Buyya & Son, 2018). Combining next-generation AI models with these measures can provide scalable, standardized, and adaptive security controls that protect even the most sophisticated multi-cloud infrastructures against emerging threats (Seth et al., 2024).

This article presents a hybrid intelligent IDS developed to address the distinct challenges of multi-cloud environments. Our solution integrates recent deep learning techniques with SDN-based policy optimization to support real-time anomaly detection and threat mitigation in large-scale cloud environments. A key characteristic of this method is its use of lightweight IDS models coupled with AI-based policy management to improve scalability and resource utilization. By managing the complexity of cross-cloud traffic orchestration, the framework maintains consistent performance under varying workloads and stringent security requirements. In addition, the design aims to improve detection quality, substantially reduce false positives, and enable swift responses to threats in high-intensity environments. This work aims to go beyond incremental improvement by presenting a comprehensive and robust solution to persistent security issues in multi-cloud SDN infrastructures. The framework is designed to adapt to the changing threat landscape and to remain scalable and effective as businesses rely increasingly on cloud-integrated platforms.

Literature review

Cai & He (2024) propose an intelligent multi-cloud resource scheduling system with built-in endogenous security mechanisms. Their solution uses a Dynamic, Heterogeneous, and Redundant (DHR) architecture with dual-channel reasoning, which combines rule-based inference with AI-based behavior modeling so that the system continues operating under cyberattack. The system takes a resilience-oriented approach: resource paths are adaptively modified, and service availability is preserved when key scheduling routes fail. This architecture reflects a shift toward integrating security into resource management in cloud infrastructures rather than treating it as an isolated layer of functionality.

To assess the model's efficacy, the authors ran experiments on a multi-cloud resource scheduling run-log database to emulate various attack patterns. Even after extended use, the system demonstrated high reliability, substantially better than that of single-channel reasoning systems. This research underscores the need for redundancy and intelligent channel-switching mechanisms for robust cloud security. It also introduces an approach for protecting multi-cloud systems against emerging threats.

El-Kassabi et al. (2023) presented a comprehensive security enforcement mechanism for cloud workflow orchestration that uses deep learning and clustering algorithms for improved anomaly detection. The mechanism combines Gated Recurrent Unit (GRU)-based autoencoders with clustering methods, namely hierarchical clustering and Density-Based Spatial Clustering of Applications with Noise (DBSCAN). Experiments on a real dataset of pandemic-related patient medical records showed that both clustering methods were effective for anomaly detection. The work emphasizes resource efficiency in enhancing cloud security, particularly in healthcare operations, where data integrity is critical.

Nizamudeen (2023) developed an Intrusion Detection Framework (IDF) that can detect network- and application-layer attacks. The framework follows a three-stage approach: data normalization using Integer-Grading Normalization (I-GN), feature extraction through the Opposition-based Learning-Rat Inspired Optimizer (OBL-RIO), and classification with a 2D-Array-based Convolutional Neural Network (CNN). Evaluated on NetFlow-based datasets (e.g., NF-UNSWNB15, NF-BoTIoT), the model achieved higher detection effectiveness and low false-positive rates. The work highlights the value of novel preprocessing and optimization methods for precise intrusion detection.

Selvapandian & Santhosh (2021) focused on enhancing intrusion detection for multi-cloud Internet of Things (IoT) settings by overcoming the disadvantages of conventional neural-network-based IDS. Their DL-driven model improved training time and detection performance, surpassing earlier methods. Experiments on the NSL-KDD dataset demonstrated the ability of DL to improve reliability and robustness in cloud-based IoT security systems.

Gupta et al. (2022) addressed security issues in next-generation healthcare networks, which rely increasingly on IoT devices and cloud computing. Their Multi-User Security Enhancement (MUSE) system employs deep hierarchical stacked neural networks to identify malicious activity, such as the alteration of data payloads in dataflows between core clouds and IoT gateways. By integrating trained edge-cloud model layers into the core cloud, MUSE reduced training times and improved detection accuracy. The study demonstrates the performance gains possible from integrating models across cloud layers in healthcare environments.

Zhang et al. (2024) created a scalable resource allocation paradigm for serverless multi-cloud edge computing networks. The researchers used action-constrained deep reinforcement learning (deep RL) to optimize computing resource allocation and data integrity. Simulations with varied task data sizes showed that their Markov Decision Process (MDP)-based model outperformed conventional methods, reducing system costs and improving efficiency. This study emphasizes the importance of security in cloud edge computing resource allocation.

Megouache, Zitouni & Djoudi (2020) improved multi-cloud security by adding user authentication and data integrity maintenance. The approach employs a VPN for connectivity, Rivest–Shamir–Adleman (RSA) encryption for data security, and a hashing method for cloud data consistency. Tests on a social security fund dataset showed that the approach effectively prevented breaches. The research focuses on combining encrypted communication with a protected architecture to reduce multi-cloud security concerns.

Zhang & Zhe (2024) proposed a layer-based neural network architecture focusing on end-user IoT devices for fog computing security. On the CIC2017 and KDD99 datasets, their Convolutional Neural Network with Capsule Self-Attention (CNN-CapSA) model substantially improved detection accuracy. The study shows how non-sequential neural network models with adaptive backpropagation methods might improve cloud and fog computing security.

Binu, Kumar & Ravi (2024) introduced a multi-cloud security architecture for real-time applications like e-commerce. Their Threat Preventive Routing (TP Routing) improves data security, and an Incident App identifies e-commerce security issues. The system uses artificial neural networks (ANNs) to predict and detect breaches, highlighting the necessity for real-time cloud security.

Borkar (2020) addressed multi-cloud botnet attacks using an agglomerative-divisive web usage mining model. A random forest technique combined with Web Access Pattern-tree (WAP-tree) structure mining improved botnet identification. This study shows that ensemble learning approaches can help address multi-cloud security challenges.

Verma et al. (2024) explored using CNNs to detect DDoS attacks in multi-cloud environments. Experiments on the NSL-KDD dataset showed that their CNN-based model detected such attacks better than competing models. The study indicates that CNNs can improve IDS performance in multi-cloud setups.

Sanagana & Tummalachervu (2024) proposed Stacked Sparse Auto-Encoder Feature Selection–Based Deep Learning Intrusion Detection (SSAFS-DLID), which combines the Salp Swarm Algorithm (SSA) for feature selection with Long Short-Term Memory (LSTM) and the Adam optimizer for intrusion detection. Tested on large-scale cloud datasets, their system improved detection at lower computational cost. The authors conclude that combining hybrid optimization with deep learning can significantly improve cloud security.

Kanimozhi & Jacob (2021) proposed a botnet detection system employing various ML classifiers on the CSE-CIC-IDS2018 dataset. Their results showed that ANNs performed best among the classifiers evaluated, underscoring the potential of neural networks for improving botnet detection in cloud environments.

Mayuranathan et al. (2022) introduced the Enhanced Optimal Security (EOS) IDS for cloud computing platforms. The hybrid approach used a deep Kronecker neural network (DKNN) as the intrusion detection algorithm and the chaotic red deer optimization algorithm to select salient features. EOS-IDS was found to detect intrusions effectively while mitigating overfitting and false positives.

Soussi et al. (2023) developed Multi-cloud Elastic Resource Learning and Intelligent Network Security (MERLINS), a security architecture for 5G and next-generation 6G networks that applies Moving Target Defense (MTD) techniques reinforced with deep reinforcement learning (deep-RL). By modelling networks as a multi-objective Markov Decision Process (MOMDP), MERLINS adjusts security policies in real time, outperforming conventional deep-RL approaches such as Deep Q-Network (DQN) and Proximal Policy Optimization (PPO) by a factor of two. Experiments demonstrated its efficacy against advanced persistent threats (APTs) while ensuring strict Service Level Agreement (SLA) adherence. Future studies aim to enhance the explainability of AI-based decisions in high-stakes multi-tenant Telco Clouds.

Alhassan (2024) proposed a solution to security and resource management issues in multi-cloud settings, where varying customer needs and CSP configurations add complexity. The model used a Self-Adaptive Flower Pollination-based RSA (SA-FPRSA) method to secure data integrity and verify availability. To address inefficiencies in virtual machine (VM) resource allocation, an SA Heap-Based Optimizer (HBO) was developed to minimize time complexity and energy usage. The study confirmed the model's effectiveness in improving security and optimizing resource allocation in multi-cloud environments. The author suggested future improvements to further reduce model complexity and maximize performance.

While significant progress has been made in strengthening multi-cloud security infrastructure, key research areas still require closer attention. Previous research often uses narrow, single-focus security approaches. These approaches address specific aspects such as anomaly detection or user authentication without considering how combining strategies could strengthen overall defense mechanisms.

In addition, many frameworks lack a well-designed, coherent architecture and favor piecemeal security solutions over an end-to-end solution that addresses the intricacies of multi-cloud environments. This siloed approach omits critical considerations such as operational resilience, self-healing mechanisms, and adaptive policy management, which are essential for maintaining service continuity during security incidents. Moreover, reliance on limited datasets undermines the validity of conclusions, since such datasets cannot adequately capture the wide range of security threats encountered on diverse cloud platforms. There is therefore an immediate need for more holistic frameworks that combine multiple modelling approaches and use diverse, real-world datasets to establish a robust and adaptive security posture in multi-cloud environments. A multi-cloud setup presents unique challenges that call for a resilient and balanced security infrastructure to protect data, applications, and core infrastructure on cloud-based platforms. Ensuring data security in such setups therefore requires a comprehensive strategy that addresses these complexities.

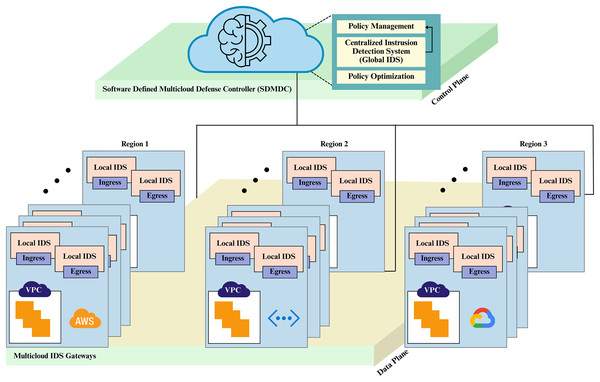

Deep learning-based security framework for SDN multi-cloud environments

Here, we introduce a comprehensive security framework for multi-cloud environments that uses deep learning and meta-heuristic optimization to address the complexities of securing distributed multi-cloud infrastructures. The framework aims to improve security across different cloud platforms by deploying threat detection and mitigation techniques that adapt to the dynamic nature of multi-cloud environments. It is derived from foundational principles of public cloud architecture and SDN, the most prominent of which is the separation of the control plane and data plane. This separation enables two core components: the Software Defined Multicloud Defense Controller (SDMDC) and the Multicloud IDS Gateways (MCIDS-G). As the centralized control plane, the SDMDC relies on deep learning to examine data streams, identify anomalies, and dynamically adapt security policies across clouds. Policy optimization is performed by the Lemurs Optimizer (LO), a swarm intelligence-based module. Implemented as part of the SDN data plane, the MCIDS-G gateways monitor and protect inter-cloud data traffic. They use DL models to analyze traffic patterns and identify threats in real time, followed by IDS/IPS-based enforcement across clouds. The architecture of the SDMDC is illustrated in Fig. 1.

Figure 1: Proposed Software Defined Multicloud Defense Controller (SDMDC) architecture.

Proposed architecture

Software Defined Multicloud Defense Controller (SDMDC)

The SDMDC is the centralized intelligence element of the architecture and controls security operations across multiple heterogeneous clouds. It operates at the control plane, separating security management from the data plane, where the MCIDS-G are situated. The SDMDC achieves a uniform and scalable security posture across leading cloud platforms such as Azure, AWS, and Google Cloud by coordinating global security strategy, threat detection, and policy enforcement.

Each of the SDMDC's three core components (policy optimization, global IDS, and policy management) contributes to real-time cloud security.

Policy management

The SDMDC policy management module is responsible for creating, distributing, and enforcing security policies in a multi-cloud system. These policies define how cloud platforms react to threats, govern traffic, and assign threat levels. By centralizing control and coordinating security measures, the SDMDC ensures that all cloud environments adhere to a consistent policy, which helps minimize misconfigurations.

The SDMDC monitors incoming data and adjusts rules dynamically to reflect emerging risks and traffic behavior. For example, if the local IDS in Google Cloud detects a Structured Query Language (SQL) injection attack, the SDMDC modifies the policy to prevent the application from being exploited and rolls the updated policy out to all connected cloud platforms, including AWS and Azure. When a botnet campaign or other coordinated attack is detected, the SDMDC instructs all MCIDS-G to apply the security policies needed to limit the impact of command-and-control (C&C) nodes across all clouds. This coordinated mechanism ensures consistent protection across all connected cloud environments and prevents security vulnerabilities from being exploited.
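The coordinated response described above can be sketched as a controller that turns one gateway's alert into a rule and installs that rule on every connected gateway. This is a minimal sketch under stated assumptions: the `Alert`, `PolicyRule`, `Gateway`, and `PolicyManager` classes, the attack-to-rule mapping, and the rule strings are all hypothetical illustrations, not the SDMDC's actual interfaces.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Alert:
    """Detection reported by a regional MCIDS-G (hypothetical schema)."""
    cloud: str        # cloud that raised the alert, e.g. "gcp"
    attack_type: str  # e.g. "sql_injection", "botnet"
    target: str       # affected application, or C&C address for botnets


@dataclass(frozen=True)
class PolicyRule:
    action: str  # e.g. "block", "rate_limit"
    match: str   # what the rule matches on
    reason: str


@dataclass
class Gateway:
    """Stand-in for the enforcement point of one MCIDS-G."""
    cloud: str
    rules: list = field(default_factory=list)

    def install(self, rule: PolicyRule) -> None:
        if rule not in self.rules:  # installing the same rule twice is a no-op
            self.rules.append(rule)


class PolicyManager:
    """Stand-in for the SDMDC policy-management module."""

    def __init__(self, gateways):
        self.gateways = {g.cloud: g for g in gateways}

    def derive_rule(self, alert: Alert) -> PolicyRule:
        # Hypothetical mapping from attack type to a mitigation rule.
        if alert.attack_type == "sql_injection":
            return PolicyRule("block", f"sqli-signature:{alert.target}", f"SQLi seen in {alert.cloud}")
        if alert.attack_type == "botnet":
            return PolicyRule("block", f"dst:{alert.target}", f"C&C node reported by {alert.cloud}")
        return PolicyRule("rate_limit", f"target:{alert.target}", alert.attack_type)

    def handle(self, alert: Alert) -> PolicyRule:
        rule = self.derive_rule(alert)
        # Push the rule to every connected cloud, not only the one that raised the alert.
        for gateway in self.gateways.values():
            gateway.install(rule)
        return rule


if __name__ == "__main__":
    gateways = [Gateway("aws"), Gateway("azure"), Gateway("gcp")]
    manager = PolicyManager(gateways)
    rule = manager.handle(Alert("gcp", "sql_injection", "orders-api"))
    for g in gateways:
        print(g.cloud, g.rules == [rule])  # every cloud now holds the GCP-derived rule
```

The design choice the sketch illustrates is that detection is local but enforcement is global: the rule derived from a single cloud's alert is broadcast to all gateways.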

Centralized Intrusion Detection System (global IDS)

The global IDS, the principal hub of the SDMDC, uses the Cross-Cloud Threat Transformer (CCTT) to identify and link threats across different cloud infrastructures. To detect threats in real time and correlate them across clouds, it collects security alerts, logs, and anomalous traffic from the distributed MCIDS-G.

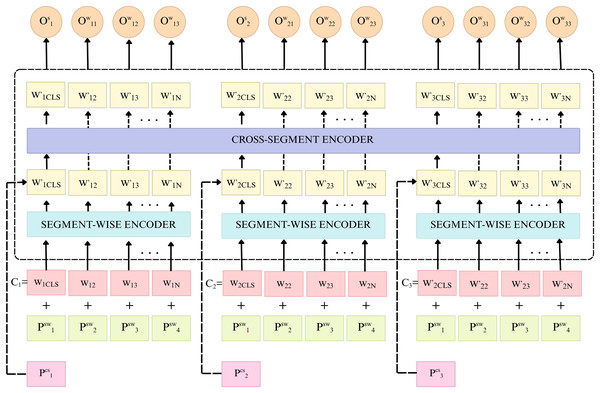

Cross-Cloud Threat Transformer (CCTT)

The CCTT model analyzes traffic flows between clouds and uses hierarchical attention to identify and characterize coordinated cyberattacks on different cloud services. It aggregates the security alerts sent by all MCIDS-G modules, each of which monitors traffic patterns in its own cloud. These alerts are then combined and examined to identify larger attack campaigns that cannot be detected within a single cloud. The global IDS analyzes traffic patterns and logs to identify botnet operations, DDoS attacks, and multi-vector intrusions.
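To make "a campaign that cannot be detected within a single cloud" concrete, the sketch below groups MCIDS-G alerts by attack type and time window and reports the attack types seen in at least two clouds. This is a deliberately simple rule-based illustration, not the CCTT, which learns such correlations with attention over traffic sequences. The window size, the cloud threshold, and the `(timestamp, cloud, attack_type)` alert format are assumptions.

```python
from collections import defaultdict


def correlate(alerts, window_s=60, min_clouds=2):
    """Group (timestamp_s, cloud, attack_type) alerts into fixed time windows and
    return (window, attack_type, clouds) for attack types seen in >= min_clouds clouds."""
    clouds_seen = defaultdict(set)  # (window index, attack type) -> clouds reporting it
    for ts, cloud, attack in alerts:
        clouds_seen[(int(ts // window_s), attack)].add(cloud)
    return sorted(
        (window, attack, sorted(clouds))
        for (window, attack), clouds in clouds_seen.items()
        if len(clouds) >= min_clouds
    )


if __name__ == "__main__":
    alerts = [(5, "aws", "ddos"), (20, "gcp", "ddos"), (30, "azure", "bruteforce"), (130, "aws", "ddos")]
    print(correlate(alerts))  # [(0, 'ddos', ['aws', 'gcp'])]
```

Here the DDoS alerts from AWS and GCP in the same minute are reported as one cross-cloud campaign. The isolated brute-force alert and the later single-cloud DDoS alert are not.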

The CSE-CIC-IDS2018 dataset was adapted to reflect an SDN-enabled multi-cloud security setting by simulating inter-cloud traffic flows using an enterprise-grade multi-cloud emulation environment. Each traffic flow was labeled according to the originating virtual cloud domain (e.g., Cloud-1, Cloud-2, Cloud-3), mimicking segmentation patterns such as AWS Virtual Private Clouds (VPCs), Azure Virtual Networks (VNets), and Google Cloud Platform (GCP) subnets. SDN-specific behaviors such as OpenFlow-style rule propagation, controller-to-switch signaling, and inter-VPC routing were simulated using Mininet in conjunction with the OpenDaylight SDN controller. This enabled realistic domain-aware policy enforcement and visibility of east-west traffic. The CCTT was trained to detect coordinated attack patterns across cloud segments, while LSTM-based MCIDS-G modules learned localized ingress and egress patterns. This process maintained the fidelity of original flows without generating artificial data, enhancing domain alignment with real-world SDN multi-cloud infrastructures.
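The per-flow domain labeling described above can be illustrated with Python's standard `ipaddress` module. The paper does not state how flows were mapped to domains, so the CIDR blocks assigned to Cloud-1 through Cloud-3, the `src_ip`/`dst_ip` field names, and the direction labels below are hypothetical. The sketch only adds labels and leaves the original flow fields untouched, consistent with the goal of not generating artificial data.

```python
import ipaddress

# Hypothetical address plan: one CIDR block per emulated cloud domain.
DOMAINS = {
    "Cloud-1": ipaddress.ip_network("10.1.0.0/16"),  # AWS-VPC-like segment
    "Cloud-2": ipaddress.ip_network("10.2.0.0/16"),  # Azure-VNet-like segment
    "Cloud-3": ipaddress.ip_network("10.3.0.0/16"),  # GCP-subnet-like segment
}


def domain_of(ip: str) -> str:
    """Return the emulated cloud domain containing `ip`, or "external"."""
    addr = ipaddress.ip_address(ip)
    for name, net in DOMAINS.items():
        if addr in net:
            return name
    return "external"


def label_flow(flow: dict) -> dict:
    """Attach domain and direction labels; the original flow fields are left unchanged."""
    src, dst = domain_of(flow["src_ip"]), domain_of(flow["dst_ip"])
    if "external" in (src, dst):
        direction = "north-south"   # ingress/egress
    elif src == dst:
        direction = "intra-domain"
    else:
        direction = "east-west"     # inter-cloud traffic
    return {**flow, "src_domain": src, "dst_domain": dst, "direction": direction}


if __name__ == "__main__":
    print(label_flow({"src_ip": "10.1.2.3", "dst_ip": "10.2.0.9", "bytes": 1500}))
```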

The CCTT comprises four transformer encoder layers, each containing eight self-attention heads with an embedding dimension of 256. The model processes multivariate input sequences derived from the extended CSE-CIC-IDS2018 dataset, including flow statistics (e.g., duration, bytes), SDN-specific metadata (e.g., domain labels, controller paths), and cloud anomaly indicators (Canadian Institute for Cybersecurity, 2018).

The dataset also includes attacks that mimic cloud-based adversarial behaviour, such as DDoS, Brute Force (BF), Botnet, SQL Injection, and Infiltration. These attack classes improve the model's ability to identify complex, distributed attacks, correlate attacks across clouds, and perform end-to-end traffic analysis.

Collecting Data: The SDMDC receives traffic traces and security alerts from each MCIDS-G's LSTM-augmented local IDS. The CCTT examines this data to detect suspicious patterns or potential threats that could indicate cross-cloud attack activity. For example, the global IDS can detect a DDoS campaign that spans more than one cloud. Figure 2 shows the components of the CCTT model used in the global IDS module.

Figure 2: Cross-Cloud Threat Transformer (CCTT) for global IDS (Chalkidis et al., 2022).

The CCTT model uses a transformer-based architecture to examine and correlate security risks across various cloud environments. Utilizing a self-attention mechanism, it simultaneously examines data on network traffic time series and security warnings from various cloud platforms to identify unusual patterns that might indicate coordinated assaults.

Input representation (cloud traffic and alerts)

The input to the CCTT is a sequence of traffic vectors, each representing network traffic trends or alerts from a distinct MCIDS-G at a particular time step. The input at time step $t$ is denoted as:

$$X_t = \{x_t^{1}, x_t^{2}, \ldots, x_t^{N}\} \tag{1}$$

where $x_t^{i}$ is the traffic or alert data from cloud platform $i$ (e.g., AWS, Azure, GCP) at time $t$, and $N$ is the number of cloud environments being monitored.
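Equation (1) does not specify how the $N$ per-cloud vectors are combined. The sketch below assumes they are concatenated in a fixed cloud order and then slices the resulting time series into the 50-step input sequences mentioned in the training configuration. The function names and the concatenation choice are hypothetical.

```python
def build_step(per_cloud, clouds):
    """X_t = {x_t^1, ..., x_t^N}: concatenate per-cloud feature vectors in a fixed
    cloud order, so each position always refers to the same cloud (assumption)."""
    return [value for cloud in clouds for value in per_cloud[cloud]]


def make_sequences(steps, seq_len=50, stride=1):
    """Slide a window over time-ordered X_t vectors to form model input sequences."""
    return [steps[i:i + seq_len] for i in range(0, len(steps) - seq_len + 1, stride)]


if __name__ == "__main__":
    clouds = ["aws", "azure", "gcp"]
    # Toy per-cloud features (two values per cloud) over 60 time steps.
    steps = [build_step({"aws": [t, 0.0], "azure": [0.0, 1.0], "gcp": [0.5, t]}, clouds)
             for t in range(60)]
    seqs = make_sequences(steps)
    print(len(seqs), len(seqs[0]), len(seqs[0][0]))  # 11 50 6
```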

1. Self-Attention Mechanism

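The CCTT applies a self-attention mechanism to compute the relationships between input elements in the sequence. For each input element $x_i$, the model computes three vectors:

- Query ($Q$)
- Key ($K$)
- Value ($V$)

These vectors are computed as:

$$Q = XW^{Q}, \qquad K = XW^{K}, \qquad V = XW^{V} \tag{2}$$

where $X$ stacks the input vectors $x_i$ as rows, and $W^{Q}$, $W^{K}$, and $W^{V}$ are learned weight matrices for the queries, keys, and values.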

The attention weight for each pair of traffic data points is calculated from the inner product of the query and key vectors, scaled by $\sqrt{d_k}$ and normalized with a softmax function:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V \tag{3}$$

where $d_k$ is the dimension of the key vectors. This weighting lets the model prioritize key traffic flows or anomalies according to the importance of each data point.
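Equations (2) and (3) can be written out in a few lines of plain Python. This is a minimal, dependency-free sketch of standard scaled dot-product self-attention with row-vector inputs (each row of `X` is one $x_i$). It is not the CCTT's PyTorch implementation, and the helper names are our own.

```python
import math


def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Eq. (3): softmax(Q K^T / sqrt(d_k)) V. Returns outputs and attention weights."""
    d_k = len(K[0])
    K_t = [list(col) for col in zip(*K)]
    scores = matmul(Q, K_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights


def self_attention(X, W_q, W_k, W_v):
    """Eq. (2) followed by Eq. (3): project X into queries, keys, values, then attend."""
    return attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v))


if __name__ == "__main__":
    # Two inputs with identical keys attend equally, so the output is the mean of V.
    out, w = attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [4.0, 0.0]])
    print(w, out)  # [[0.5, 0.5]] [[3.0, 0.0]]
```

Because each row of weights sums to one, every output row is a convex combination of the value vectors. This is what lets the model emphasize some traffic flows over others.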

2. Multi-Head Attention

To capture different aspects of the traffic data, the CCTT employs multi-head attention. Each attention head computes its own set of $Q$, $K$, and $V$ matrices, enabling the model to identify diverse relationships between cloud traffic flows:

$$\text{head}_j = \text{Attention}\left(XW_j^{Q}, XW_j^{K}, XW_j^{V}\right), \qquad \text{MultiHead}(X) = \text{Concat}(\text{head}_1, \ldots, \text{head}_h)\,W^{O} \tag{4}$$

Each head operates as a distinct self-attention mechanism, $h$ is the number of heads, and $W^{O}$ acts as the output projection matrix. Combining all heads yields a richer representation of traffic and anomaly patterns across clouds.
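Equation (4) extends the single-head computation by running several heads with their own projections and concatenating the results. The sketch below repeats the attention helpers so that it stands alone. Here `heads` is a list of hypothetical $(W_j^Q, W_j^K, W_j^V)$ triples and `W_o` plays the role of $W^O$. In the CCTT, $h = 8$ heads share a 256-dimensional embedding; the tiny matrices in the usage note are for illustration only.

```python
import math


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention, Eq. (3)."""
    d_k = len(K[0])
    scores = matmul(Q, [list(c) for c in zip(*K)])
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V)


def multi_head(X, heads, W_o):
    """Eq. (4): run each head with its own projections, concatenate, project by W_o.

    heads: list of (W_q, W_k, W_v) triples, one per head (hypothetical weights).
    """
    outputs = [attention(matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)) for Wq, Wk, Wv in heads]
    # Concatenate head outputs along the feature axis, row by row.
    concat = [sum((head_out[i] for head_out in outputs), []) for i in range(len(X))]
    return matmul(concat, W_o)
```

For example, with `X = [[1.0, 0.0], [0.0, 1.0]]`, two identical one-dimensional heads, and an identity `W_o`, each output row contains two equal columns, one per head.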

3. Positional Encoding for Sequential Data

Since Transformers do not natively interpret sequential data (such as time-series traffic flows), the CCTT applies positional encoding to convey the order of the input sequence. The positional encoding is added to the input traffic vectors to preserve their chronological structure:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right) \tag{5}$$

where $pos$ represents the position of the traffic data in the sequence, $i$ indexes the dimensions of the input vector, and $d_{\text{model}}$ is the embedding dimension.
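Equation (5) is the standard sinusoidal encoding and can be computed directly. The sketch below builds the table for a 50-step sequence with $d_{\text{model}} = 256$, matching the configuration reported for the CCTT, and adds it element-wise to the input vectors. The function names are illustrative.

```python
import math


def positional_encoding(num_positions, d_model):
    """Eq. (5): PE[pos][2i] = sin(pos / 10000^(2i/d_model)), PE[pos][2i+1] = cos(same angle)."""
    pe = [[0.0] * d_model for _ in range(num_positions)]
    for pos in range(num_positions):
        for even in range(0, d_model, 2):  # `even` plays the role of 2i
            angle = pos / (10000 ** (even / d_model))
            pe[pos][even] = math.sin(angle)
            if even + 1 < d_model:
                pe[pos][even + 1] = math.cos(angle)
    return pe


def add_positional_encoding(sequence):
    """Add the encoding element-wise to a list of equal-length input vectors."""
    pe = positional_encoding(len(sequence), len(sequence[0]))
    return [[x + p for x, p in zip(row, pe_row)] for row, pe_row in zip(sequence, pe)]


if __name__ == "__main__":
    pe = positional_encoding(50, 256)  # 50 time steps, embedding dimension 256
    print(len(pe), len(pe[0]))  # 50 256
```

Low dimensions oscillate quickly and high dimensions slowly, so each of the 50 positions receives a distinct pattern that the attention layers can use to recover temporal order.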

4. Output and Anomaly Detection

The output of the CCTT is a threat detection score that indicates whether a particular traffic flow or sequence is abnormal. It is computed by passing the multi-head attention outputs through several fully connected layers with Rectified Linear Unit (ReLU) activation functions, shown here for one layer:

$$y = \mathrm{ReLU}\left(W_{f}\,\mathrm{MultiHead}(X) + b_{f}\right) \tag{6}$$

where $W_f$ and $b_f$ are the weights and biases of the fully connected layer. The output $y$ is then fed into a softmax layer to produce a probability distribution over potential threats. The class with the highest probability is the detected attack type (e.g., DDoS, Botnet, SQL Injection).
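The classification head can be sketched as a ReLU layer followed by a softmax, with the most probable class taken as the detected attack type. The class names follow Table 1. The toy weights, which are chosen so that the result can be checked by hand, and the helper names are hypothetical.

```python
import math

# Class names from Table 1; the weights used below are hypothetical.
ATTACK_CLASSES = ["Benign", "Botnet", "Web attack", "DDOS attack",
                  "DOS attack", "Brute force", "Infiltration"]

def relu(v):
    return [max(0.0, x) for x in v]

def dense(W, b, v):
    """Fully connected layer W v + b, with W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def softmax(v):
    peak = max(v)
    exps = [math.exp(x - peak) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

def classify(z, hidden_layer, output_layer):
    """Eq. (6) then softmax: ReLU hidden layer, then one probability per attack class."""
    h = relu(dense(*hidden_layer, z))
    probs = softmax(dense(*output_layer, h))
    best = max(range(len(probs)), key=probs.__getitem__)
    return ATTACK_CLASSES[best], probs

hidden = ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0])
output = ([[0.0, 0.0, 1.0] if k == 3 else [0.0, 0.0, 0.0] for k in range(7)], [0.0] * 7)
label, probs = classify([1.0, 2.0], hidden, output)
```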

Implementation and training configuration

The CCTT was implemented in PyTorch as a four-layer transformer encoder with eight attention heads per layer and an embedding dimension of 256. The model uses sinusoidal positional encoding and domain-aware embeddings to encode temporal and cross-domain context. Dropout was set to 0.2 to reduce overfitting. The model was trained with the Adam optimizer (learning rate 0.0001, batch size 64) for up to 25 epochs with early stopping (patience = 5), and it converged around the 16th epoch. Categorical cross-entropy was used as the loss function, which is appropriate because multiple attack types must be classified. Each input sequence consisted of 50 time-stepped observations that combined flow metadata, SDN state, and anomaly indicators. Training was performed on an NVIDIA A100 graphics processing unit (GPU).
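The early-stopping rule (at most 25 epochs, patience 5) can be sketched as a small helper that reads precomputed per-epoch validation losses. The helper and the loss values in the usage line are hypothetical. In a real PyTorch loop, each loss would be computed after training that epoch.

```python
def early_stopping(val_losses, max_epochs=25, patience=5):
    """Return (best_epoch, last_epoch_run) for per-epoch validation losses (1-indexed epochs)."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch  # stop: no improvement for `patience` epochs
    return best_epoch, min(len(val_losses), max_epochs)

# Hypothetical validation losses: improvement stops after epoch 4.
result = early_stopping([0.9, 0.6, 0.45, 0.4, 0.41, 0.42, 0.40, 0.43, 0.44, 0.45, 0.5])
```

In this example the best weights come from epoch 4, and training halts at epoch 9.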

Policy optimization

The policy optimization component built into the SDMDC optimizes security configurations and operational parameters across the cloud infrastructure to balance computational efficiency and threat detection accuracy. Using the Lemurs Optimizer (LO) (Abasi et al., 2022), a global optimization metaheuristic, the component dynamically tunes anomaly detection thresholds and resource allocation in response to real-time network dynamics and evolving security threats. During high-volume traffic or attack scenarios, detection sensitivity is increased to capture weak anomalies. During periods of low network usage, sensitivity is reduced to lower computational overhead. This adaptive behavior lets the system scale effectively under high traffic while maintaining accurate threat detection.
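The direction of this adjustment can be shown with a simple load-dependent threshold rule. This rule is a hand-written illustration and not the LO-tuned policy. The linear form, the slope of 0.2, the bounds, and the function name are all assumptions. A flow is assumed to be flagged when its anomaly score meets or exceeds the threshold, so a lower threshold means higher sensitivity.

```python
def adaptive_threshold(traffic_rate, baseline_rate, base=0.5, lowest=0.3, highest=0.7, slope=0.2):
    """Return an anomaly-score threshold; a flow is flagged when its score >= threshold.

    Load above the baseline lowers the threshold (more sensitive detection);
    load below it raises the threshold (fewer alerts, less downstream computation).
    All constants are illustrative assumptions.
    """
    load = traffic_rate / baseline_rate if baseline_rate > 0 else 1.0
    threshold = base - slope * (load - 1.0)
    return min(highest, max(lowest, threshold))

quiet = adaptive_threshold(20, 100)
normal = adaptive_threshold(100, 100)
surge = adaptive_threshold(300, 100)
```

In the framework, the LO searches for parameters such as these thresholds rather than applying a fixed slope.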

1. Initialize the population of lemurs (policies) randomly.
2. Evaluate the fitness of each lemur (resource consumption and detection accuracy).
3. Set the best position as the global best (G_best).
4. While the termination condition is not met:
    a. For each lemur:
        i. Update its position and velocity.
        ii. Evaluate its new fitness.
        iii. If the new fitness improves, update the global best (G_best).
    b. Adjust detection thresholds and resources based on traffic patterns.
5. Return the optimal policy (G_best).

The LO algorithm first generates candidate policies from various detection thresholds and the available resources. Each candidate's fitness is defined by its detection effectiveness and its real-time resource consumption. Lemur velocities and positions are updated adaptively to explore new options, and policy parameters are adjusted to meet traffic needs. The best candidate is updated at every iteration until the termination condition is met. The result is a policy that balances resource usage and threat detection in the multi-cloud system.

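The LO uses a fitness function (FF) to improve classifier accuracy, and a positive value represents the quality of a candidate solution. The FF is defined as the classifier error rate, which the optimizer seeks to minimize:

$$\mathrm{fitness}(x_i) = \mathrm{ClassifierErrorRate}(x_i) = \frac{\text{number of misclassified samples}}{\text{total number of samples}} \times 100 \tag{7}$$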
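Assuming the FF takes the common form of a classifier error rate, it can be computed as the percentage of misclassified samples. The function name and the label lists in the usage line are hypothetical.

```python
def classifier_error_rate(y_true, y_pred):
    """Fitness as in Eq. (7): percentage of misclassified samples (lower is better)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    misclassified = sum(t != p for t, p in zip(y_true, y_pred))
    return 100.0 * misclassified / len(y_true)

# Hypothetical labels: one of four samples is misclassified.
fitness = classifier_error_rate(["DDoS", "Normal", "DoS", "Normal"],
                                ["DDoS", "Normal", "Normal", "Normal"])
```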

The LO used for adaptive policy tuning in the SDMDC was configured with a population size of 30 and a maximum of 100 iterations. Optimization stopped when it reached the maximum number of iterations or when the fitness value converged over three consecutive generations. The fitness function used a multi-objective score that integrated policy decision latency, detection accuracy, and policy conflict rate. The encoded parameters included flow rule weights, alert correlation thresholds, and controller path selection, which allowed the optimizer to tune policies dynamically across inter-cloud SDN segments.
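The steps of the algorithm above, together with this configuration (population 30, 100 iterations, stop after three generations without improvement), can be sketched as a generic population-based loop. Step 4a(i) uses a velocity update borrowed from particle swarm optimization. It is an assumption made to mirror the "update position and velocity" step and is not the update rule published by Abasi et al. (2022). The two-parameter policy, the surrogate cost terms, and all names are hypothetical.

```python
import random

def policy_cost(p):
    """Hypothetical multi-objective cost to minimize (surrogates, not measured values)."""
    threshold, rule_weight = p
    detection_loss = (threshold - 0.6) ** 2 + (rule_weight - 0.3) ** 2  # stand-in for 1 - accuracy
    latency = 0.05 * rule_weight                                       # stand-in for decision latency
    conflict = 0.02 * abs(threshold - rule_weight)                     # stand-in for conflict rate
    return detection_loss + latency + conflict

def optimize_policy(cost, dim=2, pop_size=30, max_iter=100, patience=3, seed=0):
    rng = random.Random(seed)

    def clip(x):
        return min(1.0, max(0.0, x))

    # Steps 1-3: random population in [0, 1]^dim, initial fitness, global best.
    pos = [[rng.random() for _ in range(dim)] for _ in range(pop_size)]
    vel = [[0.0] * dim for _ in range(pop_size)]
    fit = [cost(p) for p in pos]
    best_idx = min(range(pop_size), key=fit.__getitem__)
    g_best, g_fit = pos[best_idx][:], fit[best_idx]
    stale = 0
    for _ in range(max_iter):                      # Step 4: main loop
        improved = False
        for i in range(pop_size):                  # Step 4a: each lemur
            for d in range(dim):                   # 4a(i): PSO-style update (assumption)
                pull = 1.5 * rng.random() * (g_best[d] - pos[i][d])
                jitter = 0.1 * (rng.random() - 0.5)
                vel[i][d] = 0.7 * vel[i][d] + pull + jitter
                pos[i][d] = clip(pos[i][d] + vel[i][d])
            fit[i] = cost(pos[i])                  # 4a(ii): new fitness
            if fit[i] < g_fit:                     # 4a(iii): update G_best
                g_best, g_fit, improved = pos[i][:], fit[i], True
        stale = 0 if improved else stale + 1
        if stale >= patience:                      # no improvement for `patience` generations
            break
    return g_best, g_fit                           # Step 5: best policy found

best_policy, best_cost = optimize_policy(policy_cost)
```

Step 4b is not modeled here. In the framework, the returned vector would be applied as detection thresholds and resource settings on the SDN controllers.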

Multi-cloud IDS gateways (MCIDS-G)

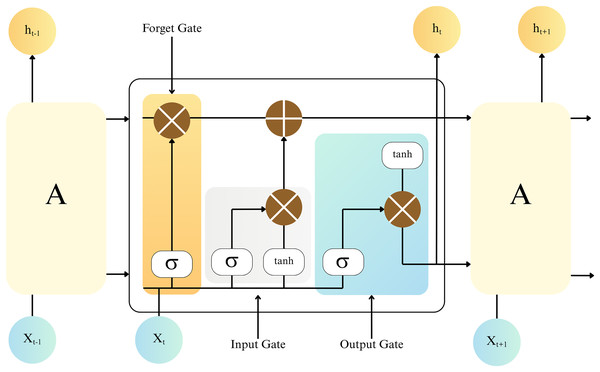

MCIDS-G are the distributed security nodes in the multi-cloud data plane. Within each cloud environment, such as AWS, Azure, or Google Cloud, the gateways continuously monitor ingress, egress, and lateral traffic flows to detect security risks. Every MCIDS-G contains a local LSTM-based IDS (Alimi et al., 2022). Based on its analysis of cloud traffic, which emphasizes localized threats, each gateway sends security alerts to the SDMDC for further investigation and policy enforcement.

In every cloud environment, the MCIDS-G serves as the principal security measure. Its local IDS uses LSTM models to detect injection attacks (including SQL injection and command injection), data exfiltration attempts, botnet operations, and DDoS attacks on networks and IoT devices. The LSTM-based IDS is highly effective at detecting attack patterns that unfold over time, such as slow-rate DDoS attacks, progressive brute-force attacks, and gradual data breaches (Koroniotis & Moustafa, 2021). The MCIDS-G detects and mitigates both internal and external threats, including lateral movement, and so provides strong protection for cloud infrastructures.

The localized intrusion detection engine of the MCIDS-G implements a two-layer LSTM architecture in PyTorch. Each layer has 128 hidden units and uses ReLU activation to learn nonlinear temporal patterns. These modules process input sequences from the BoT-IoT dataset and focus on temporal features such as packet counts, flow duration, and byte intervals. Feature selection was guided by temporal relevance (for the LSTM) and cross-domain correlation (for the CCTT), and all inputs were normalized before training. A dropout rate of 0.3 was used to reduce overfitting. The model was trained with the Adam optimizer at a learning rate of 0.001 and a batch size of 64. Binary cross-entropy was chosen as the loss function because the local IDS treats anomaly detection as a binary task (normal vs. anomalous). Training was capped at 20 epochs with early stopping (patience = 5) to avoid overfitting. Input sequences were 30 time steps long, and each one represented a segment of ingress or egress flows within a single cloud zone.
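The text states that inputs were normalized and cut into 30-step sequences, but it does not name the normalization method. The sketch below assumes per-feature min-max scaling and overlapping windows with a stride of 1. Both choices, the helper names, and the synthetic records are illustrative assumptions.

```python
def min_max_normalize(rows):
    """Scale each feature column to [0, 1] (assumed method); constant columns map to 0.0."""
    cols = list(zip(*rows))
    lows = [min(c) for c in cols]
    highs = [max(c) for c in cols]
    return [[(x - lo) / (hi - lo) if hi > lo else 0.0 for x, lo, hi in zip(row, lows, highs)]
            for row in rows]

def sliding_windows(rows, window=30, stride=1):
    """Cut a time series into overlapping sequences of `window` time steps."""
    return [rows[s:s + window] for s in range(0, len(rows) - window + 1, stride)]

# Synthetic records: [packet_count, flow_duration, bytes] for 40 consecutive time steps.
records = [[float(t), float(t % 7), float(10 * t)] for t in range(40)]
windows = sliding_windows(min_max_normalize(records), window=30)
```

Each element of `windows` has the shape (30 time steps × features) that a batched LSTM input expects.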

Feature selection relied on the extended CSE-CIC-IDS2018 dataset. This dataset contains both traditional flow-based features (e.g., total Fwd packets, flow duration, flag counts) and SDN-specific metadata (e.g., flow rules, controller-assigned policy tags, cloud domain IDs). Following ranking methods from related work such as Lin et al. (2021), we selected the 30–40 highest-ranked features, which contributed most to separating the anomaly classes in each cloud segment. The multivariate input was then segmented and encoded with positional and domain embeddings to prepare it for transformer-based processing.
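The ranking method is not specified beyond the reference to related work. The sketch below therefore assumes that the score is the absolute Pearson correlation between each feature and a binary attack label, and that the top `k` features are kept. The feature names and data are hypothetical. In practice `k` would be between 30 and 40.

```python
import math

def pearson(xs, ys):
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)

def top_k_features(X, y, names, k):
    """Rank features by |Pearson correlation| with the label (assumed score); keep the top k."""
    scores = {name: abs(pearson([row[j] for row in X], y)) for j, name in enumerate(names)}
    return sorted(names, key=lambda name: scores[name], reverse=True)[:k]

names = ["flow_duration", "total_fwd_packets", "controller_policy_tag"]  # hypothetical columns
X = [[5.0, 10.0, 1.0], [5.0, 12.0, 0.0], [5.0, 100.0, 1.0], [5.0, 110.0, 0.0]]
y = [0, 0, 1, 1]  # 0 = normal, 1 = attack
selected = top_k_features(X, y, names, k=1)
```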

Local IDS for ingress traffic using LSTM

At each cloud’s Ingress Gateway, an LSTM-based local IDS continuously evaluates ingress traffic, that is, data entering the cloud environment. Because the LSTM model is trained to recognize sequential patterns in traffic, it can effectively spot anomalies such as significant data breaches, brute-force login attempts, and malware propagation. For instance, during a DDoS attack on Internet-facing AWS services, the local IDS can detect the abnormal spike in connection requests by analyzing the surge in network activity. When traffic is flagged as a possible security risk, the MCIDS-G relays the alert to the SDMDC for further investigation. The SDMDC may confirm that the attack is part of a larger cross-cloud DDoS operation. It then contains the attack without disrupting legitimate traffic by adjusting the flow policies in the relevant SDN controllers to limit or block traffic from the identified malicious IPs.

Local IDS for egress traffic (LSTM-based detection)

Egress traffic, that is, data leaving the cloud infrastructure, is also vital for detecting data exfiltration, malware communication, and insider threats. The LSTM-based local IDS at the Egress Gateway continuously monitors outbound network flows for unusual patterns, such as massive data transfers or communication from infected VMs. For example, when a compromised VM in Google Cloud begins sending sensitive data to an external host without authorization, the LSTM model detects and reports this anomaly. The report is routed to the SDMDC, which analyzes the traffic pattern and validates the threat. The SDMDC then promptly updates the flow rules in the Google Cloud SDN controller to filter out the illegitimate egress traffic from the compromised VM. It also quarantines the VM to prevent further spread or unauthorized communication.

Egress Gateway detection for East-West traffic (lateral movement)

Beyond inbound and outbound traffic, the LSTM-based local IDS systematically inspects east-west (lateral) traffic. This includes intra-cloud communication among VMs, services, or applications within a single VPC or across multiple VPCs. Lateral movement is a common strategy that attackers use to pivot between compromised resources within a shared cloud environment. For instance, a threat actor may gain access to a VM in Azure and attempt to move to another service in the same VPC. The local IDS then identifies the abnormal communication patterns between the VMs and flags the traffic as a significant cybersecurity threat. The alert is immediately transmitted to the SDMDC, which dynamically alters the Azure SDN controller’s flow rules to restrict further communication between compromised and uncompromised VMs. The compromised VMs are also quarantined immediately to isolate the breach and reduce the chance of further escalation.
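The three response paths described above (ingress, egress, and east-west) can be summarized as a mapping from a validated MCIDS-G alert to flow-rule updates. The sketch is a hypothetical abstraction. The `Alert` and `FlowTable` classes, the match fields, and the action strings are invented for illustration and do not correspond to OpenFlow or to any specific SDN controller API.

```python
from dataclasses import dataclass, field

@dataclass
class Alert:                 # hypothetical alert format sent by an MCIDS-G
    cloud: str               # e.g. "AWS", "Azure", "GCP"
    direction: str           # "ingress", "egress", or "east-west"
    source: str              # offending IP address or VM identifier
    confirmed: bool          # True once the SDMDC has validated the threat

@dataclass
class FlowTable:             # hypothetical stand-in for a cloud's SDN flow rules
    rules: list = field(default_factory=list)

    def add(self, match, action):
        self.rules.append({"match": match, "action": action})

def respond(alert, tables):
    """Map a validated alert to flow-rule updates on that cloud's controller."""
    if not alert.confirmed:
        return None                                    # unvalidated alerts change nothing
    table = tables[alert.cloud]
    if alert.direction == "ingress":
        table.add({"src_ip": alert.source}, "drop")    # block the malicious source
    elif alert.direction == "egress":
        table.add({"src_vm": alert.source, "dst": "external"}, "drop")
        table.add({"vm": alert.source}, "quarantine")
    elif alert.direction == "east-west":
        table.add({"src_vm": alert.source, "dst": "internal"}, "drop")
        table.add({"vm": alert.source}, "quarantine")
    else:
        raise ValueError(f"unknown direction: {alert.direction}")
    return table.rules[-1]

tables = {"AWS": FlowTable(), "Azure": FlowTable(), "GCP": FlowTable()}
ingress_rule = respond(Alert("AWS", "ingress", "203.0.113.7", True), tables)
lateral_rule = respond(Alert("Azure", "east-west", "vm-12", True), tables)
ignored = respond(Alert("GCP", "egress", "vm-3", False), tables)
```

Keeping the validation check (`confirmed`) in front of every rule change reflects the division of labor in the framework: gateways detect, and the SDMDC decides and enforces.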

The LSTM operates on a sequential timeline. Here, $x_t$ represents the input instance (for example, a network flow at time $t$), and $h_t$ represents the hidden state, which preserves memory of past traffic sequences and retains important contextual characteristics. The weight parameters are adjusted during training to capture relevant traffic patterns. Standard recurrent neural networks (RNNs) suffer from vanishing or exploding gradients when processing long sequences. LSTM mitigates these issues by using a memory cell. This enables LSTM to maintain and analyze long-range dependencies in network traffic, which is critical for identifying complex threats in dynamic cloud environments, as illustrated in Fig. 3.

Figure 3: LSTM model for local IDS.

To control the flow of information inside the network, LSTM uses three primary gates: input, forget, and output. The forget gate decides which information to discard from the memory cell based on historical traffic patterns. This gate uses the sigmoid activation function and is defined as:

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right) \tag{8}$$

where $W_f$ and $b_f$ denote the weights and biases, respectively, and $[h_{t-1}, x_t]$ is the current input vector at time $t$, which combines the hidden state from the previous step with the current network sample.

The input gate determines how much of the current traffic pattern is added to memory. It uses the sigmoid and tanh activation functions as follows:

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right) \tag{9}$$

$$\tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right) \tag{10}$$

Here, $i_t$ and $\tilde{C}_t$ represent the influence of the present input data on the hidden state and determine whether new traffic data is kept or discarded.

The memory (cell) state is then updated as follows:

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{11}$$

This ensures that only relevant information about the current traffic pattern is retained.

Finally, the output gate $o_t$ and the output vector $h_t$, which represents the detected anomalous or normal behaviour at time $t$, are calculated as:

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right) \tag{12}$$

$$h_t = o_t \odot \tanh(C_t) \tag{13}$$

The local IDS facilitates real-time network traffic analysis, utilizing LSTM’s memory capabilities to recognize anomalous patterns such as DDoS or injection attacks based on historical traffic data.
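One LSTM time step following Eqs. (8) to (13) can be written in pure Python as below. The sketch uses the standard tanh formulation shown in the equations. The toy sizes (hidden size 2, input size 3) and the all-zero weights are assumptions chosen so that the result can be checked by hand. With zero weights every sigmoid gate equals 0.5 and the candidate is 0, so the cell state is halved. The reported model uses 128 hidden units with learned weights.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def affine(W, b, v):
    """W v + b with W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step following Eqs. (8)-(13)."""
    z = h_prev + x_t                                                   # [h_{t-1}, x_t]
    f = [sigmoid(v) for v in affine(*params["f"], z)]                  # Eq. (8) forget gate
    i = [sigmoid(v) for v in affine(*params["i"], z)]                  # Eq. (9) input gate
    c_tilde = [math.tanh(v) for v in affine(*params["c"], z)]          # Eq. (10) candidate
    c = [ft * cp + it * ct for ft, cp, it, ct in zip(f, c_prev, i, c_tilde)]  # Eq. (11)
    o = [sigmoid(v) for v in affine(*params["o"], z)]                  # Eq. (12) output gate
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]                   # Eq. (13)
    return h, c

def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]

H, D = 2, 3  # toy hidden and input sizes (assumption)
params = {gate: (zeros(H, H + D), [0.0] * H) for gate in "fico"}
h, c = lstm_step([0.2, 0.1, 0.7], [0.0, 0.0], [1.0, 1.0], params)
```

Running `lstm_step` over a 30-step window, and feeding each `h, c` into the next call, produces the final hidden state that the local IDS classifier would consume.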

The seamless interaction between the MCIDS-G and the SDMDC ensures that threats are detected locally and handled systematically across the entire multi-cloud infrastructure, which leads to coordinated responses and real-time policy enforcement.

Results

Two benchmark datasets were used to evaluate the efficiency of the proposed SDMDC framework: the extended CSE-CIC-IDS2018 dataset (Kaggle, 2024) and the BoT-IoT dataset (Koroniotis et al., 2019). Table 1 shows how the 27,000 generated samples used to train the CCTT model are distributed across seven classes.

| Category | Attack type | No. of samples |
|---|---|---|
| Benign | Normal | 5,000 |
| Botnet | Bot | 5,000 |
| Web attack | BF-Web | 1,000 |
| | BF-XSS | |
| | SQL Injection | |
| DDOS attack | DDOS attack-HOIC | 5,000 |
| | DDOS attack-LOIC-UDP | |
| | DDoS attacks-LOIC-HTTP | |
| DOS attack | DoS attacks-GoldenEye | 5,000 |
| | DoS attacks-Hulk | |
| | DoS attacks-SlowHTTPTest | |
| | DoS attacks-Slowloris | |
| Brute force | FTP-BF | 5,000 |
| | SSH-BF | |
| Infiltration | Infiltration | 1,000 |
| Total no. of samples | | 27,000 |

Note:

The CICIDS dataset comprises 27,000 samples categorized into seven distinct classes.

Likewise, the BoT-IoT dataset consists of 2,056 samples distributed across five unique classes, as outlined in Table 2.

| Class (BoT-IoT multiclass dataset) | No. of instances |
|---|---|
| DDoS | 500 |
| DoS | 500 |
| Recon | 500 |
| Theft | 79 |
| Normal | 477 |
| Total instances | 2,056 |

Note:

The BoT-IoT dataset consists of 2,056 samples distributed across five unique classes.

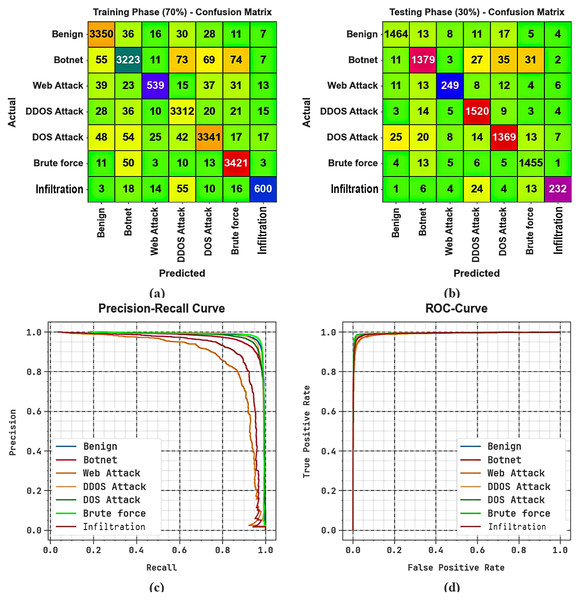

Figures 4A and 4B show the confusion matrices produced by the SDMDC model on a 70:30 training/testing split (TRAS/TESS). Figures 4C and 4D show the corresponding precision-recall (PR) and receiver operating characteristic (ROC) curves, which confirm its strong multi-class classification performance.

Figure 4: 70:30 TRAS/TESS split: (A, B) confusion matrices and (C, D) PR and ROC curves.

To address class imbalance across multiple attack categories, stratified sampling was used during dataset splitting, ensuring proportional representation of minority classes in both training and test sets. Additionally, the model was trained using a categorical cross-entropy loss function, which supports multi-class classification and reduces the bias toward dominant classes.
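Stratified 70:30 splitting can be sketched by shuffling each class separately and cutting it at 70%. The class sizes come from Table 1. The per-class rounding, the fixed seed, and the function name are assumptions, and the paper does not state which library performed the split.

```python
import random
from collections import defaultdict

def stratified_split(labels, train_frac=0.7, seed=42):
    """Return (train_idx, test_idx) so that each class keeps about train_frac of its samples in training."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train, test = [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)                     # shuffle within the class only
        cut = round(len(idxs) * train_frac)
        train.extend(idxs[:cut])
        test.extend(idxs[cut:])
    return sorted(train), sorted(test)

# Class sizes from Table 1: five classes of 5,000 samples and two of 1,000.
counts = {"Benign": 5000, "Botnet": 5000, "Web attack": 1000, "DDOS attack": 5000,
          "DOS attack": 5000, "Brute force": 5000, "Infiltration": 1000}
labels = [name for name, n in counts.items() for _ in range(n)]
train_idx, test_idx = stratified_split(labels)
```

With this split the minority classes keep their share in both sets. For example, the 1,000 Infiltration samples split into 700 training and 300 test samples.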

Table 3 and Fig. 5 present the overall intrusion detection results of the SDMDC method. The results show that the SDMDC model recognizes samples from all classes effectively. On the 70% TRAS, the SDMDC technique achieves an average accuracy of 98.32%, precision of 92.89%, recall of 91.02%, F-score of 91.89%, and MCC of 90.92%. On the 30% TESS, it achieves an average accuracy of 98.48%, precision of 93.44%, recall of 91.72%, F-score of 92.53%, and MCC of 91.65%.

| Class labels | Accuracy (%) | Precision (%) | Recall (%) | F-score (%) | MCC (%) |
|---|---|---|---|---|---|
| TRAS (70%) | | | | | |
| Benign | 98.35 | 94.79 | 96.32 | 95.55 | 94.54 |
| Botnet | 97.32 | 93.69 | 91.77 | 92.72 | 91.09 |
| Web attack | 98.75 | 87.22 | 77.33 | 81.98 | 81.49 |
| DDOS attack | 98.12 | 93.64 | 96.22 | 94.91 | 93.77 |
| DOS attack | 97.99 | 94.97 | 94.27 | 94.62 | 93.38 |
| Brute force | 98.62 | 95.27 | 97.44 | 96.34 | 95.50 |
| Infiltration | 99.06 | 90.63 | 83.80 | 87.08 | 86.67 |
| Average | 98.32 | 92.89 | 91.02 | 91.89 | 90.92 |
| TESS (30%) | | | | | |
| Benign | 98.60 | 96.38 | 96.19 | 96.28 | 95.43 |
| Botnet | 97.68 | 94.58 | 92.67 | 93.62 | 92.21 |
| Web attack | 98.93 | 88.30 | 82.18 | 85.13 | 84.63 |
| DDOS attack | 98.42 | 94.41 | 97.56 | 95.96 | 95.00 |
| DOS attack | 97.91 | 94.35 | 94.02 | 94.19 | 92.92 |
| Brute force | 98.73 | 95.47 | 97.72 | 96.58 | 95.81 |
| Infiltration | 99.06 | 90.62 | 81.69 | 85.93 | 85.57 |
| Average | 98.48 | 93.44 | 91.72 | 92.53 | 91.65 |

Note:

The overall intrusion detection outcomes of the SDMDC method.
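Each Average row in Table 3 equals the arithmetic mean of the per-class rows. For example, the seven accuracy values on the 70% split average to 98.32%. The sketch below assumes the standard one-vs-rest definitions for computing per-class accuracy, precision, recall, F-score, and MCC from a multi-class confusion matrix. The 3-class matrix is a hypothetical example and not data from the paper.

```python
import math

def per_class_metrics(cm, k):
    """One-vs-rest accuracy, precision, recall, F-score, and MCC for class k.
    cm[i][j] counts samples of true class i predicted as class j."""
    total = sum(map(sum, cm))
    tp = cm[k][k]
    fn = sum(cm[k]) - tp
    fp = sum(row[k] for row in cm) - tp
    tn = total - tp - fn - fp
    acc = (tp + tn) / total
    prec = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return acc, prec, rec, f1, mcc

def macro_average(cm):
    """Average each metric over classes, like the Average rows of Table 3."""
    rows = [per_class_metrics(cm, k) for k in range(len(cm))]
    return [sum(col) / len(rows) for col in zip(*rows)]

cm = [[8, 2, 0], [1, 7, 2], [0, 0, 10]]  # hypothetical 3-class confusion matrix
acc, prec, rec, f1, mcc = per_class_metrics(cm, 0)
averages = macro_average(cm)
```

Per-class accuracy is computed one-vs-rest, so it includes the large true-negative count. This explains why the accuracy values in Table 3 are consistently higher than recall for minority classes such as Web attack.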

Figure 5: Average results of the SDMDC technique.

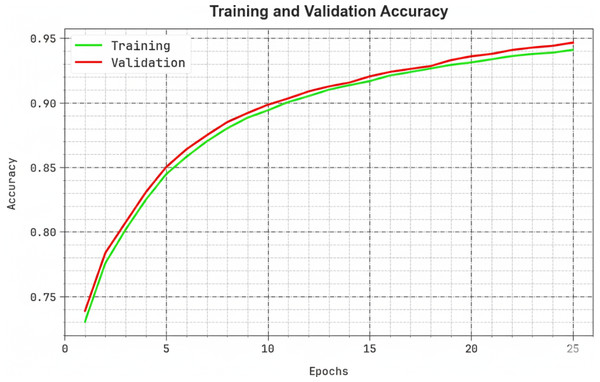

Figure 6 shows the training and validation accuracy of the SDMDC method over 25 epochs. Both training and validation accuracy improve steadily, which shows that the SDMDC technique's performance improves over successive epochs. The training and validation accuracy values also remain close to each other throughout training. This indicates limited overfitting and good generalization, which supports accurate predictions on unseen data.

Figure 6: Accuracy curve of the SDMDC technique.

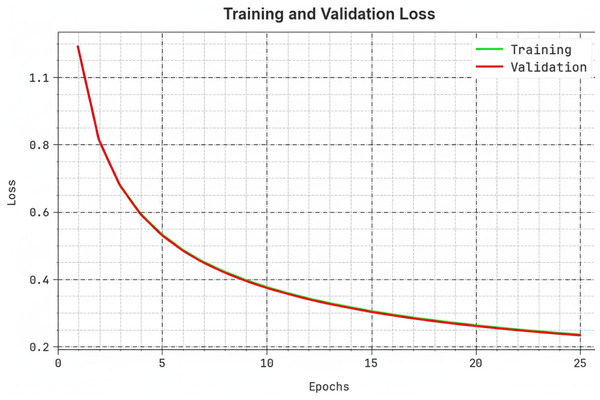

Figure 7 illustrates the training and validation loss curve of the SDMDC method, where loss values were calculated between 0 and 25 epochs. The chart indicates a declining trend in training and validation loss, highlighting the SDMDC system’s effectiveness in optimizing data fitting and generalization. The continuous reduction in loss values also supports the improved performance of the SDMDC model and its ability to enhance predictive accuracy with time.

Figure 7: Loss curve of SDMDC technique.

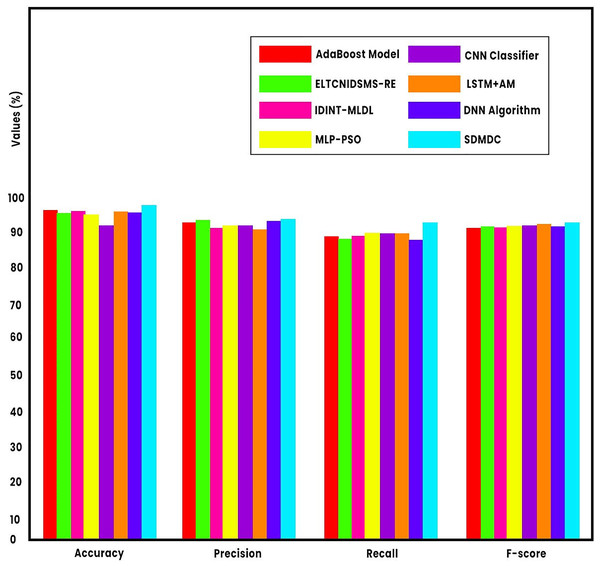

Table 4 and Fig. 8 compare the SDMDC approach with state-of-the-art models (Okey et al., 2022; Lin et al., 2021; Alzughaibi & Khediri, 2023; Wang et al., 2023). The baseline models were chosen because they have previously been evaluated on the same dataset (CSE-CIC-IDS2018), which allows direct benchmarking. The CNN-based approach performed worst, with the lowest classification accuracy. The Enhanced Lightweight Temporal Convolutional Network-Based Intrusion Detection and Security Management System with Reinforcement Engine (ELTCNIDSMS-RE), Intrusion Detection and Intelligent Network Traffic using Machine Learning and Deep Learning (IDINT-MLDL), Multilayer Perceptron optimized with Particle Swarm Optimization (MLP-PSO), and Long Short-Term Memory with Attention Mechanism (LSTM+AM) models showed moderately improved and comparable performance. The AdaBoost and Deep Neural Network (DNN) models outperformed these baselines. The SDMDC technique achieves the best performance of all, with an accuracy of 98.48%, precision of 93.44%, recall of 91.72%, and F-score of 92.53%. These results indicate that the SDMDC approach can improve security in multi-cloud environments.

| Model | Accuracy (%) | Precision (%) | Recall (%) | F-score (%) |
|---|---|---|---|---|
| AdaBoost model | 97.80 | 92.52 | 88.41 | 90.33 |
| ELTCNIDSMS-RE | 96.53 | 92.70 | 87.50 | 91.23 |
| IDINT-MLDL | 96.99 | 90.49 | 88.54 | 90.31 |
| MLP-PSO | 96.25 | 91.24 | 89.62 | 91.15 |
| CNN classifier | 91.50 | 91.74 | 89.09 | 91.31 |
| LSTM+AM | 96.19 | 90.71 | 87.23 | 91.28 |
| DNN algorithm | 97.28 | 92.68 | 88.52 | 91.93 |
| SDMDC | 98.48 | 93.44 | 91.72 | 92.53 |

Figure 8: Comparative analysis of the SDMDC method with recent models.

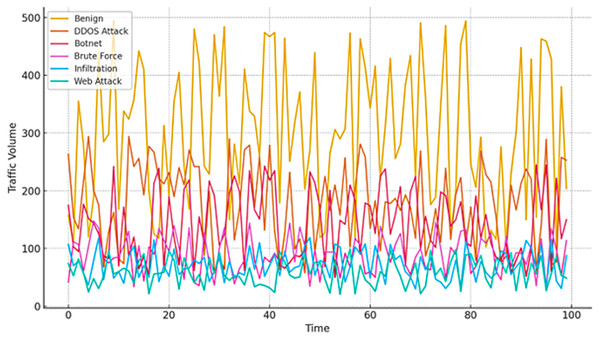

Figure 9 illustrates real-time traffic behavior under diverse cyber-attack scenarios in an SDN-enabled multi-cloud environment, using the Cross-Cloud Threat Transformer (CCTT) model for in-depth traffic flow assessment. The graph shows fluctuations in traffic volume, legitimate requests, and multiple attack vectors, including DDoS, Botnet, brute force (BF), Infiltration, and Web attacks. DDoS attacks generate significantly higher traffic volumes, which highlights their disruptive impact compared to other threats. These fluctuations emphasize the need for real-time, adaptive security mechanisms within the proposed framework. The dynamic security policy management enabled by the Software-Defined Multicloud Defense Controller (SDMDC) ensures uniform, centralized detection and mitigation of these evolving threats.

Figure 9: Traffic simulation of CCTT model with SDN flows.

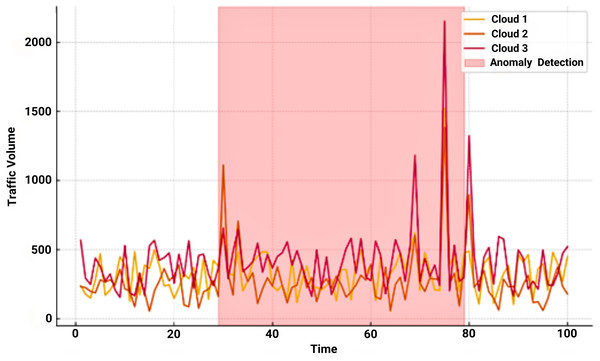

In the proposed SDN-enabled multi-cloud security framework, real-time traffic analysis and anomaly detection are key to maintaining a consistent security posture across heterogeneous multi-cloud environments. Figure 10 illustrates traffic variations and anomalies identified on three cloud platforms (Cloud 1, Cloud 2, and Cloud 3).

Figure 10: Traffic spikes and anomalies across multiple clouds.

The red-highlighted areas of the graph represent anomalous traffic patterns accurately recognized by the CCTT model. These patterns occurred around time intervals 50 and 80. The result demonstrates the framework's ability to detect potential threats, such as DDoS attacks, in real time. The SDMDC ensures that security rules are implemented consistently through centralized management. The distributed MCIDS-G provide adaptive per-cloud responses and mitigate threats locally. Because this architecture examines traffic patterns across several clouds efficiently, it enables quick and precise reactions to anomalies. CCTT and the other deep learning models improve threat detection accuracy, and the incorporation of SDN provides flexibility and scalability. Together, these components form a robust defensive approach for the whole multi-cloud environment and strengthen defenses against external intrusions, insider threats, and lateral movement inside the cloud.

Limitations

Although the SDMDC framework is effective for multi-cloud security orchestration, it has a few limitations. The multi-head attention architecture of the CCTT imposes a notable computational overhead, especially under high traffic volumes. The LSTM-based MCIDS-G modules may experience latency during prolonged attack bursts, which can affect real-time responsiveness. Despite high detection precision (Fig. 4C), some false positives still occurred, particularly where east-west traffic paths cross and services overlap. Moreover, at high flow rates (>1,000 flows/s), intermittent policy synchronization failures occurred. Future work will address these challenges through model pruning, distributed inference, and temporal-attention variants.

Conclusion

This study proposed a novel SDN-based multi-cloud security architecture that adaptively manages security policies and detects attacks in real time using deep learning models. The SDMDC and MCIDS-G separate the control plane and the data plane. As a result, the architecture can handle security threats across different cloud environments more consistently, flexibly, and scalably. Deep learning models such as CCTT and LSTM strengthen threat detection across the entire multi-cloud environment. The Lemurs Optimizer enables effective policy management and resource utilization, so security can be implemented efficiently without compromising performance. Experimental results demonstrate that the proposed architecture outperforms the state-of-the-art methods it was compared against and enables real-time threat mitigation and dynamic policy enforcement. These capabilities could benefit complex cloud architectures. This study focuses on intrusion detection in multi-cloud systems. Future work should extend its scope to other security features, such as encryption, firewall configuration, secure traffic management, and zero-day attack detection. Extending the framework's usefulness in dynamic cloud settings will also require improving the model's robustness against novel attacks and incorporating more diverse security measures.