Fuzzy logic trust management framework for Cyber Physical-Social Systems (FTM-CPSS)

- Academic Editor: Chan Hwang See

- Subject Areas: Human-Computer Interaction, Emerging Technologies, Security and Privacy, Social Computing

- Keywords: Cyber physical social systems, Metaverse, Trust management, Fuzzy inference system

- Copyright: © 2025 Abed et al.

- Licence: This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article: 2025. Fuzzy logic trust management framework for Cyber Physical-Social Systems (FTM-CPSS). PeerJ Computer Science 11:e3164 https://doi.org/10.7717/peerj-cs.3164

Abstract

Cyber-Physical-Social Systems (CPSS) are a direct response to the growing need for a deeper understanding of the interaction between Cyber-Physical Systems (CPS) and human beings. The shift from CPS to CPSS is primarily driven by the widespread use of sensor-equipped smart devices that are closely connected to their users. CPSS has attracted significant interest for over a decade, and its importance has increased steadily in recent years. Incorporating human elements into CPS research has introduced new complexities, particularly in understanding human dynamics, which still require further exploration. CPSSs form an integral part of the Metaverse, and the Metaverse itself can be viewed as a CPSS. The Metaverse encompasses computer systems equipped with artificial intelligence, computer vision, image processing, mixed reality, augmented reality, and extended reality, as well as physical systems consisting of controlled objects and human interaction. The establishment of the Metaverse raises concerns about trust, and ensuring trust in the data within the Metaverse is crucial. In this article, we present a trust management framework for CPSS using fuzzy logic. Because fuzzy logic can simulate human reasoning, it is highly effective for systems designed to make decisions as a human would, and it is well suited to assessing trust in complex situations, leading to efficient and effective decision-making. The proposed framework combines five tiers to assess trust in the Metaverse as an example of a CPSS.
Tier I evaluates the compliance of the virtual service provider (VSP) with the agreed service level agreement (SLA); Tier II calculates the processing performance of the VSP based on the number of tasks it completes successfully; Tier III measures the social reputation of the VSP; Tier IV checks the security issues of the VSP; Tier V monitors the violations committed by the VSP within specific computational periods during its operation in the Metaverse. By integrating the five tiers with the developed overall trust fuzzy inference system (FIS), Part II of the framework obtains the total trust value. Through an informal security analysis, we show that the proposed protocol (FTM-CPSS) is resistant to a number of security attacks, including self-promotion attacks (SPA), bad-mouthing attacks (BMA), opportunistic service attacks (OSA), on-off attacks (OOA), whitewashing attacks (WA), discriminatory attacks (DA), and ballot stuffing attacks (BSA).

Introduction

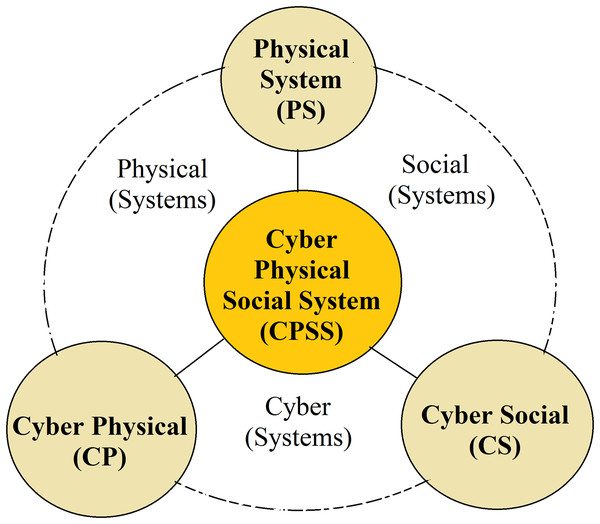

Cyber-Physical-Social Systems (CPSSs) introduce a new paradigm that merges cyber-physical and cyber-social systems. A CPSS consists of three systems: cyber, physical, and social. The cyber system comprises technical components such as computers and networks, the physical system comprises controlled entities, and the social system comprises human interaction, as shown in Fig. 1. These elements are highly distributed and exhibit a dynamic, stochastic, hybrid nature. CPSS can simplify and expedite tasks, leading to a higher level of control and accessibility. The framework integrates computation, communication, sensing, actuation, and social systems.

Figure 1: The dimensions of cyber physical social systems.

Their application can be observed in different contexts, such as smart homes, which are built on the integration of smart devices and Smart Home Technologies (SHTs). These SHTs consist of a network of sensors, actuators, monitors, interfaces, appliances, and devices. Through this interconnected system, smart homes offer personalized information and services, accommodating the diverse preferences of their users (Rahman, 2018). The emergence of new information communication technology in urban management infrastructure has led to the development of a prototype known as a smart city, aimed at enhancing the quality of life, providing better services, and promoting a clean and safe global environment (Angelidou, 2015). Smart healthcare is a distinct sector within smart cities, characterized by its unique approach and business model. It encompasses various aspects such as aiding in diagnosis and treatment, facilitating drug research, managing health, preventing diseases, monitoring risks, utilizing virtual assistants, and incorporating smart hospitals (Tian et al., 2019). The evolution of information technology has brought the concept of smart healthcare to the forefront. This innovative framework employs a new generation of information technologies, including the Internet of Things (IoT), big data, cloud computing, and artificial intelligence, to fundamentally alter the traditional healthcare system, enhancing its efficiency, convenience, and personalization. Advanced healthcare technologies can improve self-management of health. Individuals can access timely and relevant medical services as required, with these services being more customized to their specific needs. For healthcare facilities, advanced healthcare technologies can help minimize costs, ease the burden on staff, facilitate integrated management of resources and data, and enhance the patient experience. 
In research settings, these technologies can lead to reduced research costs, shorter timelines, and increased efficiency in research activities. On a macro level, advanced healthcare technologies can help rectify inequalities in medical resource allocation, drive healthcare reform, support preventive health strategies, and lower overall societal healthcare costs.

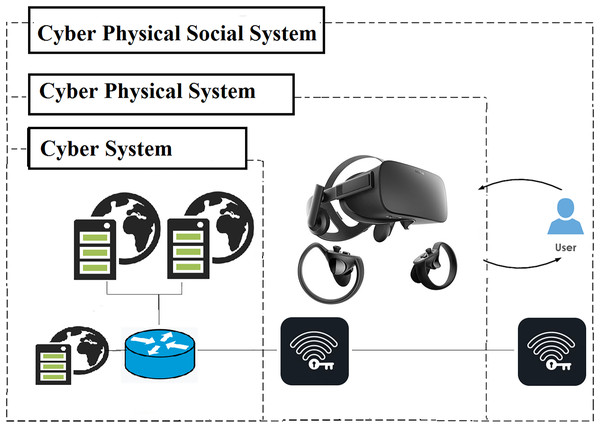

The social manufacturing environment aims to facilitate customer-producer interaction by providing mediators (Jiang, Ding & Leng, 2016). Intelligent transportation is a hybrid system designed to improve driver behavior prediction, offering potential benefits for autonomous driving (Dressler, 2018). Smart tourism, based on the web, takes inspiration from the idea of a “Smart City”. It aims to deliver engaging and current information, including the location, directions, visuals, ratings, and descriptions of tourist destinations, around the clock and across various locations (Nisiotis, Alboul & Beer, 2020). Smart energy systems aim to find the most effective and economical solution through the use of existing infrastructure, low-temperature district sources, and renewable energy heat sources (Camtepe & Yener, 2007). A proposal for air traffic management within a CPSS has been developed that takes into account engineering and social elements, including the operational environment, social context, personnel, regulations, equipment, and information processing (Wang et al., 2024a). Transformer-based cyber-physical-social systems have been used for the intelligent analysis of user-generated content related to urban natural gas (Wang et al., 2024b). An automated delivery model operating in parallel with the CPSS has been proposed for the final leg of delivering super-chilling products, highlighting the societal benefits of logistics drone operations (Liu, Tsang & Lee, 2024). The Metaverse is a prime example of a CPSS, as shown in Fig. 2. Major tech companies around the world, such as Facebook (now rebranded as “Meta”), Microsoft, Google, and Amazon, are showing a growing interest in the Metaverse. In the Metaverse, individuals are represented as avatars and can immerse themselves in a three-dimensional environment that faithfully replicates the real world.
This enables users and software agents to actively participate in interactive exchanges with each other. The integration of advanced technologies in the Metaverse aims to improve service delivery and user-platform server interaction. These technologies include digital twin for replicating real-world images, virtual reality (VR) for virtual communication, augmented reality (AR) for immersive 3D experiences, and 5G for connecting devices to high-speed, low-latency networks (Kürtünlüoğlu, Akdik & Karaarslan, 2022). The Metaverse can be described as a three-dimensional virtual environment that overcomes the limitations of online services by providing immersive experiences through avatars (Ryu et al., 2022).

Figure 2: Metaverse as a cyber-physical-social system.

Some figure components are AI generated using Midjourney and Aitubo.

Users in the Metaverse environment use devices such as HTC VIVE and Oculus Quest, which rely on AR, VR, and XR (extended reality), to connect to platform servers such as Roblox, Minecraft, and Fortnite, which provide a virtual reality experience through avatars (Park & Kim, 2022). Metaverse platforms provide various services through virtual worlds and avatars. One example is educational programs: applying the Metaverse to audiovisual education is a key tool for expanding accessibility, which underscores its importance in experiential learning (Sung et al., 2021). Games serve as the primary medium for promoting the widespread adoption of the Metaverse. Apart from catering to people’s interests, games also offer a means to simplify complex tasks. Given the extensive use of payment and personal data within the Metaverse, a blockchain-based game has been suggested as a solution (Ryskeldiev et al., 2018). The rise of the virtual domain, together with an exceptionally intuitive platform that ensures a thrilling customer experience, is becoming prominent and is anticipated to result in a significant shift in the execution of marketing programs (Wasiq et al., 2024). A study was performed to assess the impact of corporate social responsibility on ethical and policy-related aspects (Papagiannidis, Bourlakis & Li, 2008). A comprehensive analysis was carried out on the operational framework of a corporation within Virtual Worlds and Second Life (Cagnina & Poian, 2007).

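Individual trust metrics can be combined into a single trust score using several aggregation techniques, which are described in the list that follows. Table 1 presents an overview of each of these aggregation techniques, including their respective strengths and weaknesses.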

- Weighted sum: This technique of aggregation is described as the weighted average mean for each value, where static or dynamic weights are assigned to each metric in order to obtain a single score (Wang et al., 2024c).

- Belief theory: This theory is frequently known as Dempster-Shafer theory or evidence theory. It synthesizes various forms of evidence to establish a degree of belief that lies within the range of 0 to 1, with 0 representing no support and 1 representing complete support for the evidence (Zhao, Chen & Wang, 2024).

- Bayesian system: Bayesian inference is a widely utilized method for trust computational modeling, wherein trust is regarded as a random variable that spans the interval [0, 1] and adheres to a beta distribution to represent the probability distribution of a data point, node, or interaction. This approach is fundamentally grounded in Bayes’ theorem (Zheng et al., 2023).

- Machine learning: Techniques for aggregation driven by machine learning typically involve a two-step process for making predictions. The first step is unsupervised learning, specifically clustering, which is utilized when the training data lacks labels. The second step involves multi-class supervised learning, or classification, which is employed to categorize the interactions or nodes into distinct classes, such as trustworthy and untrustworthy (Sousa et al., 2024).

- Regression analysis: This statistical method employs the slope of lines to consolidate various independent variables. There are two primary types of regression: (i) Linear regression, which predicts a single dependent variable using data from one independent variable, and (ii) multiple regression, which forecasts the outcome of a dependent variable based on data from multiple independent variables (Jayasinghe, Lee & Lee, 2017).

- Fuzzy logic: Fuzzy logic focuses on reasoning that is approximate rather than strictly accurate. It is characterized as a many-valued logic, which offers enhanced strength and flexibility over traditional binary logic. This approach embraces the notion of partial truth, allowing truth values to exist on a spectrum from fully true to entirely false. Moreover, these degrees can be regulated by particular membership functions, especially in the presence of linguistic factors. Reputation and trust are frequently represented as fuzzy measures, with membership functions illustrating the different levels of these qualities (Mansour et al., 2021).
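The first three aggregation techniques described above can be illustrated with a minimal Python sketch. It is not taken from the cited works: the function names, the example weights, the use of the mean of a Beta(successes + 1, failures + 1) distribution as the Bayesian trust estimate, and the two-hypothesis frame {trust, distrust} for Dempster's rule are all assumptions chosen for illustration.

```python
from itertools import product


def weighted_sum(scores, weights):
    """Aggregate metric scores in [0, 1] into one score using normalized weights."""
    total = sum(weights)
    return sum(s * w for s, w in zip(scores, weights)) / total


def beta_trust(successes, failures):
    """Mean of a Beta(successes + 1, failures + 1) distribution over trust."""
    return (successes + 1) / (successes + failures + 2)


def dempster_combine(m1, m2):
    """Combine two mass functions (dict: frozenset -> mass) with Dempster's rule."""
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        common = a & b
        if common:
            combined[common] = combined.get(common, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b  # mass given to contradictory evidence
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    # Renormalize so that the remaining masses sum to 1.
    return {k: v / (1.0 - conflict) for k, v in combined.items()}


# Hypothetical frame of discernment with two hypotheses.
T, D = frozenset({"trust"}), frozenset({"distrust"})
EITHER = T | D  # uncommitted mass (uncertainty)

ws = weighted_sum([0.9, 0.6, 0.8], [0.5, 0.3, 0.2])
bt = beta_trust(successes=8, failures=2)
ds = dempster_combine({T: 0.6, EITHER: 0.4}, {T: 0.5, D: 0.2, EITHER: 0.3})
```

In this sketch the weighted sum needs only multiplications and additions, the Bayesian estimate moves toward 1 as positive interactions accumulate, and Dempster's rule discards the conflicting mass before renormalizing, which is where conflicting or malicious evidence can distort the result.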

| Techniques | Positive points | Negative points |
|---|---|---|
| Weighted sum (Wang et al., 2024c) | The computational cost is kept low as there is no need for any mathematical functions. | An infinite range of possibilities is present for defining the weights of each value in different settings. |
| Belief theory (Zhao, Chen & Wang, 2024) | This method enables the integration of information from various separate sources. | The existence of harmful items can cause doubt and conflict in belief systems, potentially undermining the credibility of trustworthy items and resulting in unreliable decision-making. |
| Bayesian inference (Zheng et al., 2023) | The conclusions drawn from this method rely on the data and are precise, without relying on asymptotic estimation. | Models containing a large number of parameters or metrics frequently result in increased computational expenses. |
| Machine learning (Sousa et al., 2024) | This method is more appropriate when the quantity of trust metrics required to calculate the overall trust score is greater than that of other aggregation techniques. | Utilizing a machine learning-driven algorithm can be costly in terms of computational resources, resulting in increased latency as the system must retrain the trust model following each transaction. |
| Regression analysis (Jayasinghe, Lee & Lee, 2017) | The function of multi-regression analysis is to evaluate the influence of each trust metric in conjunction with multiple metrics, which enhances the efficiency of outlier detection. | Regression can result in unpredictable outcomes if the dataset utilized for analysis is not significant. |
| Fuzzy logic (Mansour et al., 2021) | Fuzzy logic is akin to human reasoning, effectively functioning even when faced with ambiguous or unclear information. Fuzzy logic’s nature makes it suitable for evaluating trust in complex situations, allowing for efficient and effective decision-making. | Additional testing and validation are necessary for the fuzzy logic system, as a single problem can have many potential solutions due to the absence of a systematic approach and the system’s heavy dependence on human knowledge and expertise. |
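To make the notion of partial truth in the fuzzy logic entry more concrete, the following sketch fuzzifies a crisp trust score into three linguistic levels using triangular membership functions. The labels (low, medium, high) and their breakpoints are hypothetical values chosen for illustration, not the membership functions used in the article.

```python
def triangular(x, a, b, c):
    """Triangular membership with feet at a and c and peak at b (a <= b <= c)."""
    if x <= a or x >= c:
        # Outside the support, except on a shoulder where the peak sits on an edge.
        return 1.0 if x == b else 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical linguistic levels on the trust universe [0, 1].
LEVELS = {
    "low": (0.0, 0.0, 0.5),
    "medium": (0.0, 0.5, 1.0),
    "high": (0.5, 1.0, 1.0),
}


def fuzzify(score):
    """Return the degree to which a crisp score belongs to each level."""
    return {label: triangular(score, *abc) for label, abc in LEVELS.items()}


degrees = fuzzify(0.7)  # partly "medium" and partly "high", not "low" at all
```

A score of 0.7 is therefore neither simply trusted nor untrusted: it belongs to "medium" and "high" at the same time with different degrees. The table's caveat also shows up here, since the breakpoints have to be chosen by an expert.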

CPSS plays a pivotal role in driving the data science revolution by facilitating the integration of information resources from different spaces. However, the establishment of CPSSs also raises concerns regarding trust and security.

It is important to find a way to determine the level of trust in the Metaverse. Trust helps individuals and services navigate uncertainty and mitigate risks when making decisions. A trust service platform can reduce unexpected risks, enhance predictability, and ensure the smooth operation of the Metaverse environment while preventing service failures. After reviewing the available approaches, we found fuzzy logic to be the most appropriate way to measure trust in the Metaverse environment: it is akin to human reasoning, functions effectively even with ambiguous or unclear information, and is well suited to evaluating trust in complex situations, allowing for efficient and effective decision-making. Social reputation is the most important metric to consider alongside fuzzy logic, because reputation serves as a social framework that mirrors how the public views the characteristics of an entity. The main goal of a reputation is to convey details about the reliability of an entity (acting as a trustee) to others (as trustors), thereby encouraging trustors to engage in online transactions even without personal experience. Typically, reputation relies on feedback mechanisms that handle the evaluations participants submit after transactions, encompassing both good and bad feedback. Further metrics must be added to obtain better and more accurate results, namely service level agreement (SLA), processing performance (PP), security issues (SI), and violations (V). In this article, we propose a Fuzzy Logic Trust Management Framework for Cyber-Physical-Social Systems (FTM-CPSS); the framework consists of five tiers that employ fuzzy logic to assess the Metaverse as an example of a CPSS. The proposed protocol consists of two parts.
Part I presents the five tiers: service level agreement (SLA), processing performance (PP), social reputation (SR), security issues (SI), and violations (V). Part II integrates the five tiers and derives an overall trust value and status for the platform server using a MATLAB-based trust fuzzy inference system (FIS). We compared the proposed protocol (FTM-CPSS) with other protocols by analyzing various platform server properties. The structure of this article is as follows. ‘Literature Review and Related Work’ introduces the literature review and related work; the proposed FTM-CPSS is described in ‘Proposed Fuzzy Logic Trust Management Framework for Cyber-Physical-Social Systems (FTM-CPSS)’; ‘Simulation Results, Security Analysis and limitations of the Proposed System’ presents the simulation results and discusses the security analysis and limitations of the proposed system; finally, the conclusion is presented in ‘Conclusion and Future Work’.
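As a rough illustration of the two-part structure, the sketch below takes five tier scores and combines them into one overall trust value with a small fuzzy inference step. It is a Python stand-in under stated assumptions, not the MATLAB-based FIS of the article. The assumptions are that every tier score is already normalized to [0, 1] with higher meaning more trustworthy (so the SI and V scores are taken as already inverted), that each input uses three triangular levels, that the rule table is generated by a hypothetical point-counting heuristic, and that a zero-order Sugeno-style weighted average is used in place of whatever defuzzification the authors apply.

```python
from itertools import product

TIERS = ("sla", "pp", "sr", "si", "v")
LABELS = ("low", "medium", "high")
PEAKS = {"low": 0.0, "medium": 0.5, "high": 1.0}  # assumed level centers


def membership(x, label):
    """Triangular membership of half-width 0.5 centered on the label's peak."""
    x = min(1.0, max(0.0, x))  # clamp so at least one level always fires
    return max(0.0, 1.0 - abs(x - PEAKS[label]) / 0.5)


def consequent(combo):
    """Hypothetical rule table: map five tier labels to an overall trust label."""
    points = sum(LABELS.index(label) for label in combo)  # 0 .. 10
    if points <= 3:
        return "low"
    return "medium" if points <= 6 else "high"


def overall_trust(scores):
    """Zero-order Sugeno-style inference over all 3**5 label combinations."""
    degrees = {t: {l: membership(scores[t], l) for l in LABELS} for t in TIERS}
    numerator = denominator = 0.0
    for combo in product(LABELS, repeat=len(TIERS)):
        # Firing strength of one rule: fuzzy AND (minimum) of its antecedents.
        strength = min(degrees[t][l] for t, l in zip(TIERS, combo))
        numerator += strength * PEAKS[consequent(combo)]
        denominator += strength
    return numerator / denominator


good = overall_trust(dict.fromkeys(TIERS, 0.9))
bad = overall_trust(dict.fromkeys(TIERS, 0.2))
```

Generating the rule table programmatically keeps the sketch short. A real rule base would normally be written and tuned by domain experts.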

Literature review and related work

Wang et al. (2023) proposed an integrated inspection method for CPSSs that incorporates artificial systems, computational experiments, and parallel execution to facilitate smart manufacturing. Mustafa (2023) investigated how to identify and classify the sources of personal data creation in CPSSs; the findings are crucial for future studies dedicated to enhancing personal data security in CPSS. Wang & Cheng (2021) introduced a framework intended to improve the functionality of CPSSs by facilitating automatic knowledge acquisition, continuous updating and iteration, and reasoning and decision-making processes that use extensive, diverse data from multiple sources. This advancement aims to establish knowledge-driven semantic cognition and an autonomous decision-making system for industrial processes. Qian, Tang & Yu (2024) outline the current state of the process industry using a CPSS framework. The article examines the latest advancements within the sector, alongside the primary challenges and opportunities that arise. It articulates a forward-looking vision for the process industry, grounded in CPSS principles, that emphasizes three key areas: environmental sustainability and low carbon initiatives, high-value and premium production, and the digitalization and intellectualization of process manufacturing. The discussion concludes with an overview of advanced technologies and methodologies in CPSS, particularly those propelled by artificial intelligence and industrial digitalization, which are crucial for fostering sustainable development in the process industry. Furthermore, the evolution of comprehensive digital technologies, including virtual reality, digital twins, blockchain, and big data, will catalyze the realization of an innovative concept within the CPSS framework known as the industrial metaverse.
Valinejad & Mili (2023) outline a strategy for optimizing community resilience subject to power flow constraints in cyber-physical systems associated with power engineering, which is tackled using a multi-agent-based algorithm. Abed, Abdelkader & Hashem (2023) outline the key characteristics of CPSS, examine their vulnerabilities, and identify associated challenges. They also highlight the primary attacks directed at CPSS and the suggested security measures. To illustrate the concept of CPSS, they present a case study on its application in smart agriculture.

Li & Gui (2010) introduced a dynamic trust model to precisely measure and forecast users’ cognitive behavior. For effective service matching in the cloud, Li et al. (2015) presented the T-broker trust-aware service brokering scheme. To assess user behavior, Tan & Wang (2015) suggested a combination-weighted technique based on relative entropy. Evolutionary algorithms paired with trust mechanisms have also been proposed (Sanadhya & Singh, 2015). Wang, Zhang & Li (2010) proposed a trust evaluation approach based on the cloud model. Xie, Liu & Zhao (2012) created a trust model based on twice excitation and deceit detection. For multi-agent societies, Ahmadi & Allan (2016) presented a trust-based decision-making paradigm. For cloud-based applications, AbdAllah et al. (2017) proposed mechanisms for trust evaluation methodologies. Wang et al. (2015) introduced a trust-based probabilistic recommendation model for social networks. Deng et al. (2017) proposed a two-phase recommendation process that combines the interests of users and their reliable friends to initialize deep learning and synthesize user interests, which significantly increased recommendation accuracy and effectiveness. To ensure the credibility of entities, Saleh, Hamed & Hashem (2014) proposed a hybrid model to build, evaluate, and expose trust. To assess the competence of cloud services based on various trust attributes, Li & Du (2013) introduced an adaptive trust management model. Das & Islam (2012) proposed a dynamic trust model based on feedback, together with a new load-balancing algorithm, to deal with hostile actors’ strategically changing behavior and to distribute the load as evenly as feasible among service providers.

To evaluate the dependability of the provider, Wang et al. (2011) first offered a platform for service level agreements and then suggested a reputation system based on that platform. An SLA template pool was also suggested to facilitate SLA negotiations between cloud providers and customers. However, the work provided no mathematical formulas or simulation tests. Manuel (2015) built a novel trust model and determined the values of four linked factors using the prior credentials and current capabilities of a cloud resource provider. To create a trust model based on fuzzy mathematics in the cloud computing environment, Mohsenzadeh & Motameni (2015) took into account successful and failed interactions between cloud entities. Noor et al. (2016) discussed various trust management philosophies and methods. Tan et al. (2010) presented a distributed trust model in which a multi-dimensional communication history vector and its distributed storage structure are used to calculate the trust value. In the dynamic and iterative trust model of Tan, Wang & Wang (2016), the most recent evidence is added to the iterative calculations. Wang & Vassileva (2003) incorporated Bayesian notions and suggested a Bayesian network-based trust model, but did not provide the calculation methodology. After reviewing previous collusion attacks, Saini, Sihag & Yadav (2014) suggested a preventative defense mechanism against them and offered a reduction mechanism to punish colluding peers. Binu & Gangadhar (2014) proposed an SLA framework with negotiation, a secure monitoring system, and a third party. Li et al. (2014) built a framework for trust evaluation systems and also proposed a trust evaluation model tailored to the field of mechanical manufacturing.
According to Chiregi & Navimipour (2016), the trust value is assessed using five characteristics (availability, dependability, data integrity, identity, and capability) while taking into account the influence of opinion leaders on other entities and removing the effect of troll entities in the cloud environment.

Nitti et al. (2014) describe a trustworthiness management paradigm for the Internet of Things (IoT) that makes use of both subjective and objective attributes of an object. The calculation of objective trustworthiness takes into account centrality as well as the long- and short-term perspectives of all networked objects. A static weighted sum aggregation is used to calculate the single trust score. In the REK trust model presented by Truong et al. (2017), the experience and reputation of an object are used as indicators of its reliability. Experience is calculated from three factors: (1) interaction intensity; (2) interaction values (cooperative, uncooperative, or neutral); and (3) the current relationship status. In the mutual context-aware trust evaluation approach presented by Khani et al. (2018), the trustworthiness of an object is assessed using three social trust metrics and two QoS metrics. The three social metrics are similarity in relationships, communities of interest, and friendships, whereas the QoS metrics are the expected and the announced QoS. For context awareness, the status of a device (energy and computing capacity), the environment (location), and the task type are combined in the computation of the trust metrics. Finally, a static weighted sum aggregation approach is used to combine these independent trust indicators. Jayasinghe et al. (2019) offer a data-centric, machine learning-based trust evaluation model that incorporates social trust measures to assess the trustworthiness of nodes and uses machine learning-based trust aggregation to produce a single trust score. Chen, Bao & Guo (2016) introduce an adaptive trust model, suitable for the dynamically changing environment of the Social Internet of Things (SIoT), to isolate misbehaving nodes that commit trust-related attacks. Xia et al. (2019) use two trust metrics, similarity trust and familiarity trust, to define a framework for context-aware trustworthiness inference.
To calculate direct and indirect trust, the familiarity trust uses a kernel-based nonlinear multivariate grey prediction model. Xiao, Sidhu & Christianson (2015) propose an ideal credit and reputation-based trust model for the Internet of Things. Mansour et al. (2021) propose an integrated three-tier trust management framework that evaluates cloud service providers in three main domains. Abed, Abdelkader & Hashem (2024) provide a comprehensive examination of trustworthiness management in the context of CPSS. Shi et al. (2024) propose an innovative B-DTM framework for EC networks that incorporates off-chain trust verification alongside on-chain trust-based consensus mechanisms. Approaches to trust computation are shown in Table 2.

| Ref. | Metrics of trust | Positive points | Constraints |
|---|---|---|---|
| Nitti et al. (2014) | Centrality, Credibility, and Feedback (opinion) | Competent in effectively identifying malicious nodes in the network, even when a substantial portion of nodes are malicious. | The model’s reaction time to evolving environmental conditions is delayed as a result of predetermined trust parameters. |
| Truong et al. (2017) | Reputation and experience | The model under consideration introduces two trust metrics, namely experience (cooperative, uncooperative, and neutral interactions) and reputation (positive-negative). | Performance evaluations related to trust computation and trust-related attacks have not been conducted. |
| Khani et al. (2018) | QoS metric and Friendship, Community of interest, and Feedback (recommendations) | The model effectively defends against various trust-related attacks, such as BMA, BSA, SPA, and OOA, among others. Considers mutual context-awareness. | The adjustment of trust parameters in response to evolving environments has not been adequately addressed. |
| Jayasinghe et al. (2019) | Community of interest, Friendship, similarity, Centrality, and Cooperativeness | A trust evaluation model focused on data is proposed, which employs machine learning techniques for trust aggregation to determine individual trust metrics rather than relying on a weighted sum aggregation approach. | The model encounters challenges related to scalability and reliability as the number of nodes grows. |
| Chen, Bao & Guo (2016) | Honesty, Cooperativeness, Community of interest, and Recommendations | The model is ideal for the variable environmental conditions. | There has been a lack of consideration for defense mechanisms that counteract key attacks, specifically on-off and intelligent behavior attacks. |
| Xia et al. (2019) | Centrality, Recommendation, and Community of interest | The suggested framework demonstrates effectiveness when faced with grey-hole attacks and badmouthing attacks, while also taking into account context-awareness. | The defense mechanisms have not been taken into account for protection against on-off attacks and intelligent behavior attacks. |
| Xiao, Sidhu & Christianson (2015) | Reputation and credit | By utilizing only two metrics, credit and reputation, this model achieves computational optimality in categorizing nodes as trustworthy or untrustworthy. | The scalable nature of SIoT makes scalability a primary concern, particularly in light of the centralized computation system. |
| Mansour et al. (2021) | The agreement on service levels, processing efficiency, and the assessment of violations. | Fuzzy logic’s characteristics make it well-suited for evaluating trust in complex situations, ultimately leading to efficient and effective decision-making. | The defense protocol has not been taken into account for Reputation. |

Wei et al. (2021) propose a context-aware, socio-cognitive-based trust model for service delegation in service-oriented SIoT. Abdelghani et al. (2022) present a scalable multi-level trustworthiness management model designed to detect and mitigate malicious objects capable of conducting a range of attacks. To this end, a variety of trust metrics are employed, including, but not limited to, direct experience, ratings, the credibility of raters, frequency of ratings, and social similarity. Xiao, Sidhu & Christianson (2015) present an ideal credit and reputation-based trust model for SIoT that incorporates two parameters: credit (identified as the guarantor), to ascertain whether the object is capable of communication, and reputation, to assess trustworthiness and identify any misbehaving nodes. Li, Song & Zeng (2018) propose a policy-based security model, referred to as Real Alert, to determine the trust level of a node and the data it holds. The model specifies policies grounded in contextual data to pinpoint compromised nodes and misleading information, and it is assessed against different trust attack methodologies. Comparisons between schemes that rely on the reputation-based trust model are shown in Table 3.

| Ref. | Trust type | Strengths | Weaknesses | Trust decision | Trust attacks |

|---|---|---|---|---|---|

| Wei et al. (2021) | Weighted sum | The aggregation of values is achieved through a method that is both simple and incurs minimal computational expenses. | There is a lack of clarity regarding the influence of each value on the aggregate value. | Threshold-based | OSA, BMA, BSA |

| Abdelghani et al. (2022) | Machine learning | Machine learning models can analyze large datasets and identify complex patterns that traditional methods may miss. This leads to more accurate and dynamic trust assessments | Although Machine learning models can adapt over time, they may not always be quick to adjust to entirely new or unexpected situations. | Threshold-based | SPA, WA, OSA, OOA, BMA, BSA, DA |

| Xiao, Sidhu & Christianson (2015) | Bayesian system | This technique is reliant on the data and is precise, independent of asymptotic estimation. | Computational costs increase when models contain a large number of parameters or metrics. | Threshold-based | SPA, BMA, BSA |

| Li, Song & Zeng (2018) | Belief theory | This method enables the integration of data from various independent sources. | The presence of harmful entities can result in unreliable decision-making. | Context-based | OOA, BMA, BSA |

| Our proposed (FTM-CPSS) | Fuzzy logic | Fuzzy logic is designed to work with imprecise and uncertain data. This is particularly beneficial in trust management, where inputs, such as reputation or behavioral indicators are often vague or subjective. | Fuzzy systems require the definition of membership functions for input variables (e.g., trust levels), and these are often based on expert opinions. This subjectivity can lead to inconsistencies if different experts have varying interpretations of trust. | Threshold-based | SPA, WA, OSA, OOA, BMA, BSA, DA |

Proposed fuzzy logic trust management framework for cyber-physical-social systems

Extensive analysis has revealed that fuzzy logic is the most suitable approach for gauging trust in the Metaverse environment. Fuzzy logic, resembling human reasoning, operates effectively in the presence of ambiguous or unclear data, making it well-suited for evaluating trust in complex situations and enabling efficient decision-making. Social reputation, when integrated with fuzzy logic, emerges as a key metric, as it mirrors public perceptions of an entity’s characteristics. The primary objective of a reputation system is to efficiently communicate information about the trustworthiness of an entity (in the role of a trustee) to others (as trustors), in turn encouraging trustors to participate in online transactions even in the absence of direct experience. Reputation systems commonly use feedback mechanisms to manage participants’ assessments after transactions, encompassing both good and bad feedback. Additionally, metrics such as service level agreement (SLA), processing performance (PP), security issues (SI), and violations (V) must be incorporated to enhance the quality and accuracy of results. We propose the FTM-CPSS protocol, which aims to establish trust in CPSS by employing fuzzy logic within a comprehensive five-tier trust management framework that evaluates trust in the Metaverse as an example of CPSS. The framework comprises five main tiers:

Tier I, SLA functions as a contract signed between a virtual service provider (VSP) and a user, establishing the conditions for service processing execution. The VSP bears full responsibility for creating the SLA and must clearly define all processing terms for the user. These terms encompass aspects such as computational cost, storage, execution time, and QoS (SCib_R, SSib_R, STib_R, SQib_R).

Tier II, in which the processing performance of the VSP is calculated using the average processing success ratio (APSUi), the number of processes that the platform servers accepted but failed to complete (PINi), and the average user termination ratio (AUTEi).

Tier III, in which the social reputation of the VSP is measured in terms of good reputation (GRib) and bad reputation (BRib).

Tier IV, in which the security issues of the VSP are checked.

Tier V in which the violations committed by VSP in Tier I, II, III, and IV are monitored. Examples of violations include the amount of cost dissatisfaction exceeded the limit (War 1_Sri issue), the amount of time dissatisfaction exceeded the limit (War 2_Sri issue), the amount of storage dissatisfaction exceeded the limit (War 3_Sri issue), VSP is below the quality of service threshold (War 4_Sri issue), VSP exceeds the processing incompliance threshold (War 5_Sri issue), VSP exceeds the processing user termination threshold (War 6_Sri issue), VSP is below the social reputation threshold (War 7_Sri issue) and VSP undergoes security privacy problem (War 8_Sri issue).

The notations used in the proposed framework are detailed in Table 4.

| Symbol | Description |

|---|---|

| b | Job |

| BMA | Bad-Mouthing Attacks |

| BRib | Bad Reputation |

| BSA | Ballot Stuffing Attacks |

| DA | Discriminatory Attacks |

| FIS | Fuzzy Inference System |

| GRib | Good Reputation |

| M | A Complaint Message |

| MSM | Metaverse Service Manager |

| OOA | On-Off Attacks |

| OSA | Opportunistic Service Attacks |

| Pi | Processing Performance of the ith VSP |

| PINi | Processing Incompliance |

| PS | Platform Server |

| PSUi | Process Success |

| QoS | Quality of Services |

| SC | Cost of Computation in SLA |

| SLA | Service Level Agreement |

| SPA | Self Promotion Attacks |

| SS | Computational Storage in SLA |

| ST | Execution Time in SLA |

| SUj | Service User j |

| Ti | Total Warnings Value for the ith Platform Server |

| UI | User Interface |

| UTEi | User Termination |

| VMi | Violation Measurement |

| VSP | Virtual Service Provider |

| WA | Whitewashing Attacks |

Fuzzy logic

Fuzzy logic deals with reasoning that is approximate rather than fixed and precise. It is a many-valued logic, which gives it more strength and flexibility than binary logic. Fuzzy logic accommodates the idea of partial truth, where the truth value may range from entirely true to completely false. Furthermore, when linguistic variables are present, these degrees may be governed by specific membership functions. Reputation and trust are best represented as fuzzy measures, with membership functions indicating the levels of reputation and trust. Chen et al. (2011) proposed using a static weighted sum of direct trust, based on direct interaction experiences, and indirect trust, based on recommendations. The overall trust of a node toward another node is then aggregated over all trust types. The experience is positive if the total trust value meets a certain threshold. The direct trust is calculated using a fuzzy membership function that takes into account the number of positive and negative experiences as well as uncertainty.

Advantages of fuzzy logic:

In situations where uncertainty or vagueness is present, fuzzy logic demonstrates a remarkable capability in addressing imprecise data.

Fuzzy logic emulates human reasoning, rendering it particularly suitable for systems that must make decisions in a manner akin to human thought processes.

The significance of interpretability and simplicity is evident in fuzzy logic systems, which are inherently rule-based, making them straightforward to comprehend and analyze.

In situations where expert knowledge is accessible but data is scarce, fuzzy logic systems can be constructed using “if-then” rules derived from that expertise.

In situations where a simplified representation of a complex system is necessary, fuzzy logic offers an intuitive approach that allows for effective modeling without the need for an intricate mathematical framework.

Fuzzy logic is highly effective in synthesizing various criteria and inputs, allowing for the assignment of weights based on their significance through the application of fuzzy rules.

Fuzzy systems are adaptable: they can be readily adjusted or expanded by altering rules or membership functions, eliminating the need for a complete system redesign.

Fuzzy logic systems are computationally efficient, making them suitable for applications that require real-time decision-making.

In theory, this provides the approach with a greater ability to emulate real-world conditions, where assertions of complete truth or falsehood are seldom found.

As a result of the above, we chose fuzzy logic in determining trust in CPSS.

System model

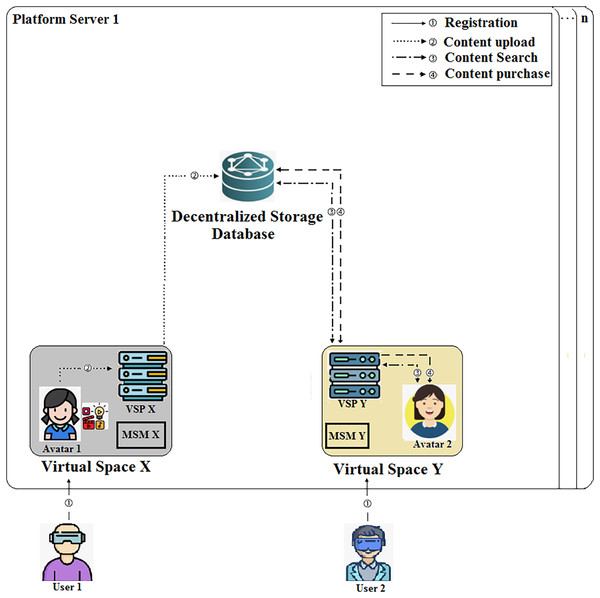

The suggested protocol is made up of a user, an avatar, a virtual service provider, Metaverse Service Manager, decentralized storage, and a network provider as shown in Fig. 3.

- Service User (“SUj”: jth): Users access the Metaverse and are responsible for creating their avatars. Once inside this virtual world, users can customize their avatars to represent their preferences, personality traits, and distinctive styles. Furthermore, users can create, share, and trade content through their avatars, actively engaging in the content-driven economy and playing a pivotal role in shaping the dynamic ecosystem of the Metaverse.

- Avatar: Avatars act as virtual representations of users, playing different roles such as interacting with other avatars and digital entities, accessing a wide array of Metaverse services, and participating in content-related activities. They are essential in content creation, curation, and trading, empowering users to actively engage in the content-driven economy of the Metaverse.

- Virtual Service Provider (VSP) (“VSri”: ith): VSPs are pivotal entities within the content trading ecosystem of the Metaverse. They provide a range of services related to content, including uploading, downloading, and facilitating user interfaces for external content searches. By ensuring transparency and trustworthiness in content transactions, VSPs actively contribute to establishing a safer and more efficient Metaverse environment.

- Metaverse Service Manager (MSM): The MSM is responsible for carrying out, monitoring, and evaluating the trust computation process for virtual service providers in the Metaverse. Upon request, an MSM can share the computed trust values of virtual service providers with other MSMs within its operational scope. Furthermore, the MSM ensures the secure storage of trust calculation results for virtual service providers.

- Decentralized storage (DS): DS is responsible for storing content uploaded by VSPs. It offers a search function that allows VSPs to easily access the content they need. This distributed storage method improves data availability and resilience, so that Metaverse users can access and engage with their preferred content without interruptions. The communication flow can be characterized as follows (Oh et al., 2023):

(1) When users wish to register for their specific virtual world, they begin by sending a registration request query to the VSP. The VSP then verifies the user’s identity and generates an Avatar and a content upload key for the user.

(2) Within the virtual environment, Avatar1 creates content that is later shared with VSPX. VSPX validates the legality of Avatar1 and proceeds to upload the content to DS, providing detailed information. Additionally, VSPX uploads verification values to 1, 2.

(3) Avatar2 uses the search user interface (UI) offered by VSPY to access DS and explore the available content. It subsequently verifies the information pertaining to the content via DS.

(4) After receiving the necessary details, Avatar2 selects the desired content and requests access to it. VSPY initiates an automatic payment process through a smart contract upon receiving the corresponding content from DS. Once the smart contract is executed, VSPY delivers the requested content to Avatar2.

- Network provider: The network provider handles network communications between all of the above entities.

Figure 3: System model of the proposed protocol.

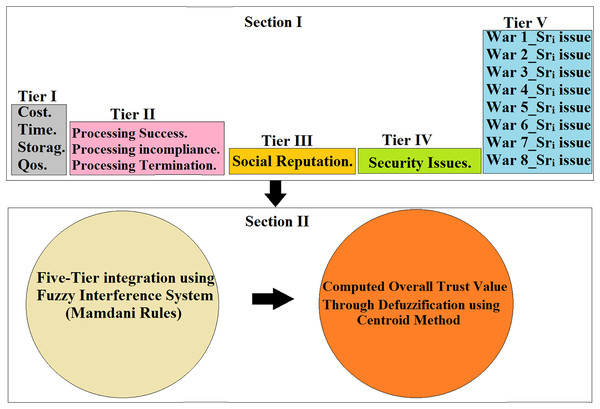

Some figure components are AI generated using Midjourney and Aitubo.

The proposed protocol evaluates the virtual service provider’s trustworthiness while taking numerous factors into account. As depicted in Fig. 4, the protocol is constructed from two parts. Part I is composed of five major tiers: Tier I assesses the platform server’s compliance, Tier II calculates the platform server’s processing performance value, Tier III presents the social reputation, Tier IV evaluates the security issues, and Tier V checks the Metaverse platform server violations. In Part II, a MATLAB-based overall trust fuzzy inference system (FIS) is developed to integrate the evaluated results of Tiers I, II, III, IV, and V, to obtain an overall trust value and status for platform servers in the Metaverse.

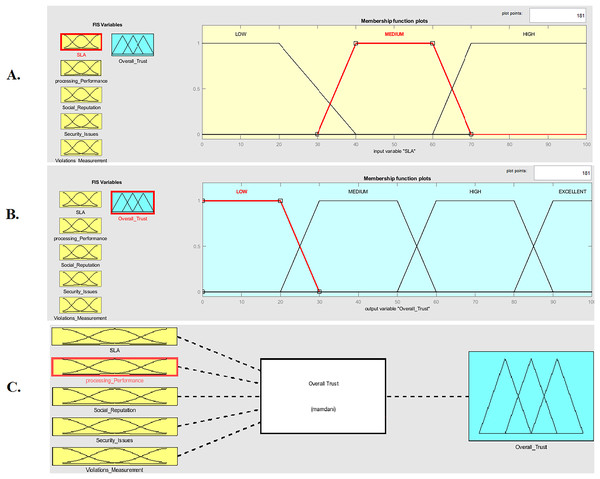

Figure 4: Fuzzy inference system input variables membership function.

The protocol is constructed from two parts. Part I is composed of five major tiers: Tier I assesses the platform server’s compliance, Tier II calculates the platform server’s processing performance value, Tier III presents the social reputation, Tier IV evaluates the security issues, and Tier V checks the Metaverse platform server violations. In Part II, a MATLAB-based overall trust fuzzy inference system (FIS) is developed to integrate the evaluated results of Tiers I, II, III, IV, and V, to obtain an overall trust value and status for platform servers in the Metaverse.

List of assumptions

The suggested trust framework takes into account the following presumptions:

Trust calculations are made by a fully trusted third party such as a certificate authority.

When VSPX transfers the user’s required task to VSPY for processing, also known as process migration, VSPX assumes complete accountability for maintaining the security of the user’s data. It is imperative for VSPX to inform the user about this transfer and offer the necessary guarantees to ensure the security, privacy, and integrity of the user’s data.

VSP can possess one or multiple platforms that provide various types of services.

Each service type of VSP is evaluated independently regardless of whether it is the same.

The three major jobs that are requested through the Metaverse system are processing, QoS, and both of them, which are identified by the job type identifiers “Job1, Job2, Job3” accordingly.

Part I: proposed five-tier trust evaluation framework

Part I includes five tiers of trust evaluation: Tier I covers service level agreements, Tier II covers processing performance, Tier III presents the social reputation, Tier IV evaluates security issues, and Tier V checks the violation measurement. As illustrated in Fig. 4, several characteristics are assessed in each tier to determine the trust value. Tier I trust results are calculated from the service user’s assessment of the SLA after the process is finished. Tier II trust results are calculated by evaluating the processing performance of the VSP. Tier III checks the reviewers’ votes to calculate the social reputation of the VSP. Tier IV checks the security issues of the VSP, and Tier V provides a violation measurement. The five-tier calculation is performed per “n” computational interval as outlined in the subsections below.

Tier I—service level agreement evaluation

An SLA is a contract between a platform server and a user that specifies the task type the user has requested and the agreed-upon computing requirements. Assume that the requested task is one of the three categories of jobs specified before, designated as (b), and that the total number of requests received by the ith platform server is designated as (B). The primary terms specified in an SLA should be uniform for all SLAs, maintained by both parties, and available for decision-making as necessary.

Assume that all SLAs contain four main requirements: the cost of computation (SCib), the execution time in h/min (STib), the needed computational storage size in GB/TB (SSib), and the quality of services (SQib). After the job ends, the user must complete a mandatory rating process to assess the platform server’s adherence to the four predetermined conditions. Assume that each of the four primary SLA components receives a rating (r), as given in Table 5.

| Parameter | Dissatisfaction rate | Satisfaction rate | Consequences of dissatisfaction rate > Threshold SLA drawback warnings |

|---|---|---|---|

| SCibR | 0 ≤ r ≤ 2 | 3 ≤ r ≤ 5 | War 1_Sri issue |

| SSibR | 0 ≤ r ≤ 2 | 3 ≤ r ≤ 5 | War 2_Sri issue |

| STibR | 0 ≤ r ≤ 2 | 3 ≤ r ≤ 5 | War 3_Sri issue |

| SQibR | 0 ≤ r ≤ 2 | 3 ≤ r ≤ 5 | War 4_Sri issue |

The values described above can be changed to fit an individual’s point of view. The ratings of computational cost, storage, execution time, and QoS (SCib_R, SSib_R, STib_R, SQib_R, respectively) represent the level of user satisfaction or dissatisfaction and the platform server’s adherence to the specified SLA requirements. Assume that the number of rated SLAs equals the number of processes (B) accepted by the ith platform server.

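Assume that the calculated rated SLA value, SLAib_R_value, which ranges between (0) and (20), is given by Eq. (1):

$$\text{SLA}_{ib\_R\_value} = \text{SC}_{ib\_R} + \text{SS}_{ib\_R} + \text{ST}_{ib\_R} + \text{SQ}_{ib\_R} \tag{1}$$

To transform the answer into percentage form, multiply the result from Eq. (1) by 5. As a result, the average computed SLAs value, (SLA average), is determined by:

$$\text{SLA}_{average} = \frac{\sum_{b=1}^{B} \left(\text{SLA}_{ib\_R\_value} \times 5\right)}{B} \tag{2}$$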

Each requested job is granted a separate SLA, even if it is requested by the same user and completed by the same platform server, because the computational demands of every job may differ. The MSM therefore computes the SLA average per platform server as specified in Eq. (2), which supports the accuracy of the SLA evaluation findings. In addition, a threshold value is established for the dissatisfaction rate of each component. The dissatisfaction rate is calculated for each component of the ith platform server every (n) computational interval. As shown in Table 5, a warning is sent to a platform server whenever any component’s dissatisfaction rate rises above the predetermined threshold for that component. This alerts platform servers to unsatisfactory results for the SLA components so that they can improve their processing capabilities in those components.
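The Tier I calculation can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes that Eq. (1) sums the four 0 to 5 ratings (consistent with the stated 0 to 20 range) and that Eq. (2) averages the percentage-scaled values over the B rated SLAs. The names `SlaRating`, `sla_value`, `sla_average`, and `dissatisfaction_rate` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SlaRating:
    """User ratings (0-5) of one job's SLA components."""
    cost: int      # SCib_R
    storage: int   # SSib_R
    time: int      # STib_R
    qos: int       # SQib_R


def sla_value(r: SlaRating) -> int:
    """Assumed form of Eq. (1): sum of the four ratings, range 0..20."""
    return r.cost + r.storage + r.time + r.qos


def sla_average(ratings: list[SlaRating]) -> float:
    """Assumed form of Eq. (2): mean of the per-job values scaled to 0..100."""
    if not ratings:
        raise ValueError("at least one rated SLA is required")
    return sum(5 * sla_value(r) for r in ratings) / len(ratings)


def dissatisfaction_rate(ratings: list[SlaRating], component: str) -> float:
    """Share of jobs whose rating for `component` falls in the 0..2 band of Table 5."""
    return sum(getattr(r, component) <= 2 for r in ratings) / len(ratings)


ratings = [SlaRating(5, 4, 3, 4), SlaRating(2, 5, 5, 4), SlaRating(1, 3, 4, 5)]
print(sla_average(ratings))                   # 75.0
print(dissatisfaction_rate(ratings, "cost"))  # two of three jobs rated cost <= 2
```

A warning for a component would then be raised when its dissatisfaction rate exceeds the threshold chosen for that component; the threshold itself is left to the deployment.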

Tier II—processing performance computation

The processing performance of the ith platform server (Pi) is the value of successful processes executed by the platform server in a computing period of “n”. Assume that process “b” has ended, whether it was successful or not. A successful process is referred to as (PSUi) and means that the platform server met all three processing conditions. On the other hand, one of the following three conditions could lead to an incomplete process:

Condition 1: After starting the job processing, a platform server failed to finish it within the specified time limit.

Condition 2: After accepting the job, a platform server did not begin processing it.

Condition 3: The job processing on a platform server began, was going according to plan, and had not exceeded its process execution time (SEib), but the user ended the job processing operation.

Conditions 1 and 2 are cases of incomplete job processing (processing incompliance) (PINi). Condition 3 is the user termination (UTEi) case. Tier II assesses the platform server’s processing efficiency in terms of:

- Average processing success ratio (APSUi): the number of successful processes (PSUi) implemented by the platform server divided by the sum of all accepted processes (B), as given in Eq. (3):

$$\text{APSU}_i = \frac{\text{PSU}_i}{B} \tag{3}$$

- Average processing incompliance ratio (APINi): the number of processes that the platform server accepted but failed to complete (PINi) divided by the sum of all accepted processes (B), as given in Eq. (4):

$$\text{APIN}_i = \frac{\text{PIN}_i}{B} \tag{4}$$

- Average user termination ratio (AUTEi): the share of processes that users, dissatisfied for any reason with their computing operation, chose to end. This corresponds to condition 3 and is known as user termination (UTEi). AUTEi is the number of user terminations (UTEi) on the platform server divided by the sum of all accepted processes (B), as given in Eq. (5):

$$\text{AUTE}_i = \frac{\text{UTE}_i}{B} \tag{5}$$

(6) where 0 ≤ Pi ≤ 1 for (n = 1) computational period.

The processing performance level of a platform server in the Metaverse system is given by Eq. (6). The processing incompliance and user termination ratio metrics each have a predetermined threshold. As stated in Table 6, a corresponding warning is issued to the ith platform server if its computed result for either parameter exceeds the threshold within (n) computational intervals.

| Parameter | Processing performance warnings |

|---|---|

| APINi >Threshold | War 5_Sri issue |

| AUTEi >Threshold | War 6_Sri issue |
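As a rough illustration of the Tier II ratios and the warnings of Table 6, the Python sketch below computes APSUi, APINi, and AUTEi from raw counts. The function names and the default threshold of 0.2 are hypothetical placeholders, since the text does not fix threshold values, and the sketch deliberately stops short of Eq. (6).

```python
def tier2_ratios(psu: int, pin: int, ute: int, accepted: int) -> dict[str, float]:
    """Eqs. (3)-(5): each outcome count divided by all accepted processes B."""
    if accepted <= 0:
        raise ValueError("B must be positive")
    return {
        "APSU": psu / accepted,  # average processing success ratio
        "APIN": pin / accepted,  # average processing incompliance ratio
        "AUTE": ute / accepted,  # average user termination ratio
    }


def tier2_warnings(ratios: dict[str, float],
                   pin_threshold: float = 0.2,   # hypothetical value
                   ute_threshold: float = 0.2    # hypothetical value
                   ) -> list[str]:
    """Table 6: raise War 5 / War 6 when a ratio exceeds its threshold."""
    warnings = []
    if ratios["APIN"] > pin_threshold:
        warnings.append("War 5")
    if ratios["AUTE"] > ute_threshold:
        warnings.append("War 6")
    return warnings


ratios = tier2_ratios(psu=70, pin=25, ute=5, accepted=100)
print(ratios)                  # {'APSU': 0.7, 'APIN': 0.25, 'AUTE': 0.05}
print(tier2_warnings(ratios))  # ['War 5']
```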

Tier III-social reputation

Social reputation (SR) is a societal construct that reflects the public’s perception of an entity’s attributes. Over the last two decades, there has been significant research into reputation systems within the realms of computer and information sciences (Kamvar, Schlosser & Garcia-Molina, 2003; Jøsang, Ismail & Boyd, 2007). The primary objective of a reputation system is to accurately provide information about the trustworthiness of an entity (as a trustee) to others (as trustors), thus promoting the participation of trustors in online transactions without direct knowledge. Most reputation systems are based on a feedback mechanism for managing the opinions of participants after transactions, in both good and bad forms. This tier is based on the user experience, which is collected from users’ opinions of the ith platform server. Social reputation is a separate tier, unrelated to service level agreements, processing performance, and security issues. It has two outcomes: good reputation (GRib) and bad reputation (BRib). A bad reputation above the threshold raises the War 7_Sri issue, as shown in Table 7.

- Average good reputation (AGRib): the number of good reputations (GRib) received by the platform server divided by the sum of all accepted social reputations (B), as given in Eq. (7):

$$\text{AGR}_{ib} = \frac{\text{GR}_{ib}}{B} \tag{7}$$

- Average bad reputation (ABRib): the number of bad reputations (BRib) received by the platform server divided by the sum of all accepted social reputations (B), as given in Eq. (8):

$$\text{ABR}_{ib} = \frac{\text{BR}_{ib}}{B} \tag{8}$$

| Parameters | Social reputation |

|---|---|

| ABRib >Threshold | War 7_Sri issue |
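A short Python sketch of the Tier III averages follows. It assumes each accepted vote is simply good or bad, as the text describes; the function name `reputation_averages` and the 0.3 threshold for the War 7 check are hypothetical.

```python
def reputation_averages(votes: list[bool]) -> tuple[float, float]:
    """Return (AGR, ABR) per Eqs. (7)-(8); True is a good vote, False a bad vote."""
    total = len(votes)
    if total == 0:
        raise ValueError("at least one accepted vote is required")
    good = sum(votes)
    return good / total, (total - good) / total


agr, abr = reputation_averages([True, True, False, True])
war7 = abr > 0.3  # hypothetical threshold for the War 7_Sri issue in Table 7
print(agr, abr, war7)  # 0.75 0.25 False
```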

Tier IV-security issues

A virtual service provider is accountable for safeguarding the privacy of service user data during the provisioning of the provider’s services or applications, through the implementation of appropriate data protection measures. Nevertheless, a service user may encounter a scenario where it becomes aware that its data has been transmitted by the service provider without obtaining prior consent, thereby compromising the user’s data privacy (Deepa et al., 2020; Noor et al., 2013). A data privacy breach is recognized as a significant security concern, as it can result in cyber-attacks, societal problems, or even facilitate user theft through various methods (Javed et al., 2021; Iwendi et al., 2020; Vasani & Chudasama, 2018). In order to monitor the actions of virtual service providers, a user may send a complaint message “M” to the MSM, accusing the suspected virtual service provider and providing evidence of the incident. The “M” complaint message should contain:

Snapshot of user information displayed on unidentified devices for the user.

Proof of the user’s data ownership (such as an old email the user sent to the relevant service provider including this data).

SLA transaction with VSri and SUj.

The MSM verifies the evidence in the complaint message “M” to ensure its accuracy. If the MSM’s investigation confirms the complaint against the virtual service provider, the event is recorded as incident 1, and an alarm message “incident1_Sri” is sent to the accused service provider in accordance with Table 8. If another service user files a data privacy leakage complaint against the same virtual service provider, the MSM investigates it in the same way. If the MSM verifies the authenticity of this complaint as well, the incidents are classified as data privacy leakage attacks, and the accused virtual service provider is penalized with warning number 8, “W8_Sri”. This warning also diminishes the trustworthiness of the accused virtual service provider, which is intended to deter malicious virtual service providers from repeating such attacks. The data privacy leakage complaint algorithm, as detailed in Table 8, tracks and quantifies data breaches carried out by the ith virtual service provider during “n” computational intervals, across multiple users. The MSM archives incidents and warnings in a historical database for each ith virtual service provider.

| Algorithm name: data privacy leakage |

| Description: user complaints of data privacy leakage against a platform server, executed by MSM |

| Input: M |

| Output: incident 1_Sri or war 8_Sri |

| MSM: |

| SUj → MSM: M |

| Where M = (Snapshot of user, Proof of the user’s data ownership, SLA transaction). |

| MSM: verifies M |

| If (M is approved) then |

| Issues incident 1_Sri |

| Store incident 1_Sri |

| Count incident 1_Sri ++ |

| MSM → Sri: incident 1_Sri |

| MSM: checks |

| If incident 1_Sri ≥ 2 then |

| Issues war 8_Sri |

| Store war 8_Sri |

| MSM → Sri: war 8_Sri |

| End if |

| Else |

| (M is disapproved) |

| Exit ( ) |

| End if |

| End |
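The complaint-handling logic of Table 8 can be sketched in Python as below. This is an illustrative reading of the table rather than the authors' code: the class and field names are hypothetical, and the evidence check is abstracted into a caller-supplied `verifier` function because the text does not specify how the MSM validates “M”. Like the table, the sketch counts approved incidents per provider and does not itself check that complaints come from different users.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Complaint:
    """Complaint message M = (snapshot, proof of ownership, SLA transaction)."""
    user_id: str
    provider_id: str
    snapshot: bytes
    ownership_proof: bytes
    sla_transaction: str


class MetaverseServiceManager:
    def __init__(self, verifier: Callable[[Complaint], bool]):
        self._verify = verifier            # hypothetical evidence check
        self.incidents = defaultdict(int)  # approved incidents per provider
        self.history = []                  # archived (provider_id, event) pairs

    def handle(self, m: Complaint) -> Optional[str]:
        """Return 'incident 1', 'war 8', or None when M is disapproved."""
        if not self._verify(m):
            return None                    # M is disapproved: exit
        self.incidents[m.provider_id] += 1
        self.history.append((m.provider_id, "incident 1"))
        if self.incidents[m.provider_id] >= 2:
            self.history.append((m.provider_id, "war 8"))
            return "war 8"
        return "incident 1"


msm = MetaverseServiceManager(verifier=lambda m: bool(m.snapshot and m.ownership_proof))
first = msm.handle(Complaint("SU1", "VSr1", b"img", b"mail", "sla-1"))
second = msm.handle(Complaint("SU2", "VSr1", b"img", b"mail", "sla-2"))
print(first, second)  # incident 1 war 8
```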

Tier V-violations measurement

The violations measurement algorithm calculates the warnings flagged to the ith virtual platform server in Tiers I, II, III, and IV (during SLA evaluation, processing performance computation, checking the users’ votes to determine the server’s social reputation, and checking the security issues).

According to Table 9, each warning type is assigned a number between 1 and 8. For warnings that occur on the ith platform server, a reducing factor (θ) is applied that is calculated based on the number and type of warnings. Note that (θ) is a flexible variable that may be given different values depending on one’s point of view. Assume that “Ti,” which is determined by Eq. (9), is the total warnings value for the ith platform server generated in Tier V.

(9) where 0 ≤ Ti ≤ 1.

| Warning | Reason | Reducing factor | Tier |

|---|---|---|---|

| War 1_Sri issue | Amount of cost dissatisfaction exceeded the limit. | | I-SLA |

| War 2_Sri issue | Amount of time dissatisfaction exceeded the limit. | | I-SLA |

| War 3_Sri issue | Amount of storage dissatisfaction exceeded the limit. | | I-SLA |

| War 4_Sri issue | Doesn’t take into account quality of services threshold. | | I-SLA |

| War 5_Sri issue | Processing incompliance threshold exceeded. | | II-PP |

| War 6_Sri issue | Processing user termination threshold exceeded. | | II-PP |

| War 7_Sri issue | A bad result in the social reputation evaluation. | | III-SR |

| War 8_Sri issue | Security privacy problem. | | IV-SI |

Since (Ti) provides the value for all warnings, the violation measurement (VMi) is calculated by Eq. (10):

$$\text{VM}_i = 1 - T_i \tag{10}$$

The MSM computes the platform server’s violations measurement at Tier V by determining whether the ith platform server has received any warnings (W1_Sri ... W8_Sri). If the ith platform server has received any warning, the MSM computes its relative (θ) and (Ti) values using Eq. (9). The warnings received by the ith platform server are checked by the violations measuring algorithm shown in Table 10, which then computes its total warnings value (Ti). Finally, the violations measurement (VMi) of each virtual service provider is obtained from Eq. (10) by subtracting the total warnings value from 1.
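The Tier V computation can be illustrated with the Python sketch below. Two points are assumptions made only for illustration: the total warnings value Ti is taken to be the sum of the reducing factors of the warnings received, capped at 1 to respect 0 ≤ Ti ≤ 1, and the θ values in `REDUCING_FACTORS` are hypothetical, since the text leaves θ to the evaluator. Only the final step, VMi = 1 − Ti, is stated directly in the text.

```python
# Hypothetical reducing factors (theta) per warning number 1..8.
REDUCING_FACTORS = {1: 0.1, 2: 0.1, 3: 0.1, 4: 0.1,
                    5: 0.1, 6: 0.1, 7: 0.1, 8: 0.3}


def total_warnings_value(warnings: set[int]) -> float:
    """Assumed form of Eq. (9): sum of theta over received warnings, capped at 1."""
    return min(1.0, sum(REDUCING_FACTORS[w] for w in warnings))


def violation_measurement(warnings: set[int]) -> float:
    """Eq. (10): the total warnings value is subtracted from 1."""
    return 1.0 - total_warnings_value(warnings)


print(violation_measurement(set()))   # 1.0, no warnings received
print(violation_measurement({5, 8}))  # reduced by the War 5 and War 8 factors
```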

Part II: five–tier integration using fuzzy logic

The trustworthiness of the ith virtual service provider is determined by combining the results from five different tiers: SLA evaluation, processing performance computation, social reputation, security issues, and violations measurement. This overall trust value is calculated using a fuzzy inference system (FIS) called “Overall Trust”, as illustrated in Fig. 4 and explained in the sections “Trust computation using fuzzy logic in metaverse environments” and “Integration of tier I, II, III, IV, and V results using fuzzy logic”.

Trust computation using fuzzy logic in metaverse environments

Fuzzy logic, as a branch of artificial intelligence, feeds input parameters into a fuzzy inference system, where these parameters are presented as unclear or uncertain data, indicating the partial truth of a parameter (Nagarajan, Selvamuthukumaran & Thirunavukarasu, 2017). Unlike Boolean logic, which operates on the discrete values 0 and 1, this method accepts input values that span the range from 0 to 1. These values are subsequently passed through membership functions to differentiate between distinct value ranges, a process commonly referred to as fuzzification (Sule et al., 2017). The fuzzy inference rule base editor in MATLAB is programmed with if-then-else rules to assign specific output decisions to input value ranges. The defuzzification process converts the output result into a crisp value. Fuzzy logic offers various advantages, such as flexibility, quick response time, affordability, and logical reasoning. Therefore, fuzzy logic was employed to calculate the overall trust value and status of virtual service providers in this framework, given the dynamic nature of the Metaverse environment. The computation of trust for virtual service providers aids users in selecting the most suitable provider while maintaining a balance between services offered and price.

Integration of tier I, II, III, IV, and V results using fuzzy logic

To obtain an overall trust value for the ith virtual service provider in Part II, the fuzzy logic concept is used to integrate the results of Tiers I, II, III, IV, and V from Part I. This integration process is illustrated in Fig. 4. The “overall trust” system was developed with the fuzzy logic toolbox in MATLAB (The MathWorks, Natick, MA, USA). The five fuzzy inputs of the five tiers (SLA evaluation, processing performance computation, social reputation, security issues, and violations measurement) were represented using trapezoid-curve shapes, accompanied by their corresponding fuzzy membership functions, as demonstrated in Fig. 5A. The input values for the membership functions are limited to the range [0, 100] and are converted into fuzzy linguistic input variables. SLA evaluation and processing performance computation each take one value of (low, medium, high), whereas social reputation takes one value of (low, high), because a reputation can be good or bad but not average.

Figure 5: Components of the Fuzzy Inference System (FIS) for Overall Trust Calculation.

(A) Membership functions for the input variable SLA (Service Level Agreement). (B) Membership functions for the output variable Overall_Trust. (C) Mamdani-type FIS architecture showing five inputs and the single output Overall_Trust.

The security issues and violations measurement inputs each take one value of (yes, no), because these two properties admit no other options. Their fuzzy membership functions were likewise represented using a trapezoidal shape. Centroid defuzzification was used to obtain a precise overall trust value for the ith virtual service provider, as summarized in Table 11.

| FIS type: Mamdani | Rules: 72 | Defuzzification type: Centroid |

|---|---|---|

| | Input | Output |

| No. | 5 | 1 |

| Name | I-SLA, II-PP, III-SR, IV-SI, V-VM | Overall trust |

| No. of membership functions | SLA: 3, PP: 3, SR: 2, SI: 2, VM: 2 | 4 |

| Membership function type | Trapezoidal | Trapezoidal |

| Membership function names and ranges for each tier | I-SLA, II-PP (low, medium, high): (0–40), (30–70), (60–100) | Low, medium, high, excellent: (0–30), (30–60), (60–90), (90–100) |

| | III-SR (low, high): (0–60), (40–100) | |

| | IV-SI, V-VM (yes, no): (0–60), (40–100) | |
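As a rough illustration of how the ranges in Table 11 could drive fuzzification, the following Python sketch evaluates trapezoidal membership functions for the SLA, PP, and SR inputs. It is not the authors' MATLAB implementation. Table 11 gives only the support of each set, so the inner corner points (where membership reaches 1) are assumptions chosen so that neighbouring sets cross at 0.5; the names `trapezoid`, `INPUT_SETS`, and `fuzzify` are hypothetical. The SI and VM inputs would be handled in the same way.

```python
def trapezoid(x, a, b, c, d):
    """Trapezoidal membership: 0 outside [a, d], 1 on [b, c], linear in between."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)  # rising edge
    return (d - x) / (d - c)      # falling edge


# Supports (first and last number of each tuple) follow Table 11.
# The two middle numbers are ASSUMED corner points, not taken from the paper.
THREE_LEVEL = {
    "low": (0, 0, 30, 40),
    "medium": (30, 40, 60, 70),
    "high": (60, 70, 100, 100),
}
INPUT_SETS = {
    "SLA": THREE_LEVEL,
    "PP": THREE_LEVEL,
    "SR": {"low": (0, 0, 40, 60), "high": (40, 60, 100, 100)},
}


def fuzzify(variable, value):
    """Return the degree of membership of `value` in each set of `variable`."""
    return {
        label: trapezoid(value, *corners)
        for label, corners in INPUT_SETS[variable].items()
    }


if __name__ == "__main__":
    print(fuzzify("SLA", 35))    # partly low, partly medium
    print(fuzzify("SLA", 82.5))  # fully high
    print(fuzzify("SR", 89))     # fully high
```

A value inside an overlap region, such as an SLA score of 35, belongs partly to two sets at once, which is the partial truth that distinguishes fuzzy from Boolean evaluation.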

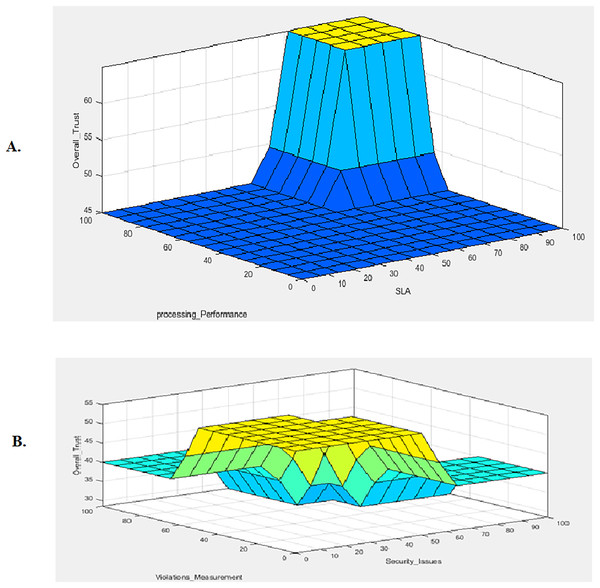

As illustrated in Fig. 5B, trapezoidal curves denote the output membership functions (low, medium, high, excellent). The overall trust value of the ith virtual service provider belongs to one of these four fuzzy output sets. To calculate the overall trust status of the ith virtual service provider, seventy-two inference rules were incorporated into the Mamdani inference system. These rules take into account the SLA evaluation, processing performance computation, social reputation, security issues, and violations measurement. Each rule combines its five input conditions with the AND logical operator and maps them to one output set. The integration of the five tier values forms the overall trust fuzzy inference system, which calculates the overall trust of the virtual service provider in the Metaverse, as shown in Fig. 5C. Fig. 6A shows the smooth output surface over processing performance and SLA evaluation, and Fig. 6B shows the smooth output surface over security issues and violations measurement.

The main goal of Section II is to determine the overall trustworthiness of virtual service providers in the Metaverse. This is accomplished by evaluating the success of interactions and the level of violations of virtual service providers, while also taking into account service users’ opinions of the SLA. Through the application of fuzzy logic, the results from the five tiers are integrated to calculate the overall trust value and status of the ith virtual service provider. Virtual service providers in the simulation were constrained to specific value ranges to verify the system equations and the 72 fuzzy inference system rules under different circumstances, as shown in Table 12.
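The inference chain described above (fuzzification, AND-combined rules, aggregation, centroid defuzzification) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not a reproduction of the MATLAB system: the corner points of every trapezoid are assumed (only the supports come from Table 11), AND is taken as `min`, rule outputs are clipped and aggregated with `max` (common Mamdani choices that the text does not spell out), and only six of the 72 rules from Table 12 are included. All identifiers are hypothetical.

```python
def trapezoid(x, a, b, c, d):
    """Trapezoidal membership: 0 outside [a, d], 1 on [b, c], linear in between."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


# Supports follow Table 11; inner corner points are ASSUMED.
LMH = {"L": (0, 0, 30, 40), "M": (30, 40, 60, 70), "H": (60, 70, 100, 100)}
YES_NO = {"Y": (0, 0, 40, 60), "N": (40, 60, 100, 100)}
IN_SETS = {
    "SLA": LMH,
    "PP": LMH,
    "SR": {"L": (0, 0, 40, 60), "H": (40, 60, 100, 100)},
    "SI": YES_NO,
    "VM": YES_NO,
}
OUT_SETS = {
    "L": (0, 0, 20, 30),
    "M": (30, 40, 50, 60),
    "H": (60, 70, 80, 90),
    "E": (90, 95, 100, 100),
}
ORDER = ("SLA", "PP", "SR", "SI", "VM")

# Excerpt of Table 12 (rules 4, 45, 61, 69, 71, 72), not the full rule base.
RULES = {
    ("L", "L", "L", "Y", "Y"): "L",
    ("M", "H", "H", "N", "N"): "H",
    ("H", "M", "H", "N", "N"): "H",
    ("H", "H", "H", "N", "Y"): "H",
    ("H", "H", "H", "Y", "Y"): "M",
    ("H", "H", "H", "N", "N"): "E",
}


def overall_trust(inputs, step=0.1):
    """Mamdani-style inference; returns None if no rule in the excerpt fires."""
    degrees = {
        name: {lab: trapezoid(inputs[name], *pts) for lab, pts in IN_SETS[name].items()}
        for name in ORDER
    }
    # Firing strength per output set: AND = min, same-consequent rules = max.
    strength = {}
    for antecedent, consequent in RULES.items():
        fire = min(degrees[name][lab] for name, lab in zip(ORDER, antecedent))
        strength[consequent] = max(strength.get(consequent, 0.0), fire)
    # Clip each output set, aggregate with max, take the centroid on a grid.
    num = den = 0.0
    for i in range(int(round(100 / step)) + 1):
        x = i * step
        mu = max(min(s, trapezoid(x, *OUT_SETS[lab])) for lab, s in strength.items())
        num += x * mu
        den += mu
    return num / den if den else None


if __name__ == "__main__":
    print(overall_trust({"SLA": 90, "PP": 90, "SR": 90, "SI": 90, "VM": 90}))
    print(overall_trust({"SLA": 65, "PP": 90, "SR": 90, "SI": 90, "VM": 90}))
```

In the second call the SLA score of 65 lies in the overlap between medium and high, so two rules fire at half strength and the centroid is pulled from the excellent set toward the high set. Because the corner points are assumed, the numbers produced by this sketch should not be expected to match the values reported in the paper.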

Figure 6: 3D Surface View of the Overall Trust Output as a function of selected pairs of input variables.

(A) Surface plot of Overall_Trust as a function of SLA and processing_Performance. (B) Surface plot of Overall_Trust as a function of Security_Issues and Violations_Measurements.

| Rule no. | SLA | PP | SR | SI | VM | Overall trust |

|---|---|---|---|---|---|---|

| 1 | L | L | L | N | N | M |

| 2 | L | L | L | N | Y | L |

| 3 | L | L | L | Y | N | L |

| 4 | L | L | L | Y | Y | L |

| 5 | L | L | H | N | N | H |

| 6 | L | L | H | N | Y | M |

| 7 | L | L | H | Y | N | M |

| 8 | L | L | H | Y | Y | M |

| 9 | L | M | L | N | N | M |

| 10 | L | M | L | N | Y | M |

| 11 | L | M | L | Y | N | M |

| 12 | L | M | L | Y | Y | L |

| 13 | L | M | H | N | N | H |

| 14 | L | M | H | N | Y | M |

| 15 | L | M | H | Y | N | M |

| 16 | L | M | H | Y | Y | M |

| 17 | L | H | L | N | N | H |

| 18 | L | H | L | N | Y | M |

| 19 | L | H | L | Y | N | M |

| 20 | L | H | L | Y | Y | L |

| 21 | L | H | H | N | N | H |

| 22 | L | H | H | N | Y | M |

| 23 | L | H | H | Y | N | M |

| 24 | L | H | H | Y | Y | L |

| 25 | M | L | L | N | N | M |

| 26 | M | L | L | N | Y | L |

| 27 | M | L | L | Y | N | L |

| 28 | M | L | L | Y | Y | L |

| 29 | M | L | H | N | N | H |

| 30 | M | L | H | N | Y | M |

| 31 | M | L | H | Y | N | M |

| 32 | M | L | H | Y | Y | M |

| 33 | M | M | L | N | N | M |

| 34 | M | M | L | N | Y | M |

| 35 | M | M | L | Y | N | M |

| 36 | M | M | L | Y | Y | L |

| 37 | M | M | H | N | N | H |

| 38 | M | M | H | N | Y | M |

| 39 | M | M | H | Y | N | M |

| 40 | M | M | H | Y | Y | M |

| 41 | M | H | L | N | N | H |

| 42 | M | H | L | N | Y | M |

| 43 | M | H | L | Y | N | M |

| 44 | M | H | L | Y | Y | L |

| 45 | M | H | H | N | N | H |

| 46 | M | H | H | N | Y | M |

| 47 | M | H | H | Y | N | M |

| 48 | M | H | H | Y | Y | L |

| 49 | H | L | L | N | N | H |

| 50 | H | L | L | N | Y | M |

| 51 | H | L | L | Y | N | M |

| 52 | H | L | L | Y | Y | L |

| 53 | H | L | H | N | N | H |

| 54 | H | L | H | N | Y | M |

| 55 | H | L | H | Y | N | M |

| 56 | H | L | H | Y | Y | L |

| 57 | H | M | L | N | N | H |

| 58 | H | M | L | N | Y | M |

| 59 | H | M | L | Y | N | M |

| 60 | H | M | L | Y | Y | L |

| 61 | H | M | H | N | N | H |

| 62 | H | M | H | N | Y | H |

| 63 | H | M | H | Y | N | H |

| 64 | H | M | H | Y | Y | M |

| 65 | H | H | L | N | N | H |

| 66 | H | H | L | N | Y | M |

| 67 | H | H | L | Y | N | M |

| 68 | H | H | L | Y | Y | M |

| 69 | H | H | H | N | Y | H |

| 70 | H | H | H | Y | N | H |

| 71 | H | H | H | Y | Y | M |

| 72 | H | H | H | N | N | Excellent |
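The size of the rule base follows directly from the number of linguistic values per input: 3 × 3 × 2 × 2 × 2 = 72. The short Python sketch below enumerates the antecedent combinations to illustrate this. The helper name `rule_number` is hypothetical, and the enumeration order is an observation about the table above rather than something the authors state: it matches rules 1 to 68, while the table lists the all-favourable combination (H, H, H, N, N) last, as rule 72.

```python
from itertools import product

# Linguistic values per input, in the order they appear in the rule table.
LABELS = {
    "SLA": ("L", "M", "H"),
    "PP": ("L", "M", "H"),
    "SR": ("L", "H"),
    "SI": ("N", "Y"),
    "VM": ("N", "Y"),
}

# Cartesian product: the last input (VM) varies fastest, SLA slowest.
antecedents = list(product(*LABELS.values()))


def rule_number(combo):
    """1-based position of an antecedent combination in the enumeration."""
    return antecedents.index(combo) + 1


if __name__ == "__main__":
    print(len(antecedents))                          # number of rules
    print(rule_number(("M", "H", "H", "N", "N")))    # position of this antecedent
```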

Simulation results, security analysis and limitations of the proposed system

Simulation results

A MATLAB-based simulation was created to obtain the results of Tiers I, II, III, IV, and V of the integrated five-tier trust management framework. The following input values are monitored: the SLA rating components (SCibR + SSibR + STibR + SQibR); the number of received processes, covering successful processes (PSUi), failed processes (PINi), and the user termination ratio (UTEi); the social reputation (GRib-BRib); privacy concerns; and violations by the VSP across Tiers I, II, III, and IV. Such violations include exceeding the acceptable limits for cost dissatisfaction (War 1_Sri issue), time dissatisfaction (War 2_Sri issue), and storage dissatisfaction (War 3_Sri issue); falling below the established quality of service threshold (War 4_Sri issue); surpassing the processing incompliance threshold (War 5_Sri issue); exceeding the user termination threshold (War 6_Sri issue); falling below the social reputation threshold (War 7_Sri issue); and experiencing issues related to security and privacy (War 8_Sri issue). The MATLAB Fuzzy Logic Designer was used to create the overall trust fuzzy inference system. To test the system equations and the seventy-two inference rules under different scenarios, each simulated platform server was required to operate within a given range of values. The overall trust FIS has five inputs, one for the computed result of each tier (SLA, processing performance, social reputation, security issues, and violations measurement), each with its own membership functions.
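The warning scheme described above can be sketched as a simple threshold check. The warning codes and their meanings come from the text; everything else in this Python sketch is an assumption made for illustration, including the metric key names, the numeric limits in the usage example, and the function name `warnings_for`. The paper's actual thresholds are defined by its tier equations and are not reproduced here.

```python
# (warning number, hypothetical metric key, direction that triggers the warning)
CHECKS = [
    (1, "cost_dissatisfaction", "above"),
    (2, "time_dissatisfaction", "above"),
    (3, "storage_dissatisfaction", "above"),
    (4, "quality_of_service", "below"),
    (5, "processing_incompliance", "above"),
    (6, "user_termination", "above"),
    (7, "social_reputation", "below"),
]


def warnings_for(metrics, limits, security_issue):
    """Return the warning codes raised for one virtual service provider."""
    codes = []
    for number, key, direction in CHECKS:
        value, limit = metrics[key], limits[key]
        too_high = direction == "above" and value > limit
        too_low = direction == "below" and value < limit
        if too_high or too_low:
            codes.append(f"War {number}_Sri")
    if security_issue:  # War 8 is a yes/no condition rather than a threshold
        codes.append("War 8_Sri")
    return codes


if __name__ == "__main__":
    # Illustrative numbers only; they are not taken from the paper.
    limits = {
        "cost_dissatisfaction": 20, "time_dissatisfaction": 20,
        "storage_dissatisfaction": 20, "quality_of_service": 50,
        "processing_incompliance": 20, "user_termination": 20,
        "social_reputation": 50,
    }
    metrics = {
        "cost_dissatisfaction": 5, "time_dissatisfaction": 5,
        "storage_dissatisfaction": 5, "quality_of_service": 90,
        "processing_incompliance": 5, "user_termination": 5,
        "social_reputation": 2,
    }
    print(warnings_for(metrics, limits, security_issue=True))
```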

System setup

The simulation considers case studies of four distinct service providers.

The starting trust value of each platform server is 0.

The five-tier computation was executed for n = 1 computational interval.

Each platform server received the same number of work requests, all of a single job type (Job3).

The simulation ran on a PC with a Core i5 processor, 4 GB of RAM, and a 500 GB hard disk.

A MATLAB-based simulation was created to display the outcomes of Sections I and II of the comprehensive five-tier trust management framework. The input values for the SLA rating components, number of received processes, social reputation, and security issues were generated randomly. The trust fuzzy inference system was built with the MATLAB Fuzzy Logic Designer tool. The virtual service providers in the simulation were nevertheless constrained to specific value ranges in order to verify the system equations and the 72 fuzzy inference system rules under different circumstances. The overall trust FIS comprises five inputs, each representing the result of the respective tier. Table 13 shows the Section I simulation results, a five-tier analysis, for the four virtual service providers. These results are specific to the ith virtual service provider after the completion of n computational intervals. Section I provides a comprehensive overview of the computational outcomes and the areas for improvement of each virtual service provider across the tiers. The warning methodology helps virtual service providers identify their weaknesses. The Section II simulation results, namely the overall trust value and status of each virtual service provider, can be found in Table 14.

| Virtual platform server | SLA | PP | SR | SI | Warnings | Enhancement recommendations |

|---|---|---|---|---|---|---|

| 1 | 82.5 | 86.3 | 89 | 80 | War 8_Sri | 1-SI |

| 2 | 45 | 60 | 55 | 90 | War 1_Sri, War 2_Sri, War 5_Sri, War 7_Sri, War 8_Sri | 1-Cost, 2-Storage, 3-PIN, 4-SR, 5-SI |

| 3 | 95 | 93 | 2 | 10 | War 7_Sri, War 8_Sri | 1-SR, 2-SI |

| 4 | 30 | 35 | 87 | 80 | War 1_Sri, War 4_Sri, War 6_Sri, War 8_Sri | 1-Cost, 2-QoS, 3-PTE, 4-SI |

| Platform server | Overall trust | Overall trust status |

|---|---|---|

| 1 | 92.2 | Excellent |

| 2 | 45.6 | Medium |

| 3 | 81.4 | High |

| 4 | 59.1 | Medium |
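The status column of Table 14 is consistent with the output ranges listed in Table 11 (low 0–30, medium 30–60, high 60–90, excellent 90–100). The following Python sketch maps a crisp overall trust value to a status and checks it against the four reported results. How values that fall exactly on a boundary (30, 60, 90) are assigned is not stated in the text, so the choice made here, assigning them to the higher set, is an assumption, as is the function name `trust_status`.

```python
def trust_status(value):
    """Map a crisp overall trust value in [0, 100] to its linguistic status."""
    if not 0 <= value <= 100:
        raise ValueError("overall trust must lie in [0, 100]")
    if value < 30:
        return "Low"
    if value < 60:   # boundary handling is an assumption
        return "Medium"
    if value < 90:
        return "High"
    return "Excellent"


# Overall trust values reported in Table 14, keyed by platform server.
TABLE_14 = {1: 92.2, 2: 45.6, 3: 81.4, 4: 59.1}

if __name__ == "__main__":
    for server, value in TABLE_14.items():
        print(server, value, trust_status(value))
```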

Security analysis

An informal security analysis indicates that the proposed protocol (FTM-CPSS) is resistant to various attacks, namely self-promotion attacks (SPA), bad-mouthing attacks (BMA), opportunistic service attacks (OSA), on-off attacks (OOA), whitewashing attacks (WA), discriminatory attacks (DA), and ballot stuffing attacks (BSA).

Self-promotion attacks

In this attack, a node inflates its own importance by consistently providing positive recommendations for itself. This tactic is employed to ensure its selection as a virtual service provider. Once chosen, however, the node behaves maliciously (Chahal, Kumar & Batra, 2020). The proposed protocol (FTM-CPSS) prevents self-promotion attacks by using social reputation.

Bad-mouthing attacks

A node can tarnish the reputation of a reliable node in the network by giving negative recommendations about it, thereby reducing its likelihood of being selected as a virtual service provider (Marche & Nitti, 2021). Our proposed protocol (FTM-CPSS) offers protection against bad-mouthing attacks by focusing on the social reputation and the quality-of-service features.

Opportunistic service attacks

A device can deliberately provide outstanding service to raise its reputation, particularly after its reputation has been damaged by poor service. With a strong reputation, the device can then conspire with other devices to conduct collusion-based attacks (Khan et al., 2021). Our proposed protocol (FTM-CPSS) counters this by giving security issues and social reputation high priority in determining the level of trust.

On-off attacks

OOA is similar to OSA. In this type of attack, however, an object alternates between good and bad service to avoid being identified as a low-reputation node, thereby increasing its likelihood of being selected as a service provider (Chahal, Kumar & Batra, 2020). Our proposed protocol (FTM-CPSS) prevents on-off attacks by checking security, privacy, processing performance measurement, social reputation, and SLA compliance.

Whitewashing attacks

In a whitewashing attack, a node leaves and rejoins the network or an application to reset its reputation and cleanse itself of any negative associations. Our proposed protocol (FTM-CPSS) counters whitewashing attacks by using social reputation and by checking security issues.

Discriminatory attacks

Driven by human intuition or affinity toward friends in CPSS structures, a node in a DA deliberately attacks other nodes that share few friends with it. Because the node performs well for one service or node while performing poorly for other services or nodes, this attack is also commonly referred to as a selective behavior attack (Masmoudi et al., 2020). Our proposed protocol (FTM-CPSS) can prevent discriminatory attacks because it uses security issues, quality of service, processing performance measurement, and social reputation.

Ballot stuffing attacks

These attacks improve the reputation of undesirable nodes inside the network by making favorable recommendations for them, enabling an undesirable node to be chosen as a service provider (Marche & Nitti, 2021). Our proposed protocol prevents ballot stuffing attacks by using security issues, social reputation, SLA compliance, and quality of service.

Limitations of proposed system

While fuzzy logic is a powerful approach to address uncertainty and imprecision, it does have some limitations:

Complexity in rule creation: The number of rules grows multiplicatively with the number of inputs and membership functions. In this framework, five inputs with 3, 3, 2, 2, and 2 linguistic values already require 3 × 3 × 2 × 2 × 2 = 72 rules.

Lack of learning mechanism: Fuzzy logic systems lack the capability for automatic learning or adaptation. In contrast to machine learning models, they necessitate manual adjustments to enhance their performance.

Inaccurate results: Fuzzy logic yields approximate rather than exact solutions.

Dependency on expert knowledge: The performance of a fuzzy system depends to a large extent on the quality of the expert knowledge used to create the rules and membership functions. Inaccurate rules can lead to substandard results.

Conclusion and future work