Deep learning-based novel ensemble method with best score transferred-adaptive neuro fuzzy inference system for energy consumption prediction

- Published

- Accepted

- Received

- Academic Editor

- Željko Stević

- Subject Areas

- Algorithms and Analysis of Algorithms, Artificial Intelligence, Data Mining and Machine Learning, Data Science

- Keywords

- Deep learning, Machine learning, Adaptive neuro fuzzy inference system, Ensemble learning, Energy consumption

- Copyright

- © 2025 Dağkurs and Atacak

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2025. Deep learning-based novel ensemble method with best score transferred-adaptive neuro fuzzy inference system for energy consumption prediction. PeerJ Computer Science 11:e2680 https://doi.org/10.7717/peerj-cs.2680

Abstract

Background

Energy consumption predictions for smart homes and cities benefit many from homeowners to energy suppliers, allowing homeowners to understand and manage their future energy consumption, improve energy efficiency, and reduce energy costs. Predictions can help energy suppliers effectively distribute energy on demand. Therefore, from the past to the present, numerous methods have been conducted using collected data, employing both statistical and artificial intelligence (AI)-based approaches, to achieve successful energy consumption predictions.

Methods

This study proposes a deep learning-based novel ensemble (DLBNE) method with the best score transferred-adaptive neuro fuzzy inference system (BST-ANFIS) as a high-performance and robust approach for energy consumption prediction. The proposed method uses deep learning (DL)-based algorithms, including convolutional neural networks (CNN), recurrent neural networks (RNN), long short-term memory (LSTM), bidirectional long short-term memory (BI-LSTM), and gated recurrent units (GRUs) as base predictors. The BST-ANFIS architecture combines the individual outcomes of these predictors. In order to build a robust and dynamic prediction model, the interaction between the base predictors and the ANFIS architecture is achieved using a best score transfer approach. The performance of the proposed method in energy consumption prediction was verified through five DL methods, five machine learning (ML) methods, and a DL-based weighted average (DLBWA) ensemble method.

Results

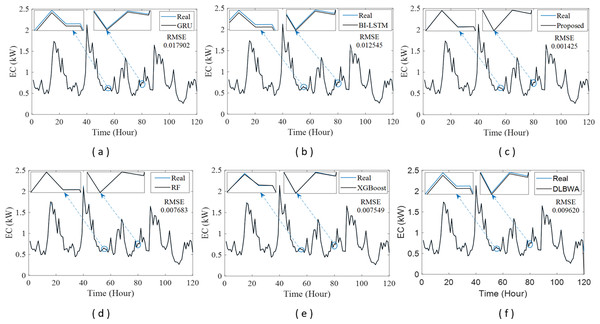

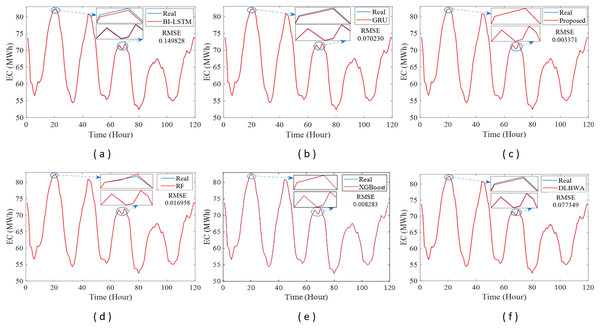

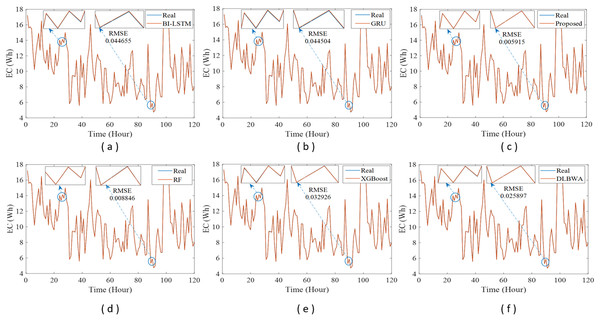

In experimental studies, the results were obtained from three-stage analyses: fold, average, and periodic performance analyses. In fold analyses, the proposed method, in terms of the root mean square error (RMSE) metric, demonstrated better performance in four folds on the Internet of Things (IoT)-based smart home (IBSH) dataset, two in the homestead city electricity consumption (HCEC) dataset, and two in the individual household power consumption (IHPC) dataset compared to the other methods. In the average performance analyses, it showed significantly higher performance than the other methods in all metrics for the IBSH and IHPC datasets, and in metrics except the mean absolute error (MAE) metric for the HCEC dataset. The performance results in terms of RMSE, MAE, mean square error (MSE), and mean absolute percentage error (MAPE) metrics from these analyses were obtained as 0.001531, 0.001010, 0.0000031, and 0.001573 for the IBSH dataset; 0.025208, 0.005889, 0.001884, and 0.000137 for the HCEC dataset; and 0.013640, 0.006572, 0.000356, and 0.000943 for the IHPC dataset, respectively. The results of the 120-h periodic analyses also showed that the proposed method yielded a better prediction result than the other methods. Furthermore, a comparison of the proposed method with similar studies in the literature revealed that it demonstrated competitive performance in relation to the methods employed in those studies.

Introduction

Over the past few decades, global electrical energy consumption has grown rapidly due to population expansion and technological advancements (Kim & Cho, 2019b). According to the international energy agency (IEA) report (IEA, 2019), the world’s total electricity consumption increased from 12,137 terawatt-hours (TWh) to 22,848 TWh between 1999 and 2019. The report highlights that the residential and industrial sectors together contribute to 15,638 TWh, accounting for 66.45% of total energy consumption. The assessment emphasizes the rising energy consumption, especially in residential and industrial sectors, which requires urgent action from electricity distribution and supply companies (EDSCs) to develop effective policies and innovative solutions to manage current and future energy needs. In this context, experts have focused on developing tools that automate energy distribution, using load prediction methods to optimize energy source utilization (Acharya, Wi & Lee, 2019). These methods, known as innovative approaches, utilize various features such as historical data, weather patterns, and customer behaviors to predict future energy consumption. Accurate predictions enable EDSCs to manage energy supply effectively, enhance capacity, make capital investments, and conduct market research (Sajjad et al., 2020).

A comprehensive review of literature on energy consumption predictions reveals diverse studies employing different approaches. These studies are generally categorized into two classes: statistics-based methods and artificial intelligence (AI) based methods. Statistics-based energy consumption prediction methods are mathematical models that analyze historical data to predict future consumption trends (Box et al., 2016; Hyndman & Athanasopoulos, 2018; Cai et al., 2023). Methods such as Holt-Winters (HW) (Sudheer & Suseelatha, 2015), auto-regressive moving average (ARMA) (Chen, Wang & Huang, 1995), auto-regressive integrated moving average (ARIMA) (Büyükşahin & Ertekin, 2019), grey prediction (GP) (Li & Zhang, 2018), and seasonal auto-regressive integrated moving average (SARIMA) (Blázquez-García et al., 2020) tackle energy consumption prediction as a typical time series problem. These methods might yield successful results in linear predictions with regular data distributions, but their accuracy decreases in those with incomplete or unbalanced data distribution. Hence, AI-based methods, offering more robust and dynamic solutions, have gained prominence. Within these methods, especially machine learning (ML) and deep learning (DL) algorithms are widely used.

ML algorithms are essential for modeling complex relationships and predicting future energy consumption trends. Some popular ML algorithms for energy consumption prediction include classical methods, such as artificial neural network (ANN) (Kandananond, 2011), support vector regression (SVR) (Ma, Ye & Ma, 2019), and decision trees (DT) (Tso & Yau, 2007). Additionally, model-based ensemble learning (EL) techniques, such as random forest (RF) (Silva et al., 2020), Extreme Gradient Boosting (XGBoost) (Shin & Woo, 2022), and light gradient boosting machines (LightGBM) (Chowdhury et al., 2021), have shown promising results in energy consumption prediction. Classical ML-based methods can effectively model linear and nonlinear relationships but may have varying performance depending on the training data, especially with large datasets (Olu-Ajayi et al., 2022). On the other hand, model-based ensemble ML methods have demonstrated high performance, resistance to overfitting, and faster training times (except for the RF method), particularly with large datasets (Suenaga et al., 2023). However, these methods have limitations owing to the performance of the individual models and the variability of the input data. One limitation is the problem of high dimensionality, particularly in high-dimensional datasets with many attributes, which can lead to overfitting and poor performance (Tran, 2023). Another limitation is the less robust prediction problem caused by uncertainty and high variance in the input data in dynamic and variable environments (Shree et al., 2023). In addition, the aggregated outcomes may exhibit optimal performance on certain datasets, with variations in the accuracy levels of the model outputs depending on data characteristics.

DL-based methods are crucial for accurately predicting large and complex datasets. So far, recurrent neural network (RNN) and its variations, such as long short-term memory (LSTM), bidirectional long short-term memory (BI-LSTM), and gated recurrent unit (GRU), have been successfully used to predict energy consumption because of their ability to uncover hidden relationships over time (Fan et al., 2019; Bai et al., 2021). These methods outperform classical ML-based methods in predictions; however, their performance can vary depending on the data characteristics (Farsi et al., 2021). Furthermore, while DL-based methods may outperform ensemble ML methods in terms of prediction accuracy, they struggle to handle uncertainties in data from grid lines with sudden power changes and load imbalances. Consequently, hybrid and ensemble DL-based approaches have been proposed as effective solutions to these challenges.

Several studies have shown the effectiveness of hybrid DL-based methods, such as the convolutional neural network long short-term memory (CNN-LSTM) neural network (Kim & Cho, 2019b), CNN-multilayer bidirectional GRU (CNN-MB-GRU) neural network (Khan et al., 2020b), LSTM neural network with stationary wavelet transform (SWT) technique (Yan et al., 2019), CNN with LSTM autoencoder (LSTM-AE) (Khan et al., 2020a), and Novel CNN-GRU neural network (Sajjad et al., 2020), in accurately predicting electricity consumption. They have proven to be adept at capturing the temporal and spatial features that impact energy consumption and delivering accurate predictions. Each method has its strengths and weaknesses. The choice of the prediction method depends on the dataset’s characteristics, problem complexity, and learning objectives. However, these methods present common challenges, such as non-optimized parameter values, resource-intensive computations, and extensive data preprocessing, particularly in hybrid models incorporating the CNN method.

Although there have been relatively few studies on ensemble DL methods compared to hybrid DL-based methods in energy consumption prediction, their application has been steadily increasing in recent years. Studies in this area show that ensemble DL methods can be successfully applied to tackle energy consumption prediction tasks. The current ensemble DL methods proposed in the literature for this purpose include approaches that combine LSTM, GRU, and temporal convolutional networks (TCN) (Hadjout et al., 2022); LSTM and Kalman Filter (KF) methods (Khan et al., 2021a); LSTM and GRU with cluster analysis (Khan et al., 2021b); RNN, LSTM, BI-LSTM, GRU, and CNN (Shojaei & Mokhtar, 2024); LSTM, Evolutionary trees (EvTree), RF and neural networks (NN) (Sujan Reddy et al., 2022); ANN, XGBoost, LSTM, stacked LSTM, and BI-LSTM (Guo et al., 2024); and LSTM and RNN (Irfan et al., 2023). These methods leverage the powerful capabilities of DL models, such as their ability to learn hierarchical representations and capture complex patterns and relationships in energy consumption data. Depending on the data characteristics, they can reduce the risk of overfitting and improve generalization by combining multiple models. Ensemble DL methods are also more robust to noisy or biased data as they reduce reliance on a single model that may have been trained on potentially biased information. Despite the limited number of studies in the literature, average- or weighted-average-based ensemble DL methods are still used owing to their simple structures and ease of application. However, this simplicity has several disadvantages, such as low performance issues resulting from the loss of diversity among predictors, insensitivity to outliers, and the inability to capture complex effects based on the data structure or the characteristics of base predictors. To address these limitations, more sophisticated stacking ensemble DL methods have been proposed. These advanced ensemble techniques can leverage the complementary strengths of diverse DL models, leading to an improved predictive performance in energy consumption prediction. In stacking ensemble architectures, most studies show that ML methods, such as XGBoost, GBM, support vector machine (SVM), and k-nearest neighbor (KNN), can be successfully used to combine multiple DL predictor outputs on specific datasets. However, when these methods are used with high-dimensional datasets, they can encounter challenges, including long training times, overfitting, and generalization issues, which can result in an overall performance degradation. Furthermore, because the base predictors in these models are often complex, overfitting and generalization-related problems inherent in the individual models can further exacerbate these performance declines. To fill this gap in the literature, there is an urgent need to develop effective and robust models within the stacking ensemble DL framework that can minimize the problems associated with current prediction combiners. Designing such robust and high-performing meta-models is crucial for advancing the state-of-the-art research in this area.

In this study, the researchers address the gap in ensemble DL methods with an advanced stacking ensemble method specifically designed for household energy consumption data. In the proposed method, multiple DL models such as RNN, LSTM, BI-LSTM, GRU, and CNN are utilized as base predictors due to the short- and long-term dependencies and complex characteristics of such time series data. Unlike previous studies in the literature, the meta-combiner component of the method employs a configuration that effectively combines multiple DL outputs through Adaptive Neuro Fuzzy Inference System (ANFIS) using a best score transfer approach. The contributions of the proposed method to the literature are as follows.

Combining the scores from the DL-based base predictors using an algorithm that incorporates human learning and inference abilities (ANFIS) has made the proposed method a more robust and dynamic approach compared to similar methods in the literature.

The innovative aspect of the proposed method, also known as its distinctive feature, is its ability to dynamically identify two models with the best score outputs among the base DL predictors and effectively combine these outputs through an optimized architecture. This characteristic of the method allows it to reduce the number of inputs to ANFIS, designing it as a simpler structure as a prediction combiner, while also enabling the effective combination of the best scores through this architecture. Structurally, this contributes significantly to reducing the complexity of the method and enhancing its performance as a prediction result. In addition, the best score effect can minimize performance issues arising from overfitting of base predictors in traditional stacking ensemble methods.

The model combines five robust DL-based methods (CNN, RNN, LSTM, BI-LSTM, and GRU) with the ANFIS architecture. CNNs are effective at feature extraction, while RNN and its variations (LSTM, BI-LSTM, and GRU) are skilled at capturing sequential dependencies in time-series data. This combination of methods enhances the model’s flexibility for energy consumption prediction.

The proposed method offers a new perspective to the literature by illustrating how DL models can be combined more effectively, resulting in substantial benefits.

The proposed method’s comprehensive analysis and comparison with existing DL, ML, and classical EL techniques clearly highlight its strengths and weaknesses, providing a valuable reference for future research.

This novel approach can also be applied to other time-series forecasting issues that have similar structures to energy consumption forecasting, such as water consumption and demand prediction, weather forecasting, and natural gas consumption prediction. Thus, the method’s scope can be expanded, making it suitable for use in various disciplines.

The remaining sections of this article are organized as follows. “Literature Review” provides a comprehensive literature review on energy consumption predictions. “Materials and Methods” presents the datasets used, the structure and components of the proposed method, the DL-based weighted average (DLBWA) ensemble method, DL and ML-based methods, and evaluation metrics. “Experimental Results and Discussion” discusses the experimental results of the proposed method, and the other methods employed for performance verification. The last section interprets the findings and offers recommendations for future studies.

Literature review

The prediction of energy consumption in smart homes and buildings has received significant attention in the literature because of its crucial role in energy resource planning and management. Various methods have been proposed and tested in real-time or on diverse datasets. This section provides an overview of adaptive, ML, DL, hybrid DL, and ensemble DL-based methods relevant to our study.

Adaptive methods aim to dynamically adjust and optimize energy consumption based on real-time data and feedback. Several studies indicate that ANFIS and its combined architectures have been successfully employed as adaptive methods for predicting building electrical energy consumption. The study conducted by Ghenai et al. (2022) utilized ANFIS to predict the energy consumption of educational buildings. The model was tested using publicly available electricity consumption data obtained from smart meters in office buildings in Washington, USA. The results from the experimental studies showed that R correlation coefficients of 0.97951, 0.9854, and 0.96778 were achieved at 0.5, 1, and 4-h periods, respectively. Adedeji et al. (2022) conducted a study comparing the performance of stand-alone ANFIS with a hybrid ANFIS optimized using the particle swarm optimization (PSO) method to predict energy consumption based on climatic factors in a multi-campus institution in South Africa. They validated the performance of both models using a self-collected dataset containing weather information. The experimental studies on four campuses demonstrated that the hybrid PSO-ANFIS model for campus D outperformed all other standalone and hybrid models for campuses A, B, and C, including a root mean square error (RMSE) of 0.147, mean absolute deviation (MAD) of 0.125, and MAPE of 2.89. In a similar study performed by Oladipo, Sun & Adeleke (2023), they proposed an ANFIS model optimized with the modified advanced particle swarm optimization (MPSO) technique to predict electrical energy consumption in a student dormitory at the University of Johannesburg. The study involves comparing this model with hybrid ANFIS models using different optimization algorithms. The models utilize meteorological variables such as wind speed, humidity, and temperature as inputs and electrical energy consumption as output. The proposed MPSO-ANFIS model outperformed other models, achieving the lowest values for RMSE (1.8928 KWh), MAD (1.5051 KWh), and a coefficient of variation (RCoV) of 0.1370 under the split conditions of 0.7. On the other hand, Alam & Ali (2023) presented for predicting energy usage in residential buildings during both normal and COVID-19 periods. Parameter optimization using the PSO method and data training via the subtractive clustering method were incorporated into the proposed method. They compared the performance of MANFIS-2 with stand-alone ANFIS, RF, and LSTM methods. MATLAB simulation results confirmed the effectiveness of MANFIS-2 in residential load prediction, with absolute average error (AVG) values of 1.2396, RMSE of 2.0398, and MAPE of 14.12%. MANFIS-2 surpassed stand-alone ANFIS, LSTM, and RF methods in energy consumption predictions for both pre-COVID-19 and post-COVID-19 conditions. Finally, Barak & Sadegh (2016) developed hybrid ARIMA-ANFIS ensemble models for annual energy consumption in Iran. The results from three hybrid patterns of ARIMA-ANFIS showed that the third hybrid pattern, using the adaptive boosting (AdaBoost) method with Genfis3 ANFIS structure, performed quite better with a MSE value of 0.026%.

Generally, the ability of ML algorithms to capture complex relationships, identify nonlinear patterns, generalize well, and adapt to various data types has made them considerably more effective in addressing a variety of regression problems than conventional methods. In this regard, several commonly utilized ML methods, as well as hybrid approaches, have been successfully employed to predict energy consumption in buildings. In a study conducted by Chammas, Makhoul & Demerjian (2019) a multilayer perceptron (MLP)-based model was introduced to predict the energy consumption of a smart building, including weather information. They compared the proposed model with linear regression (LR), support vector machines (SVM), gradient boosting machines (GBM), and RF methods. The experimental studies showed that the MLP model achieved the most successful result with values R2 of 64%, RMSE of 59.84%, mean absolute error (MAE) of 27.28%, and mean absolute percentage error (MAPE) of 27.09%. In a separate study, Shapi, Ramli & Awalin (2021) compared the performance of SVM, ANN, and k-nearest neighbors (KNN) using data collected from four tenants in a smart building in Malaysia. The findings indicated that each algorithm demonstrated varying performance levels based on RMSE and MAPE metrics for the tenants involved. Notably, SVM performed better than the other methods, achieving RMSE values of 4.75 and 3.59 for tenants A1 and A2, and with MAPE values of 19.09 and 43.97 for tenants B1 and B2. Priyadarshini et al. (2022a) proposed a reliable system for an IoT-based smart home environment using ML-based methods, including ARIMA, SARIMA, LSTM, Prophet, Light GBM, and vector autoregression (VAR). They performed time series analyses on the Internet of Things (IoT)-based smart home (IBSH) dataset and identified ARIMA as the best-performing method with an RMSE of 0.1806, MAE of 0.1491, and MSE of 0.0326 values. In a study by Moldovan & Slowik (2021), the authors explored the prediction of energy consumption for electrical appliances using various regression methods, including RF regression, ExtraTree (ET) regression, KNN regression, DT regression, and a hybrid approach called the multi-objective binary gray wolf optimization (MOBGWO-4ML). They utilized fourteen out of 28 features and found that the MOBGWO-4ML method performed the best, yielding the following values: MAPE of 0.349, RMSE of 0.568, R2 of 0.680, and MAE of 0.279. Ben (2021) performed a comparison study on electricity consumption prediction, applying random tree (RT), LR, SVM, and additive regression (AR) methods to the IBSH dataset. The results indicated that the RT method outperformed the other methods, with an MAE of 0.292, RMSE of 0.73, relative absolute error (RAE) of 50.62, and root relative squared error (RRSE) of 68.6. Priyadarshini et al. (2022b) presented an ensemble method based on DT, RF, and XGBoost for predicting electrical energy consumption. Their analysis, contrasting this ensemble method with DT, RF, XGBoost, and KNN techniques, demonstrated the superior performance of their approach. The error values for the first dataset were 0.000008 (MSE), 0.99999 (R2), 0.00072 (RMSE), and 0.00033 (MAE), while for the second dataset, the values were 0.000002 (MSE), 0.99999 (R2), 0.00078 (RMSE), and 0.00033 (MAE), respectively. In a study conducted by Mocanu et al. (2016), the authors examined the conditional restricted Boltzmann machine (CRBM) and the factored conditional restricted Boltzmann machine (FCRBM) models for time series prediction of energy consumption. They compared the performance of these models with ANN, SVM, and RNN methods using electrical power consumption data obtained from a residential customer. The results showed that the FCRBM method outperformed the other methods across different time periods. The reported RMSE values for FCRBM, ANN, SVM, RNN, and CRBM were 0.8995, 0.7971, 0.1702, 0.73, and 0.7971, respectively.

DL methods, which consist of multiple layers of neural networks, have become crucial for high-performance prediction in residential energy consumption due to their ability to model non-linear relationships. Kong et al. (2019) proposed an RNN model based on LSTM to address short-term load prediction for individual homes. This model was evaluated using a publicly accessible dataset of actual residential smart meter readings and demonstrated superior performance compared to traditional models such as backpropagation neural networks (BPNN), KNN, and extreme learning machines (ELM). The reported MAPE values for the LSTM model were 44.39%, 44.31%, and 44.06% at 2, 6, and 12-time steps, respectively. Das et al. (2020) carried out a study using the DL methods based on LSTM, Bi-LSTM, and GRU to predict occupant-specific miscellaneous electric loads. The performance of these models was tested on a range of plug-in loads associated with different devices. This investigation relied on data gathered from each sensor linked to the individual appliances. Findings indicated that both the Bi-LSTM and GRU models outperformed the LSTM model as the prediction interval extended. Cordeiro-Costas et al. (2023) undertook a comparative analysis to identify the most effective prediction model for predicting electricity consumption in single-family homes across the United States. The study compared ML methods, including RF, SVR, XGBoost, and MLP with DL-based methods like LSTM and 1-D convolutional neural networks (CONV-1D). Findings revealed that the LSTM method surpassed all other models, achieving a normalized Mean Bias Error (MBE) of −0.02% and a normalized RMSE of 2.76% on the validation dataset. For the test dataset, the LSTM model recorded a normalized MBE of −0.54% and a normalized RMSE of 4.74. Marino, Amarasinghe & Manic (2016) developed a novel method based on deep neural networks, especially LSTM for predicting energy load. They used two types of LSTM models: stand-alone LSTM and LSTM-based sequence-to-sequence (S2S) architecture. Both methods were tested on individual household electricity consumption data. The experimental results showed that the stand-alone LSTM did not perform well with 1-min resolution data, but it achieved satisfactory performance with 1-h resolution data. On the other hand, the LSTM-based S2S demonstrated successful performance for both resolutions, with RMSE values of 0.625 for the 1-h resolution dataset and 0.667 for the 1-min resolution dataset. Kim & Cho (2019a) proposed a DL-based model based on the autoencoder to predict energy demand in various situations. The model includes a projector that identifies a suitable state for a given situation and a predictor that estimates the energy demand from the defined state. In the experiments using the residential electricity power consumption data of 5 years, LR, DT, RF, MLP, LSTM, and stacking long short-term memory network (SLSTM) methods were utilized to evaluate the performance of the proposed model. The results obtained from experimental studies on datasets with different time resolutions of 15, 30, 45, and 60 min showed that the proposed model outperformed traditional models. This model obtained its highest performance from a 15-min periodic analysis, with an MSE of 0.2113, MAE of 0.2517 and MRE of 0.3625.

Hybrid DL approaches are gaining traction for predicting residential energy usage, thanks to their precision and the capacity to integrate various algorithm strengths. They exhibit adaptability to lifestyle data, versatility in managing time series, and have demonstrated effectiveness in a variety of contexts. Consequently, there has been a significant increase in recent research on residential energy consumption using these methods. Almalaq & Zhang (2019) proposed a new hybrid energy prediction approach combining the genetic algorithm (GA) and LSTM. This algorithm optimizes the objective function by considering the time window delays as well as the hidden neurons within the LSTM framework. The effectiveness of the GA-LSTM model was assessed for short-term predictions using data sourced from both residential and commercial buildings. The results obtained through a 10-fold cross-validation approach demonstrated that the proposed GA-LSTM method exhibited better performance than the traditional methods, achieving an RMSE of 0.213, coefficient of variation (CV) of 19.56%, and MAE of 0.074 for the first scenario. In parallel, for the second scenario, the GA-LSTM approach again exceeded the conventional methods with an RMSE of 0.43, CV of 8.38%, and MAE of 0.26. Kim & Cho (2019b) developed a hybrid CNN-LSTM neural network model that can effectively extract temporal and spatial features to predict residential energy consumption. They conducted experimental studies on four scenarios representing the individual household power consumption (IHPC) data arranged at minute, hourly, daily, and weekly resolutions. The performance comparison of the proposed method was made with LR and LSTM methods. The findings from all scenarios showed that the CNN-LSTM neural network model outperforms the LR and LSTM methods in terms of prediction accuracy. For the scenario with hourly resolution, the reported performance metrics for the proposed model were MSE = 0.3549, RMSE = 0.5957, MAE = 0.3317, and MAPE = 0.3283. Khan et al. (2020a) conducted a study on various DL-based prediction models and proposed a new hybrid model called CNN-LSTM-AE for predicting electricity consumption in residential and commercial buildings. The performance of the proposed model was compared with that of the CNN, LSTM, CNN-LSTM, and LSTM-AE models. The results of the study showed that in terms of the RMSE, MAE, and MSE metrics, the CNN-LSTM-AE model performed better than the other models for both residential and commercial buildings. For daily resolution data, the performance of the proposed hybrid model was summarized as follows: RMSE = 0.02, MAE = 0.01, and MSE = 0.0004 for residential buildings and RMSE = 0.01, MAE = 0.01, and MSE = 0.0003 for commercial buildings. Sajjad et al. (2020) proposed a hybrid model combining CNN and GRU DL methods to accurately detect prior electrical energy consumption in residential homes and tested this model on publicly available appliance energy prediction (AEP) and IHPC datasets. The performance of the proposed model was compared with that of the LR, DT, SVR, CNN, LSTM, and CNN-LSTM models. The results of the experimental studies demonstrated the applicability of the proposed hybrid CNN-GRU model more successfully in the real world than the basic models for residential homes. Specifically, for the IHEPC dataset, the reported performance results of the model were an RMSE of 0.47, MAE of 0.33, and MSE of 0.22. For the AEP dataset, the reported performance results were an RMSE of 0.31, MAE of 0.24, and MSE of 0.09. Zang et al. (2021) developed a novel day-ahead residential load prediction method that incorporated feature engineering, pooling, and a hybrid DL model. The hybrid model combined LSTM with a self-attention mechanism (SAM). The model was verified using a practical dataset that included multiple residential users. The experimental results demonstrate that the performance of the proposed method varies depending on the data pool from different groups of users. The best performance was achieved using a four-user data pool with 49-time steps and 24 feature sizes. The performance of the model, in terms of MAPE, MAE, and RMSE, was reported to be 15.33%, 56.86, and 82.50 kW, respectively. Fang et al. (2021) proposed a hybrid deep transfer learning strategy that combines LSTM and a domain-adversarial neural network (DANN) to address the issue of limited data in training models for building energy prediction. They conducted experimental studies by using different models to verify the performance of the proposed method. The results showed that the proposed strategy significantly improves building energy prediction compared with models trained solely on target, source, or both target and source data without transfer learning. Syed et al. (2021) developed a hybrid DL model that combines the advantages of LSTM, BI-LSTM, and RNN for energy consumption prediction accuracy in smart buildings. They compared the proposed model with other commonly used hybrid models, including CNN-LSTM, ConvLSTM, LSTM encoder-decoder model, and SLSTM. The evaluation was conducted on two real energy consumption datasets from smart buildings. The results revealed that the proposed model outperformed the LSTM-based hybrid models, yielding MAPE values of 2.00% in the first case study and 3.71% in the first and second case studies, respectively. Moreover, for the multi-step week-ahead daily forecasting, the proposed model has an improvement of 8.368% and 20.99% in MAPE compared with the LSTM-based model.

On the other hand, ensemble DL methods, as in hybrid methods, have recently become more prominent in residential energy consumption prediction than other methods due to their improved prediction accuracy as a result of combining the strengths of different methods. Khan et al. (2021a) presented an ensemble approach that combined LSTM and KF methods to predict short-term energy consumption in a multifamily residential building in South Korea. The proposed model was compared with the stand-alone LSTM and KF methods and successful ML-based methods such as RF, AdaBoost, XGBoost, and GB. The results demonstrate that the proposed ensemble model performed better than the other methods, with an MAE of 373.580, RMSE of 487, MAPE of 3.264, and R2 of 0.966. Khan et al. (2021b) developed a spatial and temporal ensemble model for the prediction of short-term electricity consumption. This model incorporates two deep learning models, LSTM and GRU, and employs cluster analysis with the k-means algorithm to discover apartment-level electricity consumption profiles. The proposed model was tested using high-resolution electricity consumption data from smart meters at both building and floor levels. Its effectiveness in predicting energy consumption was compared with those of widely used ML and DL methods. The experimental results confirmed that the model successfully captured the sequential behavior of electricity consumption, demonstrating superior building and floor-level prediction performance, with the lowest MAPE of 4.182 and 4.54, respectively. Hadjout et al. (2022) proposed an ensemble DL method that combines LSTM, GRU, and TCN models to accurately predict long-term electricity consumption for the Algerian economic sector. The researchers used the weighted average technique to combine the outputs of DL models, and the weight coefficients were obtained using a grid search (GS) algorithm. The study, which utilized the Bejaia High Voltage Type A (HVA) consumer energy consumption dataset, revealed that the proposed ensemble model performed sufficiently well to meet the company’s requirements and better than the prediction of stand-alone models. The statistical significance of the model was evaluated using the Wilcoxon signed-rank test, which showed a p-value less than 0.05, for all paired combinations. Sujan Reddy et al. (2022) created two stacking ensemble models for electrical energy consumption prediction, combining LSTM, EvTree, RF and NN basic predictor outputs through XGBoost and GBM, and they tested these models on a standard dataset containing 500,000 energy consumption data. The results obtained from their study showed that the stacking model with the XGBoost combiner reduced the training time of the second layer by a factor of approximately 10 and improved the RMSE by 39%. Guo et al. (2024) developed a novel stacking ensemble method that combines the outputs of ANN, XGBoost, LSTM, Stacked LSTM, and BI-LSTM models through a Lasso regressor. They applied this method to two real-world datasets and achieved better accuracy with MAPE values of 5.99% and 7.80%, respectively, compared to similar methods. Irfan et al. (2023) proposed an ensemble model combining the RNN and LSTM methods to effectively predict multi-region hourly power usage. The performance of this model was evaluated using a dataset collected from thirteen different regions between 2004 and 2018. The results obtained from the experimental studies demonstrated that the model performed better than other base methods. Some statistical results, such as the RMSE, R2, and MAPE for the proposed model, were reported as 0.1658, 0.9731, and 1.9238, respectively.

The literature review results discussed above show that most AI-based methods, including adaptive, ML, DL, hybrid DL, and ensemble DL methods, can produce successful prediction results on the specific datasets to which they are applied. Among them, hybrid and ensemble DL methods outperform standalone and adaptive methods. Therefore, hybrid methods with an adjustable parameter structure and a combination of various DL-based methods have been widely used in numerous energy consumption prediction studies. Ensemble DL methods that combine the different strengths of multiple DL models have gained popularity recently due to the high-performance advantages they provide in this area. Average or weighted average techniques have been used in a limited number of studies to combine the outputs of base DL predictors owing to performance issues from their linear structures arising from fixed weights. Instead, stacking ensemble DL methods that use ML techniques as meta-combiners capable of successfully modeling variations in the outputs of base predictors are widely preferred due to their high-performance effects. However, along with the long training times of ML combiners, overfitting and generalization issues in high-density datasets can often lead to performance drops across the entire model. Accordingly, the need for a successful prediction process is to introduce a structurally simpler and functionally more effective meta-combiner that can minimize these issues. Based on this requirement, this study proposes a novel stacking ensemble DL method. It combines the output of five DL-based methods proven to be effective in time-series problems through a simplified ANFIS architecture with the best score transfer as a meta-combiner.

Materials and Methods

This section outlines the materials and methodologies used in this study. The researchers first provided a detailed explanation of the datasets used for energy consumption prediction and their characteristics. They then thoroughly described the proposed deep learning-based novel ensemble (DLBNE) method with best score transferred-adaptive neuro fuzzy inference system (BSTANFIS), including its construction, constituent units, and the roles of these components. Subsequently, the researchers presented the ML and DLBWA ensemble methods, which were used for comparison with the proposed DLBNE approach. Finally, an overview of the performance metrics applied to assess the effectiveness of the different models was presented.

Dataset

The method proposed in this study incorporates DL algorithms to capture the spatial relationships among different features and to learn long- and short-term dependencies. Accordingly, the selection of the dataset focused on two key aspects to effectively showcase the performance of these algorithms: (1) a comprehensive feature map combining various features and (2) sufficient data density to improve generalization ability. In addition, it is important to consider that the dataset contains real-world data when selecting the dataset because it is crucial to test the performance of the proposed method in practical applications. In this context, the IBSH and IHPC datasets, including real-world data on household energy consumption, and the homestead city electricity consumption (HCEC) dataset combining different features on city energy consumption were used in the experimental studies.

IoT-based smart home (IBSH) dataset

The IBSH dataset, available on Kaggle website (Singh, 2018), contains the power consumption data in Kilowatts and weather information measured at 1-min intervals over a span of 350 days. The dataset has 5,003,911 data points in total and is made up of 32 features. A total of 18 of these features represent power consumption, 13 contain weather information, while one feature includes time information, as shown in Table 1.

| Feature labels | |||

|---|---|---|---|

| Time | Microwave | Fridge | Pressure |

| Use | Living room | Wine cellar | windSpeed |

| Gen | Solar | Garage door | cloudCover |

| House overall | Weather | Kitchen 12 | windBearing |

| Dishwasher | Temperature | Kitchen 14 | dewPoint |

| Furnace 1 | Humidity | Kitchen 38 | precipProbability |

| Furnace 2 | Visibility | Barn | precipIntensity |

| Home Office | apparentTemperature | Well | Summary |

Each column in the dataset represents the value changes labeled with the features in Table 1. The data contained in the columns, apart from the house overall column, serves as inputs for energy consumption prediction methods. The house overall column, which gives the total power consumed by all devices, is considered as the real data class to be used in training and testing processes.

Individual household power consumption (IHPC) dataset

This dataset, available on UCI Machine Learning Repository (Hebrail & Berard, 2006), consists of power consumption data collected from a single house in Sceaux, France, over a 47-month period from December 2006 to November 2010, measured at 1-min intervals. Although 25,979 measurements are missing from the 2,075,259 measurements in the dataset, this data can be easily addressed during the pre-processing phase to prepare it for prediction. In addition to date and time features, the dataset includes variables related to the global minute-averaged active power (in kilowatt), the global minute-averaged reactive power (in kilowatt), the minute-averaged voltage (in volt), and the minute-averaged current intensity (in ampere). It also covers variables relevant to the active energy consumption in watt-hours obtained from three sub-meters (Sub-metering-1, Sub-metering-2, Sub-metering-3) in various areas of the house. The active energy consumption measurements include the first, second, and third sub-meters used for kitchen, laundry, and climate control systems. A new feature representing total energy consumption per minute (TEC) was later added to the dataset in addition to the existing features to be used as a target variable in the training and testing phases. The equation for obtaining this feature is as follows.

(1)

Homestead city electricity consumption (HCEC) dataset

The HCEC data is a publicly available dataset on Kaggle website (Jain, 2020) involving hourly electric energy consumption from Homestead city in the United States. This dataset contains 22,201 data with eighteen variables serving as features. Among these variables, two represent date and time, one denotes the target energy consumption, while the remaining ones include external factors related to weather information such as temperature, humidity, rainfall, and wind speed.

Deep learning-based novel ensemble method with best score transferred-ANFIS

The DLBNE method proposed in this study incorporates the strengths of DL-based methods with the adaptive and fuzzy logic capabilities of ANFIS to achieve high-performance energy consumption prediction. As this method combines the prediction scores of base DL predictors through a meta-combiner, it represents a novel stacking-type ensemble method. The choice of base predictors for the method is based on the structure of the data to be used. Electricity energy consumption data are complex time series containing both short- and long-term sequential dependencies. Therefore, the researchers leverage five well-established DL models that have proven successful in this area: RNN, LSTM, BI-LSTM, GRU, and CNN. The RNN model is employed to capture the short-term sequential dependencies in the data. LSTM, BI-LSTM, and GRU models are used to effectively model long-term sequential dependencies. Additionally, the CNN model is incorporated to identify local patterns and detect anomalies in the electricity consumption data. The most distinguishing feature of the proposed method apart from existing stacking ensemble DL methods is its use of a meta-combiner that merges the outputs of base predictors through the best score transfer approach with the ANFIS architecture. The ability of ANFIS to adaptively tune its parameters based on the problem and generate new inferences from historical data, similar to human reasoning, makes it an effective component for combining the nonlinear scores of DL predictors in the method. The best score transfer approach, on the other hand, simplifies ANFIS structurally by reducing the number of inputs. This structure brings with it two important contributions: it shortens the training time and minimizes the performance issues arising from overfitting in the base predictors. Consequently, the ANFIS architecture with the best score transfer becomes a more robust and effective combiner than other meta-combiners used in stacking ensemble methods in terms of performance.

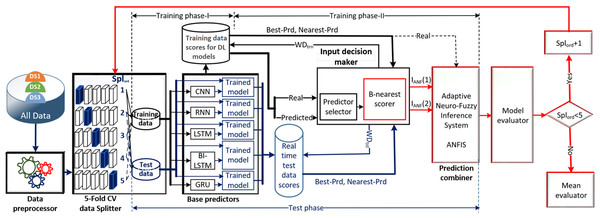

Figure 1 illustrates the schematic diagram of the DLBNE method with BST-ANFIS, which is proposed to create a robust and efficient model for energy consumption prediction. As seen from the schematic diagram, it is structurally composed of seven units. Five of these units perform the main functions, while the remaining two measure the method’s performance. In this structure, the data preprocessor, five-fold CV data splitter, base predictors, input decision-maker, and prediction combiner carry out the main functions of the proposed method, while the model evaluator and mean evaluator are used to assess its performance. The data preprocessor enhances data quality through cleaning, fitting, and normalization processes. The five-fold CV data splitter obtains cross-validation datasets that include training and testing data to verify the accuracy of the proposed method. The base predictor individually determines the prediction scores of DL-based methods using data from the five-fold CV data splitter. The input decision-maker executes an approach that identifies the best scores to be transferred from the base predictors to the prediction combiner (ANFIS). As a result, the two best-scoring inputs to be sent to the prediction combiner are obtained through this approach, utilizing the predictor selector and B-nearest scorer procedures. The prediction combiner synthesizes these scores through ANFIS, resulting in the final prediction output. Among the last two units used for performance evaluation, the model evaluator assesses the performance of the method for each fold, while the mean evaluator computes the overall performance based on the findings of the five-fold performance.

Figure 1: The schematic diagram of the DLBNE method with BST-ANFIS.

The operation of the DLBNE method with BST-ANFIS can be explained in three phases: training phase I, training phase II, and testing phase. In training phase I, also referred to as the preparation phase, the base predictors are trained using active training data sourced from the five-fold CV data splitter. During this process, the prediction scores for this data, along with their corresponding real labels, are recorded in the training data score database. At the end of this phase, the DL predictor that demonstrates the lowest error value—assessed through the MAE of the base predictors—is selected as the first best-scoring input to be applied to the prediction combiner. The training phase II focuses on the ANFIS training process. Here, the data stored in the training data scores database serves as training input. The ANFIS training utilizes the output score from the selected DL predictor identified in Training Phase I as its first input. The second input is derived from the output of the DL predictor that has the lowest absolute error associated with the first input at each sampling loop. The final phase, the testing phase, involves obtaining predictions for energy consumption and evaluating performance results on test data provided by the five-fold CV data splitter. Initially, active fold test data is applied to the trained DL models in the base predictor. The resulting scores from these base predictors for each sampling loop are then transferred to the input decision-maker. Similar to Training Phase II, this unit identifies the two best-scoring inputs for ANFIS by applying MAE and absolute error (AE) criteria to the scores produced by the DL predictors for the test data. These selected inputs are dynamically fed into ANFIS during each sampling loop, culminating in a combined score that represents the final energy consumption prediction. After completing one sampling loop, the split data index (Splord) is incremented, and identical operations are repeated for subsequent fold data across all phases. When this index reaches five, the average performance for the DLBNE method with BST-ANFIS is calculated using the mean evaluator. The details about the proposed method are described in the following subheadings.

Data preprocessor

The data preprocessor is a critical unit used to eliminate the negative impacts of missing, incorrect, and inconsistent values in the datasets. This ensures the data can be easily understood and interpreted by predictive models. In this context, the preprocessor performed various data preprocessing tasks such as data cleaning, organization, removal, and normalization on the datasets. The study utilized three datasets-HCEC with hourly resolution, and IBSH and IHPC with minute-resolution. To harmonize the resolutions, the preprocessor first rearranged the IBSH and IHPC datasets into hourly format to match the HCEC dataset. The next processing steps of the preprocessor can be summarized as follows for each dataset: For the IBSH dataset, the preprocessor created two new columns by merging related features (Furnace 1 & 2, Kitchen 14 & 38). It also removed the “kW” unit from column names and deleted string-type columns. Invalid “cloudCover” values were replaced with the next valid entry, and the time column was converted to a standardized datetime (Y-m-d H-M-S) format. Additionally, one of the same highly correlated columns (use, house overall, gen Solar) were pruned to avoid redundancy. In the IHPC dataset, the date and time columns were combined into a single time column. Missing values were imputed using the column averages. In the HCEC dataset, the index column was deleted. The “Homestead” prefix was also removed from column names, and the missing values were filled with the average value of the respective column. Finally, the preprocessor completed the process by normalizing all column values across the datasets.

5-fold CV data splitter

The five-fold CV data splitter is a unit that helps to check the effectiveness and reliability of the DLBNE method with BST-ANFIS across different datasets. It applies the k-fold cross-validation technique to create the training and testing datasets. Several key parameters govern the process. First, the k parameter was set to five, meaning that the cross-validation used five folds or subsets of the data. The second parameter, called random seed, was set to 42. This ensures that the dataset is randomly divided into folds in a consistent manner during the run of the algorithm, which helps reproducibility. In addition, there is a shuffle parameter set to “True,” meaning that the data will be randomized before starting the cross-validation, reducing any bias from the original order of the data. Using these parameters, the five-fold CV data splitter shuffled the data, split it into five folds, and then performed the k-fold cross-validation process. Each fold takes turns as the testing data, whereas the others are used for training the model. This was repeated for each fold, and at the end of the process, the unit created CV datasets with five training and testing sets.

Base predictors

This unit individually obtains the scores of DL-based methods for each CV dataset. It includes five DL-based methods: CNN, RNN, LSTM, Bi-LSTM, and GRU. The basic descriptions of these methods are presented below in subheadings.

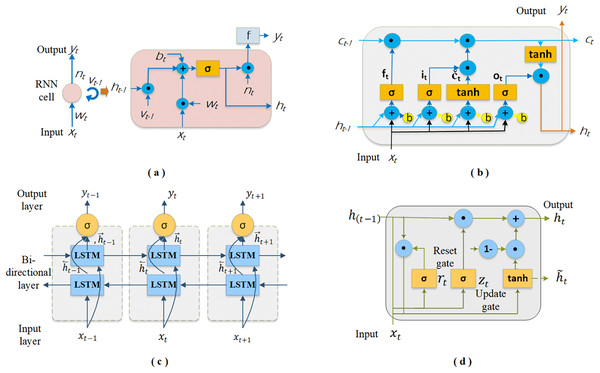

RNN: It is an artificial neural network-based method that can learn patterns and relationships in sequential data by utilizing historical information. This method stands out from other neural networks because of its hidden states, which serve as memory components, preserving some of the information from the sequence that has been processed up to that point (Song et al., 2023). The RNN continuously updates its hidden states with each new input and preceding hidden states, making decisions that depend on both. This feature makes it an effective method for solving problems in natural language processing, anomaly detection, text and sentence generation, and time series analysis. As illustrated in Fig. 2A, RNN cells are typically shown as neural network nodes with a loop, symbolizing the transfer of information from one sequence step to the next. Each cell, indicative of the hidden states, operates as a function that merges the current input with prior hidden states to produce the output, which is then derived by processing the relevant state information through a function. Given this information, the relationship between the input and output of an RNN cell can be described by Eqs. (2)–(6). The following equations represent the input ( ), network weights and thresholds ( and, ), aggregation information ( ), hidden state information ( ), and output ( ).

Figure 2: The schematics of the DL-based four base predictors: (A) RNN cell, (B) LSTM cell, (C) Bi-LSTM and (D) GRU cell.

(2)

(3)

(4)

(5)

(6)

Sigmoid and ReLU are the functions most frequently utilized for the hidden state (σ(.)), while SoftMax is used for output (f(.)). The symbol ⊙ denotes pointwise multiplication. Training RNNs with the backpropagation algorithm results in the vanishing gradient problem, similar to that in conventional neural networks. Consequently, this issue hinders these networks’ ability to grasp and learn long-term dependencies present in sequential data (Jozefowicz, Zaremba & Sutskever, 2015).

LSTM: It is a recurrent deep network architecture specifically designed to address the vanishing gradient problem in RNNs (Sunjaya, Permai & Gunawan, 2023). These networks excel in learning long-term dependencies, which standard RNNs often struggle with, thereby providing efficient solutions for complex time series data. In general, an LSTM layer in an LSTM network comprises a series of memory blocks that are interconnected in a recurrent manner. Each contains memory cells that are also recurrently connected, along with four key components, as depicted in Fig. 2B: input gate ( ), forget gate ( ), output gate ( ), and cell state ( ). In this structure, the input gate contributes significantly to updating the cell state by determining which new pieces of information will be stored. The forget gate determines which information from the previous steps in the sequence should be discarded or retained depending on the forget vector. The output gate is responsible for defining the next hidden state and identifying information that will come from the cell state (Fayaz et al., 2023). The cell state is a communication line that carries meaningful information across cells to make predictions. Accordingly, the basic equations defining the information flow in an LSTM cell are as follows.

(7)

(8)

(9)

(10)

(11)

(12)

Here , , are the weight matrices for the input gate, , , are the weight matrices for the hidden state, and , , are the bias terms for the input gate, forget gate, output gate, and cell state operations, respectively. The symbols σ and tanh correspond to the sigmoid and hyperbolic tangent activation functions.

Bi-LSTM: The method developed by Schuster & Paliwal (1997) is a specialized version of the LSTM network. BI-LSTM is a robust approach for capturing temporal patterns and generalizing unseen data. Its popularity has surged because it effectively addresses many ML problems. We can see its successful use in various fields, such as natural language processing, speech recognition, audio classification, and time series prediction. As shown in Fig. 2C, it consists of two LSTM layers that allow the input to flow forward and backward (Xia et al., 2020). This setup enables it to obtain model output by evaluating historical and future information. Thus, the network can better understand the input sequence by capturing both contextual information. Furthermore, it solves the gradient and information loss issues that often arise in traditional RNN layers during training (Wan et al., 2022). In Bi-LSTM, the final output ( ) is obtained by combining the forward LSTM hidden state ( ) and backward LSTM hidden state ( ) based on the input data sequence ( ). Equations (13)–(15) give the formulas for calculating , , and outputs.

(13)

(14)

(15)

Here, LSTM (•) symbolizes the LSTM equations given in Eqs. (7)–(12), corresponds to the forward LSTM weights, represents the backward LSTM weights, and defines the output layer bias of the Bi-LSTM.

GRU: The method proposed by Cho et al. (2014) is another variation of recurrent networks endowed with memory capabilities. GRU, like LSTM networks, possesses gated mechanisms that enable it to filter information, thereby allowing the retention of significant data in long-term memory for subsequent retrieval, as needed. It employs fewer parameters and features a more streamlined architecture than LSTM, which can result in expedited training times. However, a reduced number of parameters does not always guarantee a better performance. The decision between the GRU and LSTM is contingent upon the specific task at hand and the dataset utilized. As illustrated in Fig. 2D, a GRU cell incorporates two gating mechanisms that offer a flexible approach to regulate the information flow in the network: the reset gate and the update gate. Depending on the input data, the reset gate determines how much past information should be discarded, thus enabling the dynamic selection of pertinent details from previous states for current processing. The update gate regulates the preservation and transfer of prior information to the next time step, striking a balance between new inputs and existing memory. Notably, the GRU lacks an additional memory cell for storing information; instead, it solely governs data in its cell state unit (Gao et al., 2020; Tang et al., 2023). The mathematical equations corresponding to GRU cell are as follows.

(16)

(17)

(18)

(19)

(20)

In the abovementioned equations, is the update gate, is the reset gate, and is the hidden state. The symbols used in the equations are as follows: , , and represent weight matrices, while and are bias vectors. In addition, the symbols σ and tanh represent the sigmoid activation function and the hyperbolic tangent activation function.

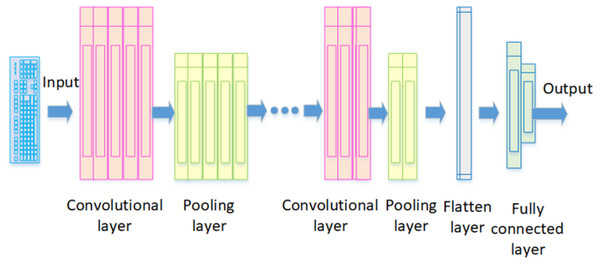

CNN: The method proposed by Lecun, Bengio & Hinton (2015) is a deep feedforward neural network that is crucial in extracting spatial features for time series data, image recognition, and classification problems. Just like how a neuron in the brain processes information and sends it throughout the body, the artificial neurons in CNNs do the same with inputs and produce outputs (Happiest Minds, 2023). Figure 3 illustrates the architecture of a fundamental CNN. It comprises a convolutional layer, pooling layer, flatten layer, and fully connected layer. In this structure, the convolutional layer extracts local features from the input data using filters, also known as kernels. Each filter is designed to identify and capture specific features or patterns in these data. The pooling layer then reduces the dimensions of the feature map created by the convolutional layer, keeping the essential information. Maximum pooling is the most common technique used here; it picks the highest value in a feature map as the most important feature for extraction. The flatten layer serves as an indispensable element that enhances overall neural network functionality; it transforms multidimensional data from both convolutional and pooling layers into a one-dimensional vector before relaying results to fully connected layers. These fully connected layers then process these feature vectors to allocate specific output values corresponding to input data. Finally, these values go through functions like Sigmoid and SoftMax to create probability distributions, helping to determine the class of the input data (Zheng et al., 2023).

Figure 3: The architecture of a fundamental CNN.

The output of CNN ( ) is mathematically represented as given below.

(21)

In the aforementioned equation, defines the input sequence, and denotes the kernel weight. The symbols , , and represent the length of the input sequence, the feature dimension of the instances, and the convolutional kernel, respectively. The representation of the convolutional layers in the CNN architecture is expressed through Eqs. (22) and (23).

(22)

(23)

Here, Eq. (22) is the activation function, and Eq. (23) is the ReLU function. The symbol in the activation function represents the kernel weight.

Input decision-maker

The input decision maker unit is responsible for selecting the two best-scoring inputs from the outputs of the base predictors, which are then forwarded to the prediction combiner (ANFIS), all in pursuit of producing a high-performance prediction result. This unit accomplishes its objective through two fundamental procedures: a predictor selector and a B-nearest scorer. The predictor selector procedure determines the first best-scoring input to be sent to the prediction combiner, while the B-nearest scorer procedure finds the second best-scoring input.

In this process, the data array derived from the output of the base predictor unit during training phase I is categorized into two distinct groups: real data array ( ) and predicted data array ( ). In order to select the first best-scoring input, the predictor selector first applies the MAE criterion, as described in Eq. (24), to these data arrays to calculate the average error score for each DL predictor.

(24)

Here, is the jth value of the real data array for training phase I, is the jth prediction score of the ith predictor for training phase I, and is the total number of instances for the same phase. Upon obtaining the MAE measurements, the predictor selector identifies the kth DL predictor that produces the lowest measurement using Eq. (25) and assigns it as the first best-scoring input ( ) to the predictor combiner for both training phase II and testing phase.

(25)

In the equation, is the function that finds the first best-scoring input based on the prediction scores of DL predictors for the training phase I and the MAE measurements for these predictors ( ), and is the scores of the best predictor produced by this function. In order to determine the second best-scoring input for the prediction combiner, the B-nearest scorer performs a procedure similar to that of the predictor selector, which chooses the first best-scoring input. The differences between them lie in the operating phases, the criteria used to determine the best score, and the data source referenced when applying this criterion. In fact, this input corresponds to the prediction result that is closest to the DL predictor representing the first best-scoring input, dynamically in each sampling loop of training II and testing phases. Accordingly, in each sampling loop, the B-nearest scorer first defines the score of the DL predictor representing the first input of the prediction combiner as a reference for determining the score to be assigned as the second best-scoring input to this unit. Then, it obtains the absolute errors (AEs) of the other DL predictors with respect to this reference, using Eq. (26).

(26)

Here, denotes the index number of the predictors outside the best predictor . represents the absolute error of the nth predictor in the jth sampling loop, while refers to the score of the DL predictor assigned as the first best-scoring input to the prediction combiner in the jth sampling loop. Finally, the B-nearest scorer assigns the DL predictor score with the lowest AE measurement, derived from Eq. (27), as the second best-scoring input to the prediction combiner.

(27)

Here, the output scores of various predictors can be allocated to the second best-scoring input of the prediction combiner in each sampling loop based on AE measurements. Consequently, this input demonstrates dynamic behavior, with its value changing throughout the process.

Prediction combiner

In the proposed method, the prediction combiner obtains the final result by combining two best-scoring predictor outputs that come to its input. The literature review results show that, because of the dynamic structure of electrical energy consumption data from different sources, average and weighted average techniques fail to provide the desired performance in this process. Instead, ML-based meta-combiners, such as XGBoost, GBM, SVM, and KNN, have been successfully applied in many studies. However, these combiners can face several performance issues, particularly in high-dimensional datasets, owing to overfitting and generalization problems. The fact that ANFIS architectures combine fuzzy inference systems, which are known to be effective in modeling uncertainties, with adaptive neural networks that optimize their own parameters, can make it an effective combiner for solving these problems. In particular, a simplified ANFIS configuration with fewer rules and a hybrid learning mechanism can perform better in the combination process by eliminating overfitting and generalization issues.

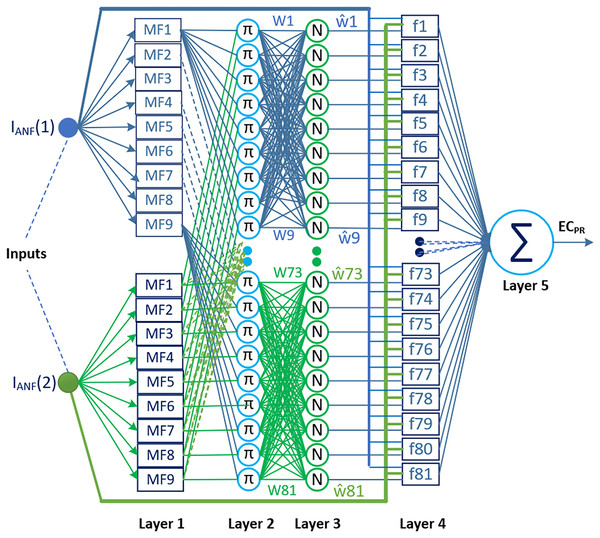

Two types of ANFIS architectures have been proposed in the literature: Type-1 ANFIS and Type-2 ANFIS. One of them, Type-2 ANFIS, is an architecture proposed to better manage the uncertainties caused by fuzzy sets in the Type-1 ANFIS, which is also commonly referred to as ANFIS (Dabiri Aghdam et al., 2022; Alberto-Rodríguez, López-Morales & Ramos-Fernández, 2024). The ability to model uncertainties appears to be an advantage of this architecture in terms of performance; however, the computational complexity and additional resource requirements in terms of training the model constitute the most significant limitations in practice (Chen et al., 2017). Therefore, Type-1 ANFIS is more widely used than Type-2 ANFIS in current applications. In this study, the proposed method utilizes ANFIS (Type-1 ANFIS) as the prediction combiner. This choice was made to avoid further increasing the computational burden imposed by the DL models, and also due to the successful performance and relatively low computational complexity of Type-1 ANFIS for this type of problem. Figure 4 shows the ANFIS architecture used as a two-input and one-output prediction combiner in the proposed method.

Figure 4: The ANFIS architecture used in the proposed method as a two-input and one-output prediction combiner.

Determining the most appropriate ANFIS configuration for the proposed method is crucial for reducing complexity and enhancing model performance. In this regard, we focused on specifying the parameters that affect the ANFIS configuration, namely, the number of inputs, sets used for each input and type of membership function (MF). The selection of the shape or type of MF is specific to the characteristics of the problem. Therefore, in most application studies, the type of MF is determined through trial-and-error experiments. Triangular and Gaussian MFs are commonly preferred in literature reviews examining these processes. Triangular MF provides an ideal solution for systems requiring simple and fast solutions, while Gaussian MF offers more stable results for complex, nonlinear, and noisy systems (Wu, 2012; Sadollah, 2018). Similar to the studies in the literature, the parameters affecting the ANFIS configuration in this study were determined through experimental trials. Under 0.7 data splitting conditions, experiments were conducted on sampling data from the IBSH dataset by varying the number of inputs from two to four and the number of membership functions from three to nine, using triangular, trapezoidal, Gaussian, and Gaussian2 MFs. The evaluations based on the RMSE performance and structural simplicity indicated that the ANFIS architecture with two inputs, nine membership sets, 81 rules, and Gaussian MFs, achieving an RMSE of 0.000675278, was the most suitable configuration for the proposed method. This architecture is structured into five layers, as depicted in Fig. 4.

Layer 1, also referred to as the fuzzification layer, verbalizes the input information and computes the membership degree for associated linguistic labels. The rectangles in this layer represent the adaptive nodes. Each node is verbally labeled, and its defined membership function decides the membership degree of the input data with that particular label. The equation that determines the output of a node defined by Gaussian membership functions in this layer is as follows:

(28)

Here, defines the output of the jth node for the ith input, and denotes the jth membership function for the ith input. and are the formal parameters that shape the jth membership function.

Layer 2 consists of constant nodes labeled with π. These nodes calculate the firing strengths of rules in the ANFIS. The firing strengths represent how much each rule contributes to the overall system output. The node outputs are determined by multiplying the pairwise membership values from the previous layer. Alternatively, the AND operator can also be used instead of the multiplication operation to compute the node outputs.

(29)

Here, the is the output of the kth node in Layer 2, and is the firing strength of the kth node.

Layer 3 normalizes the firing strengths from Layer 2 using constant nodes labeled with . Therefore, this layer is also referred to as the normalization layer. The output of the kth node in the normalization layer is calculated as follows.

(30)

Here, represents the output of the kth node in Layer 3, while denotes the normalized firing strength for the kth node in the same layer. The denominator indicates the total sum of firing strengths across the 81 nodes in Layer 2.

Layer 4, also known as the defuzzification layer, involves multiplying the normalized firing strengths from Layer 3 with the rule outputs represented by a first-order linear polynomial equation. The rule structure for the proposed prediction combiner ANFIS is given below.

(31)

The symbols F1 to F9 represent the antecedent parameters of the rules for inputs, while those f1 to f81 denote the consequent parameters of the first-order polynomial rules. The output of the kth adaptive node ( ), which determines the contribution of the rules in this layer to the total output, is obtained by Eq. (32).

(32)

Here, the variables , and are the set of consequent parameters for the kth rule.

Layer 5, called also as the output layer, consists of a single node marked with the ∑ symbol. This node sums up all the rule outputs from Layer 4 to produce the final ANFIS output. The process can be mathematically expressed as follows.

(33)

The expression in the equation represents the sum of the 81 defuzzified rule outputs from Layer 4.

The operation of ANFIS can be explained in two separate phases: training and testing. During the training phase, the input (antecedent) and output (consequent) parameters of the fuzzy rules are adjusted using backpropagation or a hybrid learning algorithm (Zhou, Herrera-Herbert & Hidalgo, 2017). This study employs a hybrid learning algorithm, enabling the ANFIS network to effectively update its parameters. The algorithm manages the training process using two well-known methods: least-squares estimation (LSE) and gradient descent (GD). During the forward pass, the LSE method is used to fine-tune the output (consequent) parameters, while keeping the input (antecedent) parameters constant. This is achieved by minimizing the sum of squared differences between the target and predicted outputs. In the backward pass, the GD method is applied to adjust the input (antecedent) parameters, maintaining the output (consequent) parameters fixed. This method computes the derivatives of the error function with respect to the antecedent parameters and updates them accordingly to minimize the error. After the training phase is complete, the testing phase determines the final output by applying test data to the trained ANFIS model.

Model evaluator

Evaluating the accuracy of regression models is crucial in this process. In the proposed method, the model evaluator serves as the unit responsible for evaluating the model’s accuracy for each fold of data. It performs this evaluation using well-recognized metrics from literature, including MAE, RMSE, MSE, and MAPE. Table 2 shows the basic definitions and formulas for these metrics.

| Performance metrics | Formula | Definition |

|---|---|---|

| MSE | It gives the mean squared difference between the real value and the predicted value. In general, this metric is considered as a measure of the proximity of a fitted line to the data points. | |

| RMSE | It measures how far predictions are from the real values and its value is calculated by taking the square root of the mean of the squared errors. | |

| MAE | It provides the mean absolute deviation between predicted values and real values. | |

| MAPE | It represents the mean of the absolute percentage differences between predictions and real values. |

Note:

n, The total number of testing samples; , the real value of testing samples; , the prediction value of testing samples.

The RMSE metric is particularly useful in cases where discrepancies between predictions are substantial. Similarly, the MSE metric is concerned with the magnitude of errors; however, its interpretation can be more complex than that of the RMSE due to its reliance on the squares of errors. MAPE metric deals with percentage error and facilitates comparison between different datasets (Brownlee, 2021a; Agrawal, 2023). Finally, the MAE metric is frequently employed in cases where working with datasets containing outliers is crucial and assessing the absolute error in predictions is of primary importance.

Mean evaluator

The mean evaluator is a unit that computes the average performance of the proposed method alongside others based on the performances measured for each fold of the data. It determines the final performance of all methods used in energy consumption prediction by averaging the individual performances obtained for the five-fold CV dataset. Consequently, the mean evaluator’s output for the kth performance metric is found by Eq. (34).

(34)

Here gives the final performance of the kth metric based on the five-fold CV technique, while provides the individual performance of the kth metric for the ith fold of the data.

ML-based methods for energy consumption prediction

In order to evaluate the effectiveness of the proposed method for energy consumption prediction, five ML-based methods, predominantly ensemble methods, were used, namely RF, DT, XGBoost, LightGBM, and AdaBoost. The details of these methods are described below.

RF: It is an ML-based ensemble method that combines the prediction results of multiple individual decision trees using bagging technique. The execution of the combined process within the method can be explained as follows: First, subsets are created from the training data using random sampling technique. It then trains the decision trees with these subsets. Subsequently, the final prediction result is obtained by averaging predictions from all decision trees (Liaw & Wiener, 2002). This is expressed mathematically using the following steps.

(35)

(36)

In Eq. (35), the symbols A, , denote the training dataset, feature vector, and target output, respectively. Equation (36) describes a random subset comprising n-instances produced by random sampling technique. For each decision tree T, a model is constructed using the dataset A′, with each tree making predictions based on the instance data. Equation (37) shows the class label in a classification context, while Eq. (38) indicates the final prediction value for a regression task.

(37)

(38)

Here, m is the total number of decision trees, is the prediction output of the decision tree, and is the final prediction result of the method.

DT: This method is widely used in many regression tasks because it not only yields highly accurate forecasts within predictive modeling, but also boasts a straightforward and comprehensible framework (Huynh-Cam, Chen & Le, 2021). When predicting data, the basic DT procedure entails the construction of a tree model based on feature values. In this procedure, the data is first divided into subgroups using these feature values. A decision tree is then created for each subgroup of data. The building of decision trees starts at the root node and progresses through the structure, forming branches and leaves according to decisions that hinge on the feature values (Li et al., 2022). During decision making, either the Gini index or entropy metrics are evaluated; with the Gini index being preferable for distinguishing classes that occur more frequently, and entropy being employed to generate a tree structure with better balance. The Gini index serves as an indicator of a feature’s purity in the dataset and can be calculated as shown in Eq. (39).

(39)

Here gives the probability of occurrence for the ith class. The Gini index value ranges between 0 and 1, and its value close to 0 indicates a higher discriminative power of the class. DTs are trained using the Classification and Regression Tree (CART) algorithm in this method.