Image based visual servoing with kinematic singularity avoidance for mobile manipulator

- Published

- Accepted

- Received

- Academic Editor

- Paulo Jorge Coelho

- Subject Areas

- Computer Vision, Robotics

- Keywords

- Visual servoing, Mobile manipulator, Manipulability, Redundant robot

- Copyright

- © 2024 Hernandez-Barragan et al.

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2024. Image based visual servoing with kinematic singularity avoidance for mobile manipulator. PeerJ Computer Science 10:e2559 https://doi.org/10.7717/peerj-cs.2559

Abstract

This article presents an implementation of visual servoing (VS) for a redundant mobile manipulator in an eye-in-hand configuration. We used the image based visual servoing (IBVS) scheme, which means the pose control of the robot is based on the error features in the image of a camera. Conventional eye-in-hand VS requires the inversion of a Jacobian matrix, which can become rank deficient, provoking kinematic singularities. In this work, the inversion of the Jacobian matrix is solved using damped least squares (DLS) to reduce singularities and smooth out discontinuities. In addition, a task prioritization scheme is proposed where a primary task performs the eye-in-hand IBVS task, and a secondary task maximizes a manipulability measure to avoid singularities. Finally, a gravity compensation term is also considered and defined on the basis of the image space error. The effectiveness of the proposed algorithm is demonstrated through both simulation and experimental results considering the Kuka YouBot.

Introduction

Visual servo control refers to the use of computer vision data control the pose of a robot (Chaumette & Hutchinson, 2006, 2007; Wang et al., 2024; Leomanni et al., 2024; Kumar et al., 2024). Estimating the pose of a robot is a crucial task in robotics (Ban et al., 2024). It forms the basis for numerous subsequent tasks, such as visually-guided object grasping and manipulation (Li et al., 2023; Tian et al., 2023; Zuo et al., 2019), autonomous navigation (Bultmann, Memmesheimer & Behnke, 2023), multi-robot collaboration (Li, De Wagter & De Croon, 2022; Papadimitriou, Mansouri & Nikolakopoulos, 2022) and human-robot interaction (Christen et al., 2023).

Exteroceptive sensors, such as cameras, offer a robust alternative that relies on external references rather than internal measurements. That is the reason why image based visual servoing (IBVS) control schemes have become so popular. In IBVS, the primary objective is to minimize the error between the current image features captured by a camera and the desired image features. This error is computed in the image space, and the control laws are designed to drive this error to zero, thereby achieving the desired pose of the robot. The camera used in IBVS can be configured in two ways: eye-to-hand, where the camera is fixed in the environment, or eye-in-hand, where the camera is mounted on the end-effector, which is the approach used in this work (Flandin, Chaumette & Marchand, 2000).

In this context, the implementation of IBVS is particularly beneficial for redundant manipulators, which require advanced control strategies to exploit their additional degrees of freedom effectively. Through the combination of visual feedback and advanced control terms, IBVS enables these robots to perform complex tasks with high precision and reliability. Some related works include (Ren, Li & Li, 2020; Heshmati-Alamdari et al., 2014; Karras et al., 2022; Heshmati-Alamdari et al., 2024), where IBVS is implemented in redundant manipulators using feature constraints to keep image features in the field of view.

Several studies have explored using redundancy to define different types of constraints by incorporating secondary tasks (increase manipulability) alongside the main task (visual servoing) (Mansard & Chaumette, 2009; Yoshikawa, 1996; Chaumette & Marchand, 2001; Rastegarpanah, Aflakian & Stolkin, 2021). However, these works do not consider mobile platforms.

In recent years, the introduction of mobile manipulators into the industry has transformed the viewpoint of both users and developers. These robots enhance the manipulation abilities of articulated industrial arms by extending their reach across the entire workshop area. However, in current industrial applications, the navigation and manipulation phases of mobile manipulators are typically separated for technical simplicity and safety considerations (Marvel & Bostelman, 2013; Markis et al., 2019); Nevertheless, it is possible to achieve cooperation between the mobile platform and manipulator arm simultaneously with visual servoing approaches (González Huarte & Ibarguren, 2024; Wang, Lang & De Silva, 2009; Lippiello, Siciliano & Villani, 2007).

Recently, hybrid approaches that combine IBVS and position-based visual servoing (PBVS) have been explored (Li & Xiong, 2021; Jo & Chwa, 2023). These approaches offer increased robustness to feature occlusion and compensate for inaccurate pose estimation due to slippage errors in odometry and wheel encoders, as IBVS does not rely on the internal odometry of the robot but instead uses visual feedback from the environment. However, hybrid visual servoing requires the integration of both image-based and position-based control loops, which increases the system’s complexity, making the design, tuning, and implementation of the control system more challenging.

Most research on visual servoing and mobile robots focuses on mobile robot navigation, with only a few instances of vision-based mobile manipulation being documented (Wang, Lang & De Silva, 2009). Many of the works that address the visual control of mobile platforms include non-holonomic constraints (Silveira et al., 2001; De, Bayer & Koditschek, 2014; Huang & Su, 2019; Zhao & Wang, 2020; Dirik, Kocamaz & Dönmez, 2020; Li & Xiong, 2021; Yu & Wu, 2024). It should be emphasized that when a mobile platform has non-holonomic constraints, it reduces the possibility of exploiting kinematic redundancies.

This work proposes a VS scheme for an eye-in-hand IBVS task on a redundant omnidirectional mobile manipulator. In addition, the proposed scheme deals with singularities based on DLS and manipulability measure maximization. To reduce singularities and smooth out the discontinuities, the inversion of the Jacobian matrix is solved using DLS. Since the mobile manipulator is redundant, a task prioritization scheme is proposed to perform the eye-in-hand IBVS as a primary task while maximizing a manipulability measure as a secondary task. Finally, a gravity compensation term is considered, especially for the case of real-world applications. The effectiveness of the proposed algorithm is demonstrated through both simulation and experimental results considering the Kuka YouBot which is an 8-DOF omnidirectional mobile manipulator. The simulation codes are shared to facilitate the replicability of this work.

The rest of this research is organized as follows: In “Image Based Visual Servoing (IBVS)” the reader will find an overview of visual servoing. In “Eye-in-hand IBVS for omnidirectional mobile manipulators”, the proposed eye-in-hand IBVS approach is addressed. This scheme is tested in both simulation and real-world experiments, the results are presented in “Experimental Results”. Then, An analysis of the obtained results and the future research directions are given in “Discussion”. Finally, the conclusions are discussed in “Conclusions”.

Image based visual servoing

Multiple schemes of visual servoing are found in the literature Chaumette & Hutchinson (2006, 2007). In this work, we implement IBVS with an eye-in-hand configuration. This approach is described below.

This method involves extracting relevant information from the visual input to guide the actions of the robots, such as positioning, navigating, or manipulating objects. The primary objective is to minimize the error between the current visual perception and the desired visual state

(1) where is the vector of coordinates in the image plane, are the measurements of interest points (in pixel units) and are additional parameters of the system (in this case, the intrinsic parameters of the camera): and are the coordinates of the principal point, the focal length and the ratio of the pixel dimensions. is the vector of the desired coordinates.

The next step is to calculate a velocity controller based on the relationship between the time variation of and the velocity of the camera, considering the case of controlling it with six degrees of freedom (DOF).

(2) where and are the instantaneous linear and angular velocity of the origin of the camera. Features velocity and are related by a matrix known as interaction matrix and denoted by

(3) where and is the number of features. For each feature , the interaction matrix is defined as

(4) where

(5) where Z is the depth from the camera to the feature. In this work, this depth is estimated using prior knowledge of the ArUco pattern. and are the feature coordinates in the image plane given by

(6)

(7)

The relationship between camera velocity and the variation of the error is given by

(8) and, to guarantee an exponential reduction of the error, the camera velocities are calculated with

(9)

Eye-in-hand ibvs for omnidirectional mobile manipulators

This section provides the kinematic model for an omnidirectional mobile manipulator. Commonly, mobile manipulators are redundant robots which is beneficial for working with task priority schemes. The robot, with eight degrees of freedom, consists of an omnidirectional mobile platform with four mecanum wheels, allowing it to move to any position with a desired orientation (Zhang et al., 2016; Wu et al., 2017; Kundu et al., 2017).

Omnidirectional mobile manipulator kinematics

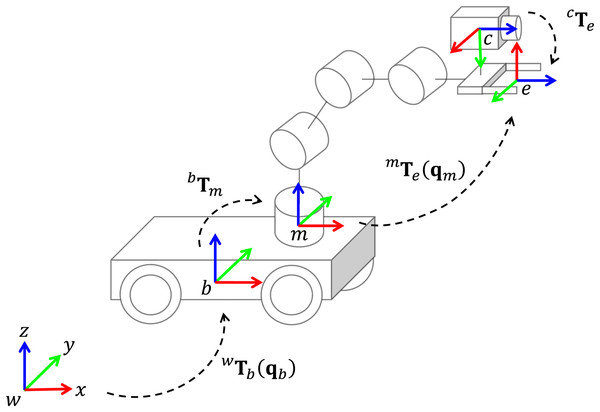

The eye-in-hand system with coordinate frame assignments for robot kinematics is illustrated in Fig. 1. We start defining the following homogeneous matrices: represents the position and orientation (pose) of the mobile platform, with respect to the world frame, is a constant transformation between the mobile platform and the manipulator base, is the transformation from the manipulator base to the end-effector frame. Finally, is the position and orientation of the end-effector, with respect to the camera frame.

Figure 1: Eye-in-hand system and coordinate frames assignment for a mobile manipulator.

The mobile platform pose is defined as

(10) where represents the position and orientation of the omnidirectional platform.

Let be the manipulator configuration with DOF. The manipulator kinematics can be computed based on the Denavit-Hartenberg (DH) convention. Then, forward kinematic can be defined as

(11) where transforms the frame attached to the link into the frame link of the robot manipulator, with .

The joint variable for the mobile manipulator is given by . The forward kinematics of the mobile manipulator is obtained with

(12) where is the orientation and the position of the end-effector, with respect to the world frame .

Inverse kinematics involves calculating the joint variables given the desired end-effector pose. This calculation can be achieved by minimizing an error function through an iterative process based on differential kinematics (Sciavicco & Siciliano, 2012). Differential kinematics seeks to establish the relationship between the joint velocities and the end-effector velocity .

(13) where is a Jacobian matrix and . and are instantaneous linear and angular velocities of the end-effector. Moreover, where are the mobile platform velocities , and are the manipulator velocities in joint space.

The Jacobian matrix can be computed as follows

(14) where matrix relating the contribution of the end-effector linear velocity, and matrix relating the contribution of the end-effector angular velocity.

The matrix is defined as

(15) where is the end-effector position related to the joint variable . Moreover, the matrix is defined as

(16) where and are the z-axis of the rotation matrices and , respectively. Notice that the first two rows are set to since and displacements do not provoke any end-effector angular velocity.

Rotation matrix can be found in the homogeneous transformation , which is

(17)

Finally, the mapping between the mobile platform to each wheel velocity is given by the following relationship

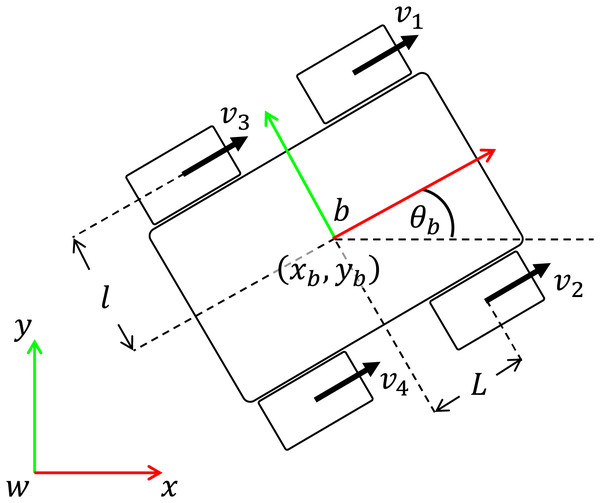

(18) where is the linear velocity of wheel , L is half of the distance between the front and the rear wheels, and is half of the distance between the left and the right wheels, see Fig. 2.

Figure 2: Omnidireccional mobile platform consisting of four mecanum wheels.

Eye-in-hand scheme with task priority and gravity compensation

To consider an eye-in-hand scheme for mobile manipulator visual servoing, we propose to rewrite (Eq. (9)) to map the camera velocity control to the end-effector velocity expressed in the world frame, which is

(19) where is a spatial motion transform matrix from the camera frame to the world frame, and contains the linear and angular velocity expressed in the world frame. The matrix is given as

(20) where is the skew-symmetric matrix associated with the vector , and is the transformation matrix from camera to world frame, which is computed as

(21)

To map Cartesian velocities to joint velocities , using Eq. (13) we have

(22) where is the Moore-Penrose pseudoinverse of .

To overcome the problem of inverting in the neighborhood of a singularity is provided by the damped least-square (DLS) inverse

(23) where is a damping factor that improves the conditioning of the inversion from a numerical viewpoint. DLS helps reduce the impact of singular configurations, which occur when is not full rank (that is, when its determinant is zero or any of its eigenvalues are zero).

Consider the eigenvalue decomposition , the matrix is a diagonal matrix containing the eigenvalues . If we invert the decomposition, we get , where .

A singularity configuration will have some ; we get a stable inversion if we add a positive scalar , as shown in Eq. (24).

(24)

Then, the stabilized -eigenvalue will be . The final form (Eq. (25)) is the well-known solution of the Thikonov regularization (Nair, Hegland & Anderssen, 1997; Gerth, 2021).

(25)

Moreover, this work considers a task priority scheme to deal with feature error convergence of the visual servoing task while maximizing the mobile robot manipulability. The task priority scheme is given by

(26) where is the main task, and allows the projection of the second task into the null space of .

In this work, we propose a vector to avoid singularities based on the manipulability measure defined as

(27)

The second task is calculated with

(28) where . The robot redundancy is exploited to avoid kinematic singularities by maximizing the manipulability measure. Indeed, can be designed for collision avoidance by letting the joints reconfigure to avoid obstacles without affecting the primary task. Moreover, can also be designed for joint limit avoidance by guiding the joint configuration towards the middle of their range while simultaneously performing the primary task.

Finally, a gravity compensation term is also considered, which is crucial for real-world implementations. Let us consider the gravitational potential energy as follows

(29) where is the gravity acceleration, is the mass of the link and is the height of the center of mass and it depends on . For simplicity, we estimate the center of mass at the middle of the link.

The gravity vector is calculated with

(30)

Finally, the term in Eq. (30) is added to Eq. (26)

(31)

Equation (31) defines the proposed eye-in-hand scheme with task priority and gravity compensation for a mobile manipulator with an omnidirectional platform.

Experimental results

This scheme is tested in both simulation and real-world experiments. The applicability is demonstrated on the Kuka YouBot which is an omnidirectional mobile manipulator.

Experiments are performed in three parts. First, an ideal simulation is performed where no gravity is required. Second, a simulation is performed in the Coppelia simulator. In this scenario, the proposed approach is tested under non-modeled dynamics and gravity compensation is required. Third, real-world experiments were performed on the Kuka YouBot robot with a conventional RGB camera.

The YouBot consists of a 5-DOF manipulator and a 3-DOF omnidirectional mobile platform. In terms of the mobile manipulator kinematics, the transformation can be calculated using the pose of the mobile platform, defined by as shown in Eq. (10). The constant transformation is represented as follows

(32)

The transformation is obtained from the Denavit-Hartenberg table shown in Table 1. The joint variable for the mobile manipulator will be

(33) where to represent the joint configuration of the manipulator.

| Joint | a (mm) | (rad) | d (mm) | (rad) |

|---|---|---|---|---|

| 1 | 33 | 147 | ||

| 2 | 155 | 0 | 0 | |

| 3 | 135 | 0 | 0 | |

| 4 | 0 | 0 | ||

| 5 | 0 | 0 | 217.5 |

The constant matrix transformation is defined as

(34)

Model and control parameters were set as: , , , m, m, kg and kg. Mass is not required to compute the gravity vector. All parameters were selected experimentally to achieve the best performance.

Simulation results

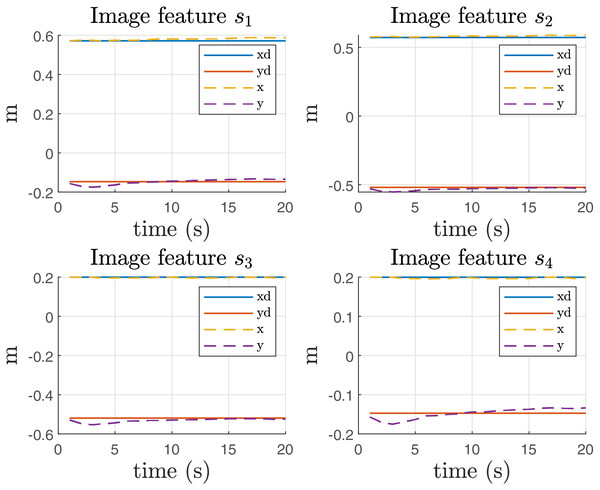

In simulation tests, four coordinates of interest points in the image were considered, where the desired image features was set as

(35)

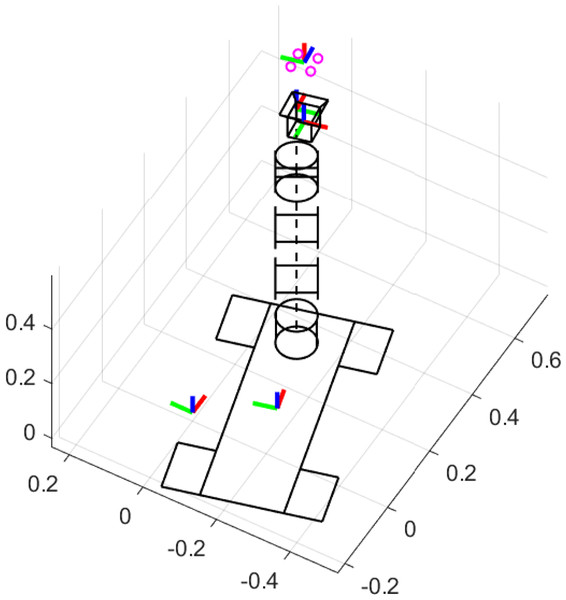

Moreover, the intrinsic parameters of the camera were set as , , . The simulation scenario of the ideal case is given in Fig. 3.

Figure 3: Simulation scenario of the ideal case.

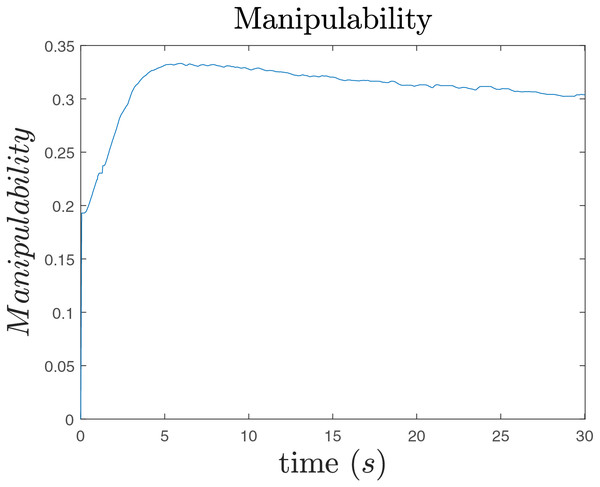

The ideal simulation aims to show that the second task in Eq. (26) is increasing the manipulability measure while performing the IBVS task with high priority.

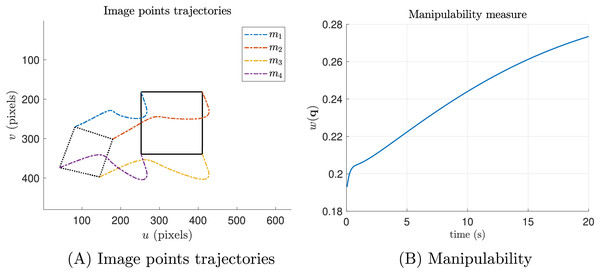

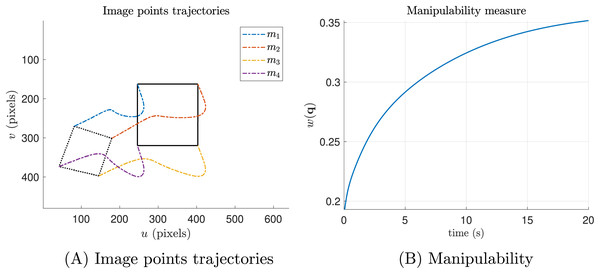

Figure 4 shows the results when the secondary task is not included. Figure 4A the dashed lines represent the starting positions, while the solid lines indicate the desired positions. The colored dashed lines illustrate the trajectories of each keypoint. Although the keypoints reach the desired pose, Fig. 4B shows that, since the secondary task is not included, the manipulability measure does not exceed 0.3.

Figure 4: Ideal simulations without the manipulability term (secondary task).

(A) Shows the trajectories of the features in pixel units and (B) shows the manipulability index.Conversely, in Fig. 5, we present the results of the same simulation, but with the secondary task included. It is evident that the manipulability measure increases, in contrast to the previous experiment (Fig. 4).

Figure 5: Ideal simulations with the manipulability term (secondary task).

(A) The trajectories of the features in pixel units and (B) the manipulability index.As we can see, Figs. 4A and 5A the image point trajectories (colored dashed lines) confirm that the primary task is being performed successfully.

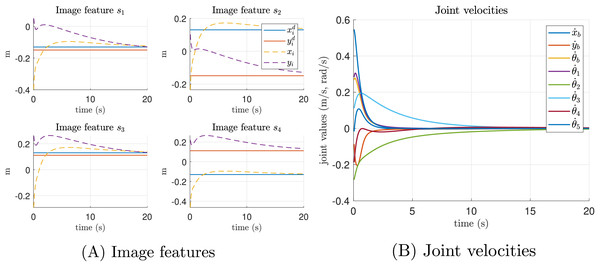

In addition, in Fig. 6A we show the transition of the image features, where the dashed lines can be seen converging at the desired positions, represented by solid lines. In Fig. 6B we present the joint velocities of the mobile manipulator while performing both the primary and secondary tasks. It can be observed that the velocities converge smoothly to a neighborhood of zero, indicating that the desired positions have been reached.

Figure 6: Ideal simulation.

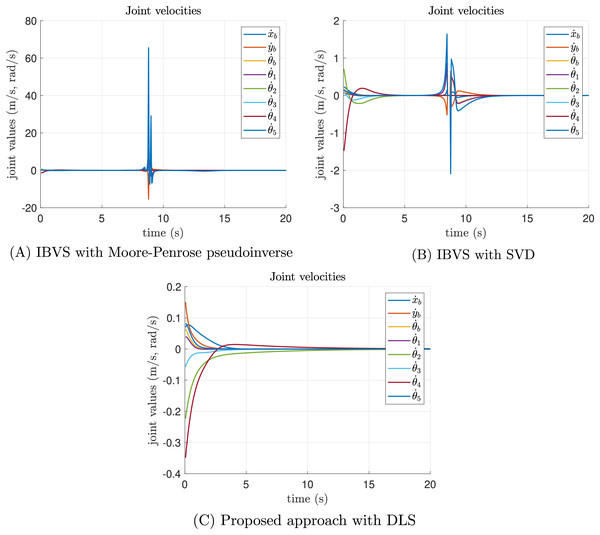

(A) Image features and (B) joint velocities.A singularity test experiment is performed to validate the capacities of the proposed approach to avoid singularities and smooth out discontinuities by inverting the Jacobian matrix based on DLS, see Eq. (23). In this experiment, we also compared the proposal against the classical IBVS

(36) where the inversion of with was performed in two ways, using singular value decomposition (SVD) and using the well-known Moore-Penrose pseudoinverse . SVD in the form is another method to deal with singularities. To compute the inverse of , singular values along the diagonal of that are less or equal to a tolerance are treated as zero. Then, all the non-zero values of can be inverted.

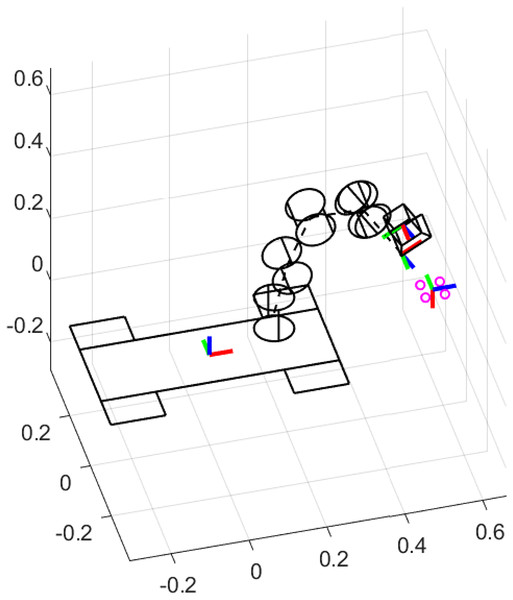

Figure 7 illustrates the simulation scenario to perform the singularity test experiment. To identify singularities, Monte Carlo experiments were performed to randomly select numerical joint configurations that provoke the determinant of is close to 0. Then, four coordinates of interest points in the 3D world were intentionally placed near the position of the end-effector related to a singular joint configuration.

Figure 7: Simulation scenario for the singularity test experiment.

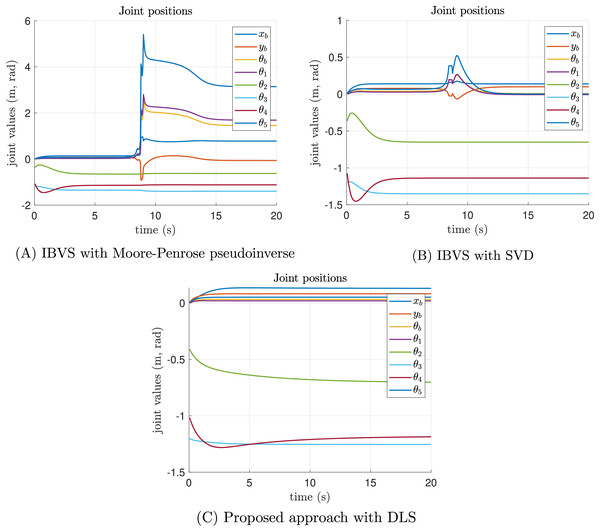

Figure 8 presents the joint positions results along time of the singularity test experiment. As can be seen in Fig. 8A, the Moore-Penrose pseudoinverse suffers from singularities around 9 s of simulation time. Abrupt changes in joint position are presented. Figure 8B shows that SVD reduces the impact of singularities and all joint positions are admissible. However, Fig. 8C demonstrates that the proposed approach significantly reduces the impact of singularities, since joint positions are not perturbed at all.

Figure 8: Joint position results of the singularity test experiment.

(A) The joint position results of classical IBVS with Moore-Penrose pseudoinverse, (B) the classical IBVS with SVD to avoid singularities, and (C) the proposed IBVS scheme with DLS.The joint velocity results of the singularity test experiment are reported in Fig. 9. In the case of the Moore-Penrose pseudoinverse, the presence of singularities provokes inadmissible joint velocities as shown in Fig. 9A. Despite the SVD reducing the impact of singularities, discontinuities are presented in some joint velocities which are not recommended for real-world applications, see Fig. 9B. Moreover, the joint velocities reported by the proposed approach are smooth, demonstrating that DLS smooth out discontinuities, see Fig. 9C. All velocities converge to a neighborhood of zero with admissible joint positions.

Figure 9: Joint velocity results of the singularity test experiment.

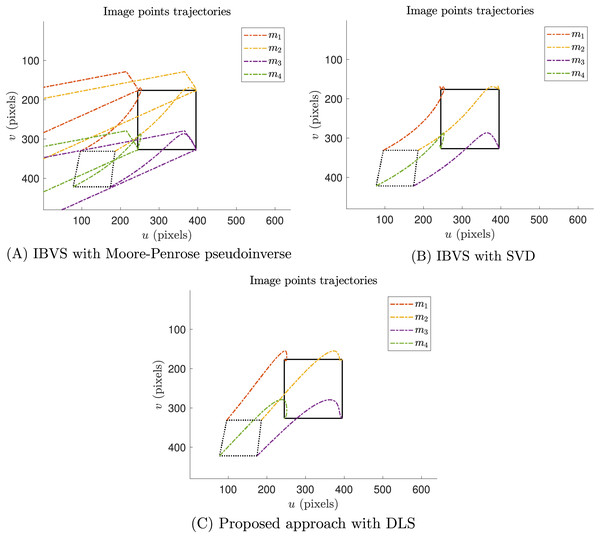

(A) The joint velocity results of classical IBVS with Moore-Penrose pseudoinverse, (B) the classical IBVS with SVD to avoid singularities, and (C) the proposed IBVS scheme with DLS.The image points trajectories of the singularity test experiment are provided in Fig. 10. As expected, Fig. 10A shows that the task of IVBS with Moore-Penrose pseudoinverse failed. In this case, the image points trajectories present abrupt changes that go out of the image boundary. Moreover, Fig. 10B reports that the IVBS with SVD reached the desired image features, despite the velocities discontinuities. Finally, Fig. 10C illustrates that the proposed approach with DLS successfully reached the desired image features.

Figure 10: Image points trajectories of the singularity test experiment.

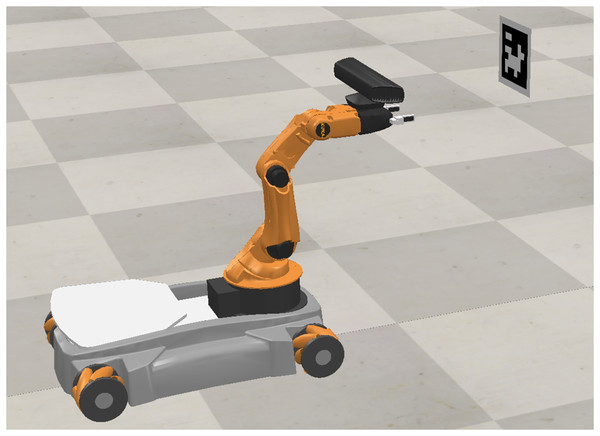

(A) Thee trajectories of the features of classical IBVS with Moore-Penrose pseudoinverse, (B) the classical IBVS with SVD to avoid singularities, and (C) the proposed IBVS scheme with DLS.Now, the proposed approach is tested in the Coppelia simulator. These simulations aim to test the proposed scheme under non-modeled dynamics and gravity compensation. The setup of these simulations is shown in Fig. 11.

Figure 11: Setup in Coppelia simulator.

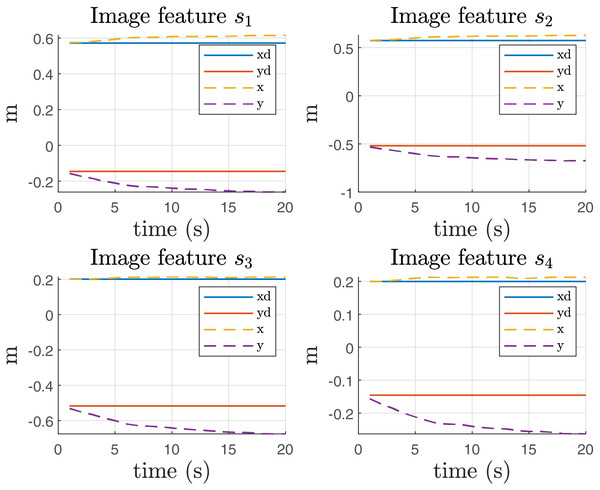

Photo credit: Javier Gomez-Avila.From this experiment, it is important to report the error in the image features, as illustrated in Fig. 12. The figure clearly shows an offset in the direction, which can be attributed to the influence of gravity on the system. This offset indicates that the image features are not aligning perfectly with the expected positions, likely due to gravitational effects acting on the manipulator during the operation.

Figure 12: Image features in Coppelia simulator before the gravity compensation.

Figure 13 presents the results of implementing gravity compensation. The data clearly demonstrate that the error in the direction has been significantly reduced as a result of this compensation technique. This improvement indicates that the gravity compensation has effectively minimized the offset previously observed (Fig. 12), leading to better alignment of the image features with their expected positions.

Figure 13: Image features in Coppelia simulator with compensation of gravity.

Real-world experimental results

The experiments are conducted using the Kuka Youbot omnidirectional platform, which has an initial pose as shown in Fig. 14. The QR code is placed within the field of view of this initial position. The platform is equipped with encoders which have been used to measure joint positions. Moreover, Encoders have been also used for the wheel odometry to estimate the pose of the mobile platform.

Figure 14: Experimental setup. Initial (left image) and final (right image) positions.

Photo credit: Jesus Hernandez-Barragan.Since the surface where the mobile platform moves is not completely flat, external disturbances by surface imperfections can produce slippages that cause errors in odometry. Even with the lack of accuracy in platform pose estimation, the proposed IBVS succeeds since it does not rely on the internal odometry (Li & Xiong, 2021; Jo & Chwa, 2023), but instead the visual feedback from the QR code.

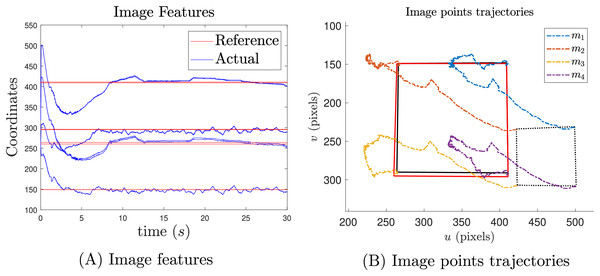

For this experiment, we measure four image features from a QR code. Figure 14 shows the starting and ending positions in an example of the experimental setup. This experiment was performed over 30 s. In this scenario, the desired image features was set as

(37)

Moreover, the intrinsic parameters of the camera were calibrated as , , .

The joint velocities and positions from the experiment are presented in Fig. 15. Although the control signals are not as smooth as those observed in the simulations, Fig. 15A shows that the velocities converge to a neighborhood of zero. Several challenges arose during the real-world implementation, including unmodeled dynamics, external perturbations, robot wear, and surface imperfections. Despite these challenges, the joint positions are still achieved smoothly, as shown in Fig. 15B.

Figure 15: Joint velocities (A) and joint position (B) results.

Figure 16A presents the results of the image feature transition and Fig. 16B image point trajectories. The reported results indicate that the reference position is achieved, even in the presence of noisy image features from QR detection. Additionally, Fig. 16A shows that the offset caused by gravity has been mitigated. In Fig. 16B, the black solid square represents the desired position, while the red square indicates the final position.

Figure 16: Image feature results in pixel units (A) and their trajectories (B).

Finally, Fig. 17 shows that the manipulability measure is increased as expected.

Figure 17: Manipulability.

Discussion

Ideal simulations addressed the task prioritization scheme, where the eye-in-hand IBVS was the highest priority task, and manipulability maximization was the secondary task. Simulation results demonstrate that both tasks performed successfully with smooth image trajectories and control actions while maximizing the manipulability measure. Notice that with higher manipulability, it will reduce the incidence of singularities.

A singularity test experiment was performed to demonstrate the singularity avoidance capacities of the proposed approach. In this case, the proposed approach was compared against the classical IBVS where the inversion of required matrices was performed with two methods, the Moore-Penrose pseudoinverse and SVD. Reported results demonstrate that IBVS with Moore-Penrose pseudoinverse performed poorly, the IBVS task practically failed since this method does not handle singularities. Moreover, IBVS with SVD reduces the impact of singularities, and the IBVS task succeeds. However, the presence of singularities provokes significant joint velocities discontinuities that are impractical in real-world implementations. Finally, the proposed approach demonstrates that avoids singularity configurations since joint position and joint velocities results are admissible and it smooths out discontinuities.

Simulations in the Coppelia simulator addressed the importance of gravity compensation. Simulation results without the compensation term report a significant error in the y-axis of each image feature. However, the gravity compensation term effectively reduced this inconvenience.

The real-world experiments with the 8 DOF KUKA Youbot address the applicability of the proposed approach. The eye-in-hand IBVS was performed successfully with manipulability maximization along with gravity compensation. Although the input control signals of the experiments were not as smooth as in the simulations, position joint trajectories are still smooth. Notice that control action tries to compensate for non-modeled dynamics, external perturbations, and other drawbacks of the physical implementations which are not considered in simulated scenarios. Despite all these inconveniences, the proposed approach succeeds.

We use kinematic modeling for both the manipulator and the mobile platform to implement IBVS. No dynamic modeling is considered except for the gravity compensation.

Kinematic modeling uncertainties, such as kinematic modeling errors of the platform in Eq. (18) and the manipulator (Table 1) may arise since constants are defined based on technical specifications and practical procedures that have error tolerances. Additionally, there are parametric errors that can occur due to intrinsic and extrinsic camera calibration.

Finally, although we do not employ any techniques to address noisy image features (such as a Kalman filter) we still achieve the desired image features.

In this work, the VS task was implemented on an omnidirectional mobile manipulator. We let as future work, the design of kinematic modeling that includes mobile platforms with nonholonomic constraints. Moreover, the dynamic modeling of the mobile manipulator can be also included in the VS scheme. Finally, this work can be extended to the use of the eye-to-hand configuration.

Conclusions

The article presented satisfactory results from implementing eye-in-hand IBVS enhanced with manipulability maximization and gravity compensation. A redundant omnidirectional mobile manipulator KUKA Youbot was considered as a case study. Both, simulation and real-time experimentation demonstrated the effectiveness of this approach.

To demonstrate the effectiveness of our proposal and address the challenge of inverting near singularities, we applied the damped least-squares (DLS) inverse method. In simulation, the mobile manipulator was directed to approach a kinematic singularity. Results show that, with the classical method, velocities escalate significantly as the manipulator moves toward this configuration. In contrast, our proposed approach enables the manipulator to avoid the singular configuration, consistently maintaining velocities within acceptable limits.

Additionally, the secondary task, aimed at increasing the manipulability index, assists in ensuring that once the primary task is completed (reducing the positional error of the QR code in the image), the manipulator moves away from singular configurations. This approach not only helps achieve the primary positioning goal but also enhances the stability and performance of the system by avoiding configurations where control may become unstable.

Supplemental Information

Repository link.

This repository contains the code and resources for “Image Based Visual Servoing with kinematic singularity avoidance for mobile manipulator”. The repository includes matlab and coppelia simulations.

The repository can be found in GitHub:

https://github.com/Jegovila/IBVS_YouBot DOI: 10.5281/zenodo.14035156 (https://doi.org/10.5281/zenodo.14035155).