A wave delay neural network for solving label-constrained shortest route query on time-varying communication networks

- Published

- Accepted

- Received

- Academic Editor

- Chan Hwang See

- Subject Areas

- Artificial Intelligence, Computer Networks and Communications, Data Mining and Machine Learning, Neural Networks

- Keywords

- Artificial intelligence, Auto-wave neuron, Time-varying network, Wave delay neural network, Label-constrained time-varying shortest route query

- Copyright

- © 2024 Han et al.

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2024. A wave delay neural network for solving label-constrained shortest route query on time-varying communication networks. PeerJ Computer Science 10:e2116 https://doi.org/10.7717/peerj-cs.2116

Abstract

This research focuses on the label-constrained time-varying shortest route query problem on time-varying communication networks. To the best of our knowledge, research on this issue is still relatively limited, and similar studies suffer from low solution accuracy and slow computational speed. In this study, a wave delay neural network (WDNN) framework and corresponding algorithms are proposed to effectively solve the label-constrained time-varying shortest route query problem. This framework accurately simulates the time-varying characteristics of the network without any training requirements. WDNN adopts a new, independently designed type of wave neuron, and all neurons in WDNN compute in parallel. The algorithm determines the shortest route based on the waves received by the destination neuron (node). Furthermore, the time complexity and correctness of the proposed algorithm are analyzed in detail, and its performance is evaluated in depth by comparison with existing algorithms on randomly generated and real networks. The results indicate that the proposed algorithm outperforms existing algorithms in terms of response speed and computational accuracy.

Introduction

The shortest route query problem is a classic combinatorial optimization challenge that aims to identify the most efficient route (minimizing cost or reducing delay) from a source node to a destination node. Solutions to this problem find extensive applications in communication networks (Wang, Guo & Okazaki, 2009; Gomathi & Martin Leo Manickam, 2018), transportation networks (Fu, Sun & Rilett, 2006; Neumann, 2016), engineering control (Nip et al., 2013; Lacomme et al., 2017), and many other areas.

The shortest route query problem was initially formulated by Dijkstra (1959) in the 1950s. Subsequently, numerous enhanced algorithms were introduced to address this problem in time-invariant networks (Xu et al., 2007; Zhang & Liu, 2009). During that period, modifications to this problem were also proposed, including the label-constrained shortest route query on time-invariant networks (Zhang et al., 2021; Likhyani & Bedathur, 2013; Barrett Chris, 2008). While demonstrating certain advantages in time-invariant networks, these methods still face challenges when applied to solving the shortest route query problem in time-varying networks.

The time-varying network (also known as the time-dependent network) is a dynamic network that exists widely in the real world (Huang, Xu & Zhu, 2022). Unlike in traditional static networks, the time or cost for a data packet to travel an arc in a time-varying network is not constant: it depends on the departure time from the start node and may be described by a piecewise function. Recently, several problems on time-varying networks have attracted extensive attention, such as the traveling salesman problem (Cacchiani, Contreras-Bolton & Toth, 2020), the maximum flow problem (Zhang et al., 2018), the minimum spanning tree problem (Huang, Fu & Liu, 2015), and project scheduling problems (Huang & Gao, 2020). The shortest route query problem on time-varying networks was first studied by Cooke & Halsey (1966), who proposed a Bellman-based iterative algorithm to solve the unconstrained time-varying shortest delay route problem. Since then, this kind of problem has also been studied by Huang & Wang (2016), Wu et al. (2016), Huang et al. (2017), and Wang, Li & Tang (2019), among others.

Similar to time-invariant networks (Feng & Korkmaz, 2013), the shortest route query problem with constraints also exists in time-varying networks. To the best of our knowledge, research on the constrained time-varying shortest route query problem mainly focuses on reachability on time-varying networks, such as the delay-constrained time-varying minimum cost path problem (Cai, Kloks & Wong, 1997; Veneti, Konstantopoulos & Pantziou, 2015), and more (Chen et al., 2022; Peng et al., 2020; Chen & Singh, 2021; Gong, Zeng & Chen, 2023; Heni, Coelho & Renaud, 2019; Yang & Zhou, 2017). Choosing the appropriate path is crucial for optimizing performance in communication networks. The constrained shortest route problem allows specific constraints to be introduced in path selection (Ruß, Gust & Neumann, 2021), such as bandwidth, latency, and load balancing (Peng et al., 2022), to meet the specific needs of the network and improve its overall efficiency. Different applications and services have different requirements for network performance. The constrained shortest route problem can be used to ensure that specific quality of service standards, such as low latency and high bandwidth, are met when selecting paths in a network, thereby improving user experience. When computing resources are limited, the constrained shortest route problem also helps to manage network resources effectively: by considering constraints, overcrowding of certain paths can be avoided, which improves network availability and resource utilization. However, there is limited research on the label-constrained time-varying shortest route query problem (LTSRQ).

The label-constrained shortest route query problem is of great importance in time-varying communication networks, especially in achieving efficient, reliable, and low-latency network communication. It is specifically manifested in: (1) Load balancing and resource optimization: Nodes and links in communication networks may have different performance characteristics. By considering constraints such as bandwidth and latency, path selection can be optimized to achieve load balancing, avoid overcrowding of certain paths, and improve the utilization of network resources. (2) Security: By considering label constraints, a path can be designed to ensure the security of data during transmission and prevent security threats such as man-in-the-middle attacks. (3) Multipath transmission and traffic engineering: The label-constrained shortest route problem can be used for multipath transmission and traffic engineering, dynamically selecting the path that is most suitable for the current network state to improve the overall performance of the network.

Neural network technology has been proven to be more efficient than traditional mathematical methods in various fields (Huang, Xu & Zhu, 2022; Adnéne et al., 2022; Zulqurnain et al., 2022). In the investigation of the shortest route problem in time-varying networks in particular, neural network technology, with its robust parallel computing and timing simulation capabilities, has demonstrated outstanding performance. Existing research results have substantiated the feasibility and advantages of neural network technology over traditional mathematical methods in addressing path-related problems in time-varying networks. Therefore, in this article, a wave delay neural network (WDNN) framework is proposed to solve the LTSRQ. The purpose of LTSRQ, which is NP-hard, is to find a route from the source node to the destination node that has the shortest delay and meets the label threshold. For example, in certain wireless broadcast networks, where the limited capacity of wireless devices necessitates selective signal reception and processing, labels are commonly employed for signal filtering. Specifically, in scenarios where the payload is associated with specific time intervals, the time required for signal processing and forwarding is generally directly proportional to the payload. As a result, such wireless broadcast networks can be categorized as labeled time-varying networks. The label-constrained time-varying shortest route query problem in this context aims to identify a path within the network that carries signals with specific labels from the source to the destination. The proposed WDNN is built on auto wave neurons, which allows for parallel computation, and it arrives at the global optimal solution of the LTSRQ. Notably, unlike conventional neural networks that necessitate training, the proposed WDNN operates without any training requirements.

In general, our novelty and contributions can be summarized in the following two aspects:

- Wave delay neural network (WDNN) framework: A WDNN framework is proposed to resolve the LTSRQ. It is composed of autonomously designed, training-free wave neurons. These wave neurons are adept at handling the time-varying lengths of dynamic edges, allowing for optimal departure time selection. By assigning a state type to each neuron to restrict wave reception, the framework implements label-constrained processing. Owing to parallel computation and an optimal emission time selection mechanism for neurons, this method can rapidly obtain the global optimal solution to the label-constrained time-varying shortest route query problem, which is crucial in delay-sensitive communication networks.

- The effectiveness of the proposed algorithm is assessed through a thorough analysis of time complexity and a correctness proof. Performance evaluation is conducted from two perspectives: the number of nodes and the number of time windows. The experimental results demonstrate that the proposed algorithm is capable of effectively addressing the label-constrained shortest route query problem in time-varying networks.

To aid understanding, Table 1 summarizes the symbols used in the definition section and the neural network architecture design section. The rest of this article is organized as follows. ‘Preliminaries’ introduces the preliminary knowledge that WDNN requires. ‘WDNN Architecture’ presents the newly designed neural network framework, the auto wave neuron, and the algorithm for solving LTSRQ, and analyzes the time complexity and correctness of the proposed algorithm; this is the main focus of the study. ‘Experimental Results and Discussion’ reports our experiments and evaluations. Finally, ‘Conclusion’ briefly concludes the article.

| Symbols | Explanation |
|---|---|
| TS | The start time of a time window. |
| TE | The end time of a time window. |
| TL | The length of the arc in a time window. |
| Ln | The label set of a node n. |
| lene(t) | The length of a time-varying arc. |
| VP | The set of nodes on path P. |
| EP | The set of arcs on path P. |
| LP | The set of labels of the nodes on path P. |
| M | A large integer. |
| αi | The arrival time at the ith node on the path P. |
| τi | The departure time from the ith node on the path P. |
| t | The current time. |
| $V^P_i$ | The set of all precursor neurons of neuron i. |
| $V^F_i$ | The set of all successor neurons of neuron i. |
| Δt | A step (unit) of iteration. |
| s | The root neuron (source node). |
| z | The destination neuron (destination node). |
| ts | The earliest time at which departure from the source node is allowed. |
| Lc | The constrained label set. |
| Li | The label set of neuron i. |
| | The recorded label set of neuron i. |
| $Y^t_{k,i}$ | A wave from neuron k to i at time t. |
| $P^t_{k,i}$ | The path from neuron k to i at time t. |
| $A^t_{k,i}$ | The arrival time of the wave from neuron k to i at time t. |
| $L^t_{k,i}$ | The label of the wave from neuron k to i at time t. |
| $P^r_i$ | The path recorded by neuron i. |
| $A^r_i$ | The set of the arrival time of each wave recorded. |
| $L^r_i$ | The label set of recorded paths. |
| TWi,q(t) | The time window of arc (i, q) at time t. |

Preliminaries

To ensure clarity, this section provides precise definitions of the key concepts used in this study. Careful definitions keep the analysis rigorous and support a full understanding of the study’s foundations and findings.

Definition 1 (Time window) (Huang, Xu & Zhu, 2022): A triple (TS, TE, TL) is defined as a time window if and only if TE > TS, where TS is the start time of the time window, TE is the end time of the time window, and TL is a constant that denotes the length of the arc in this time window.

Definition 2 (Time-varying function) (Huang, Xu & Zhu, 2022): A piecewise function f(t) is defined as a time-varying function if and only if t is a time variable. A time-varying function can be divided into multiple time windows; that is, a time-varying function is a functional representation of one or more time windows.

Definition 3 (Label node): A node is defined as a label node if and only if it has at least one label. The label set of a node n is denoted as Ln.

Simply put, a labeled node refers to a node that has certain attributes. If the label attribute of node A is “a”, it means that only signals with the label “a” can be received and forwarded by node A, thereby reducing network resource occupation and information dissemination range. In communication networks, labels can be used to label the types of signals that a node can receive and send.

Definition 4 (Time-varying arc) (Huang, Xu & Zhu, 2022): An arc e = (u, v) is defined as a time-varying arc if and only if its length lene(t) is a time-varying function.

In communication networks, time-varying arcs are employed to depict the varying time required for the same data to complete transmission at different time periods over the same communication connection. This variability in transmission time can be attributed to factors such as network congestion, leading to delays in data transmission. The use of time-varying arcs allows for a more nuanced representation of the dynamic nature of data transmission in communication networks.
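Definitions 1, 2 and 4 can be illustrated with a minimal Python sketch. The names `TimeWindow`, `arc_length` and `congested_link` are hypothetical, and treating each window as the half-open interval [TS, TE) is an assumption made here so that adjacent windows do not overlap; the definitions themselves do not fix the boundary convention.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class TimeWindow:
    ts: float  # TS: start time of the window
    te: float  # TE: end time of the window
    tl: float  # TL: constant arc length inside the window

    def __post_init__(self) -> None:
        # Definition 1 requires TE > TS.
        if self.te <= self.ts:
            raise ValueError("a time window requires TE > TS")


def arc_length(windows: Sequence[TimeWindow], t: float) -> Optional[float]:
    """Piecewise len_e(t): TL of the window containing t, or None if none does."""
    for w in windows:
        if w.ts <= t < w.te:  # half-open interval: an assumption of this sketch
            return w.tl
    return None


# A link that is slow during a congested period and fast afterwards.
congested_link = [TimeWindow(0, 10, 3), TimeWindow(10, 20, 7), TimeWindow(20, 30, 2)]
```

With this representation, the same arc returns a different length depending on the departure time, which is exactly the behaviour a time-varying arc is meant to capture.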

Definition 5 (Time-varying network) (Huang, Xu & Zhu, 2022): A directed network G(V, E, TW) is defined as a time-varying network if and only if there is at least one time-varying arc, where the V is the set of nodes, the E is the set of arcs, the TW is the set of time windows of nodes.

Definition 6 (Time-varying path): A path P(VP, EP, LP) is defined as a time-varying path if and only if αi + ωi = τi, where VP is the set of nodes on path P, EP is the set of arcs on path P, LP is the set of labels of the nodes on the path, αi and τi are the arrival time and departure time of the ith node on the path, respectively, and ωi ≥ 0 is the waiting time at the ith node.

For any time-varying path P(VP, EP, LP), where the VP = {v1, v2, …, vn+1}, and the EP = {e1, e2, …, en}, the LP = Lv1∪Lv2∪...∪Lvn+1, the length of path P is equal to .
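The relation αi + ωi = τi from Definition 6 can be traced with a short sketch. The function `traverse` and the two example arcs are hypothetical, and the sketch assumes that the length of a path is the arrival time at its last node minus the start time; that reading is an assumption, not a formula taken from the text.

```python
from typing import Callable, List, Sequence, Tuple


def traverse(arc_len: Sequence[Callable[[float], float]],
             waits: Sequence[float],
             t_start: float) -> Tuple[List[float], List[float], float]:
    """Walk a path whose i-th arc has length arc_len[i](tau_i).

    Returns (arrival times, departure times, assumed path length).
    """
    if len(arc_len) != len(waits):
        raise ValueError("one waiting time is needed per arc")
    arrivals: List[float] = []
    departures: List[float] = []
    alpha = t_start
    for length_at, omega in zip(arc_len, waits):
        if omega < 0:
            raise ValueError("waiting times must be non-negative")
        tau = alpha + omega              # Definition 6: alpha_i + omega_i = tau_i
        arrivals.append(alpha)
        departures.append(tau)
        alpha = tau + length_at(tau)     # arrival time at the next node
    arrivals.append(alpha)
    return arrivals, departures, alpha - t_start


def first_arc(t: float) -> float:
    return 8 if t < 4 else 1             # slow before t = 4, fast afterwards


def second_arc(t: float) -> float:
    return 2                             # constant length
```

Leaving immediately gives a length of 10, while waiting 4 time units at the first node gives 7, which illustrates why choosing the departure time matters on time-varying arcs.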

Definition 7 (Label-constrained time-varying shortest route query problem, LTSRQ): Given a time-varying network G, an LTSRQ Q = (s, z, ts, Lc) is to find a time-varying path P from s to z such that (1) LP ∈ Lc and (2) lenP ≤ lenP′, where s is the source node, z is the destination node, ts is the earliest time at which departure from the source node is allowed, Lc is the constrained label set, and P′ is any path from node s to node z on network G that satisfies the label constraint. Its mathematical model is: (1)

WDNN Architecture

In this section, the architecture of the proposed WDNN is presented first, followed by a WDNN algorithm for the label-constrained shortest route problem on time-varying networks. Furthermore, two theorems are provided to analyze the time complexity and correctness of the proposed algorithm.

Design of WDNN

The wave delay neural network is an auto wave neuron-based neural network. When WDNN is used to address LTSRQ, its structure depends on the topology of the time-varying network, i.e., each node and arc of the time-varying network corresponds to a neuron and a link (synapse) between two neurons, respectively. The operating mechanism of the wave delay neural network is as follows. First, the root neuron is activated. Non-root neurons are activated only after receiving valid waves (waves that comply with their own label constraints), and only activated neurons can generate waves concurrently. The neural network stops running when it reaches the given delay threshold, and the destination neuron selects the shortest route among all the received waves, which is the label-constrained time-varying shortest route.

The auto wave is the medium through which neurons transmit information, and it can also be regarded as a data packet. Like a data packet transmitted on an arc, a wave incurs a delay and a cost when it travels along the corresponding synapse, where the delay is calculated by the synapse and the label is calculated by the neuron that sent the wave. Each wave contains three pieces of information, namely the path $P^t_{k,i}$, the arrival time $A^t_{k,i}$, and the label $L^t_{k,i}$.

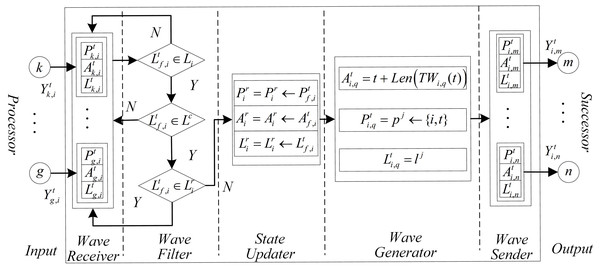

Figure 1 shows the structure of a general auto wave neuron. Each auto wave neuron consists of seven parts: input, wave receiver, wave filter, state updater, wave generator, wave sender, and output. The function of each part is as follows:

Figure 1: The structure of a general neuron on WDNN.

- Input: The input of a neuron is usually composed of multiple ports used to receive waves sent by other neurons. The number of input ports depends on the in-degree of the neuron.

- Wave receiver: The wave receiver is used to receive, cache, and decode auto waves. The wave receiver layer consists of several sub-receivers, one for each input port. When a neuron receives a wave at the current moment, $P^t_{g,i}$, $A^t_{g,i}$, and $L^t_{g,i}$ in the corresponding sub-receiver are assigned based on the information in the wave; if no wave is received, they are assigned initial values. Here, $P^t_{g,i}$ caches the path in the wave sent by neuron g to the current neuron i, $A^t_{g,i}$ caches the arrival time of the wave, and $L^t_{g,i}$ caches the labels in the wave:

  $$P^t_{g,i} = \begin{cases} P^t_{g,i} \in Y^t_{g,i}, & Y^t_{g,i} \neq \emptyset \\ \emptyset, & \text{otherwise} \end{cases} \tag{2}$$

  $$A^t_{g,i} = \begin{cases} A^t_{g,i} \in Y^t_{g,i}, & Y^t_{g,i} \neq \emptyset \\ M, & \text{otherwise} \end{cases} \tag{3}$$

  $$L^t_{g,i} = \begin{cases} L^t_{g,i} \in Y^t_{g,i}, & Y^t_{g,i} \neq \emptyset \\ \emptyset, & \text{otherwise} \end{cases} \tag{4}$$

- Wave filter: The wave filter is used to filter the data in the wave receiver. First, based on the label information of the wave, it selects the waves that the current neuron can process; next, it determines whether the wave type meets the constrained label; and then it determines whether this type of wave has already been received. If the wave type is not one that the current neuron can recognize, does not meet the label constraint, or has already been received, the wave is discarded (since the first received wave of a type must be the shortest, only the earliest arriving wave needs to be recorded).

- State updater: The state updater is used to update and record the latest state of the current neuron. It includes three sub-modules, $P^r_i$, $A^r_i$, and $L^r_i$, which are used to update and record the current shortest route sequence, the arrival time of the wave, and the label of the received wave, respectively.

- Wave generator: The wave generator is used to calculate the values of new auto waves. It consists of three parts, $A^t_{i,q}$, $P^t_{i,q}$, and $L^t_{i,q}$, for each successor neuron q, whose expressions are as follows:

  $$A^t_{i,q} = t + len(TW_{i,q}(t)), \qquad P^t_{i,q} = p_j \leftarrow \{i, t\}, \qquad L^t_{i,q} = l_j \tag{5}$$

  where $l_j$ is one of the labels memorized by the current neuron.

- Wave sender: The wave sender is used to encode and send waves, which may be regarded as the inverse process of the wave receiver. It consists of $P^t_{i,q}$, $A^t_{i,q}$, and $L^t_{i,q}$, which are encoded into the wave $Y^t_{i,q}$ for each successor neuron q.

- Output: The output is the port through which auto waves are sent to successor neurons. Its function is similar to the axon of a biological neuron. The number of output ports depends on the out-degree of the current neuron.
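The wave filter and state updater described above can be sketched in a few lines of Python. The classes `Wave` and `Neuron` and the method `accept` are hypothetical names introduced for illustration, and storing one recorded wave per label in a dictionary is an assumption about how the recorded state could be kept; the paper does not prescribe a data structure.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Tuple


@dataclass(frozen=True)
class Wave:
    path: Tuple[str, ...]   # P: the route travelled so far
    arrival: float          # A: arrival time at the receiving neuron
    label: str              # L: label carried by the wave


@dataclass
class Neuron:
    name: str
    labels: FrozenSet[str]                                    # L_i
    recorded: Dict[str, Wave] = field(default_factory=dict)   # first wave per label

    def accept(self, wave: Wave, constrained: FrozenSet[str]) -> bool:
        """Wave filter followed by state update; True if the wave was recorded."""
        if wave.label not in self.labels:      # neuron cannot process this type
            return False
        if wave.label not in constrained:      # violates the constrained label set
            return False
        if wave.label in self.recorded:        # this type was already received
            return False
        self.recorded[wave.label] = wave       # keep only the earliest arrival
        return True
```

A neuron with labels {a, b} under the constraint {a} records the first wave labelled a and rejects every later wave with that label, as well as any wave labelled b or c.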

WDNN algorithm

The underlying idea of using WDNN to solve the LTSRQ follows these mechanisms: (1) initialize all neurons and activate the root neuron; (2) at each time step, all non-root neurons receive auto waves and update their states; (3) at each time step, all activated neurons generate auto waves and send them to their successor neurons; (4) the shortest path is determined by the wave that arrives at the destination neuron earliest and satisfies the label constraint Lc. Note that a non-root neuron is activated when its wave receiver receives one or more waves. The detailed procedures of the WDNN algorithm are summarized in Algorithms 1–3. All symbols used in Algorithms 1–3 are summarized in Table 1.
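The four mechanisms can be put together in a small sequential Python sketch. It is not the authors' C# implementation: the function `wdnn`, the `in_flight` list and the toy network are hypothetical, the neurons are updated one after another rather than in parallel, and the sketch assumes positive arc lengths that are multiples of Δt so that every wave arrives exactly on a time step.

```python
from typing import Callable, Dict, FrozenSet, List, Optional, Sequence, Tuple

Path = Tuple[str, ...]
ArcLen = Callable[[str, str, float], Optional[float]]  # len(TW_{i,q}(t)); None if closed


def wdnn(succ: Dict[str, Sequence[str]], labels: Dict[str, FrozenSet[str]],
         arc_len: ArcLen, s: str, d: str, ts: float, dt: float, k: float,
         lc: FrozenSet[str]) -> Optional[Tuple[float, Path]]:
    """Return (delay, path) of the earliest valid wave at d, or None within threshold k."""
    # recorded[i][label] = (arrival time, path): the first accepted wave per label.
    recorded: Dict[str, Dict[str, Tuple[float, Path]]] = {i: {} for i in labels}
    for lab in labels[s] & lc:                 # (1) activate the root neuron
        recorded[s][lab] = (ts, (s,))
    in_flight: List[Tuple[float, str, Path, str]] = []  # (arrival, target, path, label)
    t = ts
    while not recorded[d] and t - ts <= k:
        # (2) receive the waves that have arrived, earliest first, and filter them
        pending = []
        for arrival, q, path, lab in sorted(in_flight):
            if arrival > t:
                pending.append((arrival, q, path, lab))
            elif lab in labels[q] and lab in lc and lab not in recorded[q]:
                recorded[q][lab] = (arrival, path + (q,))
        in_flight = pending
        # (3) every activated neuron emits one wave per recorded label
        for i, state in recorded.items():
            for lab, (_, path) in state.items():
                for q in succ.get(i, ()):
                    length = arc_len(i, q, t)
                    if length is not None:
                        in_flight.append((t + length, q, path, lab))
        t += dt
    if not recorded[d]:
        return None
    arrival, path = min(recorded[d].values())  # (4) the earliest valid wave wins
    return arrival - ts, path


# Toy network: the arc a -> d is congested before t = 3.
succ = {"s": ["a", "b"], "a": ["d"], "b": ["d"]}
labels = {"s": frozenset("xy"), "a": frozenset("x"),
          "b": frozenset("y"), "d": frozenset("xy")}


def arc_len(i: str, q: str, t: float) -> Optional[float]:
    if (i, q) == ("a", "d"):
        return 9 if t < 3 else 1
    return {("s", "a"): 1, ("s", "b"): 2, ("b", "d"): 1}[(i, q)]
```

Because an activated neuron keeps emitting at every step, a wave that departs later can overtake one that departed earlier on a congested arc; in the toy network the route through `a` is only competitive because the neuron effectively waits until t = 3 before the fast wave leaves.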

Algorithm 1

WDNN

Input: V, E, L, s, d, ts, Δt, k, Lc;

Output: report label-constrained shortest route;

1: t = ts; /* Initialize the neuron timer. */

2: initialize each neuron by using INA;

3: while $L^r_d = \emptyset$ and t − ts ≤ k do

4: update each neuron by using UNA;

5: t = t + Δt; /* Iteratively update the neuron timer. */

6: end while

7: report the shortest route $P^t_d$.

Algorithm 2

Initializing neuron algorithm (INA)

Input: i, s, t;

Output: $P^r_i$, $A^r_i$, $L^r_i$;

1: if (i = s) then /* Initialize the root neuron. */

2: set $P^r_i = P^r_i \leftarrow i$;

3: set $A^r_i = A^r_i \leftarrow t$;

4: set $L^r_i = L_i$;

5: end if

6: if (i ≠ s) then /* Initialize a non-root neuron. */

7: set $P^r_i = \emptyset$;

8: set $A^r_i = \emptyset$;

9: set $L^r_i = \emptyset$;

10: end if

Algorithm 3

Updating neuron algorithm (UNA)

Input: i, Li, Lc, $Y^t_{g,i}$, t, $P^r_i$, $A^r_i$, $L^r_i$; /* $g \in V^P_i$. */

Output: $Y^t_{i,q}$; /* $q \in V^F_i$. */

1: for g ∈ $V^P_i$ do /* Receive waves sent by precursor neurons. */

2: if $Y^t_{g,i} \neq \emptyset$ then

3: set $P^t_{g,i} = P^t_{g,i} \in Y^t_{g,i}$;

4: set $A^t_{g,i} = A^t_{g,i} \in Y^t_{g,i}$;

5: set $L^t_{g,i} = L^t_{g,i} \in Y^t_{g,i}$;

6: else /* No wave received, set the receiver to its initial value. */

7: set $P^t_{g,i} = \emptyset$;

8: set $A^t_{g,i} = M$;

9: set $L^t_{g,i} = \emptyset$;

10: end if

11: if $L^t_{g,i} \in L_i$ and $L^t_{g,i} \in L_c$ then /* Determine whether the received wave satisfies the label constraints of the current neuron and whether this type of wave has been received. */

12: if $L^t_{g,i} \notin L^r_i$ then

13: $P^r_i = P^r_i \leftarrow P^t_{g,i}$;

14: $A^r_i = A^r_i \leftarrow A^t_{g,i}$;

15: $L^r_i = L^r_i \leftarrow L^t_{g,i}$;

16: end if

17: end if

18: end for

19: for q ∈ $V^F_i$ do /* Send waves to each succeeding neuron. */

20: set $A^t_{i,q} = t + len(TW_{i,q}(t))$;

21: set $P^t_{i,q} = p_j \leftarrow \{i, t\}$;

22: set $L^t_{i,q} = l_j$;

23: set $Y^t_{i,q} = \{P^t_{i,q}, A^t_{i,q}, L^t_{i,q}\}$;

24: end for

Time complexity of WDNN

Theorem 1. Let n be the number of nodes on the time-varying network, the m is the number of all arcs, the be the number of the neuron i’s input arcs, the be the number of the neuron i’s output arcs, k be the arrival time of destination node on output path, and Δt is the step (unit) of iteration. The time complexity of WDNN is equal to .

Proof: The WDNN algorithm consists of four main steps (step 1: line 1; step 2: line 2; step 3: lines 3–6; step 4: line 7). The time complexity of steps 1 and 4 is O(1) because they contain no loop, iteration or recursion. Steps 2 and 3 are relatively complicated operations and are analyzed in detail as follows:

As to step 2 in WDNN, all neurons need to call INA for initialization. The number of INA calls depends on the number of neurons in the neural network. Furthermore, INA does not contain a loop. Thus, the time complexity of this step is O(n).

Step 3 in WDNN is a loop whose number of iterations is limited by k. In each iteration, every neuron runs UNA to update itself, so the number of UNA calls depends on the number of neurons in the neural network. In UNA, each neuron receives waves from its precursors and sends waves to its successors, so its complexity is determined by . Therefore, the time complexity of this step is equal to .

In summary, the time complexity of the WDNN algorithm is equal to: (6)

It is worth noting that WDNN is a parallel algorithm, all neurons on the neural network are calculated in parallel. Therefore, in an ideal situation, the number of neurons does not affect the algorithm execution speed, the theoretical time complexity of WDNN algorithm is equal to .
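The counting argument in the proof can be retraced in a few lines. The symbols $d_i^{\text{in}}$ and $d_i^{\text{out}}$ (in-degree and out-degree of neuron i) are our own notation rather than the authors', and the sketch assumes that one call of UNA costs time proportional to the number of input and output arcs of the neuron and that the loop in Algorithm 1 runs about $k/\Delta t$ times. Under those assumptions, and using the fact that every arc is counted once as an output arc and once as an input arc:

```latex
\begin{align*}
T_{\text{INA, all neurons}} &= O(n), \\
T_{\text{UNA}}(i) &= O\big(d_i^{\text{in}} + d_i^{\text{out}}\big), \\
\sum_{i=1}^{n} \big(d_i^{\text{in}} + d_i^{\text{out}}\big) &= 2m, \\
T_{\text{sequential}} &= O\Big(n + \frac{k}{\Delta t}\, m\Big), \\
T_{\text{parallel}} &= O\Big(\frac{k}{\Delta t}\, \max_{i} \big(d_i^{\text{in}} + d_i^{\text{out}}\big)\Big).
\end{align*}
```

The last line reflects the ideal case in which all neurons are updated simultaneously, so one iteration costs only as much as the busiest neuron; it should be read as an illustration of the argument rather than as the stated bound of Theorem 1.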

Correctness of WDNN

Theorem 2. The first auto-wave that arrives at the destination neuron and satisfies the label constraint determines the shortest route from root neuron to destination neuron.

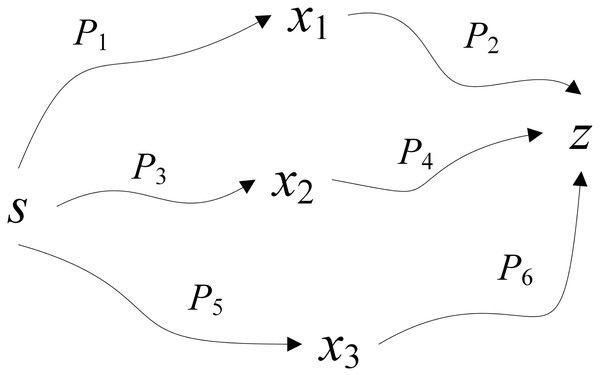

Proof: Let x1, x2, and x3 be the precursor neurons of neuron z (see Fig. 2). Suppose the first auto-wave received by neuron z is sent by neuron x1, with delay TP1 + wx1 + TP2 and label set {a, b, c}; the second is sent by neuron x2, with delay TP3 + wx2 + TP4 and label set {a, b}; and the third is sent by neuron x3, with delay TP5 + wx3 + TP6 and label set {a, b}. The destination neuron z stops receiving auto-waves once it has received one that meets the label threshold. Therefore, if the second auto-wave is accepted, the first must have been rejected, which means that the label {c} is not in the constrained label set. If instead the third auto-wave were accepted as the shortest route, then TP3 + wx2 + TP4 > TP5 + wx3 + TP6 would have to hold, which contradicts the algorithmic process, because the second auto-wave arrived earlier. In summary, Theorem 2 is correct.

Figure 2: Proof of Theorem 2.

Experimental Results and Discussion

To evaluate the proposed algorithm, WDNN is compared with the well-known algorithms of Yang & Zhou (2017) (Yang), Veneti, Konstantopoulos & Pantziou (2015) (Veneti), and Tu et al. (2020) (Tu) on 120 randomly generated labeled time-varying networks with different numbers of nodes, produced with the public network generation tool Random, and on two public real datasets, the neural network (N-Net) and the Internet network (I-Net) (https://www.diag.uniroma1.it/challenge9/download.shtml). The structure of each dataset is shown in Table 2. The space complexities of WDNN, Veneti, Yang and Tu are , , O(n) and O(n⋅e), respectively; their time complexities are , , O(n²) and O(n²), respectively.

| Dataset | Number of nodes | Number of edges | Number of time windows | Length of edge |
|---|---|---|---|---|
| 50 | 50 | 400 | [1,5] | [1,20] |
| 100 | 100 | 800 | [1,5] | [1,20] |
| 150 | 150 | 1,200 | [1,5] | [1,20] |
| 200 | 200 | 1,600 | [1,5] | [1,20] |
| N-Net | 4,941 | 13,203 | [1,5] | [1,20] |
| I-Net | 22,962 | 96,872 | [1,5] | [1,20] |

| Algorithm | 50 nodes | 100 nodes | 150 nodes | 200 nodes |
|---|---|---|---|---|
| Veneti | 0.383 | 0.131 | 0.283 | 0.237 |
| Yang | 0.191 | 0.219 | 0.123 | 0.201 |
| Tu | 0.019 | 0.025 | 0.026 | 0.027 |
| WDNN | 0.000 | 0.000 | 0.000 | 0.000 |

The performance of the proposed algorithm is evaluated from two aspects: the number of nodes and the number of time windows. Without loss of generality, each experiment is repeated N = 20 times, and the source and destination nodes are randomly selected in each repetition. All programs and instances run on a machine with an Intel Xeon(R) Gold 5218R CPU and 64 GB of RAM, and all programs are implemented in C#.

For convenience, the relative error (RE) is used as an index to compare the performance of Yang, Veneti, Tu, and WDNN. RE is calculated as follows: (7)

where the is the calculated value of ith experiment, and the is the optimal value of ith experiment.
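A relative error of this kind can be computed as in the sketch below. The function name `relative_error` is hypothetical, and averaging the per-experiment ratio |calculated − optimal| / optimal over the N repetitions is an assumption about the form of the index, chosen because it yields 0 exactly when every run returns the optimal value.

```python
from typing import Sequence


def relative_error(calculated: Sequence[float], optimal: Sequence[float]) -> float:
    """Mean of |calculated_i - optimal_i| / optimal_i over all experiments (assumed form)."""
    if len(calculated) != len(optimal) or not calculated:
        raise ValueError("need one optimal value per calculated value")
    total = 0.0
    for c, o in zip(calculated, optimal):
        if o <= 0:
            raise ValueError("optimal route lengths must be positive")
        total += abs(c - o) / o
    return total / len(calculated)
```

For example, two experiments with calculated lengths 10 and 12 against an optimum of 10 in both cases give per-run errors of 0 and 0.2, and therefore a mean relative error of 0.1.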

Effect of different nodes

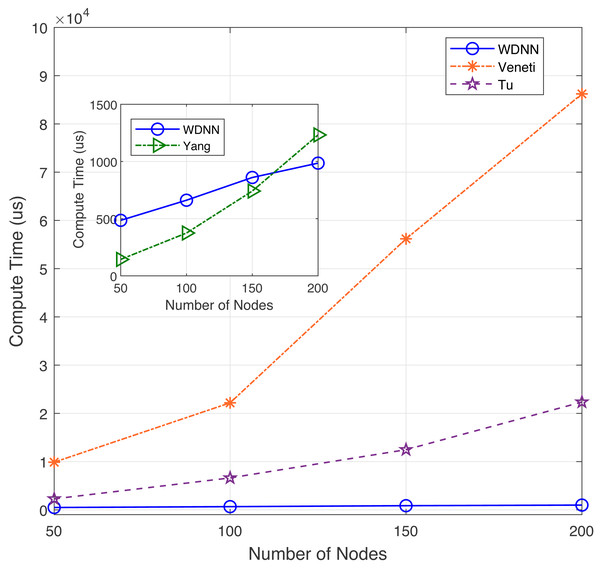

In this experiment, the performance of the proposed algorithm is evaluated by varying the number of nodes from 50 to 200. Table 3 shows the effectiveness of the proposed algorithm and existing algorithms in solving 40 randomly generated labeled time-varying networks with different numbers of nodes. As shown in Table 3, the proposed algorithm obtains the optimal solution in every case, while the Yang algorithm has a relative error between 0.123 and 0.219, the Veneti algorithm has a relative error between 0.131 and 0.383, and the relative error of the Tu algorithm increases from 0.019 to 0.027. The number of nodes has no systematic effect on the accuracy of the Veneti, Yang and WDNN algorithms. This is because a change in the number of nodes only changes the network size, while the node degrees and edge lengths do not change significantly. However, as the network size increases, the relative error of the Tu algorithm shows an upward trend, which suggests that Tu is not well suited to label-constrained shortest route queries on large time-varying networks. Furthermore, the proposed algorithm obtains the optimal solution on labeled time-varying networks of different sizes because the neural network maps each node to a neuron, so a larger network only adds neurons and does not affect the accuracy of the algorithm. The compute times for different numbers of nodes are shown in Fig. 3.

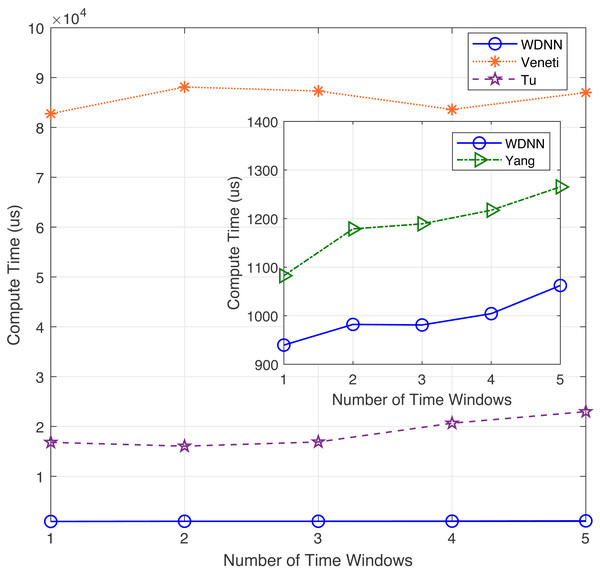

Figure 3: The compute time with different nodes.

In terms of computational time, although the proposed algorithm is slightly slower than the Yang algorithm when the network is small (between 50 and 150 nodes), the response speed of WDNN is better than that of the Yang, Veneti and Tu algorithms when the network is large. This is because the Yang algorithm adopts a heuristic search mechanism similar to the Dijkstra algorithm, which does not require synchronization in the time dimension. On large-scale networks, the advantages of the proposed algorithm emerge from the parallel computation of the neurons. The Veneti and Tu algorithms require a lot of computation time because they need to handle a large number of labels. In summary, although the proposed algorithm is slightly slower than the Yang algorithm on smaller networks, it has better solution accuracy. On larger networks, the proposed WDNN outperforms existing algorithms in terms of both response speed and solution accuracy.

Effect of different time windows

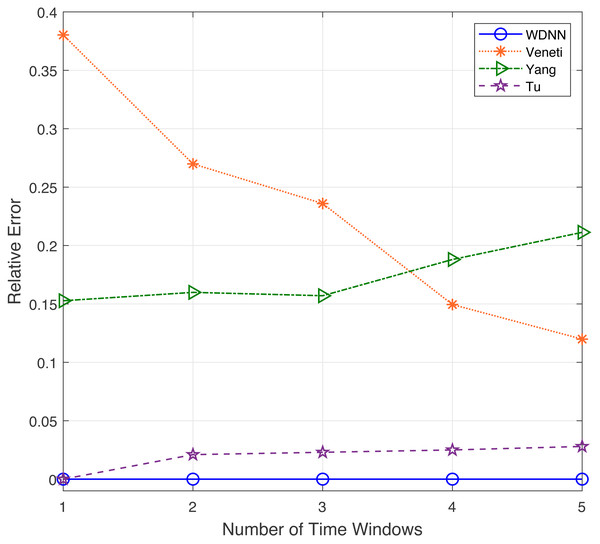

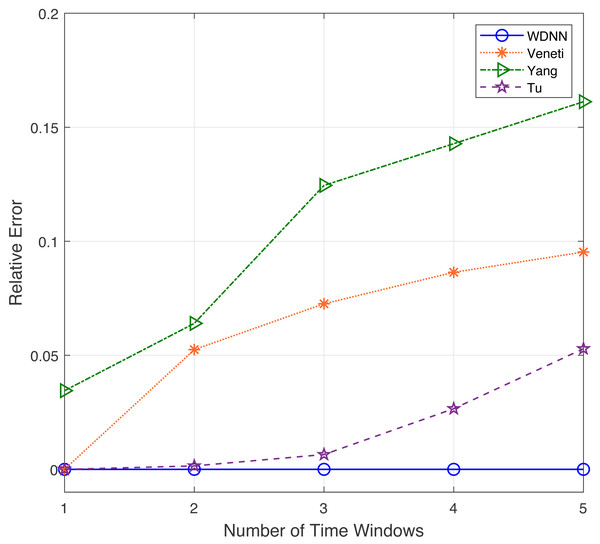

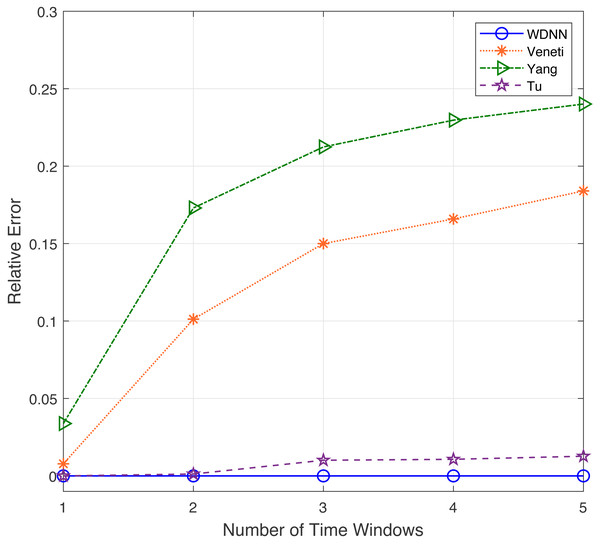

In this experiment, the performance of the proposed algorithm is evaluated by varying the number of time windows from 1 to 5. Table 4 shows the effectiveness of the proposed algorithm and the existing algorithms in solving 50 randomly generated label time-varying networks with different numbers of time windows. As shown in Table 4, the proposed algorithm obtains the optimal solution in every case, whereas the Yang algorithm has a relative error ratio of 0.15 to 0.22, the Veneti algorithm has a relative error ratio of 0.12 to 0.38, and the Tu algorithm has a relative error ratio of 0 to 0.028. Figure 4 shows the relative error trend of the four algorithms when the number of time windows for each arc changes from 1 to 5. From Fig. 4, it can be seen that the proposed algorithm obtains the optimal solution on time-varying networks regardless of the number of time windows. The relative error of the Yang and Tu algorithms tends to increase as the number of time windows increases. Although the relative error of the Veneti algorithm shows a decreasing trend, it still exceeds 0.1 at 5 time windows. Figure 5 shows the computation time trend of the proposed WDNN and the Yang, Veneti and Tu algorithms on networks with a varying number of time windows. As shown in Fig. 5, the computation times of the WDNN, Yang and Tu algorithms all show an upward trend as the number of time windows increases. This is because, as the number of time windows increases, an algorithm needs to spend more time selecting a time window. The query time of the Veneti algorithm does not show an upward trend because the time spent selecting a time window is small relative to the time the Veneti algorithm spends searching for paths, so the increase is not visible. Furthermore, the computation time of the proposed algorithm grows more slowly with the number of time windows than that of the Yang and Tu algorithms, while the computation time of the Veneti algorithm is one order of magnitude higher than that of the proposed algorithm. With more time windows, the proposed algorithm still has the best performance. In summary, the proposed algorithm performs better than the existing algorithms across the tested numbers of time windows.
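The relative error ratios quoted above can be read as an average gap between the route length an algorithm returns and the optimal route length. The following is a minimal sketch of that calculation, assuming the usual definition (found minus optimal, divided by optimal) averaged over the test networks; the function names and the sample lengths are hypothetical and are not taken from the experiments.

```python
from statistics import mean
from typing import Sequence


def relative_error(found_length: float, optimal_length: float) -> float:
    """Relative gap between a returned route length and the optimal one.

    Assumed definition: (found - optimal) / optimal.
    """
    if optimal_length <= 0:
        raise ValueError("optimal_length must be positive")
    return (found_length - optimal_length) / optimal_length


def mean_relative_error(found: Sequence[float], optimal: Sequence[float]) -> float:
    """Average the per-network relative error over a batch of test networks."""
    if len(found) != len(optimal):
        raise ValueError("found and optimal must have the same length")
    return mean(relative_error(f, o) for f, o in zip(found, optimal))


# Hypothetical route lengths on three networks (illustrative only).
optimal_lengths = [10.0, 20.0, 40.0]
heuristic_lengths = [12.0, 20.0, 44.0]
print(round(mean_relative_error(heuristic_lengths, optimal_lengths), 3))
```

Under this definition an algorithm that always returns the optimal route has a relative error of exactly 0, which is how the WDNN row of the results should be read.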

Table 4: The relative error with different time windows.

| Algorithm | 1 window | 2 windows | 3 windows | 4 windows | 5 windows |
|---|---|---|---|---|---|
| Veneti | 0.380 | 0.270 | 0.236 | 0.150 | 0.120 |
| Yang | 0.158 | 0.160 | 0.157 | 0.188 | 0.211 |
| Tu | 0.000 | 0.021 | 0.023 | 0.025 | 0.028 |
| WDNN | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |

Figure 4: The relative error with different time windows.

Figure 5: The compute time with different time windows.

Experimental results on large-scale networks

This experiment evaluates the performance of the proposed algorithm on large-scale real-world networks. Tables 5 and 6 show the response times of the proposed WDNN algorithm and the Veneti, Yang, and Tu algorithms on the real networks N-Net and I-Net, respectively, when solving the time-varying label-constrained shortest route problem on subnets with different numbers of time windows. Figs. 6 and 7 show the corresponding relative errors of the four algorithms on these two real networks. Table 5 shows that in the N-Net network, with approximately 4,000 nodes, the proposed algorithm is significantly faster than the Veneti and Yang algorithms and about twice as fast as the Tu algorithm. Table 6 shows that in the I-Net network, with approximately 20,000 nodes, the proposed algorithm is significantly faster than the Veneti, Yang, and Tu algorithms. This result indicates that the proposed algorithm is better suited to label-constrained time-varying shortest route query problems on large-scale networks. Figs. 6 and 7 together show that the accuracy of the proposed WDNN does not decrease as the number of time windows increases, and that it always finds the optimal solution. This is because WDNN can flexibly choose the most suitable departure time based on the time windows to ensure the earliest arrival at the next node. The other algorithms lack a time window selection mechanism, so their query error shows an upward trend as the number of time windows increases.
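The benefit of selecting a departure time from the time windows can be illustrated with a small sketch. It is not the WDNN neuron itself, only the selection rule under assumed semantics: each arc carries windows of the hypothetical form `(open, close, travel_time)`, a wave may wait at a node until a window opens, and it must depart no later than the window closes.

```python
from typing import List, Optional, Tuple

# Hypothetical window format: (open, close, travel_time) for one arc.
Window = Tuple[float, float, float]


def earliest_arrival(t_ready: float, windows: List[Window]) -> Optional[Tuple[float, float]]:
    """Return the (departure, arrival) pair with the smallest arrival time.

    t_ready is the time at which the wave is ready to leave the node.
    Returns None when every window has already closed.
    """
    best: Optional[Tuple[float, float]] = None
    for open_t, close_t, travel in windows:
        depart = max(t_ready, open_t)  # wait for the window to open if needed
        if depart > close_t:           # this window can no longer be used
            continue
        arrival = depart + travel
        if best is None or arrival < best[1]:
            best = (depart, arrival)
    return best


# Illustrative windows: leaving at once (t=4) takes 10 time units,
# while waiting until t=6 takes only 3.
windows = [(0, 5, 10), (6, 9, 3), (12, 20, 1)]
print(earliest_arrival(4, windows))  # (6, 9)
```

In this example a rule that always departs in the first open window would arrive at time 14, whereas comparing all usable windows gives an arrival at time 9. A gap of this kind is one way the error of a method without window selection could grow as more windows are added.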

Table 5: The response time with different time windows for the N-Net dataset.

| Algorithm | 1 window | 2 windows | 3 windows | 4 windows | 5 windows |
|---|---|---|---|---|---|
| Veneti | 4011.81 | 4567.63 | 4382.80 | 4534.41 | 4582.48 |
| Yang | 69259.23 | 73693.86 | 62957.29 | 71008.40 | 65617.65 |
| Tu | 334.51 | 416.62 | 353.41 | 391.93 | 360.54 |
| WDNN | 172.59 | 169.16 | 158.19 | 161.26 | 162.71 |

Table 6: The response time with different time windows for the I-Net dataset.

| Algorithm | 1 window | 2 windows | 3 windows | 4 windows | 5 windows |
|---|---|---|---|---|---|
| Veneti | 26789.26 | 27723.212 | 27076.32 | 26779.87 | 25608.23 |
| Yang | 22011.51 | 22287.60 | 21343.86 | 20870.71 | 20851.77 |
| Tu | 28820.42 | 30466.24 | 26013.79 | 22377.93 | 23775.95 |
| WDNN | 1516.66 | 1424.55 | 1585.39 | 1431.50 | 1250.00 |
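The speed comparisons drawn from the N-Net and I-Net response times can be recomputed directly from the reported values. The sketch below copies the numbers from the two tables and divides each baseline time by the WDNN time for the same number of time windows; the dictionary layout and the `speedups` helper are illustrative choices, not part of the original study.

```python
from typing import Dict, List

# Response times copied from the N-Net and I-Net tables (1 to 5 time windows).
N_NET: Dict[str, List[float]] = {
    "Veneti": [4011.81, 4567.63, 4382.80, 4534.41, 4582.48],
    "Yang": [69259.23, 73693.86, 62957.29, 71008.40, 65617.65],
    "Tu": [334.51, 416.62, 353.41, 391.93, 360.54],
    "WDNN": [172.59, 169.16, 158.19, 161.26, 162.71],
}
I_NET: Dict[str, List[float]] = {
    "Veneti": [26789.26, 27723.212, 27076.32, 26779.87, 25608.23],
    "Yang": [22011.51, 22287.60, 21343.86, 20870.71, 20851.77],
    "Tu": [28820.42, 30466.24, 26013.79, 22377.93, 23775.95],
    "WDNN": [1516.66, 1424.55, 1585.39, 1431.50, 1250.00],
}


def speedups(times: Dict[str, List[float]], reference: str = "WDNN") -> Dict[str, List[float]]:
    """Ratio of each baseline's response time to the reference algorithm's time."""
    ref = times[reference]
    return {
        alg: [t / r for t, r in zip(values, ref)]
        for alg, values in times.items()
        if alg != reference
    }


for name, table in (("N-Net", N_NET), ("I-Net", I_NET)):
    for alg, ratios in speedups(table).items():
        print(f"{name} {alg}: {min(ratios):.1f}x to {max(ratios):.1f}x slower than WDNN")
```

On these figures the Tu-to-WDNN ratio on N-Net stays between roughly 1.9 and 2.5, which matches the description of WDNN as about twice as fast, and every baseline on I-Net is more than 13 times slower than WDNN.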

Conclusion

In this study, a framework for solving the label-constrained time-varying shortest route query (LTSRQ) problem on time-varying networks is proposed using a wave delay neural network (WDNN). WDNN is composed of self-designed seven-layer auto-wave neurons, which enables parallel computing. Unlike other intelligent or neural network algorithms, the proposed neural network operates without the need for training. This avoids the slow response associated with training, reduces the impact of network size (number of nodes) on model performance, and considerably speeds up the solution process on complex networks. In comparison to existing algorithms, the proposed WDNN is able to obtain the global optimal solution and provides interpretability. Experiments conducted on 120 time-varying networks with varying numbers of nodes and time windows, randomly generated using the public network generation tool Random, as well as on the real networks N-Net and I-Net, show that WDNN outperforms existing algorithms such as Veneti, Yang, and Tu. This offers substantial evidence for the effectiveness of WDNN in addressing the LTSRQ problem.

Figure 6: The relative error with different time windows for the N-Net dataset.

Figure 7: The relative error with different time windows for the I-Net dataset.

In practical applications, networks are often characterized by multiple uncertain properties, and the proposed WDNN does not yet address the label-constrained shortest route query problem on time-varying networks in uncertain environments. In future work, attention should be directed towards improving the structure of the neural network or its neurons to enhance the adaptability of the algorithm in uncertain and time-varying environments, including aspects of fuzziness and randomness. When enhancing neurons, the primary focus should be on refining their wave filters, state updates, and wave generators. Wave filters play a crucial role in determining the efficiency of pathfinding, while state updates and wave generators influence its accuracy. For fuzzy time-varying environments, the addition of fuzzy simulation units is recommended to handle fuzzy edge lengths. For randomly time-varying environments, incorporating a random simulation unit is advisable to calculate the probability distribution of the path. These enhancements would contribute to the overall robustness and applicability of the proposed WDNN in handling uncertainties within network environments.