Assessment of YouTube videos on post-dural puncture headache: a cross-sectional study

- Academic Editor: Markus Hobert

- Subject Areas: Anesthesiology and Pain Management, Neurology, Science and Medical Education, Human-Computer Interaction, Healthcare Services

- Keywords: Post-dural puncture headache, Consumer health information, Digital technology, Social media, YouTube

- Copyright: © 2025 İlhan and Evran

- Licence: This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits using, remixing, and building upon the work non-commercially, as long as it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ) and either DOI or URL of the article must be cited.

- Cite this article: 2025. Assessment of YouTube videos on post-dural puncture headache: a cross-sectional study. PeerJ 13:e19151 https://doi.org/10.7717/peerj.19151

Abstract

Background

Post-dural puncture headache (PDPH) is a common complication of central neuroaxis anesthesia or analgesia, causing severe headaches. YouTube is widely used for health information, but the reliability and quality of PDPH-related content are unclear. This study evaluates the content adequacy, reliability, and quality of YouTube videos on PDPH.

Methods

This cross-sectional study analyzed English-language YouTube videos on PDPH with good audiovisual quality. Two independent reviewers assessed the videos using the DISCERN instrument, the Journal of the American Medical Association (JAMA) benchmark criteria, and the Global Quality Scale (GQS). Correlations between video characteristics and their reliability, content adequacy, and quality scores were examined.

Results

Out of 71 videos, 42.3% were uploaded by health-related websites, 36.6% by physicians, and 21.1% by patients. Strong correlations were found between DISCERN, JAMA, and GQS scores (p < 0.001). Videos from physicians and health-related websites had significantly higher scores than those from patients (p < 0.001). Video duration correlated positively with the scores (p < 0.05), whereas view, like, dislike, and comment counts did not (p > 0.05).

Conclusion

YouTube videos on PDPH uploaded by health-related websites or physicians are more reliable, adequate, and higher in quality than those uploaded by patients. Source credibility is crucial for evaluating medical information on YouTube.

Introduction

Post-dural puncture headache (PDPH) is a prevalent and significant complication associated with central neuroaxis anesthesia or analgesia, characterized by dural breach, cerebrospinal fluid leakage, and subsequent postural headache development (Alatni, Alsamani & Alqefari, 2024; Aniceto et al., 2023; Bishop et al., 2023). Its incidence varies depending on the demographic and clinical characteristics of the patients exposed (Aniceto et al., 2023; Bishop et al., 2023). The etiology of PDPH has yet to be fully clarified (Aniceto et al., 2023; Thon et al., 2024). Typically, PDPH presents postural features that are exacerbated when sitting or standing and alleviated in the supine position. Although PDPH often resolves spontaneously, specific pharmacological or procedural interventions may be required in certain patients (Aniceto et al., 2023). Additionally, adjunctive measures such as bed rest and fluid and caffeine intake are often used in treating PDPH despite the lack of solid evidence showing their benefits (Alatni, Alsamani & Alqefari, 2024; Aniceto et al., 2023; Bishop et al., 2023; Thon et al., 2024).

In the age of digital media, social media platforms such as YouTube have become increasingly popular sources of medical information for people seeking information about their health conditions (Do et al., 2023). Despite lacking peer review, YouTube’s health-related content is attracting the attention of an increasingly larger audience (Chan et al., 2021; Erkin, Hanci & Ozduran, 2023; Lee et al., 2020; Saffi et al., 2020). However, given that this content consists of a mix of expert-contributed material and potentially contradictory or misleading information, concerns have been raised regarding the reliability and quality of such non-peer-reviewed content (Erkin, Hanci & Ozduran, 2023; Madathil et al., 2015; Saffi et al., 2020; Yildizgoren & Bagcier, 2023).

Infodemiology, defined as the study of the dissemination and determinants of medical information through electronic media, has gained prominence in recent years (Goadsby et al., 2023). For many years, “headache” has consistently been among the most searched terms on Google, reflecting the widespread interest in headache-related content on social media platforms (Do et al., 2023; Goadsby et al., 2023). Incorporating unstructured, self-reported data from social media into a scientific framework and relevant medical insights requires methodological rigor (Goadsby et al., 2023). In parallel with this requirement, the number of studies assessing the quality of health-related information on YouTube has risen (Yildizgoren & Bagcier, 2023). Although there are some studies that assessed the content and quality of YouTube videos on migraine and cluster headaches, none of these addressed PDPH (Chaudhry et al., 2022; Gupta et al., 2023; Reina-Varona et al., 2022; Saffi et al., 2020).

The widespread use of YouTube as a health information source necessitates a thorough evaluation of PDPH-related content quality, particularly given PDPH’s significant impact on patient well-being and healthcare resource utilization. Despite the growing body of literature examining medical information on social media platforms, there remains a critical gap in our understanding of the content quality and reliability of PDPH videos on YouTube. This gap is particularly concerning given that PDPH can significantly impact patient recovery and quality of life, and thus, accurate and reliable information is crucial for patient education and management.

We hypothesized that higher audience engagement metrics (views, likes, comments) would positively correlate with content quality and reliability scores, representing an important indicator for identifying high-quality educational content on PDPH.

The primary objective of our study is to comprehensively evaluate the content adequacy, reliability, and quality of YouTube videos addressing PDPH, specifically by comparing videos uploaded by healthcare professionals, health-related websites, and patients.

To this end, this study was carried out to assess the quality and reliability of YouTube videos on PDPH and identify the sources that provide high-quality and dependable content.

Materials & Methods

Study design

This study was designed as a cross-sectional observational study of YouTube videos on PDPH. The study protocol was approved by the local ethics committee (approved on December 26, 2023, with approval number 21). A video-based search was performed on the YouTube online video-sharing platform (https://www.youtube.com/) using the English keywords “post-puncture”, “dura”, “headache”, and “post-dural puncture headache” on January 20, 2024. The keywords were strategically chosen to encompass a broad spectrum of information, including patient characteristics, procedural features, preventive measures, intervals for diagnostic suspicion, conservative and pharmacological treatment modalities, harmful maneuvers, follow-up details, long-term complications, and use of epidural patches.

Content analysis

Two independent reviewers identified PDPH-related videos on the YouTube website, ranked by popularity. To avoid bias due to search history and cookies, the searches were run in Google Incognito mode (a private browsing window). Unaware of each other’s evaluation, the two authors independently screened the titles and video descriptions for all videos.

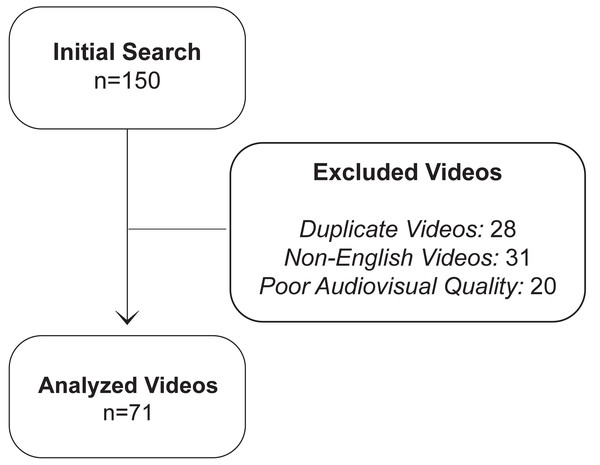

The video selection process is detailed in Fig. 1. Our initial search revealed 150 consecutive videos about PDPH. Of these videos, duplicate videos (n = 28), those published in any language other than English (n = 31), and those lacking the audiovisual quality needed for accurate assessment (n = 20) were excluded from the study. In the end, the study material consisted of 71 videos.

Figure 1: Flow diagram of video selection process.

The flowchart depicts the video selection process, starting with 150 initial videos and resulting in 71 analyzed videos after excluding duplicates (n = 28), non-English videos (n = 31), and those with poor audiovisual quality (n = 20).

Content analysis revealed that 54.9% of videos (n = 39) provided comprehensive data on disease pathophysiology associated with patient- and procedure-related factors, while 97.2% (n = 69) covered the natural course of PDPH. Management strategies were discussed in 93.0% of the videos (n = 66), 64 of which (97.0%) addressed both conservative (hydration, bed rest, caffeine intake, and analgesic medications) and interventional approaches, including epidural blood patch. Notably, 13 videos (18.3%) provided complete information across all evaluated domains.

Videos’ descriptive characteristics

Videos’ descriptive characteristics, i.e., title, publication date, the number of days since upload, video source, country/continent in which the video was uploaded, and video duration (in seconds), were determined and recorded. Video sources were categorized as health-related websites, physicians, and patients.

Video source definitions

We followed a structured classification protocol for video source categorization. Accordingly, health-related websites were defined as institutional channels operated by accredited healthcare organizations, medical schools, academic hospitals, or established medical education platforms with verified institutional credentials. These included university medical centers, professional medical societies, and recognized healthcare education organizations with institutional verification badges on their YouTube channels.

Physician-sourced videos were identified based on explicitly declared medical credentials in the channel description or video content, including medical license numbers, institutional affiliations, and professional certifications. Only videos from physicians with verifiable professional credentials were included in this category.

Patient-generated content was identified through explicit self-identification as individuals sharing personal PDPH experiences. In cases where the source’s identity was ambiguous, we conducted additional verification through channel description analysis, content review, and assessment of associated social media profiles. To maintain classification integrity, videos with unclear sources or overlapping characteristics were reviewed independently by both researchers, and consensus was reached in case of disagreement between researchers. To address potential category overlap, channels operated by physicians at healthcare institutions were classified based on their primary identification in video presentations and channel branding. In the case of videos that could potentially be included in more than one category, classification was determined considering the primary content creator and the channel’s stated purpose.

The numbers of views, likes, dislikes, and comments were also recorded for each video. Based on these data, the video power index (VPI) was calculated for each video using the following formula: VPI = (like ratio × view ratio)/100, where the like ratio is [likes/(likes + dislikes)] × 100 and the view ratio is the number of views divided by the number of days since upload.
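The VPI calculation can be sketched in a few lines of Python. This is only an illustration, assuming the divisor in the formula is 100 (the usual definition of the VPI); the function name, the handling of videos with no likes or dislikes, and the input values are hypothetical and are not taken from the study data.

```python
def video_power_index(likes: int, dislikes: int, views: int, days_since_upload: int) -> float:
    """VPI = like ratio (%) x view ratio (views per day) / 100."""
    if days_since_upload <= 0:
        raise ValueError("days_since_upload must be positive")
    reactions = likes + dislikes
    # Assumption (not stated in the text): a video with no likes and no
    # dislikes is given a like ratio of 0 to avoid dividing by zero.
    like_ratio = 100.0 * likes / reactions if reactions else 0.0
    view_ratio = views / days_since_upload
    return like_ratio * view_ratio / 100.0


# Hypothetical video: 90 likes, 10 dislikes, 1000 views over 100 days.
example_vpi = video_power_index(likes=90, dislikes=10, views=1000, days_since_upload=100)
print(example_vpi)  # 9.0
```

With these made-up inputs the like ratio is 90% and the view ratio is 10 views per day, so the VPI is 9.0.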

Reliability assessment

The DISCERN instrument and the Journal of the American Medical Association (JAMA) benchmark criteria were used to assess the reliability of the health/medical information in the videos. The DISCERN tool includes 15 items, each rated on a 5-point Likert-type scale. While eight of these items assess the reliability of the videos, seven assess the quality of information on treatment choices. The total DISCERN score is calculated by adding up the points assigned to the 15 items. Total DISCERN scores between 63–75, 51–62, 39–50, 27–38, and lower than 27 are classified as excellent, good, fair, poor, and very poor and assigned 5, 4, 3, 2 points, and 1 point, respectively (Chaudhry et al., 2022; Erkin, Hanci & Ozduran, 2023; Yildizgoren & Bagcier, 2023). The higher the total DISCERN score, the higher the reliability (Chang & Park, 2021; Charnock et al., 1999; Erkin, Hanci & Ozduran, 2023). Accordingly, the total DISCERN scores assigned higher than 3 points, 3 points, and lower than 3 points were considered to indicate high, moderate, and low reliability, respectively (Chaudhry et al., 2022).
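The DISCERN banding described above can be written as a short lookup. The sketch below follows the cut-offs as worded in this paragraph (27–38 is poor, below 27 is very poor); the tables label the lowest bands 15–27 and 28–38, so the treatment of a total of exactly 27 is an assumption. The function names are hypothetical.

```python
from typing import Tuple


def discern_category(total: int) -> Tuple[str, int]:
    """Map a DISCERN total (15-75) to its (label, points) band."""
    if not 15 <= total <= 75:
        raise ValueError("DISCERN total must lie between 15 and 75")
    if total >= 63:
        return ("excellent", 5)
    if total >= 51:
        return ("good", 4)
    if total >= 39:
        return ("fair", 3)
    if total >= 27:  # assumption: 27 falls in the "poor" band, as in the text
        return ("poor", 2)
    return ("very poor", 1)


def reliability_level(points: int) -> str:
    """Map the 1-5 points to high (>3), moderate (3), or low (<3) reliability."""
    if points > 3:
        return "high"
    if points == 3:
        return "moderate"
    return "low"


label, points = discern_category(34)
print(label, points, reliability_level(points))  # poor 2 low
```

For instance, a total of 34 falls in the poor band (2 points), which corresponds to low reliability.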

JAMA benchmark criteria have also been used to assess the accuracy and reliability of the videos. To this end, four JAMA benchmark criteria, i.e., authorship, disclosure, currency, and attribution, were assigned a score between 0 and 1. Accordingly, the total JAMA score can be between 0 and 4. The higher the JAMA score, the higher the reliability (Chang & Park, 2021; Charnock et al., 1999; Erkin, Hanci & Ozduran, 2023; Yildizgoren & Bagcier, 2023). The total JAMA scores assigned 4 points, 2 or 3 points, and 0 points or 1 point were considered to indicate completely sufficient, partially sufficient, and insufficient videos, respectively (Erkin, Hanci & Ozduran, 2023).
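As a rough illustration of the JAMA scoring, the sketch below records each of the four criteria as met or not met, sums them, and applies the cut-offs given above. Treating each criterion as a strict 0-or-1 flag is an assumption, and the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JamaAssessment:
    """One reviewer's JAMA benchmark rating of a single video."""
    authorship: bool
    attribution: bool
    disclosure: bool
    currency: bool

    def total(self) -> int:
        # Each criterion that is met contributes one point (range 0-4).
        return sum((self.authorship, self.attribution, self.disclosure, self.currency))

    def category(self) -> str:
        score = self.total()
        if score == 4:
            return "completely sufficient"
        if score >= 2:
            return "partially sufficient"
        return "insufficient"


# Hypothetical video that names its author and cites sources only.
rating = JamaAssessment(authorship=True, attribution=True, disclosure=False, currency=False)
print(rating.total(), rating.category())  # 2 partially sufficient
```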

Quality assessment

The 5-point Likert-type Global Quality Scale (GQS) was used to assess the quality of the health/medical information in the videos. Videos assigned four and five points, three points, and one and two points were considered high, medium, and low-quality videos, respectively (Chang & Park, 2021; Chaudhry et al., 2022; Duran & Kizilkan, 2021; Erkin, Hanci & Ozduran, 2023). A third reviewer made the final decision in case of a discrepancy between the two reviewers.

Statistical analysis

In this study, we evaluated the content adequacy, reliability, and quality of the health/medical information provided by the YouTube videos on PDPH. To this end, the descriptive statistics obtained from the collected data were tabulated as median and range (minimum-maximum) values for continuous variables and frequencies and percentages for categorical variables. The normal distribution characteristics of the continuous variables were analyzed using the Shapiro–Wilk, Kolmogorov–Smirnov, and Anderson-Darling tests based on sample sizes.

In comparing the categorical variables between the groups, Pearson’s chi-square test was used for 2 × 2 tables with expected cell counts of five or higher, Fisher’s exact test was used for 2 × 2 tables with expected cell counts less than five, and the Fisher–Freeman–Halton test was used for R × C tables. The Kruskal–Wallis H test was used to compare non-normally distributed continuous variables among independent groups, followed by the Dwass–Steel–Critchlow–Fligner test for pairwise comparisons. Spearman’s rho correlation coefficient was used to examine the relationships between non-normally distributed variables. Correlation coefficients (r) were reported along with their corresponding p-values.
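For readers unfamiliar with Spearman’s rho, the coefficient is the Pearson correlation of the ranks, with tied values sharing their average rank. The following is a minimal pure-Python sketch, not the Jamovi or JASP implementation used in the study; it omits the p-value, and the durations and scores are invented for illustration.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks; tied values receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho computed as the Pearson correlation of the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical durations (seconds) and DISCERN totals for five videos.
durations = [60, 120, 300, 600, 900]
discern_totals = [20, 25, 30, 45, 40]
print(spearman_rho(durations, discern_totals))  # 0.9
```

In this made-up example only the two longest videos swap rank order, which gives rho = 0.9.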

Jamovi project 2.3.28 (Jamovi, version 2.3.28.0, 2023, retrieved from https://www.jamovi.org) and JASP 0.18.3 (Jeffreys’ Amazing Statistics Program, version 0.18.3, 2024, retrieved from https://jasp-stats.org) software packages were used in the statistical analyses. Probability (p) statistics of ≤ 0.05 were deemed to indicate statistical significance.
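The Kruskal–Wallis comparison across the three video sources can also be illustrated without statistical software. The sketch below computes the tie-corrected H statistic and, for exactly three groups, the chi-square approximation to the p-value (2 degrees of freedom, for which the upper tail is exp(−H/2)). It is not the Jamovi or JASP routine used in the study, it does not include the Dwass–Steel–Critchlow–Fligner pairwise step, and the scores are invented.

```python
import math
from collections import Counter
from typing import Sequence


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Return the tie-corrected Kruskal-Wallis H statistic."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    counts = Counter(v for g in groups for v in g)
    n_total = sum(counts.values())
    # Average 1-based rank of each distinct value in the pooled sample.
    rank_of = {}
    position = 0
    for value in sorted(counts):
        ties = counts[value]
        rank_of[value] = position + (ties + 1) / 2
        position += ties
    rank_term = sum(sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12.0 / (n_total * (n_total + 1)) * rank_term - 3 * (n_total + 1)
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n_total ** 3 - n_total)
    if correction == 0:
        raise ValueError("H is undefined when all values are identical")
    return h / correction


def p_value_three_groups(h: float) -> float:
    """Chi-square approximation with 2 degrees of freedom (three groups only)."""
    return math.exp(-h / 2)


# Hypothetical scores for three sources (not the study data).
patients = [1, 2, 3]
physicians = [4, 5, 6]
websites = [7, 8, 9]
h_stat = kruskal_wallis_h([patients, physicians, websites])
print(round(h_stat, 3), round(p_value_three_groups(h_stat), 4))
```

With such small, perfectly separated groups H is about 7.2; the chi-square approximation is crude at this sample size and is shown only to make the arithmetic concrete.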

Results

The median interval between the upload and evaluation time of the 71 YouTube videos was 836 (min. 17, max. 4921) days. Real-world content was the most common content type, used in 60.6% of evaluated videos. Of the 71 videos, 42.3% were uploaded by health-related websites, 36.6% by physicians, and 21.1% by patients. The videos were most commonly sourced from the USA (n = 24, 33.8%), followed by India (n = 12, 16.9%). The median view, like, dislike, and comment counts were 1130, 26, 6, and 9, respectively. The median VPI was 1.15 (min. 0, max. 48.5). The upload and descriptive characteristics of the videos are given in Table 1.

| Variable | Category | Overall (n = 71) |
|---|---|---|
| Days since upload date† | | 836.0 [17.0–4921.0] |
| Duration in seconds† | | 530.0 [61.0–3411.0] |
| Content type‡ | Animation | 5 (7.0) |
| | Real-world | 43 (60.6) |
| | Text | 23 (32.4) |
| Video source‡ | Physicians | 26 (36.6) |
| | Health-related websites | 30 (42.3) |
| | Patients | 15 (21.1) |
| Country of origin‡ | United States of America | 24 (33.8) |
| | India | 12 (16.9) |
| | Canada | 3 (4.2) |
| | Others | 12 (16.9) |
| | Unknown | 20 (28.2) |
| View count† | | 1130.0 [2.0–63366.0] |
| Likes‡ | No | 10 (14.1) |
| | Yes | 61 (85.9) |
| Number of likes† | | 26.0 [1.0–324.0] |
| Dislikes‡ | No | 50 (70.4) |
| | Yes | 21 (29.6) |
| Number of dislikes† | | 6.0 [2.0–25.0] |
| Comments‡ | No | 28 (39.4) |
| | Yes | 43 (60.6) |
| Number of comments† | | 9.0 [1.0–129.0] |
| VPI† | | 1.15 [0.0–48.5] |

Based on the DISCERN-quality scores, 13 videos (18.3%) were of high quality. In addition, based on total DISCERN scores, 8 videos (11.3%) were classified as good (n = 4, 5.6%) or excellent (n = 4, 5.6%) (Table 2).

| Variable | Category | Overall (n = 71) |
|---|---|---|
| Part 1: DISCERN-reliability† | | 19.0 [8.0–40.0] |
| Part 2: DISCERN-treatment† | | 16.0 [7.0–35.0] |
| Part 3: DISCERN-quality† | | 2.0 [1.0–5.0] |
| Reliability categories based on DISCERN-quality‡ | Poor (score < 3) | 38 (53.5) |
| | Moderate (score 3) | 20 (28.2) |
| | High (score > 3) | 13 (18.3) |
| DISCERN-total† | | 34.0 [15.0–75.0] |
| Categories for DISCERN-total‡ | Very poor (15–27) | 17 (23.9) |
| | Poor (28–38) | 29 (40.8) |
| | Fair/medium (39–50) | 17 (23.9) |
| | Good (51–62) | 4 (5.6) |
| | Excellent (63–75) | 4 (5.6) |
| JAMA source† | | 2.0 [1.0–5.0] |
| JAMA content/quality categories‡ | Insufficient (scores 0 and 1) | 24 (33.8) |
| | Partially sufficient (scores 2 and 3) | 38 (53.5) |
| | Completely sufficient (score 4) | 9 (12.7) |
| GQS† | | 2.0 [1.0–5.0] |
| GQS categories‡ | Low (scores 1 and 2) | 37 (52.1) |
| | Intermediate (score 3) | 21 (29.6) |
| | High (scores 4 and 5) | 13 (18.3) |

The JAMA scores revealed that 53.5% and 12.7% of the videos were partially and completely sufficient, respectively, whereas GQS scores indicated that only 29.6% and 18.3% were of intermediate and high quality, respectively. The correlation coefficients between DISCERN-total and JAMA, DISCERN-total and GQS, and JAMA and GQS scores were 0.703, 0.785, and 0.745, respectively. The reliability and quality scores of the videos are given in Table 2.

There were positive correlations between video duration and content adequacy, reliability, and quality scores of the videos (p < 0.05) (Table 3). Of these correlations, the correlation coefficient between the video duration and the DISCERN-total score was 0.473, whereas the correlation coefficients between the video duration and JAMA and GQS scores were 0.278 and 0.313, respectively. Videos’ other descriptive characteristics, i.e., view, like, dislike, and comment counts, were not found to be correlated with DISCERN-total, JAMA, and GQS scores (p > 0.05). The correlations between videos’ descriptive characteristics, content adequacy, reliability, and quality scores are detailed in Table 3.

| Variable | DISCERN-total r | DISCERN-total p | JAMA source r | JAMA source p | GQS r | GQS p |
|---|---|---|---|---|---|---|
| Duration in seconds | 0.473 | **<0.001** | 0.278 | **0.019** | 0.313 | **0.008** |
| View count | −0.211 | 0.077 | −0.108 | 0.370 | −0.196 | 0.102 |
| Number of likes | −0.104 | 0.425 | −0.012 | 0.928 | −0.031 | 0.811 |
| Number of dislikes | −0.294 | 0.195 | −0.031 | 0.895 | −0.021 | 0.928 |
| Number of comments | −0.213 | 0.170 | −0.182 | 0.244 | −0.136 | 0.385 |

Notes:

JAMA, Journal of the American Medical Association benchmark criteria; GQS, Global Quality Scale.

r: Spearman’s rho coefficient.

Significant p values are indicated in bold.

The comparison of the videos based on their sources revealed significant differences in content type (p < 0.001). Accordingly, real-world videos were more commonly uploaded by physicians and patients than health-related websites, whereas videos with text content were more commonly uploaded by health-related websites and physicians than patients. The median duration and VPI of the videos uploaded by health-related websites and patients were higher, albeit not significantly, than those uploaded by physicians (p = 0.621 and p = 0.486, respectively). The distribution of videos’ characteristics by video sources is given in Table 4.

| Variable | Category | Physicians (n = 26) | Health-related websites (n = 30) | Patients (n = 15) | p |
|---|---|---|---|---|---|
| Days since upload date† | | 802.5 [17.0–4771.0] | 791.0 [116.0–4639.0] | 1609.0 [263.0–4921.0] | 0.212 |
| Duration in seconds† | | 383.0 [74.0–3411.0] | 616.5 [73.0–2494.0] | 595.0 [61.0–1966.0] | 0.621 |
| Content type‡ | | | | | <0.001 |
| | Animation | 0 (0.0)a | 5 (16.7)b | 0 (0.0)a,b | |
| | Real-world | 18 (69.2)a | 10 (33.3)b | 15 (100.0)c | |
| | Text | 8 (30.8)a | 15 (50.0)a | 0 (0.0)b | |
| Country of origin‡ | | | | | 0.484 |
| | United States of America | 6 (23.1) | 10 (33.3) | 8 (53.3) | |
| | India | 6 (23.1) | 5 (16.7) | 1 (6.7) | |
| | Canada | 0 (0.0) | 2 (6.7) | 1 (6.7) | |
| | Others | 5 (19.2) | 6 (20.0) | 1 (6.7) | |
| | Unknown | 9 (34.6) | 7 (23.3) | 4 (26.7) | |
| View count† | | 769.5 [2.0–63366.0] | 772.5 [32.0–40626.0] | 6018.0 [61.0–36690.0] | 0.053 |
| Likes‡ | | 20 (76.9) | 28 (93.3) | 13 (86.7) | 0.228 |
| Number of likes† | | 23.5 [2.0–309.0] | 24.0 [1.0–324.0] | 55.0 [3.0–290.0] | 0.327 |
| Dislikes‡ | | 7 (26.9) | 7 (23.3) | 7 (46.7) | 0.251 |
| Number of dislikes† | | 3.0 [2.0–25.0] | 7.0 [2.0–14.0] | 6.0 [4.0–17.0] | 0.404 |
| Comments‡ | | 15 (57.7) | 16 (53.3) | 12 (80.0) | 0.210 |
| Number of comments† | | 6.0 [1.0–46.0] | 3.5 [1.0–129.0] | 11.5 [2.0–114.0] | 0.277 |
| VPI† | | 0.6 [0.0–48.5] | 1.3 [0.0–21.9] | 1.3 [0.0–25.9] | 0.486 |

There were significant differences between the reliability and quality scores of the videos categorized according to their sources (p < 0.05). Accordingly, the DISCERN-reliability, DISCERN-quality, DISCERN-total, JAMA, and GQS scores in the videos uploaded by physicians and health-related websites were significantly higher than those uploaded by the patients (p < 0.001). There was no significant difference between the reliability and quality scores of those videos uploaded by physicians and health-related websites (p > 0.05 for all cases). The rate of low and very low-quality videos based on DISCERN-quality and DISCERN-total scores uploaded by patients was significantly higher than those uploaded by physicians and health-related websites (p = 0.006 and p = 0.008, respectively). Similarly, the rate of videos of inadequate and low quality based on JAMA and GQS scores uploaded by patients was significantly higher than those uploaded by physicians and health-related websites (p < 0.001 and p = 0.007, respectively). The reliability and quality scores of the videos categorized according to their sources are detailed in Table 5.

| Variable | Category | Physicians (n = 26) | Health-related websites (n = 30) | Patients (n = 15) | p |
|---|---|---|---|---|---|
| Part 1: DISCERN-reliability† | | 18.0 [14.0–40.0] | 21.0 [12.0–40.0] | 12.0 [8.0–19.0] | **<0.001** |
| Part 2: DISCERN-treatment† | | 16.0 [9.0–35.0] | 17.0 [7.0–35.0] | 13.0 [7.0–23.0] | 0.165 |
| Part 3: DISCERN-quality† | | 3.0 [1.0–5.0] | 3.0 [1.0–5.0] | 1.0 [1.0–3.0] | **<0.001** |
| Reliability categories based on DISCERN-quality‡ | | | | | **0.006** |
| | Poor (score < 3) | 11 (42.3)a | 13 (43.3)a | 14 (93.3)b | |
| | Moderate (score 3) | 11 (42.3)a | 8 (26.7)a,b | 1 (6.7)b | |
| | High (score > 3) | 4 (15.4)a,b | 9 (30.0)b | 0 (0.0)a | |
| DISCERN-total† | | 34.0 [23.0–75.0] | 38.0 [20.0–75.0] | 27.0 [15.0–42.0] | **<0.001** |
| Categories for DISCERN-total‡ | | | | | **0.008** |
| | Very poor (15–27) | 3 (11.5)a | 4 (13.3)a | 10 (66.7)b | |
| | Poor (28–38) | 13 (50.0)a | 12 (40.0)a | 4 (26.7)a | |
| | Fair/medium (39–50) | 8 (30.8)a | 8 (26.7)a | 1 (6.7)a | |
| | Good (51–62) | 1 (3.8)a | 3 (10.0)a | 0 (0.0)a | |
| | Excellent (63–75) | 1 (3.8)a | 3 (10.0)a | 0 (0.0)a | |
| JAMA source† | | 2.0 [1.0–4.0] | 2.0 [1.0–5.0] | 1.0 [1.0–2.0] | **<0.001** |
| JAMA quality/content categories‡ | | | | | **<0.001** |
| | Insufficient (scores 0 and 1) | 4 (15.4)a | 6 (20.0)a | 14 (93.3)b | |
| | Partially sufficient (scores 2 and 3) | 20 (76.9)a | 17 (56.7)a | 1 (6.7)b | |
| | Completely sufficient (score 4) | 2 (7.7)a,b | 7 (23.3)b | 0 (0.0)a | |
| GQS† | | 3.0 [2.0–5.0] | 3.0 [1.0–5.0] | 1.0 [1.0–3.0] | **<0.001** |
| GQS categories‡ | | | | | **0.007** |
| | Low (scores 1 and 2) | 10 (38.5)a | 13 (43.3)a | 14 (93.3)b | |
| | Intermediate (score 3) | 10 (38.5)a | 10 (33.3)a | 1 (6.7)b | |
| | High quality (scores 4 and 5) | 6 (23.1)a | 7 (23.3)a | 0 (0.0)b | |

Notes:

JAMA, Journal of the American Medical Association benchmark criteria; GQS, Global Quality Scale.

Significant p values are indicated in bold.

Discussion

Our study revealed that YouTube videos on PDPH uploaded by physicians or health-related websites were more prevalent and had significantly higher content adequacy, reliability, and quality than those uploaded by patients. The DISCERN, JAMA, and GQS scores were significantly correlated with one another and with video duration, whereas view, like, dislike, and comment counts were not correlated with any of these scores.

The role of social media in disseminating headache-related information is increasing. However, there are a limited number of studies that assessed YouTube videos on headaches, such as migraine or cluster headaches (Chaudhry et al., 2022; Do et al., 2023; Goadsby et al., 2023; Gupta et al., 2023; Reina-Varona et al., 2022; Saffi et al., 2020). In one of these studies, Chaudhry et al. (2022) analyzed 134 YouTube videos on cluster headaches and found that almost half of the videos were of low quality according to the GQS scores, and nearly three-quarters of the videos were of low quality according to the DISCERN scores. The findings of our study, the first to investigate the reliability and quality of YouTube videos on PDPH, are consistent with the findings of Chaudhry et al. (2022). These findings raise concerns about the potential risks faced by patients who rely on YouTube for medical information and point to the need to improve the quality and reliability of even content provided by healthcare providers (Altunisik & Firat, 2022; Baker et al., 2021; Mohile et al., 2023). It is reported in the literature that YouTube videos about medical topics are most commonly created by physicians (Erkin, Hanci & Ozduran, 2023; Saffi et al., 2020). Gupta et al. (2023) reported that nearly two-thirds of the videos on migraine were published by physicians, hospitals, and healthcare providers. Another study reported that videos published by healthcare professionals/institutions and videos featuring personal healthcare experiences were more frequent than others (Chaudhry et al., 2022). Saffi et al. (2020) reported that only about one-sixth of the videos (17%) had been published by healthcare professionals/institutions and universities. In comparison, we found that almost four-fifths of the videos (78.9%) were uploaded by health-related websites and physicians.

Several studies assessed videos’ content adequacy, reliability, and quality by their sources (Arslan, Dinc & Arslan, 2023; Gupta et al., 2023; Ng et al., 2021; Onder & Zengin, 2021). In one of these studies, where patients were not specified as a separate source, no significant difference was found between the GQS and DISCERN-reliability scores of YouTube videos categorized according to their sources (Gupta et al., 2023). Other studies reported that the YouTube videos on diseases uploaded by healthcare professionals had better quality than those uploaded by different sources (Ng et al., 2021; Onder & Zengin, 2021). Similarly, we found that the videos uploaded by physicians and health-related websites had higher content adequacy, reliability, and quality scores than those uploaded by patients. These findings underscore the importance of considering the source when evaluating the credibility of online medical information (Bayram & Pinar, 2023). On the other hand, the discrepancies between the findings of the studies in question may be attributed to the fact that primarily GQS, which does not focus on the information itself, has been used to rate the videos’ quality and content adequacy along with differences in the technical characteristics of the videos and the characteristics of medical topics explored, such as popularity (Chaudhry et al., 2022).

The correlations between the descriptive characteristics and the reliability and quality scores of the videos have been investigated in various studies. Accordingly, most studies reported that higher view and like counts were significantly associated with higher reliability and quality of the videos, while some studies reported negative correlations between the audience engagement parameters and DISCERN and GQS scores (Duran & Kizilkan, 2021; Erkin, Hanci & Ozduran, 2023). In comparison, we did not find significant correlations between these parameters. Then again, the sources of the videos may impact their descriptive characteristics. As a matter of fact, YouTube videos about migraine uploaded by “others” had higher VPI values than those uploaded by other subcategories (Gupta et al., 2023). These higher VPI values were attributed to higher view counts, reflecting the content’s straightforwardness, greater ease of understanding, and public relatability.

In contrast, even though we found strong correlations between the content adequacy, reliability, and quality scores of the videos categorized according to their sources, we did not find any significant difference between their descriptive characteristics.

We propose implementing a quality labeling system for medical education videos on YouTube. Videos should be clearly labeled as either ‘professional medical education’ or ‘patient experience’ content, with appropriate disclaimers for non-professional content. This labeling would help viewers make informed decisions about the educational value of the content they consume while acknowledging the different but complementary roles of professional and experiential content in patient education.

Based on our findings, we propose several practical recommendations for enhancing the quality of PDPH-related content on social media platforms. First, developing a standardized quality assessment tool specifically designed for medical procedure videos could help content creators maintain consistent educational standards. This tool should incorporate elements such as evidence-based practice guidelines, clear presentation of provider credentials, explicit citation of scientific sources, and comprehensive coverage of both conservative and interventional management approaches.

While our analysis encompasses various content sources, we acknowledge the inherent differences in purpose and perspective between professional and patient-generated content. The inclusion of diverse sources reflects the actual information environment patients encounter, though future studies might benefit from developing specialized evaluation metrics for experiential content.

The primary limitation of our study was its cross-sectional design, featuring the YouTube videos on PDPH published in English only. The fact that the PDPH videos published only on YouTube were included in the study may be deemed another limitation.

Another important limitation is that our study did not specifically assess videos’ adherence to evidence-based practice guidelines. While we evaluated content quality through established tools like DISCERN, JAMA, and GQS scores, systematic grading of adherence to current clinical practice guidelines posed significant methodological challenges, particularly given the diverse nature of content creators and the difficulty in verifying medical credentials on social media platforms. Future research should focus on developing and validating specialized assessment tools for assessing adherence to clinical practice guidelines in medical education videos, potentially incorporating standardized criteria put forward by major anesthesiology societies.

It is also important to acknowledge that video engagement metrics (views, likes, and comments) may be influenced by factors beyond content quality and reliability. Healthcare provider characteristics, including gender, presentation style, and professional credentials, could potentially affect viewer engagement patterns. Additionally, technical aspects such as video production quality, presentation duration, and posting time may impact visibility and engagement. Furthermore, YouTube’s algorithm-driven content promotion and varying regional accessibility patterns might affect video reach independently of content quality. While our study focused primarily on content quality assessment, these potential confounding factors should be considered when interpreting engagement metrics as indicators of content impact. Future research might benefit from controlling these variables to better isolate the relationship between content quality and viewer engagement.

Conclusions

Our study sheds light on the reliability and quality of the YouTube videos on PDPH. Our finding that the YouTube videos on PDPH uploaded by physicians or health-related websites were more prevalent and had higher content adequacy, reliability, and quality scores than those uploaded by patients underscores the importance of considering the source of the video when assessing the credibility of the medical information addressed in the videos.