Challenges and opportunities for Arabic question-answering systems: current techniques and future directions

- Published

- Accepted

- Received

- Academic Editor

- José Alberto Benítez-Andrades

- Subject Areas

- Artificial Intelligence, Natural Language and Speech, Text Mining, Sentiment Analysis, Neural Networks

- Keywords

- Arabic question answering, Arabic question answering dataset, Transformer models, Information retrieval, Answer extraction

- Copyright

- © 2023 Alrayzah et al.

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2023. Challenges and opportunities for Arabic question-answering systems: current techniques and future directions. PeerJ Computer Science 9:e1633 https://doi.org/10.7717/peerj-cs.1633

Abstract

Artificial intelligence-based question-answering (QA) systems can expedite the performance of various tasks. These systems either read passages and answer questions given in natural languages or if a question is given, they extract the most accurate answer from documents retrieved from the internet. Arabic is spoken by Arabs and Muslims and is located in the middle of the Arab world, which encompasses the Middle East and North Africa. It is difficult to use natural language processing techniques to process modern Arabic owing to the language’s complex morphology, orthographic ambiguity, regional variations in spoken Arabic, and limited linguistic and technological resources. Only a few Arabic QA experiments and systems have been designed on small datasets, some of which are yet to be made available. Although several reviews of Arabic QA studies have been conducted, the number of studies covered has been limited and recent trends have not been included. To the best of our knowledge, only two systematic reviews focused on Arabic QA have been published to date. One covered only 26 primary studies without considering recent techniques, while the other covered only nine studies conducted for Holy Qur’an QA systems. Here, the included studies were analyzed in terms of the datasets used, domains covered, types of Arabic questions asked, information retrieved, the mechanism used to extract answers, and the techniques used. Based on the results of the analysis, several limitations, concerns, and recommendations for future research were identified. Additionally, a novel taxonomy was developed to categorize the techniques used based on the domains and approaches of the QA system.

Introduction

In recent years, the capacity of computing systems to comprehend natural languages has increased and several computer-based programs, such as question-answering (QA) systems, allow individuals to expedite their work. A QA system is a subfield of information retrieval (IR) and is considered as an alternative to search engines. The objective of a QA system is to provide correct answers to inquiries submitted by users using their natural language. Conversely, search engines return related documents to extract relevant answers (Shaheen & Ezzeldin, 2014).

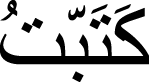

QA systems read passages and answer questions posed by users in their natural language. Given a question, they extract and rank possible answers from the most relevant documents retrieved from the internet to determine the most accurate answer. However, training these systems to understand Arabic text and answer questions posed in Arabic is a challenge. Arabic words often exhibit polysemy, meaning they can have multiple meanings depending on the context; for instance, consider the Arabic word “  ”, which can refer to “gold”, but can also mean “he went”, adding ambiguity to its usage in various contexts. This semantic richness poses difficulties for NLP models, necessitating advanced techniques to disambiguate and achieve accurate understanding. Over the years, numerous QA systems in various languages have been designed for usage. However, the development of Arabic QA systems has been impeded by the scarcity of research resources, tools, and linguistic challenges in Arabic (Darwish et al., 2021). Given these challenges, building QA systems that can understand and correctly respond to Arabic questions is highly difficult. As a result, very few studies on Arabic natural language processing (NLP) are available compared to those on English NLP. Therefore, computer-based analysis and comprehension of Arabic text have recently emerged as a burgeoning NLP research area.

”, which can refer to “gold”, but can also mean “he went”, adding ambiguity to its usage in various contexts. This semantic richness poses difficulties for NLP models, necessitating advanced techniques to disambiguate and achieve accurate understanding. Over the years, numerous QA systems in various languages have been designed for usage. However, the development of Arabic QA systems has been impeded by the scarcity of research resources, tools, and linguistic challenges in Arabic (Darwish et al., 2021). Given these challenges, building QA systems that can understand and correctly respond to Arabic questions is highly difficult. As a result, very few studies on Arabic natural language processing (NLP) are available compared to those on English NLP. Therefore, computer-based analysis and comprehension of Arabic text have recently emerged as a burgeoning NLP research area.

Previously, a few Arabic QA experiments and systems were designed using datasets that were small or some inaccessible. Despite several reviews being conducted on Arabic QA studies, either the number of studies covered was limited or they failed to encompass the latest trends in research (Alwaneen et al., 2021; Bakari, Bellot & Neji, 2016c; Bakari, Bellot & Neji, 2016b; Biltawi, Tedmori & Awajan, 2021; Ezzeldin & Shaheen, 2012; Mahdi, 2021; Ray & Shaalan, 2016; Utomo, Suryana & Azmi, 2020). To our knowledge, only two published systematic reviews were focused on Arabic QA systems. The first review covered only 26 primary studies without including recent techniques (Biltawi, Tedmori & Awajan, 2021), while the other covered only nine studies conducted for the Holy Qur’an QA systems (Utomo, Suryana & Azmi, 2020). The studies that were incorporated were examined based on the dataset employed, domain addressed, type of Arabic questions, IR and answer extraction mechanisms, and techniques utilized. Additionally, a new taxonomy was developed for the approaches used, which were categorized according to both the QA system domains and methods.

This systematic review is structured as follows. ‘Background’ provides a background on the challenges of the Arabic language, history of NLP, and approaches to Arabic QA tasks. ‘Related work’ presents an overview of previous research in this area. ‘Survey methodology’ provides a detailed description of the methods used to conduct this review. The findings are presented in ‘Results’. The limitations of this review are discussed in ‘Limitation’. Lastly, ‘Conclusions’ draws the conclusions of this study.

Background

History and approaches of QA systems in the Arabic world

Currently, almost everything is just a click away and progress is rapid in this world. A fundamental Google search engine command includes “what will today’s weather be like? You may need an umbrella; the temperature will be 8 °C (rain).” Artificial intelligence (AI) has taken great strides in recent years and is now an integral part of numerous aspects of our lives. Researchers on AI have improved research on NLP through their ongoing efforts. NLP was initially proposed to help computers better understand human languages. Some of the earliest applications of AI were in the field of NLP, such as machine translation (Khurana et al., 2022). An exponential improvement in the quality of AI technology, accompanied by the growing public knowledge and expectations of what AI can accomplish were observed in recent years (2010–2020).

According to Darwish et al. (2021), Arabic NLP and QA have especially undergone three distinct waves of development over their history. The fourth wave of development can be accelerated based on the approaches of NLP. Four of the approaches include rule-based (RB), machine learning (ML), deep learning (DL), and pre-trained modelling languages (PMLs), which are also known as transformer models based on DL.

In the 1980s, the world witnessed a significant breakthrough when Microsoft released MS-DOS 3.3 with Arabic language support. Additionally, Sakhr (Darwish et al., 2021) developed the first Arabic morphological analyzer in 1985, where examining the structure of Arabic text through morphological analysis was emphasized, and a majority of the research utilized RB methods. Moreover, Sakhr (Darwish et al., 2021) developed the first syntactic and semantic analyzer in 1992, followed by Arabic optical character recognition in 1995. Numerous commercial Arabic-to-English machine translation products and solutions were developed during that era (Khurana et al., 2022). The wave-processing Arabic language was based on the RB approach, where the handwritten rules were utilized to assist machines in comprehending sentences by structuring phrases. However, the technique did not enable a machine to grasp the meaning of a sentence, but it could recognize particular words or word combinations in specific patterns.

During 2000–2010, the second wave of Arabic NLP development occurred. Large-scale initiatives, funded by the United States government, were conducted to develop Arabic NLP tools for their dialects. These initiatives included the creation of machine translation technology, IR systems, and QA tasks (Darwish et al., 2021). A majority of the systems then developed employed ML, which was gaining immense popularity in the field of NLP. ML-based algorithms require significantly less linguistic information than RB-based systems, and are hence more efficient and accurate. However, acquiring requisite data requires some effort, particularly for Arabic datasets. During this period, numerous hybrid systems that successfully merged RB morphological analyzers and ML disambiguation were developed (Darwish et al., 2021). ML-based algorithms offered a more sophisticated method of interpreting ambiguity. Decision trees as examples of algorithms employed if-then regulations to derive the most accurate outcome, while probabilistic algorithms reinforced the decision of machines by indicating a degree of certainty (Johri et al., 2021).

In 2010, the third wave of development based on the DL approach was initiated (Alyafeai, AlShaibani & Ahmad, 2020). During this period, a noteworthy surge in the quantity of Arab researchers and postgraduate students interested in Arabic NLP was observed, which resulted in a corresponding rise in publications from the Arab world presented at top conferences. Additionally, two major independent advancements emerged during this period: DL and neural models and social media. NLP involved inherent ambiguity that could not be resolved entirely. The meaning of a word could depend on the context in which the word was used, making it challenging to create a definitive rule or decision tree that covered all possible meanings. Since DL did not necessitate the programmer to provide decision-making rules, but rather had an algorithm deduce the process of mapping an input to an output, it was an effective solution to the existing issue (Johri et al., 2021). A majority of the artificial neural networks (ANN), including recurrent neural networks (RNNs) and conventional neural networks (CNNs), were introduced for NLP. However, to train and test DL models, high-power hardware with vast processing speed was necessary. Further details are discussed in the upcoming sections.

NLP requires a large amount of labeled data for training models. One of the main challenges in NLP is obtaining sufficient labeled data, which are not always readily available, making it difficult to train transformer models (Johri et al., 2021). A method to overcome this hurdle is to label data explicitly, although the process may be time-consuming and expensive.

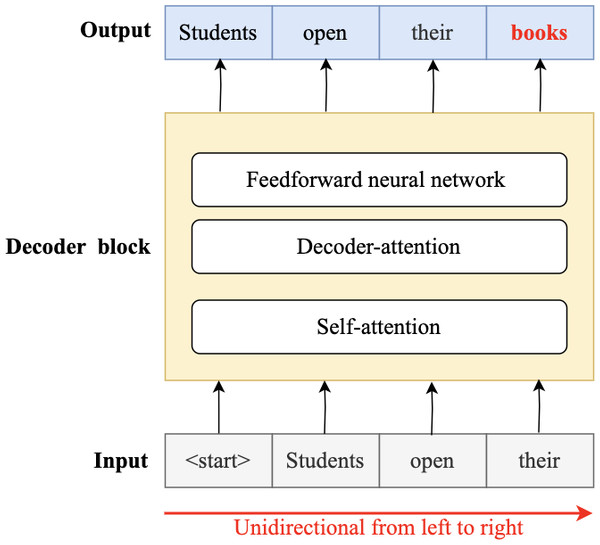

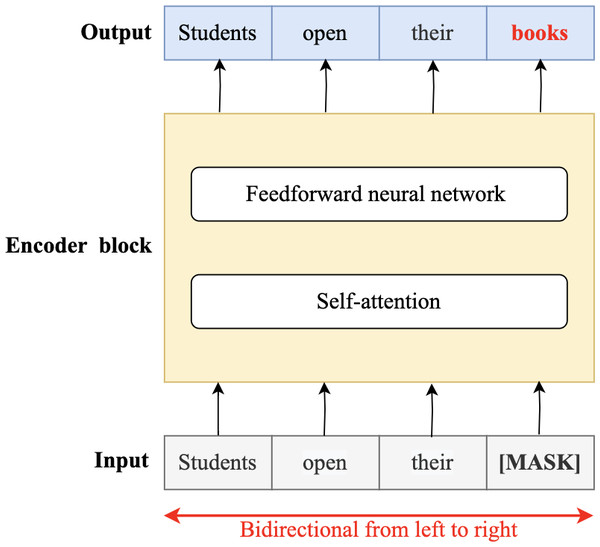

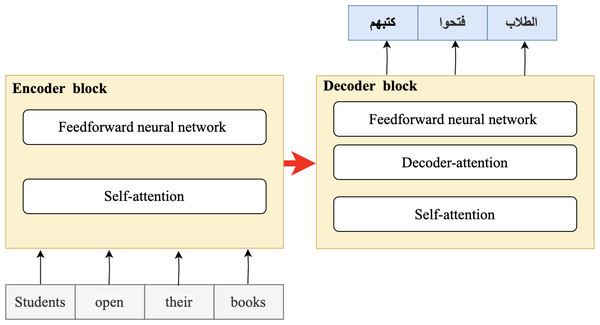

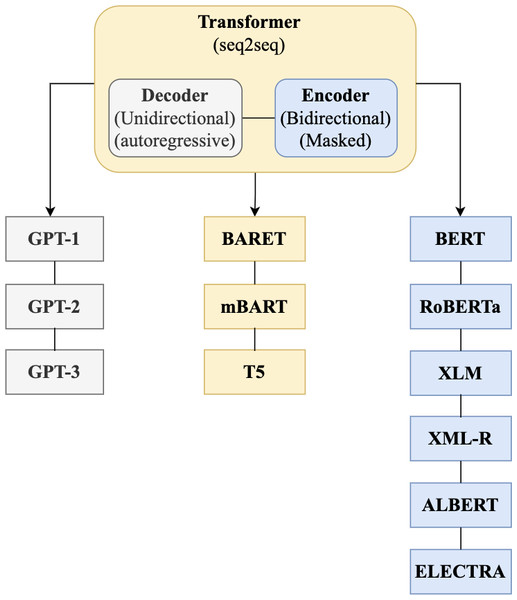

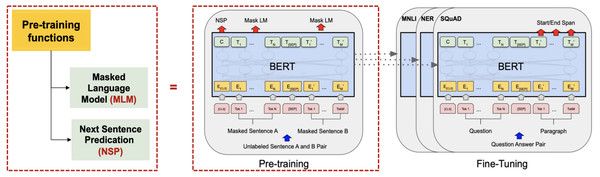

In 2018, the fourth wave of development started by the implementation of mBERT (Pires, Schlinger & Garrette, 2019b). A majority of the NLP tasks in this period was solved by pre-trained language models (PLMs), also known as transformer models. Transformer models are DL models employing an attention mechanism. A self-attention mechanism does not require recurrent architecture and can perform parallel processing (Zong, Xia & Zhang, 2021). It prevents the loss of relevant information from the extensive volume of texts processed by ANN models. Thus, the mechanism is an integral part of models that perform several NLP tasks including translation, QA, and sentiment analysis (Saidi, Jarray & Mansour, 2021). It allows dependencies to be processed regardless of their position in the input or output sequences, which is necessary for NLP because a transfer model searches an encoder for positions containing the most relevant information to generate a sentence. Thus, a transfer model is capable of “attending to” specific words when encoding an output because of the self-attention mechanism, which allows it to remember all the tokens in the input sequence (Vaswani et al., 2017). Table 1 provides a summary of approaches in NLP.

| RB | ML | DL (CNN/RNN) | DL (PLMs/Transformer) | |

|---|---|---|---|---|

| Year | (1985–2000) | (2000–2010) | (2010–2020) | (2018–Present) |

| Concept | Linguists write explicit rules such as the if-then rule. These human-made rules are followed and applied to store, sort, and manipulate data. | Humans extract features. Thereafter, machines learn the rules based on extracted features, such as decision-tree algorithms. | Machines learn the rules and features without considering contexts, such as ANN and CNN models. | Machines learn rules and features with self-attention mechanism to consider contexts, such as transformer models. |

| Pros | Rules are easy to understand. Requires limited data owing to limited applicable domain. | Use of fewer computational resources and less data. | Automatic representation of learning and permission to capture semantic. | Existence of self-attention mechanism and permission to consider context. Texts are processed in parallel. |

| Cons | Manual performance of task is time consuming and difficult to scale. When rules are not strictly followed, performance can suffer. | No semantic capturing, feature extraction is expensive, and no proper generalization for other tasks, such as text generation. | Sequential processing of texts. Requires substantial quantity of data and expensive computing power. | Requires substantial quantity of data and expensive computational resources. |

Challenges in Arabic NLP

Arabic is spoken by the Arabs and Muslims and is located in the middle of the Arab world, which encompasses the Middle East and North Africa. The Arabic language is one of the oldest and most widely spoken languages in the world, with over 420 million speakers across the globe (Darwish et al., 2021). The use of NLP techniques to process modern Arabic is difficult due to the complex morphology, orthographic ambiguity, regional variations while speaking, and limited linguistic and technological resources of the language (Alyafeai, AlShaibani & Ahmad, 2020; Darwish et al., 2021; Guellil et al., 2021).

Morphological richness

In Arabic, a single word can have multiple meanings; for instance, “  ” can mean either “liquid” or “beggar.” Further, a single word may be equivalent to an entire English phrase; for example, “

” can mean either “liquid” or “beggar.” Further, a single word may be equivalent to an entire English phrase; for example, “  ” means “they will spend it.” Emmett Knowlton (Atef et al., 2020) has stated that Arabic is regarded as one of the most difficult languages to master, with an average of 88 weeks or 2,200 h of instruction needed to attain proficiency in both spoken and written Arabic.

” means “they will spend it.” Emmett Knowlton (Atef et al., 2020) has stated that Arabic is regarded as one of the most difficult languages to master, with an average of 88 weeks or 2,200 h of instruction needed to attain proficiency in both spoken and written Arabic.

Orthographic ambiguity

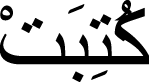

Written Arabic texts use optional diacritical marks to indicate details of phonology and vowels that are essential for distinguishing one word from another, resulting in orthographic ambiguity (Darwish et al., 2021). For example, the word “  ” is written without diacritics; however, it can be written as “

” is written without diacritics; however, it can be written as “  or I wrote,” “

or I wrote,” “  ” or she wrote,” and “

” or she wrote,” and “  or it is written by” with diacritics.

or it is written by” with diacritics.

Dialectal variations

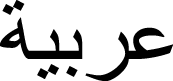

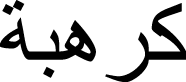

Arabic is not a singular language, but a group of linguistically related varieties, including modern standard Arabic (MSA), classical Arabic (CA), and Arabic dialects (AD) (Guellil et al., 2021; Malhas, 2023). MSA is preferred for official purposes and education, while other forms including AD are commonly spoken and are recently being used for written communication. AD encompasses various dialects, such as Egyptian, Gulf Arabic, or Moroccan Arabic, each with their own unique grammar and vocabulary that distinguishes them from MSA. For example, the word “car” in MSA, Egyptian, Gulf Arabic, and Moroccan Arabic is written as “  ,” “

,” “  ,” “

,” “  ,” and “

,” and “  ,” respectively (Darwish et al., 2021).

,” respectively (Darwish et al., 2021).

Resource poverty

Arabic has one of the lowest resources of data. Substantial quantity of Arabic data has to be collected and processed through several pre-processing steps before an input can be given to a machine for learning. The data utilized in RB methods necessitate lexicons and meticulously written rules, while those in ML and DL approaches require large and annotated corpora.

Unlike other languages, Arabic is not usually supported by various platforms. Miller (1995) created an English lexical database of words known as WordNet to store semantic relations between words. Thereafter, several other languages were incorporated except Arabic. WordNet research was supported by Princeton University to enhance English words and their relations. In 2006, the development of Arabic WordNet was initiated, and was improved and processed until 2016 by Elkateb et al. (2006).

In 2002, Sheffield University created a general architecture for text engineering, an NLP tool for several tasks such as information extraction (Cunningham, 2002). Initially, it did not support Arabic, but since 2010, Zaidi, Laskri & Abdelali (2010) endorsed it. Up to this date, Arabic is yet to be supported by models including those researched by Fan and Gardent (Fan et al., 2020), who built a model aimed at generating texts for 21 languages. Conversely, Arabic does not support multilingual abstract meaning representation-to-text generation models.

Arabic QA systems

NLP is a linguistic branch of AI concerning the ability of computing systems to comprehend natural languages and perform specific tasks (Prasad et al., 2019). Prominent research areas in NLP include QA, sentiment analysis, translation, and computer-based text generation. Computers employ various approaches, such as lexicon RB and ML approaches, to process, analyze, and comprehend natural languages. Transformer models form the basis of current NLP techniques that yield cutting-edge outcomes (Otter, Medina & Kalita, 2021; Al-Ayyoub et al., 2018).

With the advancement of technology and availability of ample quantity of online data, the ability to request information is increasingly important. The rapid expansion of online information is a crucial draw for several users who depend on search engines and other IR tools to find answers to their questions. When a user enters a request into a search engine, the engine scours the internet for relevant pages and returns a list of those with brief descriptions of the request (Calijorne Soares & Parreiras, 2020). Consequently, QA systems are crucial to the fields of IR and text processing because they facilitate the extraction of important information from a given text (Almotairi & Fkih, 2021). A QA system is one of the NLP tasks belonging to AI tasks. The field of a QA system may be regarded as a subset of natural language understanding (NLU), which is a subset of NLP. Automatic QA systems are created to respond to questions presented by individuals using natural languages. The users input questions in their own languages, extract crucial details from the provided data, and receive responses in their natural language format (Nassiri & Akhloufi, 2022).

The purpose of a QA system is to analyze questions or queries posed in natural languages by users and provide the most relevant answers to them (Nguyen & Tran, 2022). Hence, QA systems are considered as intelligent systems owing to their goal of providing precise answers to user questions posed in natural languages. Moreover, QA systems are vital for improving particular field environments such as education, health care, and research engines. They are more efficient in promptly providing correct answers to user questions (Almotairi & Fkih, 2021).

QA systems find applications in numerous domains, such as education, health care, research engines, and personal assistance, for various reasons (Calijorne Soares & Parreiras, 2020). Due to their versatility in various applications, QA systems are commonly utilized in the field of NLP (Almotairi & Fkih, 2021). They involve QA using natural languages. Several examples of such applications are as follows.

First, the main application of a QA system is in IR or web search (Calijorne Soares & Parreiras, 2020). QA systems automatically answer user questions in their natural languages and do not merely provide documents relevant to the questions; they also extract all relevant information from those documents and present a thorough response, similar to that a human would.

Second, QA systems are used in community QA (CQA) services (Adlouni et al., 2019), where users converse and share information through QA, such as Yahoo! answers, stack overflow, or Cheeg, aided by online knowledge exchange. CQA services are used in the field of education to help learners interact with experts.

Third, QA systems are used commercially as customer support to reduce the workload on customer service teams and allow them to concentrate on important issues. An example of a QA system applied to customer support is the chatbot used by Amazon (Jiang, 2019).

Fourth, QA systems are used by search engines such as MSN, Google, Yahoo!, and Bing Search (Al-Shenak, Nahar & Halawani, 2019). The number of displayed questions grows when either of the questions is clicked, and the revised list more closely resembles the clicked question.

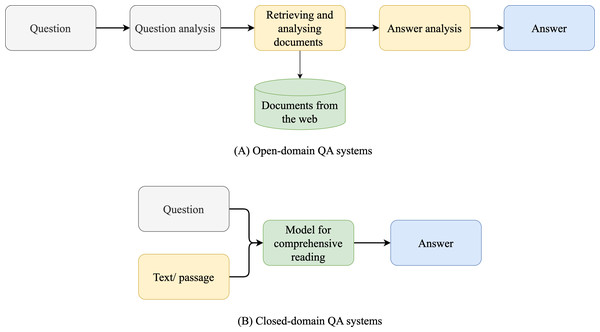

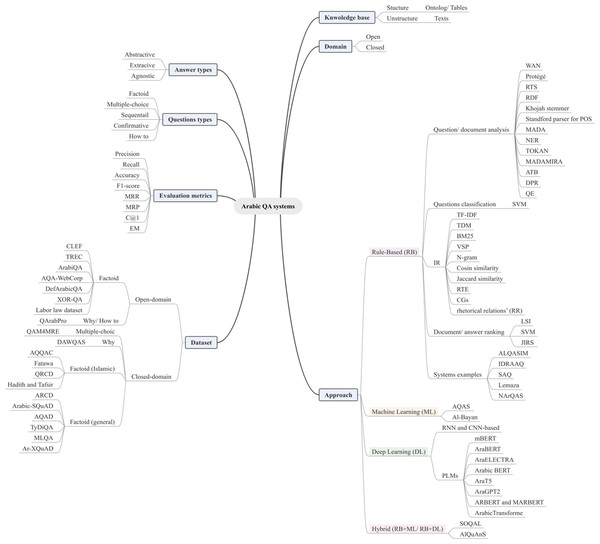

Figure 1: Open and closed-domain QA systems.

Lastly, a QA system is used for real time QA (Elfadil, Jarajreh & Algarni, 2021). Even the most complicated questions have to be answered within a few seconds, as users would face an unpleasant experience of spending hours in front of a computer awaiting answers. Therefore, the development of QA systems providing end products in real time is highly necessary. They are required in every aspect involving assistance from computers.

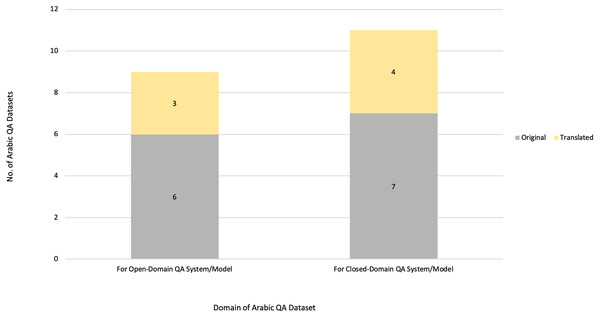

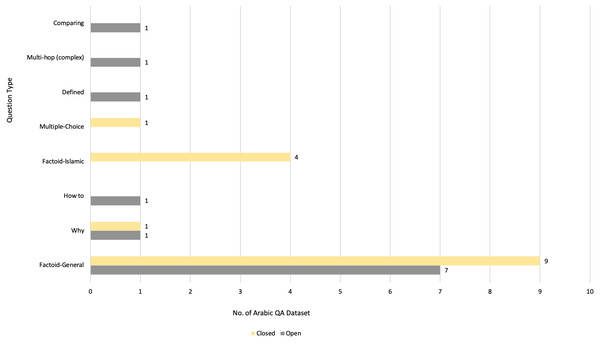

QA systems are categorized into two: reading comprehension (RC) and IR systems. RC systems are capable of reading and understanding text passages and answering related questions (Zhu et al., 2021). RC is used as a metric to evaluate the ability of a computing system to understand human language, and has several practical applications. RC systems are given specific passages as inputs, while IR systems must extract answers from an extensive collection of web documents without prior knowledge of their location (Zhu et al., 2021). Thus, QA systems are of two main types: open-domain and close-domain, as shown in Fig. 1. Open-domain QA systems access several documents on the internet as input and retrieve answers from them (Alsubhi, Jamal & Alhothali, 2021). In contrast, close-domain QA systems retrieve answers from specific passages by taking both the question and passage as input.

Related work

Thus far, several review papers have discussed Arabic QA systems, resources, tools, and techniques, while focusing on the RB approach. A summary of these review papers is presented in Table 2, with data categorization based on the review type, publication year, years covered by the studies, number of both Arabic QA studies and datasets included, and whether Arabic QA taxonomy, DL models, and evaluation methods are present. Additionally, limitations of those studies are included. Moreover, a comparison between our research and existing publications is also included in Table 2.

| Ref. | Review type | Purpose | No. of studies | Years of studies | Arabic QA taxonomy? | No. of Dataset | DL approach/ models? | Evaluation metrices? | Limitations |

|---|---|---|---|---|---|---|---|---|---|

| Ezzeldin & Shaheen (2012) | Survey | -Reviewing challenges of Arabic language in QA task. -Reviewing three main tasks of QA: question analysis, passage retrieval and answer extraction. - Summarizing main Arabic QA tools. |

N/A | N/A | N/A | N/A | N/A | N/A | Paper did not present studies for Arabic QA task |

| Ray & Shaalan (2016) | Review | -Presenting Arabic QA techniques, tools, and computational linguistic resources. -Reviewing challenges of Arabic language in QA task. -Reviewing three main tasks of QA: question analysis, passage retrieval and answer extraction. |

N/A | N/A | N/A | N/A | N/A | N/A | Paper did not present studies for Arabic QA task |

| Bakari, Bellot & Neji (2016a) | Literature Review | -Review of the main approaches and experimentations in Arabic QA systems. -Comparing existing Arabic QA systems. |

11 | 1993–2014 | N/A | N/A | N/A | N/A | Studies till 2014 |

| Bakari, Bellot & Neji (2016b) | Review | -Reviewing the main approaches of Arabic QA. -Discussing the different proposed systems with a classification. |

N/A | N/A | N/A | N/A | N/A | N/A | Paper did not present studies for Arabic QA task |

| Utomo, Suryana & Azmi (2020) | SLR | -Disusing research issues, morphology analysis, question classification, and ontology resources of Holy Qur’an QA systems. -Reviewing and comparing existing studies for Holy Qur’an QA systems. |

9 | 2008–2016 | N/A | N/A | N/A | N/A | Review paper only for studies built for Holy Qur’an QA task. Also, Paper did not present any review about DL approach and recent models. |

| Alwaneen et al. (2021) | Survey | -Reviewing challenges of Arabic language in QA task. -Presenting Arabic QA techniques, tools, and evaluation metrics. -Reviewing and comparing existing Arabic QA systems. |

22 | 2002–2020 | N/A | N/A | N/A | Yes | Paper did not present any review about DL approach and recent models. |

| Biltawi, Tedmori & Awajan (2021) | SLR | -Reviewing challenges of Arabic language in QA task. -Presenting Arabic QA techniques, tools, and evaluation metrics. -Presenting some of Arabic QA datasets. -Reviewing and comparing existing Arabic QA systems. |

26 | 2002–2020 | N/A | 6 | N/A | Yes | Paper did not present any review about DL approach and recent models. |

| Mahdi (2021) | Survey | -Reviewing and comparing existing studies of QA systems which are based on BERT. | 9 | 2019–2020 | N/A | N/A | N/A | N/A | Review paper only for studies built based on BERT model for QA task. Also, Paper did not present any review about DL approach and recent models. |

| Present study (2023) | SLR | -Reviewing challenges of Arabic language in QA task. -Reviewing approaches of Arabic QA systems. -Presenting some of Arabic QA datasets. -Reviewing and comparing existing Arabic QA systems. -Including the first Arabic QA taxonomy for techniques, domains, approaches, datasets, and components of existing systems. -Discussing recent trends of Arabic QA systems. -Presenting limitations of existing Arabic QA systems. -Proposing future directions for research in Arabic QA. |

40 | 2013–2022 | Yes | 21 | Yes | Yes | There is no Arabic QA studies founded in 2023. |

Notes:

Ref, reference; N/A, not available; SLR, systematic literature review.

Data presented in Table 2 suggest that only a few, approximately nine (Utomo, Suryana & Azmi, 2020) to 26 (Biltawi, Tedmori & Awajan, 2021) Arabic QA studies were conducted by researchers because a majority of these surveys and review papers focused on Arabic QA systems developed using the RB approach, particularly for open-domain purposes, which was most prevalent in general domain for Arabic QA frameworks. Biltawi, Tedmori & Awajan (2021) conducted a systematic literature review (SLR), which encompassed the most extensive collection of Arabic QA studies, spanning several recent years. However, Biltawi, Tedmori & Awajan (2021) only reviewed the contributions of those Arabic QA studies without providing adequate details on the recent DL approach and models. Moreover, a majority of the survey and review papers did not reviewing existing Arabic QA datasets. Biltawi, Tedmori & Awajan (2021) covered six Arabic QA datasets, which was not sufficient for detailed analyses. A majority of the survey and review papers listed in Table 2 do not focus on DL as a recent approach to Arabic QA systems. Additionally, no study presented Arabic QA taxonomy that would assist researchers in the field of Arabic QA systems. Conversely, no studies have focused on the challenges of current Arabic QA systems in recent years nor have proposed a solution to improve the accuracy of existing Arabic QA systems and models.

This research article provides a SLR of existing Arabic QA studies to address the restrictions of previous reviews on Arabic QA tasks. To improve search results, a large number of bibliographic databases were used along with a broader range of keywords that consider various naming conventions of QA tasks. Therefore, 40 primary studies related to Arabic QA were selected. Moreover, the selected studies in the QA-proposed domain, their strengths and weaknesses, proposed system/model approaches, datasets used, methodologies, and evaluation results were thoroughly analyzed. The analysis results assisted in the identification of issues and limitations existing in the current Arabic QA literature, and potential avenues for future research were identified to motivate participation of researchers in Arabic QA tasks.

Survey methodology

SLR is a research process that gathers, evaluates, and analyzes important published papers in order to precisely answer research-related questions concerning a certain issue. The major objective of an SLR is to provide a method for searching present literature that is repeatable, unbiased, and exhaustive. The SLR conducted for this study followed the guidelines developed by Kitchenham & Charters (2007). The guidelines can be summarized into three main phases: review planning, review conduction, and writing/publishing the review findings. Review planning process involves defining the reason for the review, establishing research questions, and developing the review methodology. A review protocol is a document that specifies all the phases in the SLR process, from choosing primary studies to collecting and synthesizing data from those studies. The second phase involves review conduction, where the steps outlined in the protocol are followed. The final phase includes writing and publishing the review results, validating the findings, and ensuring accurate and well-supported results.

Objective

In ‘Survey methodology’, existing Arabic QA-related review papers requiring an adequate coverage on Arabic QA tasks have been discussed. This study aims to systematically review existing studies on several Arabic QA systems/models to identify gaps and areas for future research.

Research questions

Multiple factors can affect the performance and robustness of Arabic QA systems. These factors include utilized datasets, information retrievers, questions and documents analyses, answer extracting algorithms, approaches used, and evaluation methods used to assess the suggested solutions (Calijorne Soares & Parreiras, 2020; Cambazoglu et al., 2020; Elfadil, Jarajreh & Algarni, 2021).

Considering the aforementioned factors, research questions (RQs) were designed, which are as follows:

RQ1: Which are the Arabic QA studies conducted to date?

RQ2: Which are the Arabic QA datasets used to evaluate the Arabic QA systems and models proposed by the researchers, and which datasets are available to the public?

RQ3: Which evaluation criteria were utilized to assess the Arabic QA systems and models?

RQ4: What are the current research methods and status of Arabic QA models and systems?

RQ5: What are the limitations of Arabic QA studies?

RQ6: How to enhance the Arabic QA systems and models?

Search procedure

Study resources

The automated Google Scholar academic search engine was used to search for relevant and published studies. The publication period of the primary studies was set between 2013 and 2022 to include recent Arabic QA studies within the last decade.

Search keywords

Since different Arabic QA techniques and resources use a variety of terminologies, generic and common search terms were used to obtain a broad overview of the field. Arabic QA studies were collected primarily through Google Scholar by using different search keywords, such as “question-answering systems,” “question answering,” “question answering models,” “Arabic question answering systems,” “Arabic QA models,” “Arabic question answering tasks,” “Arabic question answering dataset,” “Arabic QA dataset,” “question answering dataset,” and “question-answering.”

Search string

To represent all possible root-word endings, a wildcard (*) was used; for example, answer* represents both “answering” and “answers.” Furthermore, Boolean operators (AND and OR) were used to account for synonyms, spelling varieties, and naming inconsistencies. In some cases, synonymous terms in Google Scholar were separated into search strings because using them with the OR operator yielded hundreds of results that were irrelevant. The following search strings were used for automatic searches in Google Scholar.

(“question answering models” OR “question answering systems”) AND (“Arabic”)

(“question answer*”) AND (“Arabic”)

(“QA”) AND (“Arabic”)

(“Question-Answer*”) AND (“Arabic”)

(“question answering dataset”) AND (“Arabic”)

(“QA dataset”) AND (“Arabic”)

(“QA dataset”) AND (“question answer*”) OR (“Arabic”)

(“question answering dataset”) OR (“question answer*”) AND (“Arabic”)

Study selection

The collected studies had to meet specific selection criteria before being considered in this review. A set of inclusion and exclusion criteria was established to ascertain the eligibility of the studies, as shown in Table 3. The studies that met the inclusion criteria were part of the review and analysis, while those that met at least one of the exclusion criteria was excluded.

| Inclusion criteria |

|---|

| Study is written in English and published sometime between 2013 and 2022. Language of the dataset is MSA or Arabic. Type of the dataset used in the study is factoid QA dataset, starting with a wh- question. Study handles Arabic QA tasks. Research findings are made available on the website of an academic institution or through publication in a journal or conference by undergoing peer-review. Additionally, the results are included in doctoral and master’s theses and dissertations. |

| Exclusion criteria |

| Proposal studies that are not empirically tested. Dataset is in English, non-Arabic, Arabic dialectics, or community QA, with classifying questions, generated questions, Yes/No QA, Multiple-Choice QA. Study handles chatbot as Arabic QA. |

Search process

A preliminary search on Google Scholar was conducted to collect publications within a search period between 2013 and 2022 to ensure the coverage of the most significant primary research and include early works in Arabic QA. During this stage, relevant primary studies were identified by conducting a literature survey using pre-determined criteria of inclusion and exclusion and strings of the search. The search procedure began with a title screening, and publications with titles meeting one of the selection criteria were collected. When the study title contained the phrase “question-answer” or one of its synonyms, in addition to the keyword “Arabic,” the study was included. However, when more than one search string was used, some duplicate publications were found. Hence, duplicates were removed from the list of candidate research after screening their titles. Thereafter, the abstracts and keywords of the published studies were screened to meet the inclusion and exclusion criteria. Next, the full text of the collected publications were scanned when the abstract and keywords were not highly conclusive. The total number of results provided by Google Scholar is shown in Fig. 2, including the scanning step results obtained using the preferred reporting items for systematic reviews and meta-analyses (PRISMA) technique.

Figure 2: Flow of search and selection process using PRISMA technique.

Data extraction

The instructions of Kitchenham & Charters (2007) were applied for data extraction. Information including details on publisher, authors, publication date, and publication type was extracted from the primary studies that were included in the analyses. Additionally, data relevant for answering research questions were collected, such as domains, methodologies, text and question analysis, ways of extracting answers, datasets, and reported results. The gathered data were organized in a table for analyses.

Results

The findings of the SLR conducted for this study are discussed in this section, with an overview of the included studies. Analytical results of the data extracted from the publications included in this SLR are also discussed to answer the pre-determined research questions.

Summary of the included studies

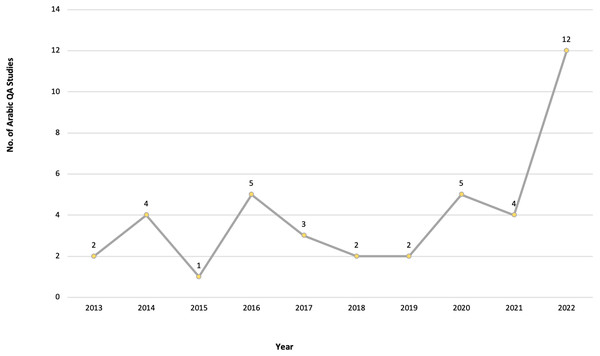

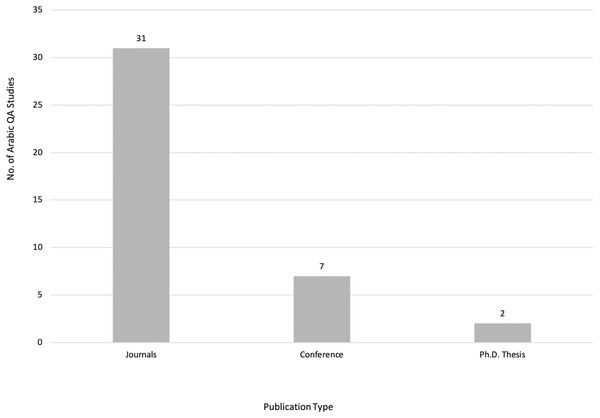

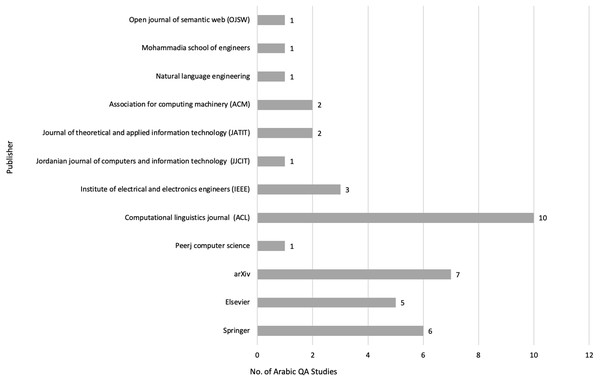

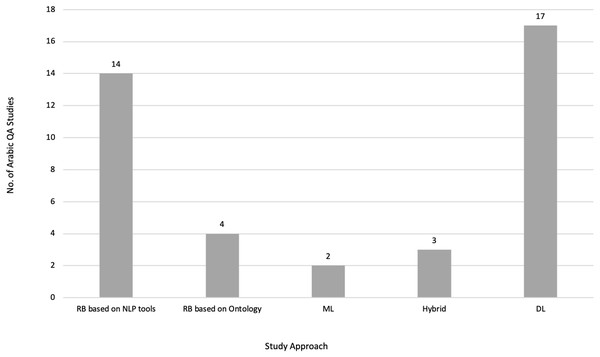

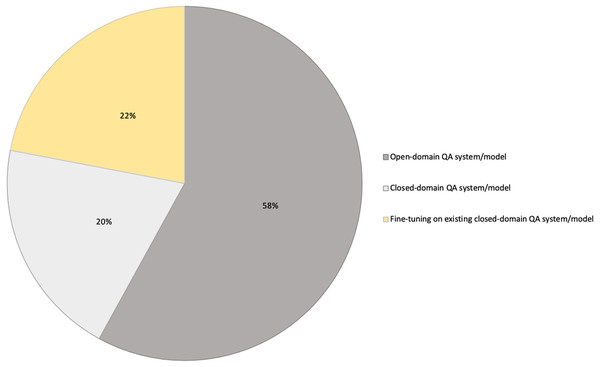

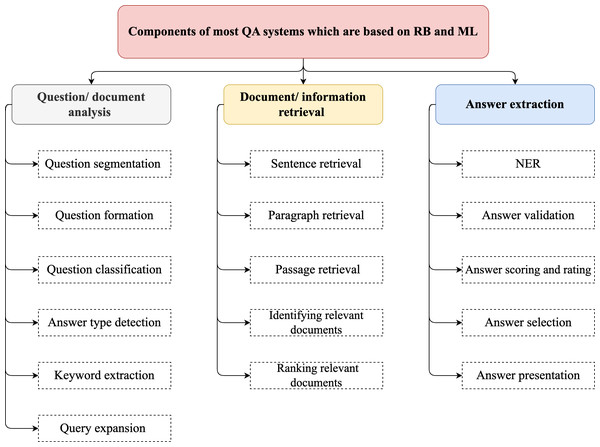

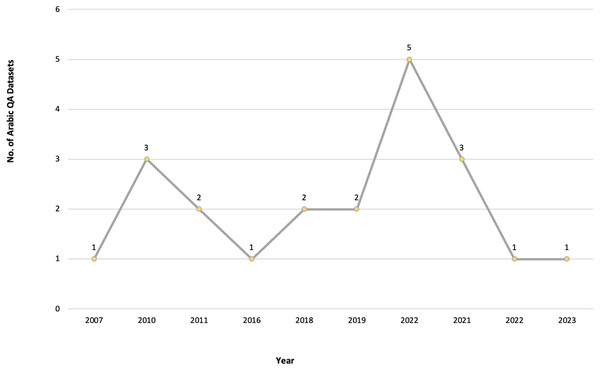

After meeting the inclusion and exclusion criteria, 40 primary studies were selected for this review, as illustrated in Figs. 3, 4 and 5, which depict the distribution of the included studies based on the publication year, type, and publisher, respectively. The primary studies included were published between 2013 and 2022, with a majority published in 2022. The studies were published by a variety of publishers, the most common being computational linguistics journal (ACL) and arXiv that offer free digital archive of papers in NLP and computational linguistics from conferences and journals. A majority of the publications were journal articles, followed by conference proceedings.

Figure 3: Number of Arabic QA studies conducted between 2013 and 2022.

Figure 4: Distribution of Arabic QA studies based on publication type.

Figure 5: Distribution of Arabic QA studies based on publishers.

RQ1: Which are the Arabic QA studies conducted to date?

Based on the inclusion and exclusion criteria for selecting primary Arabic QA studies, the selected studies are presented and analyzed in this section to answer RQ1.

Ezzeldin, Kholief & El-Sonbaty (2013) suggested ALQASIM as an Arabic QA system for analyzing documents related to posed questions based on several NLP tools. They used morphological analysis and disambiguation for Arabic (MADA), TOKAN morphological analyzer, part-of-speech (POS), Arabic WordNet (AWN), and stop words removal techniques. MADA extracts morphological and contextual information from unprocessed Arabic texts, while the process of POS involves assigning a word to a specific part of speech, which includes nouns, punctuation, verbs, adjectives, adverbs, conjunctions, pronouns, or prepositions. AWN is a lexical database that mirrors the developmental process of Princeton University developed English WordNet and Euro WordNet, but for Arabic. It contains various lexical and semantic relations between word senses. For extracting the correct answer, the distance between the question and answer choice locations was subtracted from the sum of the scores at each location, and the resulting score was used to determine the location of the correct answer. This ensured that the answer locations with the highest scores were those that were closest to the question location.

Fareed, Mousa & Elsisi (2013) proposed a retrieval Arabic QA system based on three modules. The first module involved question analysis using AWN, which built “synonym, definition, subtype, and super type” relations for each keyword in the question. The second module was a passage retrieval module that used the Google search engine, java IR system (JIRS), and Khojah stemmer. Google was used to acquire documents related to the question, while JIRS was used to re-rank the Google search results on a structural basis to improve their ranking. The Khojah stemmer, an Arabic stemming algorithm developed by Shereen Khoja (Fareed, Mousa & Elsisi, 2013), is one of the most popular light stemmers for Arabic language. It was use to strip words of their longest prefix and suffix. The words were then checked against verbal and noun patterns to find a match. The third module involved answer extraction based on the type of the question.

Abdelnasser et al. (2014) suggested the use of ML algorithms and NLP tools for building a QA system on Islamic data, especially for the Holy Qur’an to explain its verses. They exploited an ML algorithm known as support vector machine (SVM) to classify questions after analyzing them using an Arabic NLP toolkit or MADA. MADA extracts morphological and contextual information from unprocessed Arabic texts, which are then used for more accurate POS tagging, lemmatization, diacritization, and stemming.

Kamal, Azim & Mahmoud (2014) applied for Fatwa in Islamic data by proposing the use of latent semantic indexing (LSI) for indexing and retrieving documents automatically. LSI uses singular value decomposition (SVD) as a mathematical method to identify patterns in relationships between words and concepts in raw texts. For ranking candidate answers, a term document matrix (TDM) was used by applying term frequency-inverse dense frequency (TF-IDF) algorithm for each word in the query using a math equation. The algorithm used the frequency of words to determine how relevant those words were to a given document. This was done by observing the appearance frequency of words in the document compared to their appearance frequency in other documents.

Sadek (2014) enhanced Arabic “why” and “how to” questions by applying text mining methods. The author combined pattern recognizer model with text parser model to form a single system. The pattern recognizer model used linguistic patterns to identify relationships present in sentences, with approximately 900 patterns available in the system. The model was capable of extracting information related to cause–effect and method–effect. Thereafter, the text parser model was built based on the framework of rhetorical structure theory (RST) of text structure, which was used to assist in the creation of computational text plans. The text structure in RST is based on a hierarchy of small patterns, called schemas. The RST approach recognizes a text as a group of clauses arranged in a hierarchical structure and interconnected in different ways, rather than being a mere sequence of clauses. A similar theoretical approach was used in a research by Sadek & Meziane (2016) for a similar purpose but with different datasets.

Abouenour (2014) proposed an Arabic QA system called IDRAAQ, which combined three different approaches based on levels, such as keyword, structure, and semantics. The three approaches were QE process based on AWN semantic relations, N-gram model for passage retrieving, and conceptual graphs (CGs) for extracting answers. The N-gram model referred to a consecutive series of N words extracted from a particular text, which could be utilized to estimate the likelihood of the subsequent word in the sequence based on the preceding word. The CGs presented graphical representations of the relationships between words and concepts. They were composed of nodes and edges, which represented the concepts and the relationships between them, respectively.

Ahmed & Anto (2016) aimed to analyze open-domain questions to extract correct answers. For analyzing questions, several steps were proposed, such as tokenization, stop word removal, POS tagger, and classifying question using the SVM algorithm. Thereafter, a vector space model (VSM) was used to retrieve documents, and then extract answers. Before tokenizing the questions, an Arabic tree bank (ATB) was used for morphological analyses. It was a corpora annotated for morphological information and POS with syntactic properties.

A framework known as semantic question answering (SQA) was proposed by Tatu et al. (2016) for large document collections. The aim of the framework was to provide an opportunity for users to retrieve stored information using natural language questions. The questions were automatically transformed into SPARQL queries and utilized to investigate the semantic index of the resource description framework (RDF). SPARQL is a protocol and semantic query language for RDF, which is designed to retrieve and manipulate data stored in the RDF format. The RDF involves statements used for describing and representing data in a structured manner. It consists of a set of rules for representing data in the form of triples, composed of a subject, predicate, and an object. The RDF allows organization of data into a graph structure, which can be used for querying and interpreting data.

Shaker et al. (2016) applied an ontological approach on an Islamic dataset called Fatwa. The study aimed to propose a QA approach for the domain of Islamic Fatwa utilizing ontology-based techniques. Hence, an ontological model was constructed through a compilation of Fatwas from ibn uthaymeen-prayer Fatwas.

Al-Chalabi, Ray & Shaalan (2015) applied a semantic approach to search and retrieve documents before answer extraction. The authors used a query expansion (QE) method to add semantically equivalent terms to increase the likelihood of retrieving documents containing relevant information. QE is a technique employed in IR to reduce query–document mismatch and boost retrieval performance. This was implemented by choosing and incorporating terms into the query of the user.

A hybrid approach combining RB and ML methods was used by AlShawakfa (2016). The author built a PoS tagger with combination of RB approach. The tagger employed over 35 tagging rules to recognize various types of nouns, including “Proper, Action, Genus, Agent, and Patient nouns,” along with adjectives, adverbs, demonstrative nouns, instrument, time, and place (AlShawakfa, 2016). Additionally, more than 15 and 17 tagging rules were used to identify particles and verbs, respectively. For IR, the relational database system employed VSM for locating and fetching relevant documents. The mathematical model entailed representation of each document and query as a vector and involved assigning weights to the index terms in both entities. Similarity between the query and each document was determined based on these weights, which allowed the model to identify the documents with highest relevancy to the query. Therefore, the best results were retrieved. Cosine similarity is a measure of how similar two vectors are to each other, and is often used to compare the similarity of documents. Through the analysis of cosine similarity between a query and a set of pertinent documents, the best possible answers for the query can be accurately determined.

Another system known as LEMAZA was proposed for Arabic “why” questions by Azmi & Alshenaifi (2017). They applied the RB approach to RST and aimed for IR in open-domain QA field. The documents were processed by breaking them into tokens, normalizing them, removing stop-words, and stemming.

Nabil et al. (2017) proposed a system to improve the process of retrieving passages from numerous documents by analyzing the question, retrieving the document, and then extracting the answer. This approach is used most commonly by Arabic QA systems. QE with SVM algorithm was used to analyze and classify questions. An improved version of MADA, known as MADAMIRA, for Arabic text was also used. MADAMIRA ran faster than MADA with more analyzing rules.

Albarghothi, Khater & Shaalan (2017) applied semantic web and ontological technologies to enhance QA tasks in the pathological domain. The ontology was built using a Protégé tool, which translated inquiries into triple patterns and built SPARQL queries to access RDF data. Protégé is a widely used tool for representing and reasoning knowledgeable concepts and defining the properties and instances of those concepts. According to the ontological dictionary, Protégé is defined as a RDF/web ontology language (OWL) file. OWL provided a wide range of constructs for representing the semantics of a domain and allowed for the expression of complex ontological relationships. Furthermore, it provided support for reasoning and enabled applications to infer new knowledge from the ontology. Thereafter, Jena framework was used for answer extraction and to build applications that made use of semantic web and linked data technologies. It allowed users to easily extract data from and write to RDF graphs.

Ben-Sghaier, Bakari & Neji (2018) built a system known as NArQAS based on the RB approach. Recognizing textual entailment (RTE) technique was used to identify the correct answer from a set of candidates. RTE was combined with several operations achieved by NLP tools, such as IR, information extraction, automatic language processing, and automatic reasoning.

Ismail & Homsi (2018) proposed a dataset of Arabic “why” questions. The authors calculated the probabilities of rhetorical relations (RR) to extract relevant answers for the posed questions. The RR indicated the logical relationship between two sections of texts. Ismail and Homsi (Ismail & Homsi, 2018) used RR to determine related reasons and causality in texts. Moreover, ML algorithms, such as SVM, were used to classify the selected documents from the internet into eight domains. NLP techniques such as bag-of-words were used to convert documents into vectors of weighted frequency for each token before RR was calculated. For retrieving answers, TF-IDF was used. However, the study was only focused classification and analysis of questions, instead of extracting correct answers.

To improve IR using a transformer model, Mozannar et al. (2019) built a retrieval system using TF-IDF. The system retrieved those Arabic text from Wikipedia that were related to user-generated questions, before passing the most relevant documents to the readers. Bidirectional encoder representation (BERT) was used as a reader in this study.

Al-Shenak, Nahar & Halawani (2019) used ML algorithms such as SVM, SVD, and LSI to classify questions. Based on these classifications, relevant documents containing answers were retrieved.

Bakari & Neji (2020) aimed to design a system to retrieve text from the internet. The authors converted both the questions and passages into semantic and logical representations. The questions and passages were first converted to CGs, which were then converted to logical representations. Textual entailment relations among the logical representations were extracted, and the most relevant passages were determined based on those relations.

Several pre-trained transformer models used to improve the accuracy of QA systems are available. These include multilingual BERT (mBERT) (Mozannar et al., 2019), AraBERT (Antoun, Baly & Hajj, 2020a), ARAGPT2 (Antoun, Baly & Hajj, 2020c), and ARAELECTRA (Antoun, Baly & Hajj, 2020b). These models were built to improve the understanding of text by computational systems and are used for QA and named entity recognition and sentiment analysis.

IR can be further improved with graph ontology, as demonstrated by Zeid, Belal & El-Sonbaty (2020). The study applied semantic operations to questions to expand the queries. Thereafter, the queries were used to search for data using graph ontology. This model followed three steps: question processing, document processing, and answer extraction. Each step was achieved using traditional NLP techniques.

Alamir et al. (2021) propose another system for open-domain QA tasks. The system consisted of three main stages: preparing data, processing data, and extracting answers. The TF-IDF and cosine similarity algorithms were used to retrieve the documents. For processing, a few NLP techniques were used. The dataset was built under the “Ministry of Human Resources and Social Development in the Kingdom of Saudi Arabia.” However, the system handled only those words that were similar in both the question and the document. Further improvements to the system were proposed to also handle the semantics of words for more accurate results.

Maraoui, Haddar & Romary (2021) aimed to create an Arabic QA system specialized in answering factoid questions, particularly relating to Islamic sciences. To extract answers, the system consisted of three phases: analyzing the question, searching for information, and processing the answer. The first stage of QA involved analysis of the question in order to formulate an appropriate query. After selecting a specific set of elements from a database, the second stage involved IR through an information search. During the third stage, the answer processing phase provided an accurate Arabic response. The system was built using a normalized database that adhered to the text encoding initiative (TEI) standard. TEI is a consortium of scholars and researchers who work together to develop and maintain a standard for representing texts in digital form.

Two deep bi-directional transformers for Arabic known as ARBERT and MARBERT were proposed by Abdul-Mageed, Elmadany & Nagoudi (2021). Both transformer models were built and implemented based on the BERT transformer model. The models differed in their training data as some of the models trained on MSA, social media data, or Arabic dialects. However, the models had the same stages as that of the BERT model. Additionally, the study proposed a new large benchmark known as Arabic natural language understanding evaluation benchmark (ARLUE). The ARLUE composed of 42 Arabic datasets for Arabic NLP tasks. However, the result of fine-tuning the model on Arabic QA task was not competitive because some questions were based on Wikipedia articles, and the two models were not trained on Arabic Wikipedia articles.

To build and train Arabic models based on text-to-text transfer (T5) (Raffel et al., 2020) and mT5 (Xue et al., 2021) transformer models, Nagoudi, Elmadany & Abdul-Mageed (2022) proposed and implement AraT5 for several tasks. T5 and BERT are different in that T5 has a casual decoder and uses the task of fill-in-the-blank cloze instead of a masking task. The AraT5 had a task of question generation under the mode of read the passage and generate question. A new Arabic QA dataset named ARGEN QGwas developed. The dataset was created by gathering sets of QA dataset that included Arabic reading comprehension dataset (ARCD), multi-lingual QA (MLQA), cross-lingual question answering dataset (XQuAD), and typologically diverse languages question answering (TyDiQA). However, the accuracy for QA was unsatisfactory, and the reasons for low accuracy were excluded for this study.

Alsubhi, Jamal & Alhothali (2022) suggested improvements to IR modules by applying dense passage retrieval (DPR) techniques instead of TF-IDF and best match 25 (BM25). The suggested solution was intended to develop open-domain QA systems. The proposed DPR method was used to read and rank passages with accurate results within shorter periods than ML methods. Being a retrieval-based method, the DPR method used a dense vector representation of text to identify relevant passages from a collection of documents in open-domain QA systems. A transformer model was utilized to encode passages, and then a scoring function was used to rank the passages according to their relevance to a given query (Alsubhi, Jamal & Alhothali, 2022). BM25 served as a ranking mechanism employed by search engines to assess the relevancy of a document to a specific search query. It was built based on SVM, but with addition functions of IR tasks, such as document classification and clustering. Additionally, BM25 finds applications in text vectorization, which is a process of transforming text into numerical vectors that can be used to determine the similarity between documents. However, the QA datasets considered by Alsubhi, Jamal & Alhothali (2022) were not enough for training the system because a low resource dataset or Arabic dataset was used.

As an extra step for IR, Hamza et al. (2022) classified questions before candidate answer extraction. They aimed to reduce searching space for correct answers by the use of three embeddings for the questions. The first embedding involved the use of word representations in BERT, embedding from language models (ELMo), AraBERT, and word to vector (W2V). Fused embedding with fine-tuning questions was the second method for embedding, while the third method involved utilizing a “boom one head” neural fusion model to derive features from both fine-tuning and existing embeddings of the questions. Additionally, the study used vanilla classifier to classify the questions. However, only the accuracy results of the classifier methods were discussed, while its application to Arabic QA datasets were excluded.

To increase the accuracy of QA systems utilizing datasets from the Holy Qur’an as an Islamic dataset, several studies were published in 2022 in the “5th Workshop Open-Source Arabic Corpora and Processing Tools with Shared Tasks on Qur’an QA and Fine-Grained Hate Speech Detection,” some of which are discussed as follows.

Premasiri et al. (2022) proposed the use of transfer learning (ensemble learning) to improve and increase Arabic QA datasets. Contributions to the field of Arabic QA were aimed by presenting Qur’an QA datasets. Different versions of Arabic transformer models, such as AraELECTRA, camelbert, mBERT, and AraBERT, were introduced. Thereafter, self-ensemble methods were applied to predicted the most accurate answers to the questions and overcome different results. However, the study did not consider the results of fine-tuning the transformer models using the Holy Qur’an dataset.

Furthermore, ElKomy & Sarhan (2022) attempted to improve the accuracy of the Qur’an QA dataset. An ensemble approach with post-processing operations after the fine-tuning stage was proposed in the study, which used BERT, AraBERT, ARBERT, and MARBERT transformer models to collect all prediction result of answers that were then merged. This approach was termed as ensemble-vanilla approach. Conversely, ElKomy & Sarhan (2022) suggested and implemented post-processing approaches by ranking the top 20 span answers. The answers were listed by a single model of the set model used in the ensemble approach or by the span-voting ensemble approach. However, variations were observed in the reported results owing to using extensive cross-validation.

Alnajjar & Hämäläinen (2022) designed a model based on multilingual BERT and fine-tuned it especially for Qur’an QA dataset. They reimplemented multilingual BERT model with an addition of Islamic data for pre-training the model. The Islamic data were collected from different Islamic websites and they provided explanations of the Qur’an (Tafseer and Fatwas). Thereafter, a comparison between the designed and multi-lingual BERT models was drawn. The new model had followed the post-processing step after predicting the answer to check the answer length. For example, questions on “who” should have short answers. Conversely, the new model scored approximately 34% in partial reciprocal rank (pRR).

To overcome the overfit of training models on Qur’an QA datasets, Aftab & Malik (2022) suggested and implemented regularization techniques such as data augmentation and wight-decay. The BERT model was used to train models, which were then fine-tuned to Qur’anic reading comprehension dataset (QRCD). The results showed improvement after using both regularization techniques. The use of another technique such as back translation was suggested to increase the Arabic QA dataset and enhance the performance of QA.

Mostafa & Mohamed (2022) suggested solutions to improve the performance of Qur’an QA using QRCD. For the study, AraELECTRA was used to train the model on Arabic QA dataset to enhance the fine-tuning phase. Before fine-tuning the model on the QRCD, TyDi QA, SQuAD, and ARCD were employed. Moreover, Mostafa & Mohamed (2022) experimented several loss functions, such as cross-entropy, focal-loss, and dice-loss, to find the best function for increasing the performance of their model. Among the considered loss functions, cross-entropy showed the best performance.

To develop an answer on QRCD, Touahri et al. (2022) designed a sequence-to-sequence model based on the mT5 language model. The model was implemented as base, large and extra-large. After training on the train set of the QRCD, the model was fine-tuned. Thereafter, the development set was used for evaluation, and the test set was utilized to generate predictions. The model had high performance on the development set. However, the performance was much lower on test set and a degradation in performance results was observed.

Alsaleh, Althabiti & Alshammari (2022) used three Arabic models, AraBERT, CAMeL-BERT, and ArabicBERT, for fine-tuning on QRCD. The experimental results showed that AraBERT outperformed the other Arabic pre-trained models. However, the highest result of accuracy still required improvements, especially when related to religion questions.

Singh (2022) suggested three solution for fine-tuning using QRCD. Three techniques known as semantic embeddings and clustering, Seq2Seq based text span extraction, and fine-tuning BERT model were used. The first technique was aimed at using sentence embedding for each answer in QA dataset using AraBERT. The second was aimed at generating the text span of answers using mT5 and mBART. Finally, the third solution involved the use of BERT. The suggested model treated a question-passage pair as a unified sequence and subsequently transformed it into an input embedding. Higher results were obtained from the third solution.

Keleg & Magdy (2022) implemented improvements in fine-tuning Arabic BERT on QCRD. They categorized datasets based on question types. Additionally, they built faithful splits to generate new training and development datasets. Thereafter, the datasets were concatenated to detect data leakage between two splits of the dataset. However, the results of accuracy were unsatisfactory as the size of the dataset was not enough for training.

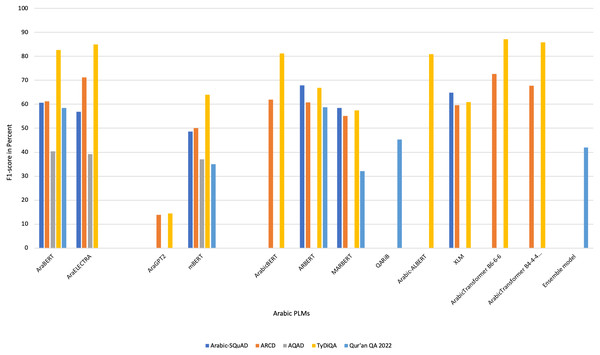

An ArabicTransformer model was proposed by Alrowili & Shanker (2021). The model comprised ELECTRA-objective and a funnel transformer. The funnel transformer was used to decrease the count of hidden states in a sequence by utilizing a pooling method, which results in a substantial decrease in the pre-training costs. To compress the complete sequence of hidden states of the encoder into a set of blocks, a pooling technique was employed. The ArabicTransformer model had one architecture B6-6-6, which consisted of three blocks. Each block had six layers, with a hidden size of 768. A B4-4-4 model design comprises three blocks, each having a hidden size of 768 across four layers. The model was trained on several tasks such as QA. However, the model was tested on only two QA datasets.

Data listed in Table 4 present a summary of the selected Arabic QA studies based on the study reference and year, name of proposed system or model, approach used in building the system or model, aimed open or closed-domain of study, utilized dataset to evaluate the proposed solution, evaluation result, and the limitation or recommended for the future work.

| Ref. | System or model/novelty | Approach | Domain | Methodology | Dataset | Evaluation result | Limitations/Future work |

|---|---|---|---|---|---|---|---|

| Ezzeldin, Kholief & El-Sonbaty (2013) | ALQASIM → It introduced a novel technique for analyzing reading test documents instead of questions for answer selection and validation. | RB | Open-domain | Analyze the reading documents instead of the questions using the following NLP tools: MADA and TOKAN morphological analyzer, POS, AWN, and removing stop words. To extract answers, score distance between answer and question locations. |

QA4MRE, Cross-language education and function (CLEF) | Accuracy: 0.31% C@1: 36% |

Low accuracy results not discussed. |

| Fareed, Mousa & Elsisi (2013) | N/A → It proposed a design for an Arabic question answering system based on query expansion ontology and an Arabic stemmer. | RB | Open-domain | Three main steps: Question analysis using AWN, document retrieval using JIRS, and Khojah stemmer. Answer extracting. |

CLEF, text retrieval conference (TREC) | Accuracy: 38.77% Mean Reciprocal Ratio (MRR): 16.20% Answered questions: 65.55% |

Authors are recommended to test the system on a larger dataset of questions. |

| Abdelnasser et al. (2014) | Al-Bayan → The system is specialized for the Holy Qur’an. The system retrieves the most relevant Qur’an verses and extracts the passage that contains the answer from the Qur’an and its interpretation books (Tafseer). | ML → SVM with NLP tools such as MADA | Closed-domain | Three components: Semantic IR to retrieve verses for user questions. MADA to analyze questions and SVM to classify questions. A third component to extract the ranked answers with their interpretations. |

Quranic Ontology and Tafseer Books → A total of 230 questions, comprising randomly collected queries from forums and common Quranic topics, were segregated into two sets. The first set comprised of 180 questions for training purposes, while the second set consisted of 50 questions for testing. | Accuracy: 85% Precision: 73% C@1:11% |

Authors are recommended to apply the proposed solution on list-type questions. |

| Kamal, Azim & Mahmoud (2014) | N/A → The proposed system uses information retrieval approaches to get to the closest answers to the input question, even if the question differs from the stored questions. | RB | Closed-domain | Three main steps: Question analysis → tokenization, normalization, and classifying questions. Candidate answer retrieval → NER and TDM Answer ranking → TF-IDF and SVD |

Fatwa → A compilation of 3,000 distinct passages on Islamic QA, sourced from various websites, was manually assembled into a fatwa. | Recall: 95.3% MRR: 0.916 |

Several processing steps for each question, document, and passage may cause delay in retrieving answers. The study is focused on IR rather than RC. |

| Sadek (2014) | N/A → The paper proposed a new strategy for developing QA systems for the Arabic language, specifically for answering ”why” and ”how to” questions. | RB | Closed-domain → Extracting answers by applying text mining approach. | Combine two models: Pattern recognizer to apply linguistic patterns and relationships among sentences. Text parser that uses RST to analyze texts from a discourse perspective. Answer extraction by VSM. |

Dataset for “why” and “how to.” Set of articles collected from the contemporary Arabic corpus (415 texts, 70 “why” questions, 20 “how to” questions) with their answers. |

Recall: 81% Precisions: 78% Accuracy: 68% |

The proposed solution was built by calculating the pattern similarity between words, which may hold different meanings. |

| Abouenour (2014) | IDRAAQ → It consisted of three-levels approaches to improve a system for Arabic QA task using existing resources and several techniques. | RB | Open-domain | Three-level approach based on the level of keywords, structure, and semantic meaning. Apply QE using AWN for question analysis. Use N-gram model for document retrieval. Use CGs to represent meanings between questions and passages to predict the answers. |

TREC, CLEF | TREC+ CLEF: 26.76% accuracy, 11.58 MRR |

The system requires larger datasets to introduce more accurate results. |

| Al-Chalabi, Ray & Shaalan (2015) | N/A → The proposed a method to add semantically equivalent keywords in Arabic questions by using semantic resources, which can improve the accuracy of Arabic QA systems. | RB | Open-domain | Apply QE method through the addition of semantically equivalent terms to increase the likelihood of retrieving documents containing relevant information. Use AWN tool as a semantic resource to find synonyms for words in questions. |

TREC, CLEF, Arabic questions. | MRR: 2.18 out of 3. | Results are not discussed in detail and are poorly presented. |

| AlShawakfa (2016) | N/A → The originality of this research lies in the creation of an extensive collection of over 60 tagging rules, 15+ question analysis rules, and 20+ question patterns. to enhance the precision and correctness of answers generated in the context of Arabic QA. | RB | Open-domain | More than 95 tags rules, question analysis rules, and question patterns are combined and used to build the system. VSM is used for IR. |

Dataset collected from Wikipedia dated 2010. Contains 75 documents with 335 questions. | F1: 87% Accuracy: 78% Recall: 97% |

The system is based on syntactic analysis of words, which is a less accurate and time-consuming approach. |

| Sadek & Meziane (2016) | N/A → The paper developed of a new Arabic text parser that is oriented towards QA systems dealing with ”why” and ”how to” questions. | RB | Closed-domain | Apply Arabic text parser designed for QA systems that handle “why” and “how to” questions. RST is used for describing relations in text. | “Why” and “how to” QA dataset consisting of documents from open-source Arabic corpora. | Recall: 68% MRR: 0.62. | Authors are recommended to use investigate query expansion techniques for better results. |

| Ahmed & Anto (2016) | N/A → The novelty of this paper lies in the use of several techniques to analyze the question, including a Stanford POS Tagger & parser for Arabic language, NER, tokenizer, Stop-word removal, question expansion, question classification, and question focus extraction components. | Hybrid approach (RB+ML) →SVM classifier | Open-domain | Analyze questions by: ATB morphological analyses, and then tokenize questions. Remove stop words. QE using AWN. Classify questions using SVM. Focus on questions using Stanford POS tagger for Arabic. retrieving document using VSM. Extract questions based on Arabic tagger. |

TREC | MRR: 65% | Answer extraction based on an Arabic tagger may reduce accuracy. |

| Tatu et al. (2016) | SAQ → This framework transforms the semantic knowledge extracted from natural language texts into a language-agnostic RDF representation and indexes it into a scalable triplestore. | RB based on ontology | Open-domain | Transfer unstructured text in questions into RDF as structured format, and then to SPARQL to obtain the answers. | TREC | MRR: 65.82% | Dataset is not large enough to evaluate the system. |

| Shaker et al. (2016) | N/A → The paper introduced ontology-based approach for Arabic QA in the domain of Islamic Fatwa. | RB based on ontology | Closed-domain | Comprises several components including: Question pre-processing → stop word removal. Question analysis → similarity of cosine and Jaccard algorithms to classify questions. Question expansion → words in the query are analyzed in terms of semantic, morphology, and spilling errors. Specific-domain ontology → constructed using TFIDF from the dataset. Open-domain ontology → constructed using AWN. |

Fatwas collected from Ibn-Othaimeen Prayer Fatawas Book. | Closed-domain: precision: 72% Recall: 59% F1: 65% Open-domain: precision: 92% Recall: 90% F1: 91% |

Authors are recommended to extend the classes of ontology to include more Islamic concepts |

| Azmi & Alshenaifi (2017) | Lemaza → It is an Arabic why-question answering system that uses the RST to automatically answer why-questions for Arabic texts. | RB | Open-domain | Utilize RST. The process of analyzing question, pre-processing and retrieving document, and extracting answer are broken down into four components. | “Why” QA dataset consisting of documents from open-source Arabic corpora with 110 “why” question and answer pairs. | Recall: 72.7% Precisions: 78.7% C@1: 78.68% |

Authors are suggested to expand the test collection by incorporating a more extensive corpus, resulting in an increase in the number of questions. |

| Nabil et al. (2017) | AlQuAnS → It introduced a new answer extraction pattern that matches the patterns formed according to the question type with the sentences in the retrieved passages in order to provide the correct answer. | Hybrid approach (RB+ ML) → SVM classifier | Open-domain | IR using semantic QE and ranking retrieved passages using a semantic-based process called MADAMIRA. | CLEF, TREC |

Accuracy = 15.30% | Researchers are suggested to use DL techniques in future works. |

| Albarghothi, Khater & Shaalan (2017) | N/A → The paper introduced system based on ontology that utilizes semantic web and ontology technologies to represent domain-specific data that can be used to answer natural language inquiries. | RB based on ontology | Closed-domain | Build pathology ontology → using semantic web and Protégé tool Select questions and process it. Answer questions → using Jena framework based SPARQL query. |

Pathology dataset | Precession: 81% Recall: 93% F1: 86% |

Authors should cover more domains. |

| Ben-Sghaier, Bakari & Neji (2018) | NArQAS → The system combines reasoning procedures, NLP techniques, and recognizing textual entailment technology to develop precise answers to natural language questions. | RB → combination of semantic analyzer with logical reasoning; | Open-domain | Three main steps: question analysis, passage retrieval, and logical representation of relationships in text. RTE technique used to extract the exact answer among several candidates. |

A collection of 250 questions gathered from TREC, CLEF, FAQ, and online forums forming a corpus of question-based texts. | Accuracy: 68% for answering factoid questions from the web. | Use and integration of NLP tools with semantic analyzer is time-consuming. Authors are suggested to use DL word embedding. |

| Ismail & Homsi (2018) | N/A → The paper introduced a new publicly available dataset called DAWQAS, which consists of 3205 why QA pairs in Arabic language. | Hybrid approach (RB+ ML) → SVM, and bag of words | Closed-domain for “why” questions | SVM classifies selected documents from the web. Bag-of-words convert documents into vectors. RR is calculated. Retrieve answers by TF-IDF. |

DAWQAS | F1 = 71% | Authors are suggested to add more examples to train the algorithm using DL and classify the questions automatically. |

| Mozannar et al. (2019) | SOQAL → The paper introduced ARCD dataset. Also, it used TF-IDF approach for document retrieval and BERT for neural reading comprehension. | Hybrid approach (RB+ DL) → TF-IDF and BERT | Open-domain | Retrieve Arabic documents related to a question using TF-IDF; pass those documents to BERT to extract an answer. | Arabic-SQuAD, ARCD | F1-Arabic-SQuAD = 48% EM-Arabic-SQuAD = 34% F1-ARCD = 51% EM-ARC = 19% |

The study uses BERT as a reader, which is not a model specific for Arabic text such as AraBERT. |

| Al-Shenak, Nahar & Halawani (2019) | AQAS → The novelty of this paper is proposing an enhanced method and system for Arabic QA. The proposed system uses SVM, SVD, and LSI to classify the query in two phases. | ML → SVM, SVD, and LSI | Open-domain | SVM, SVD, and LSI are used for classifying the questions and retrieving relevant information. | TREC | Results for classification step: Precision = 98% Recall = 97% F1= 98% For querying: Precision (average): 88% Precision (minimum): 17% |

The study focuses on classifying questions rather than extracting correct answers. |

| Bakari & Neji (2020) | NArQAS → The system integrates RTE technique with semantic and logical representations to determine the relation of textual entailment between the logical representations of the question and the text passage, | RB | Open-domain | Analyze questions, extract features of the questions, identify relevant passages based on features of the questions. | AQA-WebCorp | Accuracy = 74% | The study does not retrieve passages. |

| Antoun, Baly & Hajj (2020a) | AraBERT → It described the process of pretraining the BERT transformer model specifically for the Arabic language. | DL → AraBERT | Closed-domain | Two main phases: pre-train and fine-tune the model. | -TyDiQA -ARCD |

EM-TyDiQA = 71% F1-TyDiQA = 83% EM-ARCD = 31% F1-ARCD = 65% |

Improvements to RC are required in the pre-training phase. |

| Antoun, Baly & Hajj (2020c) | AraELECTRA → This model introduced different approach that uses the replaced token detection (RTD) objective instead of the traditional masked language modeling (MLM) objective used in AraBERT. | DL → AraELECTRA | Closed-domain | Two main phases: pre-train and fine-tune the model. | -TyDiQA -ARCD |

EM-TyDiQA = 74% F1-TyDiQA = 86% EM-ARCD = 37% F1-EM-ARCD = 71% |

Improvements to RC are required in the pre-training phase. |

| Antoun, Baly & Hajj (2020b) | AraGPT2 → The paper presented the first advanced Arabic language generation model. The model is trained on a large corpus of internet text and news articles. | DL → AraGPT2 | Closed-domain | Two main phases: pre-train and fine-tune the model. | -TyDiQA -ARCD |

EM-TyDiQA = 3% F1-TyDiQA = 14% EM-ARCD = 4% F1-EM-ARCD = 13% |

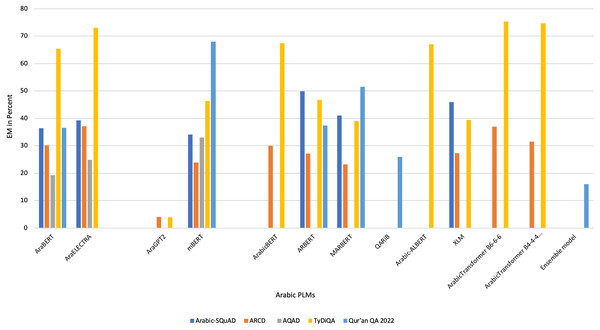

The study is not suitable for QA systems as it generates texts rather than extracting answers. |