Object-based multiscale segmentation incorporating texture and edge features of high-resolution remote sensing images

- Academic Editor

- Jingzhe Wang

- Subject Areas

- Algorithms and Analysis of Algorithms, Computer Vision, Data Mining and Machine Learning, Spatial and Geographic Information Systems

- Keywords

- Texture feature, Edge intensity, Time-frequency analysis, Multiscale segmentation, Object-based

- Copyright

- © 2023 Shen et al.

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2023. Object-based multiscale segmentation incorporating texture and edge features of high-resolution remote sensing images. PeerJ Computer Science 9:e1290 https://doi.org/10.7717/peerj-cs.1290

Abstract

Multiscale segmentation (MSS) is crucial in object-based image analysis (OBIA) methods. How to describe the underlying features of remote sensing images and combine multiple features for object-based multiscale image segmentation is an active research topic in the field of OBIA. Traditional object-based segmentation methods mostly use the spectral and shape features of remote sensing images and pay less attention to texture and edge features. We analyze traditional image segmentation methods and object-based MSS methods. Then, on the basis of a comparison of image texture feature description methods, a method for describing remote sensing image texture features based on time-frequency analysis is proposed. In addition, a method for measuring the texture heterogeneity of image objects is constructed on this basis. Using bottom-up region merging as the MSS strategy, an object-based MSS algorithm for remote sensing images that incorporates texture features is proposed. Finally, based on the edge features of remote sensing images, a description method for remote sensing image edge intensity and an edge merging cost criterion are proposed. Combined with the heterogeneity criterion, an object-based MSS algorithm combining spectral, shape, texture, and edge features is proposed. Experimental results show that the comprehensive-feature object-based MSS algorithm proposed in this article obtains more complete segmentation objects when segmenting ground objects with rich texture information and slender shapes, and is not prone to over-segmentation. Compared with the traditional object-based segmentation algorithm, the average accuracy of the algorithm is increased by 4.54%, and the region ratio is close to 1, which is more conducive to the subsequent processing and analysis of remote sensing images. In addition, the object-based MSS algorithm proposed in this article can effectively obtain more complete ground objects and can be widely used in scenes such as building extraction.

Introduction

Multiscale segmentation (MSS) is vital for high-resolution remote sensing image analysis (Zhang et al., 2014; Johnson & Xie, 2013; Zhang, Xiao & Feng, 2017). The MSS algorithm can establish a strong foundation for further image analysis, such as terrain classification, target recognition, information extraction, etc. (Johnson et al., 2015; Zhang et al., 2015; Ma et al., 2018; Johnson, Tateishi & Hoan, 2013). The MSS is executed based on a variety of low-level features of the image, such as spectral feature and shape feature. The features of the image objects are comprehensively utilized and analyzed, and then the image objects are identified and processed to achieve further applications such as object detection. The MSS algorithm using spectral features and shape features has been widely studied. With the development of the MSS algorithm, scholars continue to integrate new features into the algorithm.

In recent years, some scholars have focused on improving and optimizing MSS algorithms by incorporating spectral and texture features. Wang & Li (2014) proposed a segmentation method for high spatial resolution remote sensing images based on the fusion of spectral, texture, and shape features. They used the nonsubsampled contourlet transform to obtain texture features of the regions, and proposed an integrated region merging criterion that combines the texture, spectral, and shape features. Di et al. (2017) proposed a method for the multi-scale segmentation of high-resolution remote sensing images by integrating multiple features. They used the Canny operator to extract edge information, and proposed an adaptive Mumford-Shah region merging rule that combines spectral and texture information for segmentation. Fu et al. (2018) proposed a fast and efficient framework for multiscale and multifeatured hierarchical image segmentation. The algorithm integrates spectral, texture, and structural information from a small number of superpixels to enhance expressiveness. Lu, Wang & Yin (2019) proposed a superpixel method based on simple linear iterative clustering for the segmentation of high spatial resolution remote sensing images. They modified the algorithm by combining grey-level co-occurrence matrix texture with color features, and improved the distance measure by weighting texture and color similarity. Hu et al. (2021) proposed an MSS algorithm using a scale-sets structure, in which each segment is represented as a node of the hierarchy. In the algorithm, segments are described using spectral, textural, and geometric features, and are then classified using a random forest classifier for further applications.

Additionally, some algorithms and frameworks that adopt edge features have also been further developed. Ji, Zhang & Zhang (2013) proposed an improved edge linking method for segmentation of remotely sensed imagery. This method generated edges using the heuristic search method, which links all the initial edge points based on the gradient strength, gradient direction, and edge direction. Precise, continuous, and one-pixel-wide edges were produced for edge-based segmentation. Zhang et al. (2013) proposed a boundary-constrained multi-scale segmentation method. In this method, to improve the accuracy of object boundaries, the property of edge strength is used as a merging criterion. Wang & Li (2014) proposed a hard-boundary constraint and two-stage merging method for remote sensing image segmentation. Edge-constrained watershed segmentation and edge allocation were used to obtain initial small segments. In the first stage, they proposed a hard-boundary ratio to control the merge effectively. The second non-constrained merging stage is conducted on the initial object primitives, which results in final segmentation. Zhang, Xiao & Feng (2014) proposed a fast hierarchical segmentation method for high-resolution remote sensing images. They proposed an adaptive edge penalty function to formulate the merging criterion, serving as a semantic factor, which can help to remove meaningless weak edges within objects. Su (2019) proposed a new segmentation technique by fusing a region-merging method with an unsupervised segmentation evaluation technique called under- and over-segmentation aware, which is improved using edge information. The research on edge features has further improved the accuracy of the traditional MSS algorithm.

Scholars have also adopted methods such as optimization theory, semantics, and deep learning to broaden the research scope of MSS algorithms. Zhang, Tong & Chen (2012) made use of self-organizing, adaptive, and self-learning characteristics of evolutionary computation to automatically optimize the parameters of the multi-scale segmentation algorithm according to the evaluation of segmentation results. Shen et al. (2019) proposed an adaptive parameter optimization method for MSS. To find the optimal scale of objects, a local spectral heterogeneity measure was applied by calculating the spectral angle between inter and intra objects. Jiang, Huo & Feng (2020) proposed an optimal segmentation algorithm. This algorithm combines the principal component analysis (PCA) method with fuzzy c-means (FCM) method. Zhao et al. (2022) proposed an end-to-end attention-based semantic segmentation network (SSAtNet). In the encoder phase, they designed a more effective ResNet-101 backbone to capture detailed features. The aforementioned research further laid the foundation for the development of the MSS algorithm.

Much of the extant research has focused on spectral, shape, texture, and edge features individually. However, less research has considered all of these features together. To address this problem, we start from the texture feature and propose the concept of texture heterogeneity. Then, combining the texture feature with the spectral and shape features, we design a set of control experiments. The results show that the algorithm greatly improves the accuracy of the MSS algorithm. Next, the concept of edge intensity is proposed and combined with the texture, spectral, and shape features. Two sets of control experiments are designed, and the results show that the algorithm further improves the segmentation accuracy of the multiscale and multifeatured algorithm. The rest of the article is organized as follows: In the METHODS section, we review traditional MSS methods and object-based segmentation methods and describe the remote sensing image texture feature. Then, we propose the concepts of texture heterogeneity, edge intensity, and region ratio and apply them to traditional MSS methods in the RESULTS. There, we also design three groups of control experiments to test the accuracy of the proposed algorithm and compare the results with those of the traditional algorithm. Finally, the full text is summarized in the CONCLUSION.

Methods

Multiscale image segmentation with color and shape features

MSS is the core algorithm for object-based image analysis in the well-known remote sensing image analysis software eCognition Developer, which is produced by Definiens (Munich, Germany). The basic idea of MSS is that images in nature conform to fractal theory: a typical structure appears at different scales of an image and shows a certain degree of irregularity and self-similarity across scales. The fractal feature is particularly obvious in remote sensing images, where mountains and rivers all have fractal features. For example, in remote sensing images of urban areas, dense building areas present the following pattern: at a fine scale (small scale), buildings and building shadows form light and dark texture features; at a medium scale, buildings and the open space between them form a texture feature of light and dark; and at a coarse scale (large scale), the regularly arranged buildings also form a periodic texture feature. This self-similarity is how the fractal feature manifests in remote sensing images.

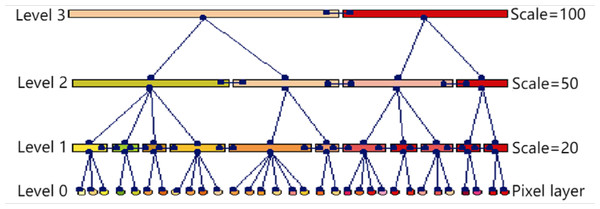

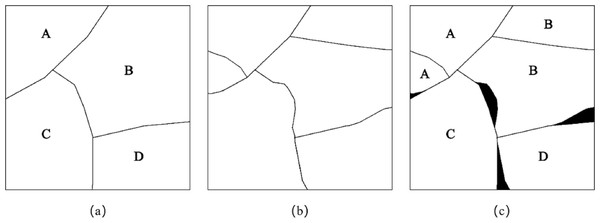

At each segmentation scale, the MSS yields the segmentation result of the image at that scale, i.e., a collection of image objects. Connecting the image objects of different scales that cover the same area of the image forms a hierarchical network of image objects, as shown in Fig. 1. In the hierarchical network, each image object stores not only its attribute information, such as spectral mean, variance, area, perimeter, and edge information, but also its adjacent objects and its affiliations (child objects and parent object). When comparing two image objects with similar attributes in a remote sensing image, it is particularly important to analyze their scale level and the semantic information between adjacent objects and subordinate objects.

Figure 1: Image object hierarchical network diagram.

The MSS is a bottom-up region merging algorithm. The algorithm adopts the merging criterion of the least increase in heterogeneity as the regional merging strategy. At the beginning of the segmentation, the algorithm regards each pixel in the image as a minimum image object and finds the local best mutual matching object for merging through the corresponding merging criteria. The algorithm regards the heterogeneity growth threshold as the scale parameter in image segmentation and, by setting different scale parameters, the image can be segmented at multiple scales.
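The merging loop described above can be illustrated with a minimal sketch on a one-dimensional signal. It is a simplification under stated assumptions, not the eCognition implementation: the cost is the growth in size-weighted standard deviation only, the globally cheapest adjacent pair is merged at each step (instead of the local mutual best match), and the names `merge_cost` and `segment_1d` are hypothetical.

```python
from statistics import pstdev


def merge_cost(a, b):
    """Growth in size-weighted standard deviation if segments a and b merge."""
    merged = a + b
    return len(merged) * pstdev(merged) - (len(a) * pstdev(a) + len(b) * pstdev(b))


def segment_1d(values, scale):
    """Merge adjacent segments bottom-up until the cheapest merge exceeds `scale`."""
    segments = [[v] for v in values]  # every pixel starts as its own object
    while len(segments) > 1:
        costs = [merge_cost(segments[i], segments[i + 1])
                 for i in range(len(segments) - 1)]
        best = min(range(len(costs)), key=costs.__getitem__)
        if costs[best] > scale:  # heterogeneity growth threshold acts as the scale
            break
        segments[best:best + 2] = [segments[best] + segments[best + 1]]
    return segments


signal = [10, 11, 10, 12, 50, 52, 51, 49, 90, 91]
fine = segment_1d(signal, scale=5.0)      # small threshold: fine segmentation
coarse = segment_1d(signal, scale=500.0)  # large threshold: coarse segmentation
print(fine)
print(coarse)
```

With the small threshold the three intensity plateaus remain separate objects, while the large threshold lets merging continue until one object is left, which is the sense in which the threshold plays the role of a scale parameter.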

In the MSS, object heterogeneity measures how heterogeneous the internal attributes of an image object are. The algorithm uses the growth value of the object's heterogeneity, together with a threshold on that growth, to decide whether an object is merged and with which neighbor. The measure of image object heterogeneity is therefore the core of the whole algorithm. The algorithm considers the spectral and shape features of the image and defines two measures of image object heterogeneity: spectral heterogeneity and shape heterogeneity. Spectral heterogeneity describes the degree of heterogeneity of the spectrum inside an image object. The spectral heterogeneity $h_{color}$ is defined as follows:

$$h_{color} = \sum_{c} \omega_c \, \sigma_c \tag{1}$$

Here, $c$ indexes the spectral channels, $\omega_c$ is the weighting factor of the $c$-th band, which must satisfy $0 \le \omega_c \le 1$ and $\sum_c \omega_c = 1$, and $\sigma_c$ is the standard deviation of the spectral values in the $c$-th band. For the shape feature of the remote sensing image object, the algorithm proposes two criteria for shape heterogeneity: compact heterogeneity and smooth heterogeneity. Compact heterogeneity describes the compactness of the shape of an image object. The closer the shape of the object is to a circle, the greater the compactness and the smaller the value of the compact heterogeneity. $h_{cmpct}$ is defined as follows:

$$h_{cmpct} = \frac{l}{\sqrt{n}} \tag{2}$$

Here, $n$ is the area of the image object (number of pixels), and $l$ is the perimeter of the image object. Smooth heterogeneity describes how smooth the image object is in shape. The closer the shape of the object is to a rectangle, the smoother the edge of the object and the smaller the value of the smooth heterogeneity. $h_{smooth}$ is defined as follows:

$$h_{smooth} = \frac{l}{b} \tag{3}$$

In Eq. (3), $l$ is the perimeter of the image object, and $b$ is the perimeter of the smallest circumscribed rectangle of the image object. In raster images, the algorithm approximates the smallest bounding rectangle by the bounding box of the object. When image object Obj1 and image object Obj2 are merged into image object ObjMerge, the algorithm defines the growth value $f$ of the overall object heterogeneity as the weighted sum of the spectral heterogeneity growth value $\Delta h_{color}$ and the shape heterogeneity growth value $\Delta h_{shape}$:

$$f = \omega_{color} \, \Delta h_{color} + \omega_{shape} \, \Delta h_{shape} \tag{4}$$

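$\omega_{color}$ and $\omega_{shape}$ are the spectral weighting factor and shape weighting factor, respectively, which must satisfy $0 \le \omega_{color} \le 1$, $0 \le \omega_{shape} \le 1$, and $\omega_{color} + \omega_{shape} = 1$. The growth value $\Delta h_{color}$ of spectral heterogeneity is defined as:

$$\Delta h_{color} = \sum_{c} \omega_c \left( n_{Merge} \, \sigma_c^{Merge} - \left( n_{Obj1} \, \sigma_c^{Obj1} + n_{Obj2} \, \sigma_c^{Obj2} \right) \right) \tag{5}$$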

In Eq. (5), $c$ denotes the band, and $\omega_c$ is the weighting factor of the $c$-th spectral band, which must satisfy $0 \le \omega_c \le 1$ and $\sum_c \omega_c = 1$. $n_{Obj1}$, $n_{Obj2}$, and $n_{Merge}$ are the areas (numbers of pixels) of Obj1, Obj2, and the merged object, and $\sigma_c^{Obj1}$, $\sigma_c^{Obj2}$, and $\sigma_c^{Merge}$ are the standard deviations of the spectral values of Obj1, Obj2, and the merged object in the $c$-th band. The algorithm defines the shape heterogeneity growth value $\Delta h_{shape}$ as the weighted sum of the compact heterogeneity growth value $\Delta h_{cmpct}$ and the smooth heterogeneity growth value $\Delta h_{smooth}$:

$$\Delta h_{shape} = \omega_{cmpct} \, \Delta h_{cmpct} + \omega_{smooth} \, \Delta h_{smooth} \tag{6}$$

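$\omega_{cmpct}$ and $\omega_{smooth}$ are the compact weighting factor and smooth weighting factor, respectively, which must satisfy $0 \le \omega_{cmpct} \le 1$, $0 \le \omega_{smooth} \le 1$, and $\omega_{cmpct} + \omega_{smooth} = 1$. The compact heterogeneity growth value $\Delta h_{cmpct}$ and the smooth heterogeneity growth value $\Delta h_{smooth}$ are defined as:

$$\Delta h_{cmpct} = n_{Merge} \frac{l_{Merge}}{\sqrt{n_{Merge}}} - \left( n_{Obj1} \frac{l_{Obj1}}{\sqrt{n_{Obj1}}} + n_{Obj2} \frac{l_{Obj2}}{\sqrt{n_{Obj2}}} \right) \tag{7}$$

$$\Delta h_{smooth} = n_{Merge} \frac{l_{Merge}}{b_{Merge}} - \left( n_{Obj1} \frac{l_{Obj1}}{b_{Obj1}} + n_{Obj2} \frac{l_{Obj2}}{b_{Obj2}} \right) \tag{8}$$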

In the above two formulas, $n_{Obj1}$, $n_{Obj2}$, and $n_{Merge}$ are the areas (numbers of pixels) of Obj1, Obj2, and the merged object, respectively. $l_{Obj1}$, $l_{Obj2}$, and $l_{Merge}$ are the perimeters of Obj1, Obj2, and the merged object, respectively. $b_{Obj1}$, $b_{Obj2}$, and $b_{Merge}$ are the perimeters of the smallest bounding rectangles of Obj1, Obj2, and the merged object, respectively. It can be seen from the above description that the segmentation result obtained by the MSS minimizes the sum of the weighted heterogeneity of the image objects at a given scale $s$. At this scale, the attribute differences within each image object are small enough, and the attribute differences between image objects are large enough.
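As a rough illustration of how Eqs. (4) to (8) combine, the sketch below evaluates the merging cost $f$ for two objects and their union. The `Obj` container, the helper `growth`, and the parameter names are hypothetical; the per-object areas, perimeters, bounding-box perimeters, and band standard deviations are assumed to have been measured beforehand.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class Obj:
    n: int        # area in pixels
    l: float      # perimeter
    b: float      # perimeter of the bounding box
    sigma: list   # standard deviation of each spectral band


def growth(term, o1, o2, m):
    """Area-weighted growth of a heterogeneity term when o1 and o2 merge into m."""
    return m.n * term(m) - (o1.n * term(o1) + o2.n * term(o2))


def merge_cost(o1, o2, m, w_color, w_cmpct, band_w):
    """Overall heterogeneity growth f from spectral and shape terms."""
    w_shape = 1.0 - w_color    # weights sum to one
    w_smooth = 1.0 - w_cmpct
    dh_color = sum(w * growth(lambda o, c=c: o.sigma[c], o1, o2, m)
                   for c, w in enumerate(band_w))
    dh_cmpct = growth(lambda o: o.l / sqrt(o.n), o1, o2, m)
    dh_smooth = growth(lambda o: o.l / o.b, o1, o2, m)
    dh_shape = w_cmpct * dh_cmpct + w_smooth * dh_smooth
    return w_color * dh_color + w_shape * dh_shape


# Two 2 x 2 pixel squares merged side by side into a 2 x 4 rectangle (one band).
left = Obj(n=4, l=8, b=8, sigma=[1.0])
right = Obj(n=4, l=8, b=8, sigma=[1.0])
union = Obj(n=8, l=12, b=12, sigma=[3.0])
f = merge_cost(left, right, union, w_color=0.5, w_cmpct=0.5, band_w=[1.0])
print(round(f, 4))
```

In this toy case the smoothness term does not grow at all, because each object fills its bounding box, so the cost is driven by the spectral term and, to a lesser extent, by compactness.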

Multiscale segmentation incorporating texture feature

The object-based image analysis method takes the image object as the basic unit. The segmentation results of images at different scales are obtained through the bottom-up region merging process. The segmentation process considers the spectral and shape features of the image object, but not the texture homogeneity between adjacent pixels in the image. Based on an analysis of image texture, we propose a remote sensing image texture feature descriptor based on time-frequency analysis. Furthermore, this section constructs a texture heterogeneity measure for image objects based on this descriptor and proposes an object-based MSS algorithm for remote sensing images that incorporates the texture feature.

Texture feature descriptor

When humans observe an image, they will find that although some ground objects do not have regularity in a local area, they will show a certain law when viewed as a whole. This feature of local irregularity and overall regularity is called texture. Humans have a clear perceptual understanding of the texture feature in images, but it is difficult to determine a unified mathematical definition. Currently, the commonly used methods of texture feature description can be divided into four categories: statistics-based methods, structure-based methods, model-based methods, and time-frequency analysis-based methods (Zhang & Tan, 2002).

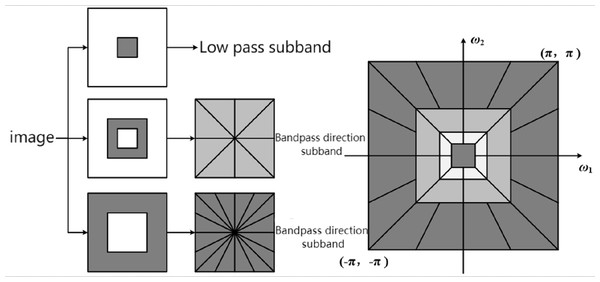

Scholars have successively proposed methods to describe texture features based on time-frequency analysis. The most famous is the Laws texture (Laws, 1980). The basic idea is to first filter the image with multiple small convolution templates to obtain features such as horizontal edges, high-frequency points, and vertical edges, and then convolve with a larger moving window to obtain the local texture energy. In terms of time-frequency analysis, after the wavelet, wavelet tree, and Gabor wavelet, the nonsubsampled contourlet transform (NSCT) has gradually become an area of research interest. As shown in Fig. 2, the NSCT process is mainly divided into two steps: First, the image is divided into a low-frequency subband and multiscale high-frequency subbands with a nonsubsampled pyramid structure, which yields the multiscale decomposition. Then, a nonsubsampled directional filter bank is used to decompose each high-frequency subband in multiple directions. Since the filters used in both steps are nonsubsampled, the translation invariance of the filtering result is ensured.

We use the nonsubsampled contourlet transform to decompose the image into multiscale and multidirectional subbands $f_{s,d}$, where $s$ represents the scale and $d$ represents the direction. Then, we use the local texture energy function to calculate the local energy of each scale and direction subband, and use the result as the texture feature of the image. Taking the window size as $(2n + 1) \times (2n + 1)$, the calculation formula for the texture feature $E_{s,d}$ at the coordinate $(x, y)$ in the image is as follows: (9) (10) (11)

In the above formulas, we assume that, within a local window, the NSCT coefficients contribute unequally to the texture feature at the center of the window: the farther a coefficient is from the center of the window, the smaller its contribution, with the weights following a Gaussian distribution. It is worth mentioning that, since the filters of the NSCT are nonsubsampled, the method yields a texture feature matrix equal in size to the original image; that is, each pixel in the image has a corresponding texture feature. This provides the basis for the construction of texture heterogeneity in the next subsection.
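The Gaussian-weighted local energy described above can be sketched as follows. This is an illustration under assumptions, since the exact form of Eqs. (9) to (11) is not reproduced here: the energy is taken as the Gaussian-weighted mean of the absolute subband coefficients, the weights are normalized to sum to one, border pixels are handled by clamping coordinates, and the function names and the `sigma` parameter are hypothetical. The input is one already computed subband $f_{s,d}$ given as a list of rows.

```python
from math import exp


def gaussian_window(n, sigma):
    """Normalized (2n+1) x (2n+1) Gaussian weights centred on the window."""
    raw = [[exp(-(i * i + j * j) / (2.0 * sigma ** 2))
            for j in range(-n, n + 1)]
           for i in range(-n, n + 1)]
    total = sum(map(sum, raw))
    return [[v / total for v in row] for row in raw]


def local_energy(subband, n=1, sigma=1.0):
    """Gaussian-weighted mean of |coefficient| around every pixel (edges clamped)."""
    h, w = len(subband), len(subband[0])
    g = gaussian_window(n, sigma)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(-n, n + 1):
                for j in range(-n, n + 1):
                    yy = min(max(y + i, 0), h - 1)  # clamp row index at the border
                    xx = min(max(x + j, 0), w - 1)  # clamp column index at the border
                    acc += g[i + n][j + n] * abs(subband[yy][xx])
            out[y][x] = acc
    return out


# A toy 3 x 4 "subband": the output has the same size as the input.
subband = [[0.0, 1.0, -1.0, 0.0],
           [2.0, -2.0, 2.0, -2.0],
           [0.0, 1.0, -1.0, 0.0]]
energy = local_energy(subband, n=1, sigma=1.0)
print(len(energy), len(energy[0]))
```

Because the output keeps the input size, every pixel receives one energy value per subband, which is the property the texture heterogeneity measure relies on.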

Figure 2: Schematic diagram of NSCT decomposition process.

Heterogeneity of texture feature

The image object has heterogeneity in its spectral feature. When there are two different texture regions in an image object, the object also has heterogeneity in its texture feature. Based on the remote sensing image texture feature, we propose an object-based remote sensing image MSS algorithm that incorporates texture features. The algorithm introduces the concept of texture heterogeneity, jointly considers the spectral, shape, and texture features of remote sensing images, and improves the heterogeneity criterion of the MSS. Texture heterogeneity describes the degree of heterogeneity in the texture feature within an image object. Texture heterogeneity $h_{texture}$ is defined as:

$$h_{texture} = \sum_{s,d} \omega_{s,d} \, \sigma_{s,d} \tag{12}$$

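$s$ and $d$ represent the scale and direction of the image texture feature, respectively. $\omega_{s,d}$ is the weighting factor of the texture feature at the $s$-th scale and $d$-th direction, which must satisfy $0 \le \omega_{s,d} \le 1$ and $\sum_{s,d} \omega_{s,d} = 1$. $\sigma_{s,d}$ is the standard deviation of the texture feature of the image object at the $s$-th scale and $d$-th direction. $\Delta h_{texture}$ is the texture heterogeneity growth value, which is defined as follows:

$$\Delta h_{texture} = \sum_{s,d} \omega_{s,d} \left( n_{Merge} \, \sigma_{s,d}^{Merge} - \left( n_{Obj1} \, \sigma_{s,d}^{Obj1} + n_{Obj2} \, \sigma_{s,d}^{Obj2} \right) \right) \tag{13}$$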

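$n_{Obj1}$, $n_{Obj2}$, and $n_{Merge}$ are the areas of Obj1, Obj2, and the merged object, respectively. $\sigma_{s,d}^{Obj1}$, $\sigma_{s,d}^{Obj2}$, and $\sigma_{s,d}^{Merge}$ are the standard deviations of the texture feature at scale $s$ and direction $d$ for Obj1, Obj2, and the merged object, respectively. Therefore, we compute the increase $f$ in the overall heterogeneity of the object as the weighted sum of $\Delta h_{color}$, $\Delta h_{shape}$, and $\Delta h_{texture}$:

$$f = \omega_{color} \, \Delta h_{color} + \omega_{shape} \, \Delta h_{shape} + \omega_{texture} \, \Delta h_{texture} \tag{14}$$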

$\omega_{color}$, $\omega_{shape}$, and $\omega_{texture}$ are the spectral, shape, and texture weighting factors, respectively, which must satisfy $0 \le \omega_{color} \le 1$, $0 \le \omega_{shape} \le 1$, $0 \le \omega_{texture} \le 1$, and $\omega_{color} + \omega_{shape} + \omega_{texture} = 1$. Using the improved heterogeneity criterion as the merging criterion in the object-based MSS of remote sensing images makes full use of the texture information of the ground features and improves the segmentation accuracy for remote sensing images with rich texture information.
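A small numeric sketch may help to show how the texture term enters the merging criterion. It assumes the per-object standard deviations of the texture feature are already available for every (scale, direction) pair; the function names and the dictionary layout are hypothetical, and the weight values mirror the texture-dominated settings reported in the experiments.

```python
def texture_growth(n1, n2, sig1, sig2, sig_m, weights):
    """Texture heterogeneity growth; sig* map (scale, direction) to a std value."""
    n_m = n1 + n2  # the merged object covers both objects
    return sum(w * (n_m * sig_m[k] - (n1 * sig1[k] + n2 * sig2[k]))
               for k, w in weights.items())


def overall_growth(dh_color, dh_shape, dh_texture, w_color, w_shape, w_texture):
    """Weighted sum of spectral, shape, and texture heterogeneity growth."""
    assert abs(w_color + w_shape + w_texture - 1.0) < 1e-9, "weights must sum to 1"
    return w_color * dh_color + w_shape * dh_shape + w_texture * dh_texture


weights = {(1, 0): 0.5, (1, 1): 0.5}   # one scale, two directions
sig_a = {(1, 0): 1.0, (1, 1): 2.0}     # object 1, 10 pixels
sig_b = {(1, 0): 1.0, (1, 1): 2.0}     # object 2, 30 pixels
sig_m = {(1, 0): 1.5, (1, 1): 2.0}     # merged object
dh_texture = texture_growth(10, 30, sig_a, sig_b, sig_m, weights)
f = overall_growth(dh_color=4.0, dh_shape=2.0, dh_texture=dh_texture,
                   w_color=0.0, w_shape=0.05, w_texture=0.95)
print(dh_texture, f)
```

Only the first direction contributes here, because the merged standard deviation in the second direction equals that of both parts; with a texture weight of 0.95 the overall cost is almost entirely determined by that contribution.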

Multiscale segmentation incorporating edge feature

Edge intensity descriptor

In the object-based MSS of remote sensing images, the shape heterogeneity criterion controls the shape of image objects. It uses two indicators, compact heterogeneity and smooth heterogeneity, to control the segmentation results. According to Eqs. (2) and (3), image objects that are closer to a circle have less compact heterogeneity, and image objects that are closer to a rectangle have less smooth heterogeneity.

Some slender features in the image, such as rivers, roads, ditches, etc., will have relatively high shape heterogeneity. When segmenting images with such features, the algorithm often divides these elongated features into segments and cannot segment similar features into complete objects. To describe the true shape of the features more effectively, we propose an edge merging cost criterion based on the edge feature of the image. Combined with the heterogeneity criterion, an object-based multiscale segmentation algorithm combining spectral, shape, texture, and edge features is proposed.
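A quick calculation with Eqs. (2) and (3) shows why slender objects are penalized. The sketch below compares a 10 × 10 pixel square with a 100 × 1 pixel strip of the same area; the helper name `rect_shape_terms` is hypothetical, and the perimeter is counted along pixel edges.

```python
from math import sqrt


def rect_shape_terms(width, height):
    """Compactness l / sqrt(n) and smoothness l / b of an axis-aligned pixel rectangle."""
    n = width * height          # area in pixels
    l = 2 * (width + height)    # perimeter measured along pixel edges
    b = l                       # the bounding box of a rectangle is the rectangle itself
    return l / sqrt(n), l / b


square = rect_shape_terms(10, 10)
strip = rect_shape_terms(100, 1)
print(square, strip)
```

Both shapes have the same smoothness term, but the compactness term of the strip is about five times that of the square, so a criterion weighted towards compactness tends to resist merges that would produce long, thin objects such as roads or rivers.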

In an image, there are often obvious boundaries between areas of different types, and there are discontinuous grayscale changes at the boundaries, which constitute the edges in the image. In object-based image segmentation, once the edge of the image object is determined, the shape of the object is also determined. Common gradient operators include Roberts cross-gradient operator (Roberts & Lawrence, 1965), Prewitt operator, Sobel operator (Sobel, 1970), etc. Some scholars also use the feature of zero-crossing at the edge points of the second-order differential of the image and extract the edge of the image by calculating the second-order partial derivatives of the image in the x and y directions, such as the Laplacian operator. The above operators are more sensitive to noise, so it is easy to detect wrong edge points when processing noisy images.

Therefore, some scholars smooth the image before applying the detection operator. Canny derived the optimal edge detection operator in two-dimensional space based on three basic criteria: low error rate, precise position, and single edge point response (Canny, 1986). After weighing the pros and cons of various edge detection operators, we select the Canny operator to construct the edge intensity of the image. The Canny algorithm expresses the above three criteria mathematically and seeks the optimal solution of the resulting expression. In the standard Canny formulation, with $g_x$ and $g_y$ denoting the partial derivatives of the Gaussian-smoothed image in the $x$ and $y$ directions, the edge intensity $M$ and the normal direction $\theta$ of the image at the point $(x, y)$ are:

$$M(x, y) = \sqrt{g_x(x, y)^2 + g_y(x, y)^2} \tag{15}$$

$$\theta(x, y) = \arctan\left( \frac{g_y(x, y)}{g_x(x, y)} \right) \tag{16}$$

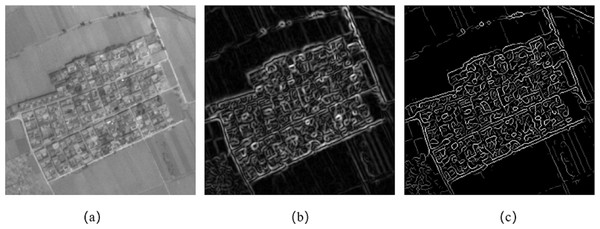

Since the edges detected by the gradient operator are generally thick, it is difficult to locate the edge points accurately. The Canny algorithm applies non-maximum suppression to the edge intensity map, and then detects and connects the edge points through dual-threshold edge tracking. In contrast, we apply only non-maximum suppression to thin the edge intensity map and use the thinned result as the edge intensity map of the image. Unlike the final detection result of the Canny operator, the thinned edge intensity map is not a binary image, so the intensity information of the edge points is retained, which is more conducive to the use of the image edge feature in subsequent processing. Figure 3A shows the result of edge detection with the Canny operator. Figures 3B and 3C show the Canny edge intensity map before and after thinning, respectively. It can be seen in the figure that the edges in the thinned edge intensity map are only one pixel wide, and the edge points are located more accurately.
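The thinning step can be sketched in a few lines. The code below is a simplified stand-in, not the authors' implementation: it uses Sobel kernels without prior Gaussian smoothing, quantizes the gradient direction into four sectors, leaves border pixels at zero, and breaks ties between equal neighbors so that a two-pixel response collapses to one pixel. The function names are hypothetical.

```python
from math import atan2, hypot, pi


def gradients(img):
    """Sobel gradients on interior pixels; border pixels are left at zero."""
    h, w = len(img), len(img[0])
    gx = [[0.0] * w for _ in range(h)]
    gy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx[y][x] = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                        - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy[y][x] = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                        - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
    return gx, gy


def thinned_edge_intensity(img):
    """Gradient magnitude after non-maximum suppression (non-binary output)."""
    h, w = len(img), len(img[0])
    gx, gy = gradients(img)
    mag = [[hypot(gx[y][x], gy[y][x]) for x in range(w)] for y in range(h)]
    out = [[0.0] * w for _ in range(h)]
    steps = [(0, 1), (1, 1), (1, 0), (1, -1)]  # (dy, dx) for 0, 45, 90, 135 degrees
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            angle = atan2(gy[y][x], gx[y][x]) % pi          # direction in [0, pi)
            sector = int((angle + pi / 8) // (pi / 4)) % 4  # nearest of four directions
            dy, dx = steps[sector]
            ahead = mag[y + dy][x + dx]
            behind = mag[y - dy][x - dx]
            # keep the pixel only if it is a local maximum along the gradient direction
            if mag[y][x] > ahead and mag[y][x] >= behind:
                out[y][x] = mag[y][x]
    return out


# Vertical step edge: left half dark, right half bright.
image = [[0, 0, 0, 10, 10, 10] for _ in range(5)]
edges = thinned_edge_intensity(image)
print(edges[2])
```

The retained values are gradient magnitudes rather than 0/1 flags, which is the property that the edge merging cost builds on.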

Edge merging cost criterion

The algorithm introduces the concept of “edge merging cost” and jointly considers the spectral, shape, texture, and edge features of remote sensing images. In the hierarchical stepwise optimization model proposed by Beaulieu & Goldberg (1989), image segmentation should be optimized with the smallest increase in the global expression error. At each merge, the adjacent region with the smallest merging cost is searched for, and finally a multiscale expression model of the image is built. The MSS is essentially in line with the hierarchical stepwise optimization model. The increase in object heterogeneity in the algorithm is the merging cost of the two objects, i.e., the increase in expression error described by the hierarchical stepwise optimization model. We define the edge merging cost when Obj1 and Obj2 are merged as follows: (17)

$M(x, y)$ represents the edge intensity of the image at the point $(x, y)$. Common denotes the set of points of Obj1 and Obj2 that are adjacent to each other, called the adjacency edge. Under the four-adjacency criterion, Common can be described as follows: (18)
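To make the adjacency edge concrete, the sketch below collects the common boundary of two labeled objects under four-adjacency and averages the edge intensity over it. The averaging is an assumed form of the edge merging cost, chosen for illustration because Eqs. (17) and (18) are not reproduced here; the function names and the label-map representation are likewise hypothetical.

```python
def common_boundary(labels, a, b):
    """Pixels of object a or b that have a 4-neighbour belonging to the other object."""
    h, w = len(labels), len(labels[0])
    common = set()
    for y in range(h):
        for x in range(w):
            if labels[y][x] not in (a, b):
                continue
            other = b if labels[y][x] == a else a
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                yy, xx = y + dy, x + dx
                if 0 <= yy < h and 0 <= xx < w and labels[yy][xx] == other:
                    common.add((x, y))
                    break
    return common


def edge_merge_cost(labels, intensity, a, b):
    """Mean edge intensity over the common boundary (assumed form of the cost)."""
    common = common_boundary(labels, a, b)
    if not common:
        return 0.0  # objects are not adjacent
    return sum(intensity[y][x] for x, y in common) / len(common)


labels = [[1, 1, 2, 2],
          [1, 1, 2, 2]]
intensity = [[0, 0, 8, 0],
             [0, 4, 8, 0]]
boundary = common_boundary(labels, 1, 2)
cost = edge_merge_cost(labels, intensity, 1, 2)
print(sorted(boundary), cost)
```

A strong edge running between two objects gives a high cost and discourages the merge, whereas two pieces of the same road or river separated by a weak edge give a low cost.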

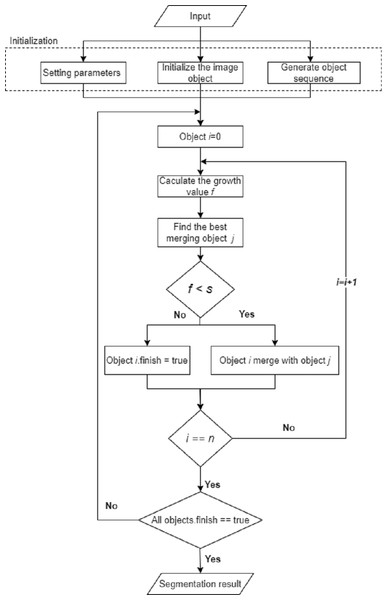

Algorithm flow

We propose an object-based remote sensing image MSS algorithm based on the edge merging cost criterion. The implementation process of the algorithm is shown in Fig. 4.

Figure 3: Edge intensity map.

(A) Image with edge detection by Canny operator. (B) Canny edge intensity map before thinning. (C) Canny edge intensity map after thinning.

Figure 4: Flow chart of MSS with texture and edge features.

When image object Obj1 and image object Obj2 are merged into ObjMerge, the proposed algorithm defines the merging cost $f$ generated by the merge as the weighted sum of the growth value of the object heterogeneity and the edge merging cost of the objects: (19)

When performing bottom-up region merging, the proposed algorithm adopts the minimum object merging cost as the region merging strategy. It combines the edge merging cost with the heterogeneity criterion to form a new merging criterion, i.e., a cost criterion based on edge merging.

Results

Evaluation analysis

Carleer, Debeir & Wolff (2005) evaluated the segmentation accuracy through two indicators: mis-segment ratio (MR) and regions ratio (RR). Figure 5 is a schematic diagram of wrong segmentation, where Fig. 5A is the reference segmentation result, Fig. 5B is the algorithmic segmentation result, and Fig. 5C is the algorithmic wrong segmentation map, in which the black area is the wrong segmentation part.

Figure 5: Schematic diagram of wrong segmentation based on pixel number error.

The lower the MR, the higher the overall accuracy of image segmentation, and vice versa. The MR is calculated as follows: (20)

$C_{ij}$ represents the total number of pixels whose reference category is $j$ that are assigned to category $i$, and $N_{ref}$ represents the number of reference result regions (i.e., the number of reference categories). The RR is used to evaluate whether there is over-segmentation or under-segmentation in image segmentation. The RR is the ratio of the number $N_s$ of regions in the segmentation result to the number $N_{ref}$ of regions in the reference result. The closer the RR is to 1, the better the segmentation result. The RR is calculated as follows:

$$RR = \frac{N_s}{N_{ref}} \tag{21}$$
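The two indicators can be computed from a pair of label maps as sketched below. The regions ratio follows the definition given above. The off-diagonal fraction is only an illustrative stand-in for the mis-segment ratio, since Eq. (20) is not reproduced here: it assumes that the segmentation and the reference use the same category labels and that every region carries a unique label. All function names are hypothetical.

```python
from collections import Counter


def regions_ratio(seg, ref):
    """RR: number of regions in the result divided by the number in the reference."""
    n_s = len({v for row in seg for v in row})
    n_ref = len({v for row in ref for v in row})
    return n_s / n_ref


def confusion_counts(seg, ref):
    """C[(i, j)]: pixels whose reference category is j and assigned category is i."""
    return Counter((s, r) for srow, rrow in zip(seg, ref)
                   for s, r in zip(srow, rrow))


def off_diagonal_fraction(seg, ref):
    """Share of pixels with i != j (an assumed, simplified error measure)."""
    counts = confusion_counts(seg, ref)
    total = sum(counts.values())
    return sum(v for (i, j), v in counts.items() if i != j) / total


ref = [[1, 1, 2, 2],
       [1, 1, 2, 2]]
seg = [[1, 1, 1, 2],
       [1, 1, 2, 2]]
rr = regions_ratio(seg, ref)
err = off_diagonal_fraction(seg, ref)
print(rr, err)
```

An RR above 1 would indicate more regions than the reference (over-segmentation) and an RR below 1 fewer regions (under-segmentation), independently of how many pixels are misplaced.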

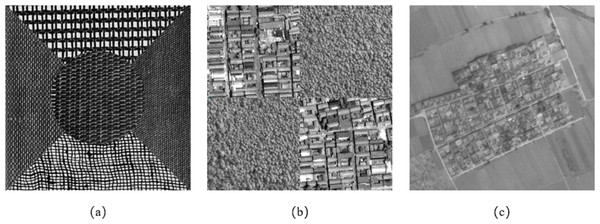

Experiment 1

To verify the performance of the proposed method, we used three sets of data to test the algorithm. In the first experiment, five different textures in the Brodatz standard texture library were selected and synthesized into a texture image, as shown in Fig. 6A. In the second experiment, two typical textures in remote sensing images, architectural area and woodland, were selected and synthesized into a texture image, as shown in Fig. 6B. The remote sensing texture area is selected from the IKONOS satellite remote sensing image, and the image spatial resolution is 1 meter. The third experiment selected a part of the remote sensing image of the ZY-3 satellite in the Wuhan area with a spatial resolution of 2.1 m, as shown in Fig. 6C.

To analyze the performance of the proposed method and the MSS in segmenting images with rich texture information, we increased the weight of the texture feature and reduced that of the edge intensity. The parameters were manually tuned according to the strength of the texture and edge features in each image. We chose the multiresolution segmentation algorithm in eCognition Developer 8.8 (hereafter, eCognition software) as the baseline, and compared and analyzed the results of the three groups of experiments.

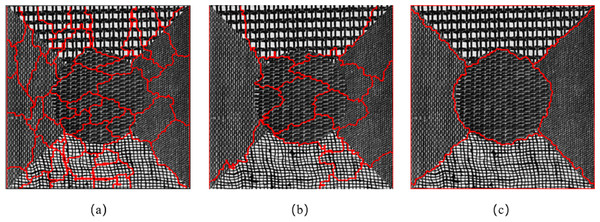

Figure 7 shows the segmentation result of the Brodatz texture synthetic image. Figures 7A and 7B show the segmentation results of eCognition software at different scales. The parameters in Fig. 7A are set to scale s = 30 and weight factors ωshape = 0.9, ωcmpct = 0.5. The parameters in Fig. 7B are set to scale s = 70 and weight factors ωshape = 0.8, ωcmpct = 0.5. Figure 7C is the segmentation result of the algorithm proposed in this article. The parameters are set to scale s = 100 and weight factors ωshape = 0.05, ωtexture = 0.95, ωcmpct = 0.5. It can be seen from Fig. 7 that the algorithm proposed in this article divided the five different texture types into five independent and complete regions. In contrast, the eCognition software failed to obtain complete texture areas at either scale, and there is serious mis-segmentation at the boundaries of the texture areas.

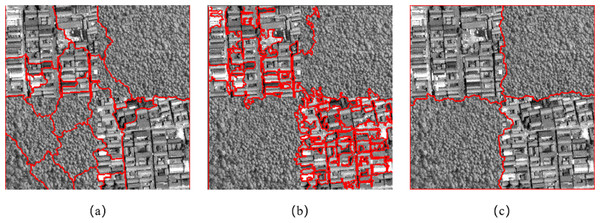

Figure 8 shows the segmentation results for the remote sensing texture synthetic image. Figures 8A and 8B are the segmentation results of eCognition software at different scales. The parameters in Fig. 8A are set to scale s = 50 and weight factors ωshape = 0.8, ωcmpct = 0.5. The parameters in Fig. 8B are set to scale s = 80 and weight factors ωshape = 0.2, ωcmpct = 0.5. Figure 8C is the segmentation result of the algorithm proposed in this article, with scale s = 50 and weight factors ωshape = 0.05, ωtexture = 0.95, ωcmpct = 0.7. As Fig. 8 shows, the proposed algorithm better distinguishes the two remote sensing textures, architectural area and woodland, and divided the image into four independent and complete areas. In contrast, the eCognition software failed to obtain complete texture areas at either scale, and there is obvious mis-segmentation at the boundaries of the texture areas.

Figure 6: The image data selected in Experiment 1.

(A) Experiment 1a: different texture composite images from the Brodatz standard texture library. (B) Experiment 1b: different texture composite images from the IKONOS satellite remote sensing image. (C) Experiment 1c: the remote sensing image of the ZY-3 satellite in Wuhan area with a spatial resolution of 2.1 m.

Figure 7: Comparison of segmentation results in Experiment 1a.

(A) The segmentation result of eCognition software at fine scale. (B) The segmentation result of eCognition software at coarse scale. (C) The segmentation result of the algorithm proposed in this article.

Figure 8: Comparison of segmentation results in Experiment 1b.

(A) The segmentation result of eCognition software at fine scale. (B) The segmentation result of eCognition software at coarse scale. (C) The segmentation result of the algorithm proposed in this article.

Figure 9 shows the segmentation results for the ZY-3 (Resource No. 3) image of the Wuhan area. Figures 9A and 9B show the segmentation results of eCognition software at different scales. The parameters of Fig. 9A are set to scale s = 61 and weight factors ωshape = 0.7, ωcmpct = 0.0. The parameters of Fig. 9B are set to scale s = 80 and weight factors ωshape = 0.3, ωcmpct = 0.5. Figure 9C is the segmentation result of the algorithm proposed in this article, with scale s = 150 and weight factors ωshape = 0.1, ωtexture = 0.8, ωcmpct = 0.3. As Fig. 9 shows, the proposed algorithm better distinguishes the building area in the center of the image. The cultivated land on the upper, lower, left and right sides of the building area is divided into four complete areas, and the building area is divided into two areas.

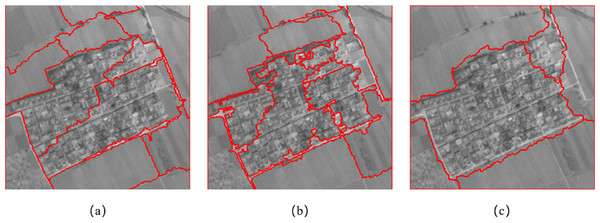

Figure 9: Comparison of segmentation results in Experiment 1c.

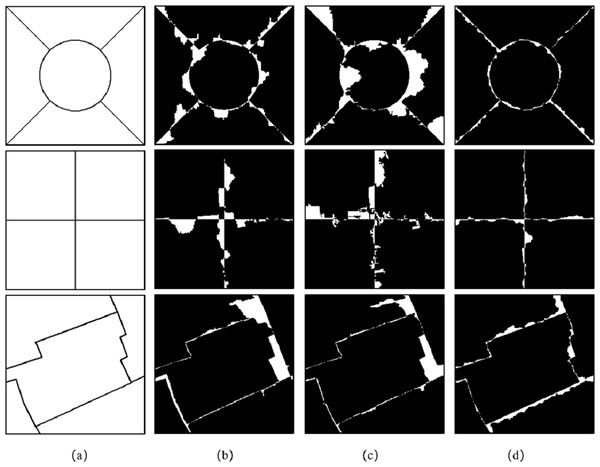

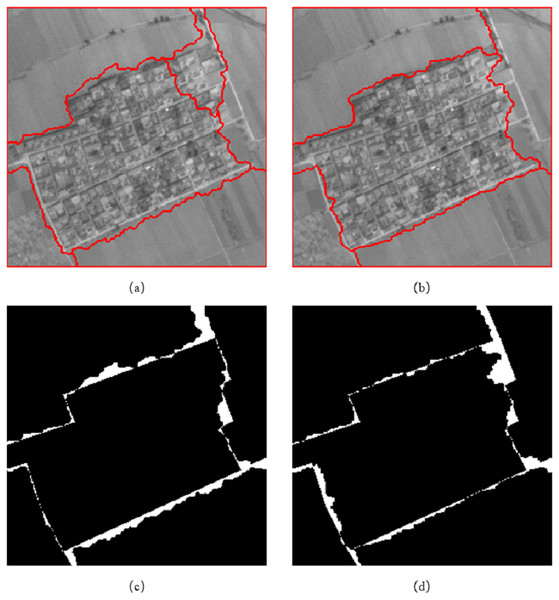

(A) The segmentation result of eCognition software at fine scale. (B) The segmentation result of eCognition software at coarse scale. (C) The segmentation result of the algorithm proposed in this article.We adopted the method of supervision and evaluation, based on the error of the number of pixels, and evaluated the accuracy of the image segmentation results through two indicators: the MR and the RR. In Fig. 10, column (a) is the reference segmentation results of the three sets of experimental data, columns (b) and (c) are the mis-segmented diagrams of the eCognition software segmentation results of the three sets of experimental data. Column (d) is the mis-segmented map of the segmentation result of the algorithm proposed in this article, in which the white part is the mis-segmented pixel.

Figure 10: Reference segmentation results and mis-segmented diagrams of Experiment 1.
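The two indicators can be illustrated with a minimal sketch on label maps. The exact definitions of MR and RR are given earlier in the article and are not reproduced here, so this sketch rests on two assumptions: RR is the number of segmented regions divided by the number of reference regions, and a pixel counts as mis-segmented when its segment's best-matching reference region (by overlap) differs from the pixel's own reference region. The function names and the toy arrays are hypothetical.

```python
from collections import Counter


def regions_ratio(segmentation, reference):
    """Number of segmented regions divided by number of reference regions."""
    seg_labels = {label for row in segmentation for label in row}
    ref_labels = {label for row in reference for label in row}
    return len(seg_labels) / len(ref_labels)


def mis_segment_ratio(segmentation, reference):
    """Percentage of pixels that disagree with the reference.

    Assumption: each segment is matched to the reference region it overlaps
    most; pixels of that segment lying in any other reference region are
    counted as mis-segmented.
    """
    overlap = {}  # segment label -> Counter of reference labels it covers
    total = 0
    for seg_row, ref_row in zip(segmentation, reference):
        for seg_label, ref_label in zip(seg_row, ref_row):
            overlap.setdefault(seg_label, Counter())[ref_label] += 1
            total += 1
    correct = sum(max(counts.values()) for counts in overlap.values())
    return 100.0 * (total - correct) / total


# Toy example: two reference regions, three segments, one misplaced pixel.
reference = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
segmentation = [
    [7, 7, 7, 8],
    [7, 7, 8, 8],
    [9, 9, 8, 8],
]
mr = mis_segment_ratio(segmentation, reference)
rr = regions_ratio(segmentation, reference)
print(f"MR = {mr:.2f}%, RR = {rr:.2f}")
```

Under these assumptions, an RR above 1 signals over-segmentation, and an MR of 0 is reached only when every segment lies entirely inside one reference region.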

Table 1 evaluates the segmentation results of Experiment 1. It shows that, for all three groups of experiments, the MR of the proposed algorithm is lower than the MR obtained with the eCognition software at either scale, and the RR of the proposed algorithm is closer to 1.

| Image | eCognition (fine scale): mis-segment ratio (%) | eCognition (fine scale): regions ratio | eCognition (fine scale): scale | eCognition (coarse scale): mis-segment ratio (%) | eCognition (coarse scale): regions ratio | eCognition (coarse scale): scale | Algorithm of article: mis-segment ratio (%) | Algorithm of article: regions ratio | Algorithm of article: scale |
|---|---|---|---|---|---|---|---|---|---|
| Brodatz texture composite image | 8.36 | 10.2 | 30 | 14.35 | 2.4 | 70 | 3.23 | 1 | 100 |
| Remote sensing texture synthesis image | 5.65 | 6 | 50 | 6.47 | 8.75 | 80 | 2.48 | 1 | 50 |
| Resource No. 3 image | 7.41 | 2.8 | 60 | 5.84 | 3 | 80 | 4.68 | 1.2 | 150 |
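The regions ratios in Table 1 can be turned back into approximate region counts with a little arithmetic. The sketch below assumes that RR is the number of segmented regions divided by the number of reference regions, and it infers the reference counts from the text (five regions for the Brodatz composite, four for the remote sensing composite, and five for the Resource No. 3 image, the last being an inference from the six regions reported at RR = 1.2). The variable and function names are hypothetical.

```python
# (MR %, RR) per method, transcribed from Table 1 in the order:
# eCognition fine scale, eCognition coarse scale, algorithm of the article.
table1 = {
    "Brodatz texture composite image": [(8.36, 10.2), (14.35, 2.4), (3.23, 1.0)],
    "Remote sensing texture synthesis image": [(5.65, 6.0), (6.47, 8.75), (2.48, 1.0)],
    "Resource No. 3 image": [(7.41, 2.8), (5.84, 3.0), (4.68, 1.2)],
}

# Assumed reference region counts, inferred from the text (not from Table 1).
reference_regions = {
    "Brodatz texture composite image": 5,
    "Remote sensing texture synthesis image": 4,
    "Resource No. 3 image": 5,
}


def region_count(rr, n_reference):
    """Approximate number of segmented regions implied by a regions ratio."""
    return round(rr * n_reference)


def mr_reduction(row):
    """MR gap (percentage points) between the better eCognition run and the article's algorithm."""
    (mr_fine, _), (mr_coarse, _), (mr_proposed, _) = row
    return min(mr_fine, mr_coarse) - mr_proposed


counts_by_image = {
    name: [region_count(rr, reference_regions[name]) for _, rr in row]
    for name, row in table1.items()
}
for name, row in table1.items():
    print(name, counts_by_image[name], round(mr_reduction(row), 2))
```

Read this way, the eCognition runs produce between roughly two and ten times as many regions as the reference, while the algorithm of the article stays at or near the reference count.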

In all three sets of experiments, the eCognition software shows different degrees of mis-segmentation at the boundaries of the image texture areas. In the later experiments, for the more complex texture of the building area, the eCognition software over-segments at both scales.

In summary, regions with rich texture information show high spectral heterogeneity, and the traditional object-based MSS algorithm does not fully consider the texture information of the features in the image. As a result, it tends to over-segment images with rich texture information.

Experiment 2

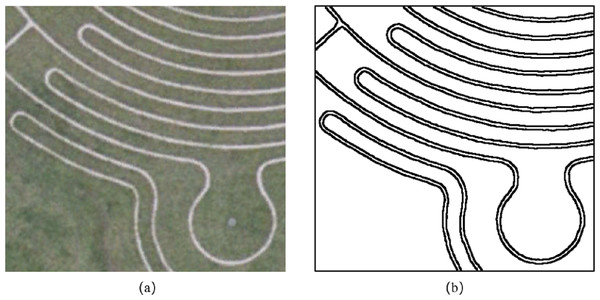

When segmenting images with less texture information and rich edge information, we increased the weight of the edge intensity and reduced the weight of the texture information. We selected a satellite remote sensing image of Milton Keynes, UK, acquired in 2005, as shown in Fig. 11A. The area covered by the image includes the corner of a plant maze, and there are two dominant types of features, grass and roads. Through visual interpretation, we manually segmented the image and extracted eight roads and 10 grasslands separated by roads, totaling 18 areas. The reference segmentation result is shown in Fig. 11B. As in Experiment 1, we chose the MSS algorithm in eCognition software for comparison.

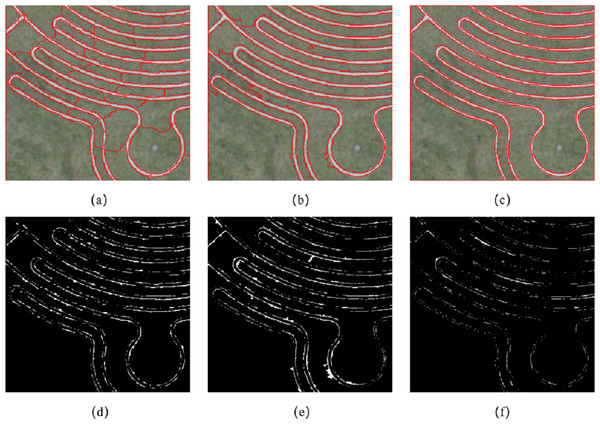

Figure 12 shows the segmentation results of this experiment. Figures 12A and 12B are the segmentation results obtained with eCognition. The parameters of Fig. 12A are s = 70, ωshape = 0.8, ωcmpct = 0.5, while the parameters of Fig. 12B are s = 150, ωshape = 0.1, ωcmpct = 0.5. Figure 12C is the segmentation result of this article’s algorithm, with parameters s = 150, ωcolor = 0.5, ωedge = 0.5. Figures 12D, 12E and 12F show the mis-segmented pixels in the results of eCognition and of our algorithm.

Figure 11: Experiment 2 image and reference segmentation results.

Figure 12: Experiment 2 image segmentation result comparison.

It can be seen from Fig. 12 that the algorithm proposed in the article divides the eight roads and 10 grasslands in the image into independent and complete objects. For the segmentation results of the eCognition software, when the shape weighting factor is large, the software segmented the road and grass in the image into several segments, failing to segment the complete objects, as shown in Fig. 12A. When the shape weighting factor is small, because the algorithm’s constraints on the shape feature are weakened, the road objects in the segmentation result have some burrs at different positions, as shown in Fig. 12B. The proposed algorithm made full use of the edge feature in the remote sensing image, and the location of the edge feature is more accurate.
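To make the role of the edge feature more concrete, the following sketch measures how strong the image edge is along the boundary shared by two neighbouring objects. It is only an illustration: the article describes edge intensity with the Canny operator and defines its own edge merging cost criterion, neither of which is reproduced here. This sketch substitutes a plain Sobel gradient magnitude (one stage of Canny) and a simple mean over boundary pixel pairs. The function names and the toy image are hypothetical.

```python
import math


def sobel_magnitude(image):
    """Sobel gradient magnitude with replicated (clamped) borders."""
    h, w = len(image), len(image[0])

    def px(y, x):
        # Clamp coordinates so border pixels reuse their nearest neighbour.
        return image[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    mag = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = (px(y - 1, x + 1) + 2 * px(y, x + 1) + px(y + 1, x + 1)
                  - px(y - 1, x - 1) - 2 * px(y, x - 1) - px(y + 1, x - 1))
            gy = (px(y + 1, x - 1) + 2 * px(y + 1, x) + px(y + 1, x + 1)
                  - px(y - 1, x - 1) - 2 * px(y - 1, x) - px(y - 1, x + 1))
            mag[y][x] = math.hypot(gx, gy)
    return mag


def boundary_edge_strength(labels, magnitude, a, b):
    """Mean gradient magnitude over 4-neighbour pixel pairs straddling regions a and b."""
    values = []
    h, w = len(labels), len(labels[0])
    for y in range(h):
        for x in range(w):
            for ny, nx in ((y, x + 1), (y + 1, x)):
                if ny < h and nx < w and {labels[y][x], labels[ny][nx]} == {a, b}:
                    values.append(max(magnitude[y][x], magnitude[ny][nx]))
    return sum(values) / len(values) if values else 0.0


# Toy image: a dark area (regions 1 and 2) next to a bright area (region 3).
image = [[10] * 5 + [200] * 3 for _ in range(5)]
labels = [[1, 1, 2, 2, 2, 3, 3, 3] for _ in range(5)]
magnitude = sobel_magnitude(image)
weak = boundary_edge_strength(labels, magnitude, 1, 2)    # no real edge here
strong = boundary_edge_strength(labels, magnitude, 2, 3)  # dark/bright edge
print(weak, strong)
```

In a region-merging scheme of this kind, a pair such as regions 1 and 2, whose shared boundary carries no edge response, would be a cheap merge, whereas merging across the strong boundary between regions 2 and 3 would be penalized.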

Based on pixel-count error, we evaluated the accuracy of the image segmentation results through two indicators: the MR and the RR. Table 2 shows the segmentation accuracy of the different algorithms on the Experiment 2 image. The RR of the eCognition software results is greater than 1 at both scales, which indicates different degrees of over-segmentation. At scale s = 150, the RR of the eCognition result is closer to 1, but because the shape constraint is weak, the edges are located inaccurately, and the MR is slightly higher than at scale s = 70. The proposed algorithm divides the regions with similar features into independent and complete objects: its RR is equal to 1, and its MR is lower than that of the eCognition software at both scales.

| Metric | eCognition algorithm (fine scale) | eCognition algorithm (coarse scale) | Proposed method |
|---|---|---|---|
| Mis-segment ratio (%) | 4.22 | 4.42 | 1.44 |
| Regions ratio | 3.06 | 1.22 | 1 |

Experiment 3

When segmenting images with rich texture information and edge information, we adaptively adjust the weights of textures and edges.

Figure 13A is the segmentation result of the algorithm proposed in Experiment 1, and Fig. 13B is the result of the object-based segmentation algorithm based on the edge merging cost criterion proposed in this section. The parameters in Fig. 13A are set to scale s = 150 and weight factors ωshape = 0.1, ωcmpct = 0.3, ωtexture = 0.8. The parameters in Fig. 13B are set to scale s = 150 and weight factors ωshape = 0.1, ωcmpct = 0.3, ωtexture = 0.7, ωedge = 0.2. Figures 13C and 13D are the corresponding mis-segmentation diagrams.
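The way such weight settings could enter a merging decision can be sketched as follows. This is not the article's formulation, whose heterogeneity and edge merging cost criteria are defined in earlier sections. The sketch assumes a plain weighted sum of per-feature costs, assumes that the feature weights sum to 1 so that the spectral (colour) weight is the remainder, and borrows the usual multiresolution-segmentation convention of comparing the cost with the squared scale parameter. The class, the function names and the cost values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Weights:
    shape: float = 0.0
    texture: float = 0.0
    edge: float = 0.0

    @property
    def color(self) -> float:
        # Assumption: the feature weights sum to 1, so the spectral (colour)
        # weight is whatever remains. Rounded to hide floating-point noise.
        return round(1.0 - self.shape - self.texture - self.edge, 10)


def merge_score(w, h_color, h_shape, h_texture, h_edge):
    """Weighted sum of per-feature merging costs (assumed form)."""
    return (w.color * h_color + w.shape * h_shape
            + w.texture * h_texture + w.edge * h_edge)


def should_merge(score, scale):
    # Assumption: neighbouring objects merge while the cost is below scale**2.
    return score < scale ** 2


# Weight settings quoted for Fig. 13A and Fig. 13B (scale s = 150 in both).
fig13a = Weights(shape=0.1, texture=0.8)
fig13b = Weights(shape=0.1, texture=0.7, edge=0.2)

# Purely illustrative cost values for one candidate pair of objects.
costs = dict(h_color=900.0, h_shape=400.0, h_texture=12000.0, h_edge=30000.0)
score_b = merge_score(fig13b, **costs)
print(fig13a.color, fig13b.color, score_b, should_merge(score_b, 150))
```

Under the sum-to-1 assumption, the settings quoted for Fig. 13B leave no weight for the spectral term, so in this sketch the decision is driven by shape, texture and edge costs alone.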

Figure 13: Comparison of the segmentation results for the ZY-3 (Resource No. 3) image.

Figure 13 shows that after the algorithm incorporates the edge feature, the boundaries of the texture area are located noticeably more accurately: the upper and lower boundaries of the building area are found more precisely, and the building area is segmented as one independent and complete region. Table 3 shows the segmentation accuracy of the two algorithms. The algorithm based on the edge merging cost criterion has a lower MR than the object-based segmentation algorithm combined with the texture feature alone, and its RR is equal to 1.

| Metric | Method combined with texture features | Method combined with texture features and edge merging cost criterion |
|---|---|---|
| Mis-segment ratio (%) | 4.68 | 4.48 |
| Regions ratio | 1.2 | 1 |

Through three sets of experiments, the article supports the effectiveness of the object-based MSS algorithm based on the edge merging cost criterion. While using the spectral, shape, and texture features of remote sensing images, the algorithm accounts for the edge feature of the image. The segmentation results can accurately locate the boundaries of the features, and its segmentation accuracy is better than traditional algorithms.

Conclusions

This article takes the MSS as an example, focusing on the framework and process of object-based MSS algorithms. Because the texture and edge features of ground objects in remote sensing images are rarely used in traditional methods, this article proposes the following specific improvements:

We proposed a remote sensing image texture feature description method based on time-frequency analysis and used it to construct a measure of texture heterogeneity. On this basis, we proposed an object-based MSS algorithm that incorporates the texture feature. Experiments demonstrated that the algorithm can better distinguish different textures in remote sensing images, and it has a better segmentation effect on images with rich texture information.

We used the Canny operator to describe the edge intensity of remote sensing images, and proposed an edge merging cost criterion. Experiments demonstrated that the algorithm can locate the boundaries of features in remote sensing images more accurately, and it has a better segmentation effect on remote sensing images with rich texture information.

With the algorithm proposed in this article, a more complete segmentation object can be obtained when segmenting features with rich texture information and slender shapes, and over-segmentation does not occur easily, which is more conducive to the follow-up processing and analysis of remote sensing images. The proposed object-based MSS algorithm can effectively obtain more complete ground objects, thereby solving the problem of determining objects in the visual attention model. The research results can be widely used in object-based building extraction from high-resolution remote sensing images. A limitation of this study is that we used a traditional segmentation algorithm rather than the more popular deep learning methods, so how to combine the approach with deep learning is a direction for future research.

Supplemental Information

Code of the object-based multiscale segmentation Method incorporating texture and edge features

The program is written in C++ and uses a graphical user interface to read, process and display the experimental images automatically. The parameters can be set from the parameter dialog box.

Data of the object-based multiscale segmentation Experiments incorporating texture and edge features

The input data, process data and result data. “Figure6a.bmp” is the raw data for Figure 6A. “Figure6b.bmp” is the raw data for Figure 6B. “Figure6c.bmp” is the raw data for Figure 6C. “Figure11.bmp” is the raw data for Figure 11.